Xianglin Yang

Enhancing Model Defense Against Jailbreaks with Proactive Safety Reasoning

Jan 31, 2025

Abstract: Large language models (LLMs) are vital for a wide range of applications yet remain susceptible to jailbreak threats, which could lead to the generation of inappropriate responses. Conventional defenses, such as refusal and adversarial training, often fail to cover corner cases or rare domains, leaving LLMs vulnerable to more sophisticated attacks. We propose a novel defense strategy, Safety Chain-of-Thought (SCoT), which harnesses the enhanced reasoning capabilities of LLMs to proactively assess harmful inputs, rather than simply blocking them. SCoT augments any refusal training dataset so that the model critically analyzes the intent behind each request before generating an answer. By employing proactive reasoning, SCoT enhances the generalization of LLMs across varied harmful queries and scenarios not covered in the safety alignment corpus. Additionally, it generates detailed refusals specifying the rules violated. Comparative evaluations show that SCoT significantly surpasses existing defenses, reducing vulnerability to out-of-distribution issues and adversarial manipulations while maintaining strong general capabilities.

Exploring the Evolution of Hidden Activations with Live-Update Visualization

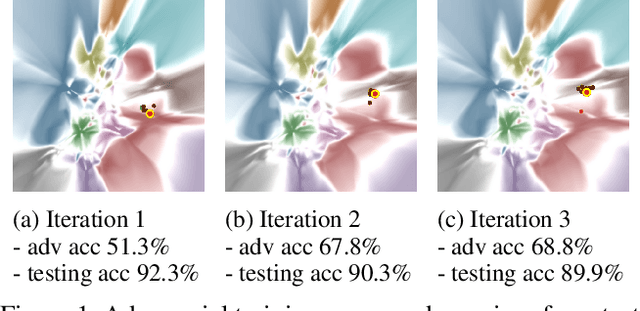

May 24, 2024

Abstract: Monitoring the training of neural networks is essential for identifying potential data anomalies, enabling timely interventions and conserving significant computational resources. Apart from commonly used metrics such as losses and validation accuracies, the hidden representations could give more insight into the model's progression. To this end, we introduce SentryCam, an automated, real-time visualization tool that reveals the progression of hidden representations during training. Our results show that, over various datasets, this visualization offers a more comprehensive view of the learning dynamics than basic metrics such as loss and accuracy. Furthermore, we show that SentryCam could facilitate detailed analyses, such as of task transfer and catastrophic forgetting, in a continual learning setting. The code is available at https://github.com/xianglinyang/SentryCam.

DeepVisualInsight: Time-Travelling Visualization for Spatio-Temporal Causality of Deep Classification Training

Dec 31, 2021

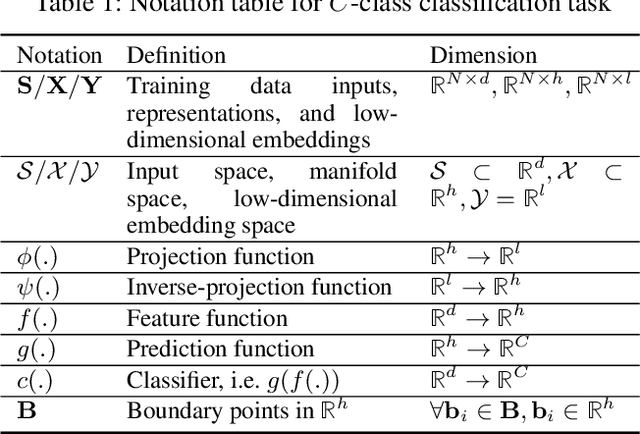

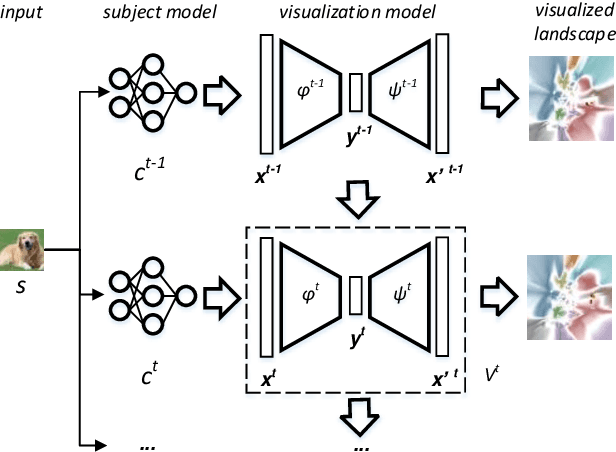

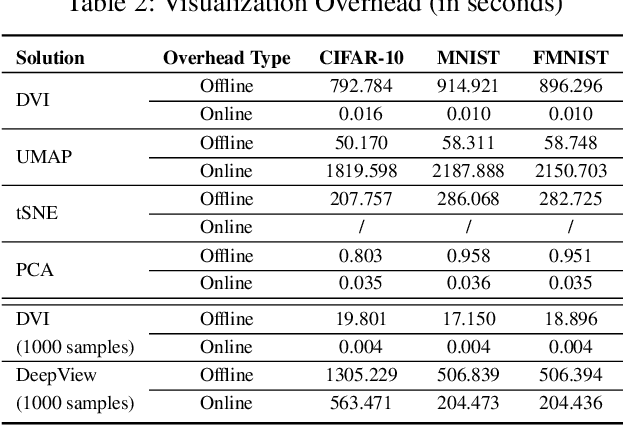

Abstract: Understanding how the predictions of deep learning models are formed during the training process is crucial for improving model performance and fixing model defects, especially when we need to investigate nontrivial training strategies such as active learning, or track the root cause of unexpected training results such as performance degeneration. In this work, we propose a time-travelling visual solution, DeepVisualInsight (DVI), which aims to manifest the spatio-temporal causality while training a deep learning image classifier. The spatio-temporal causality demonstrates how the gradient-descent algorithm and various training data sampling techniques can influence and reshape the layout of the learnt input representation and the classification boundaries in consecutive epochs. Such causality allows us to observe and analyze the whole learning process in the visible low-dimensional space. Technically, we propose four spatial and temporal properties and design our visualization solution to satisfy them. These properties preserve the most important information when (inverse-)projecting input samples between the visible low-dimensional space and the invisible high-dimensional space, for causal analyses. Our extensive experiments show that, compared to baseline approaches, we achieve the best visualization performance regarding the spatial/temporal properties and visualization efficiency. Moreover, our case study shows that our visual solution can well reflect the characteristics of various training scenarios, showing the good potential of DVI as a debugging tool for analyzing deep learning training processes.
