Xian Chen

A Survey of Machine Learning Models and Datasets for the Multi-label Classification of Textual Hate Speech in English

Apr 11, 2025

Abstract: The dissemination of online hate speech can have serious negative consequences for individuals, online communities, and entire societies. This and the large volume of hateful online content prompted both practitioners', i.e., in content moderation or law enforcement, and researchers' interest in machine learning models to automatically classify instances of hate speech. Whereas most scientific works address hate speech classification as a binary task, practice often requires a differentiation into sub-types, e.g., according to target, severity, or legality, which may overlap for individual content. Hence, researchers created datasets and machine learning models that approach hate speech classification in textual data as a multi-label problem. This work presents the first systematic and comprehensive survey of scientific literature on this emerging research landscape in English (N=46). We contribute with a concise overview of 28 datasets suited for training multi-label classification models that reveals significant heterogeneity regarding label-set, size, meta-concept, annotation process, and inter-annotator agreement. Our analysis of 24 publications proposing suitable classification models further establishes inconsistency in evaluation and a preference for architectures based on Bidirectional Encoder Representations from Transformers (BERT) and Recurrent Neural Networks (RNNs). We identify imbalanced training data, reliance on crowdsourcing platforms, small and sparse datasets, and missing methodological alignment as critical open issues and formulate ten recommendations for research.

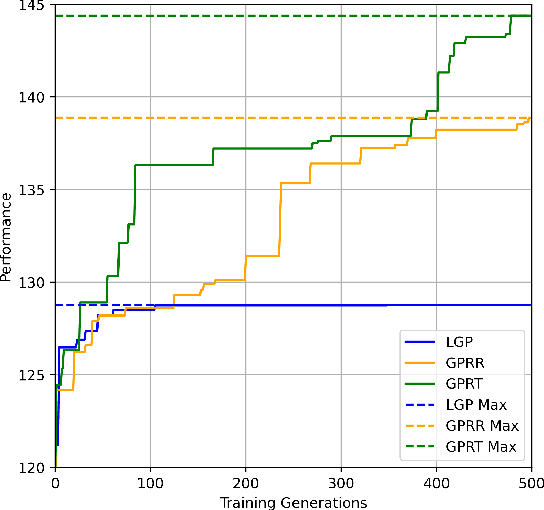

Genetic Programming with Reinforcement Learning Trained Transformer for Real-World Dynamic Scheduling Problems

Apr 10, 2025

Abstract: Dynamic scheduling in real-world environments often struggles to adapt to unforeseen disruptions, making traditional static scheduling methods and human-designed heuristics inadequate. This paper introduces an innovative approach that combines Genetic Programming (GP) with a Transformer trained through Reinforcement Learning (GPRT), specifically designed to tackle the complexities of dynamic scheduling scenarios. GPRT leverages the Transformer to refine heuristics generated by GP while also seeding and guiding the evolution of GP. This dual functionality enhances the adaptability and effectiveness of the scheduling heuristics, enabling them to better respond to the dynamic nature of real-world tasks. The efficacy of this integrated approach is demonstrated through a practical application in container terminal truck scheduling, where the GPRT method outperforms traditional GP, standalone Transformer methods, and other state-of-the-art competitors. The key contribution of this research is the development of the GPRT method, which showcases a novel combination of GP and Reinforcement Learning (RL) to produce robust and efficient scheduling solutions. Importantly, GPRT is not limited to container port truck scheduling; it offers a versatile framework applicable to various dynamic scheduling challenges. Its practicality, coupled with its interpretability and ease of modification, makes it a valuable tool for diverse real-world scenarios.

External Large Foundation Model: How to Efficiently Serve Trillions of Parameters for Online Ads Recommendation

Feb 26, 2025

Abstract: Ads recommendation is a prominent service of online advertising systems and has been actively studied. Recent studies indicate that scaling-up and advanced design of the recommendation model can bring significant performance improvement. However, with a larger model scale, such prior studies have a significantly increasing gap from industry as they often neglect two fundamental challenges in industrial-scale applications. First, training and inference budgets are restricted for the model to be served, exceeding which may incur latency and impair user experience. Second, large-volume data arrive in a streaming mode with data distributions dynamically shifting, as new users/ads join and existing users/ads leave the system. We propose the External Large Foundation Model (ExFM) framework to address the overlooked challenges. Specifically, we develop external distillation and a data augmentation system (DAS) to control the computational cost of training/inference while maintaining high performance. We design the teacher in a way like a foundation model (FM) that can serve multiple students as vertical models (VMs) to amortize its building cost. We propose Auxiliary Head and Student Adapter to mitigate the data distribution gap between FM and VMs caused by the streaming data issue. Comprehensive experiments on internal industrial-scale applications and public datasets demonstrate significant performance gain by ExFM.
