Xavier Lagorce

A Linear N-Point Solver for Structure and Motion from Asynchronous Tracks

Jul 30, 2025

Abstract: Structure and continuous motion estimation from point correspondences is a fundamental problem in computer vision that has been powered by well-known algorithms such as the familiar 5-point or 8-point algorithm. However, despite their acclaim, these algorithms are limited to processing point correspondences originating from a pair of views, each one representing an instantaneous capture of the scene. Yet, in the case of rolling shutter cameras, or more recently, event cameras, this synchronization breaks down. In this work, we present a unified approach for structure and linear motion estimation from 2D point correspondences with arbitrary timestamps, from an arbitrary set of views. By formulating the problem in terms of first-order dynamics and leveraging a constant velocity motion model, we derive a novel, linear point incidence relation allowing for the efficient recovery of both linear velocity and 3D points with predictable degeneracies and solution multiplicities. Owing to its general formulation, it can handle correspondences from a wide range of sensing modalities such as global shutter, rolling shutter, and event cameras, and can even combine correspondences from different collocated sensors. We validate the effectiveness of our solver on both simulated and real-world data, where we show consistent improvement across all modalities when compared to recent approaches. We believe our work opens the door to efficient structure and motion estimation from asynchronous data. Code can be found at https://github.com/suhang99/AsyncTrack-Motion-Solver.

GS-EVT: Cross-Modal Event Camera Tracking based on Gaussian Splatting

Sep 28, 2024

Abstract: Reliable self-localization is a foundational skill for many intelligent mobile platforms. This paper explores the use of event cameras for motion tracking, thereby providing a solution with inherent robustness under difficult dynamics and illumination. In order to circumvent the challenge of event camera-based mapping, the solution is framed in a cross-modal way. It tracks a map representation that comes directly from frame-based cameras. Specifically, the proposed method operates on top of Gaussian splatting, a state-of-the-art representation that permits highly efficient and realistic novel view synthesis. The key to our approach is a novel pose parametrization that uses a reference pose plus first-order dynamics for local differential image rendering. The latter is then compared against images of integrated events in a staggered coarse-to-fine optimization scheme. As demonstrated by our results, the realistic view rendering ability of Gaussian splatting leads to stable and accurate tracking across a variety of both publicly available and newly recorded data sequences.

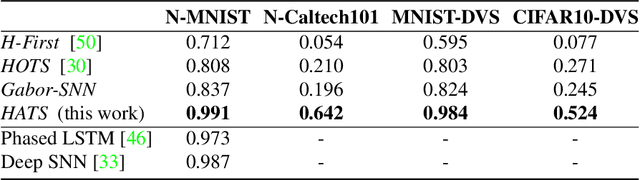

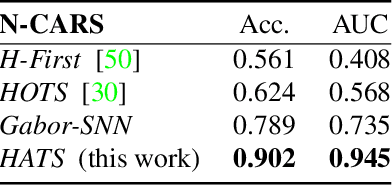

HATS: Histograms of Averaged Time Surfaces for Robust Event-based Object Classification

Mar 21, 2018

Abstract: Event-based cameras have recently drawn the attention of the Computer Vision community thanks to their advantages in terms of high temporal resolution, low power consumption and high dynamic range, compared to traditional frame-based cameras. These properties make event-based cameras an ideal choice for autonomous vehicles, robot navigation or UAV vision, among others. However, the accuracy of event-based object classification algorithms, which is of crucial importance for any reliable system working in real-world conditions, is still far behind their frame-based counterparts. Two main reasons for this performance gap are: 1. The lack of effective low-level representations and architectures for event-based object classification and 2. The absence of large real-world event-based datasets. In this paper we address both problems. First, we introduce a novel event-based feature representation together with a new machine learning architecture. Compared to previous approaches, we use local memory units to efficiently leverage past temporal information and build a robust event-based representation. Second, we release the first large real-world event-based dataset for object classification. We compare our method to the state-of-the-art with extensive experiments, showing better classification performance and real-time computation.

STICK: Spike Time Interval Computational Kernel, A Framework for General Purpose Computation using Neurons, Precise Timing, Delays, and Synchrony

Jul 22, 2015

Abstract: There has been significant research over the past two decades in developing new platforms for spiking neural computation. Current neural computers are primarily developed to mimic biology. They use neural networks which can be trained to perform specific tasks, mainly to solve pattern recognition problems. These machines can do more than simulate biology; they allow us to re-think our current paradigm of computation. The ultimate goal is to develop brain-inspired general purpose computation architectures that can breach the current bottleneck introduced by the Von Neumann architecture. This work proposes a new framework for such a machine. We show that the use of neuron-like units with precise timing representation, synaptic diversity, and temporal delays allows us to set a complete, scalable, compact computation framework. The presented framework provides both linear and non-linear operations, allowing us to represent and solve any function. We show usability in solving real use cases from simple differential equations to sets of non-linear differential equations leading to chaotic attractors.
