Wouter Bulten

Detection of prostate cancer in whole-slide images through end-to-end training with image-level labels

Jun 05, 2020

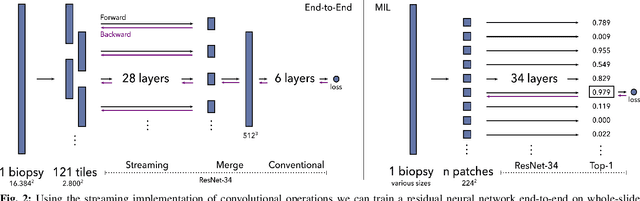

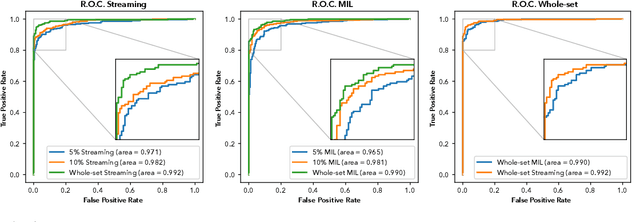

Abstract: Prostate cancer is the most prevalent cancer among men in Western countries, with 1.1 million new diagnoses every year. The gold standard for the diagnosis of prostate cancer is a pathologist's evaluation of prostate tissue. To potentially assist pathologists, deep-learning-based cancer detection systems have been developed. Many of the state-of-the-art models are patch-based convolutional neural networks, as the use of entire scanned slides is hampered by memory limitations on accelerator cards. Patch-based systems typically require detailed, pixel-level annotations for effective training. However, such annotations are seldom readily available, in contrast to the clinical reports of pathologists, which contain slide-level labels. As such, developing algorithms that do not require manual pixel-wise annotations, but can learn using only the clinical report, would be a significant advancement for the field. In this paper, we propose to use a streaming implementation of convolutional layers to train a modern CNN (ResNet-34) with 21 million parameters end-to-end on 4712 prostate biopsies. The method enables the use of entire biopsy images at high resolution directly by reducing the GPU memory requirements by 2.4 TB. We show that modern CNNs, trained using our streaming approach, can extract meaningful features from high-resolution images without additional heuristics, reaching performance similar to state-of-the-art patch-based and multiple-instance learning methods. By circumventing the need for manual annotations, this approach can function as a blueprint for other tasks in histopathological diagnosis. The source code to reproduce the streaming models is available at https://github.com/DIAGNijmegen/pathology-streaming-pipeline.
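The idea of computing a convolution piece by piece, rather than on the whole input at once, can be illustrated with a toy example. The sketch below is not the authors' implementation (that is in the linked repository); it only shows, for the forward pass of a single 1D layer, that tiling the input with a small overlap reproduces the full result. The function names `conv1d_valid` and `conv1d_tiled` and the `tile_size` parameter are hypothetical and chosen for illustration.

```python
def conv1d_valid(signal, kernel):
    """Plain 'valid' sliding-window filter (cross-correlation) over the whole input."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def conv1d_tiled(signal, kernel, tile_size):
    """Same result as conv1d_valid, computed one tile of outputs at a time.

    Only one tile of the input is processed per step, which is the
    memory-saving idea; this toy version is an assumption-laden sketch
    and ignores the backward pass entirely.
    """
    k = len(kernel)
    n_out = len(signal) - k + 1
    output = []
    for start in range(0, n_out, tile_size):
        stop = min(start + tile_size, n_out)
        # Each tile needs k - 1 extra input samples (an overlap, or "halo")
        # so that its last output sees a complete window.
        tile = signal[start:stop + k - 1]
        output.extend(conv1d_valid(tile, kernel))
    return output


signal = list(range(10))
kernel = [1, 0, -1]
full = conv1d_valid(signal, kernel)
tiled = conv1d_tiled(signal, kernel, tile_size=3)
print(full == tiled)
```

The overlap of `k - 1` samples is what makes the tiled outputs match the full computation exactly; without it, outputs near tile borders would be missing.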

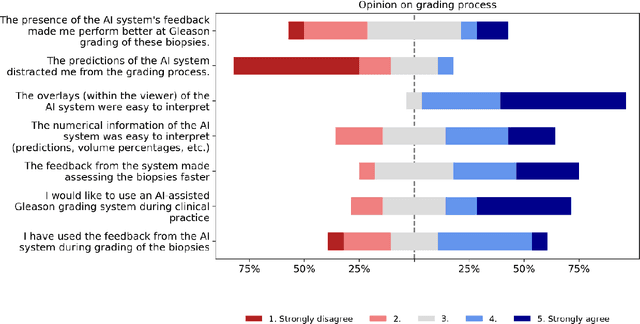

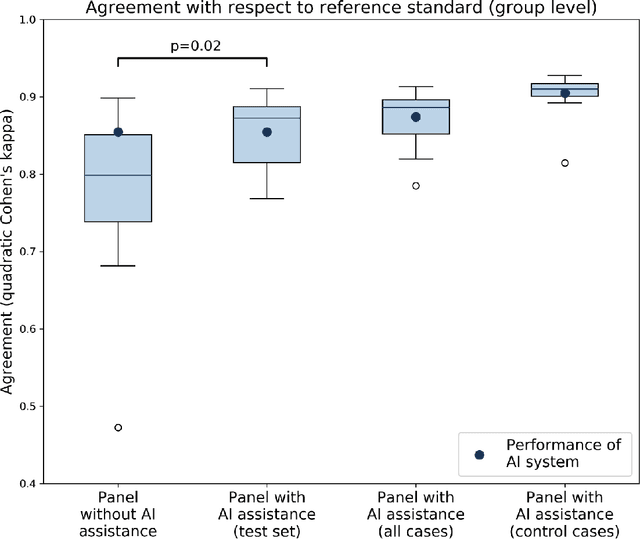

Artificial Intelligence Assistance Significantly Improves Gleason Grading of Prostate Biopsies by Pathologists

Feb 11, 2020

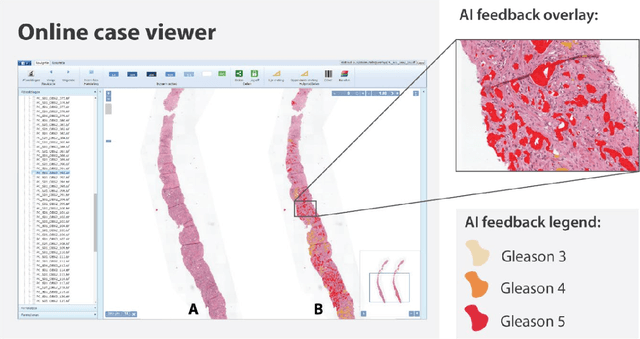

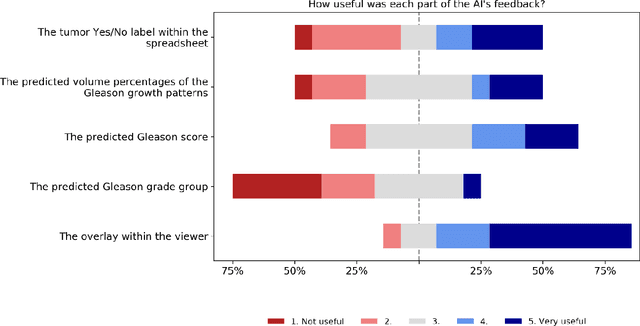

Abstract: While the Gleason score is the most important prognostic marker for prostate cancer patients, it suffers from significant observer variability. Artificial Intelligence (AI) systems, based on deep learning, have proven to achieve pathologist-level performance at Gleason grading. However, the performance of such systems can degrade in the presence of artifacts, foreign tissue, or other anomalies. Pathologists integrating their expertise with feedback from an AI system could result in a synergy that outperforms both the individual pathologist and the system. Despite the hype around AI assistance, existing literature on this topic within the pathology domain is limited. We investigated the value of AI assistance for grading prostate biopsies. A panel of fourteen observers graded 160 biopsies with and without AI assistance. Using AI, the agreement of the panel with an expert reference standard significantly increased (quadratically weighted Cohen's kappa, 0.799 vs 0.872; p=0.018). Our results show the added value of AI systems for Gleason grading, but more importantly, show the benefits of pathologist-AI synergy.
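For readers unfamiliar with the agreement metric reported above, the following is a minimal sketch of quadratically weighted Cohen's kappa for two raters. It assumes ordinal grades encoded as integers `0..n_classes-1`; the function name and the example grades are hypothetical and are not taken from the study.

```python
from collections import Counter


def quadratic_weighted_kappa(rater_a, rater_b, n_classes):
    """Cohen's kappa with quadratic disagreement weights.

    Assumes ordinal labels encoded as integers 0..n_classes-1.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must grade the same non-empty set of cases")
    if n_classes < 2:
        raise ValueError("at least two classes are required")
    n = len(rater_a)
    observed = Counter(zip(rater_a, rater_b))  # joint counts per (grade_a, grade_b)
    hist_a = Counter(rater_a)                  # marginal counts of rater A
    hist_b = Counter(rater_b)                  # marginal counts of rater B
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # Penalty grows with the squared distance between the two grades.
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_observed += weight * observed[(i, j)]
            # Expected count if the raters graded independently.
            weighted_expected += weight * hist_a[i] * hist_b[j] / n
    if weighted_expected == 0.0:
        # Both raters used one and the same grade for every case.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


reference = [0, 1, 2, 3, 4, 5]
print(quadratic_weighted_kappa(reference, reference, n_classes=6))
```

Because the weights are quadratic, a disagreement of two grades is penalized four times as heavily as a disagreement of one grade, which suits ordinal scales such as grading schemes.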

Automated Gleason Grading of Prostate Biopsies using Deep Learning

Jul 18, 2019

Abstract: The Gleason score is the most important prognostic marker for prostate cancer patients but suffers from significant inter-observer variability. We developed a fully automated deep learning system to grade prostate biopsies. The system was developed using 5834 biopsies from 1243 patients. A semi-automatic labeling technique was used to circumvent the need for full manual annotation by pathologists. The developed system achieved a high agreement with the reference standard. In a separate observer experiment, the deep learning system outperformed 10 out of 15 pathologists. The system has the potential to improve prostate cancer prognostics by acting as a first or second reader.

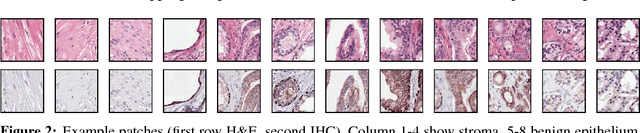

Dealing with Label Scarcity in Computational Pathology: A Use Case in Prostate Cancer Classification

May 16, 2019

Abstract: Large amounts of unlabelled data are commonplace for many applications in computational pathology, whereas labelled data is often expensive, both in time and cost, to acquire. We investigate the performance of unsupervised and supervised deep learning methods when few labelled data are available. Three methods are compared: clustering autoencoder latent vectors (unsupervised), a single-layer classifier combined with a pre-trained autoencoder (semi-supervised), and a supervised CNN. We apply these methods to hematoxylin and eosin (H&E) stained prostatectomy images to classify tumour versus non-tumour tissue. Results show that semi-/unsupervised methods have an advantage over supervised learning when few labels are available. Additionally, we show that incorporating immunohistochemistry (IHC) stained data provides an increase in performance over only using H&E.

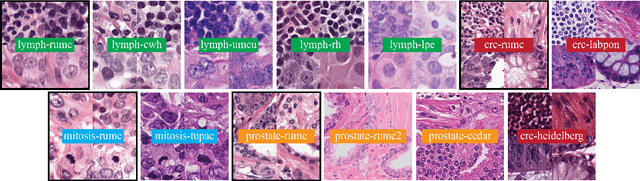

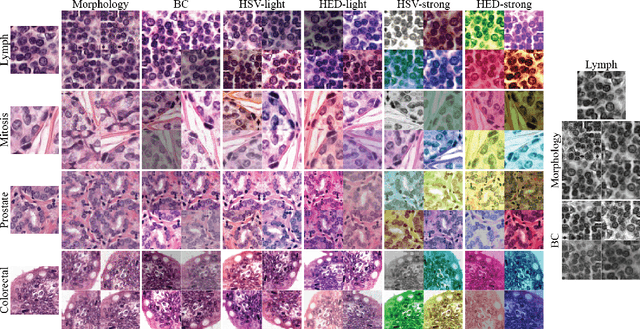

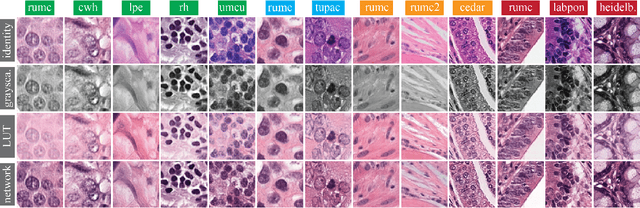

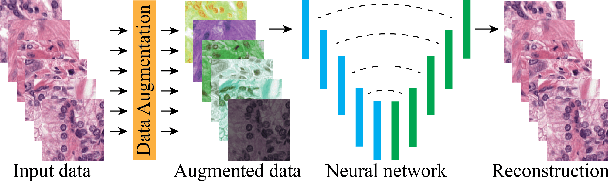

Quantifying the effects of data augmentation and stain color normalization in convolutional neural networks for computational pathology

Feb 18, 2019

Abstract: Stain variation is a phenomenon observed when distinct pathology laboratories stain tissue slides that exhibit similar but not identical color appearance. Due to this color shift between laboratories, convolutional neural networks (CNNs) trained with images from one lab often underperform on unseen images from the other lab. Several techniques have been proposed to reduce the generalization error, mainly grouped into two categories: stain color augmentation and stain color normalization. The former simulates a wide variety of realistic stain variations during training, producing stain-invariant CNNs. The latter aims to match training and test color distributions in order to reduce stain variation. For the first time, we compared some of these techniques and quantified their effect on CNN classification performance using a heterogeneous dataset of hematoxylin and eosin histopathology images from 4 organs and 9 pathology laboratories. Additionally, we propose a novel unsupervised method to perform stain color normalization using a neural network. Based on our experimental results, we provide practical guidelines on how to use stain color augmentation and stain color normalization in future computational pathology applications.

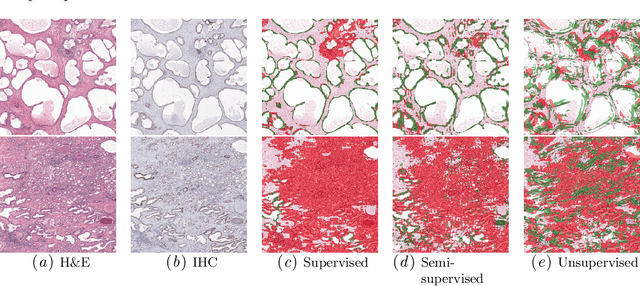

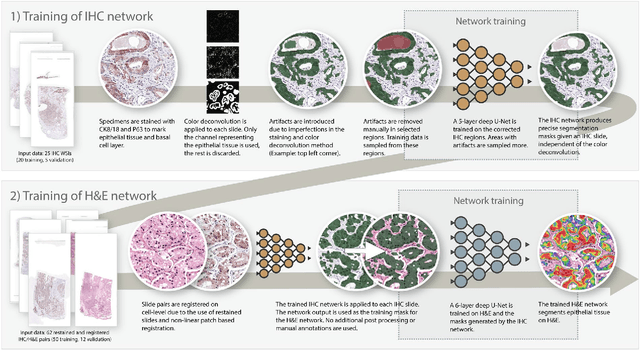

Epithelium segmentation using deep learning in H&E-stained prostate specimens with immunohistochemistry as reference standard

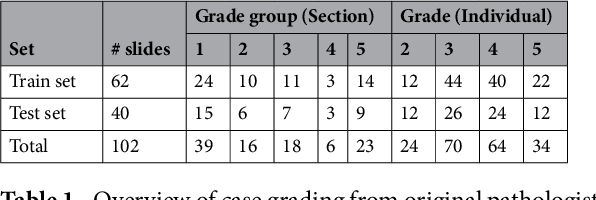

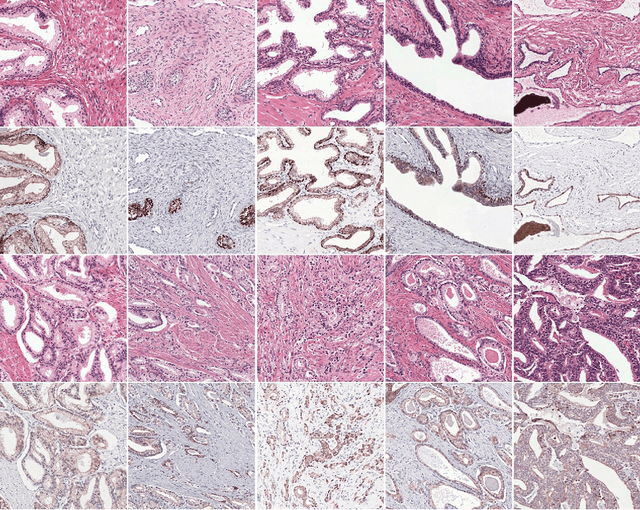

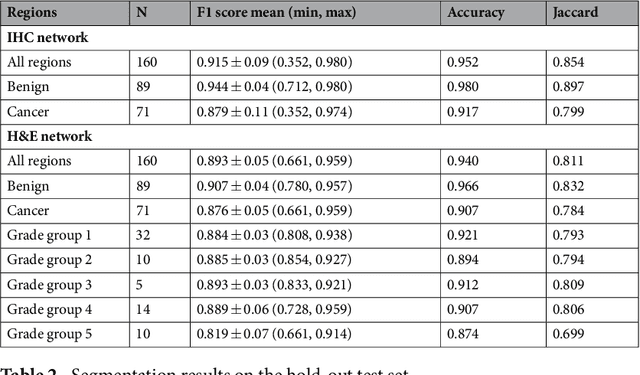

Aug 17, 2018

Abstract: Prostate cancer (PCa) is graded by pathologists by examining the architectural pattern of cancerous epithelial tissue on hematoxylin and eosin (H&E) stained slides. Given the importance of gland morphology, automatically differentiating between glandular epithelial tissue and other tissues is an important prerequisite for the development of automated methods for detecting PCa. We propose a new method, using deep learning, for automatically segmenting epithelial tissue in digitized prostatectomy slides. We employed immunohistochemistry (IHC) to render the ground truth less subjective and more precise compared to manual outlining on H&E slides, especially in areas with high-grade and poorly differentiated PCa. Our dataset consisted of 102 tissue blocks, including both low- and high-grade PCa. From each block a single new section was cut, stained with H&E, scanned, restained using P63 and CK8/18 to highlight the epithelial structure, and scanned again. The H&E slides were co-registered to the IHC slides. On a subset of the IHC slides we applied color deconvolution, corrected stain errors manually, and trained a U-Net to perform segmentation of epithelial structures. Whole-slide segmentation masks generated by the IHC U-Net were used to train a second U-Net on H&E. Our system makes precise cell-level segmentations and segments both intact glands and individual (tumor) epithelial cells. We achieved an F1-score of 0.895 on a hold-out test set and 0.827 on an external reference set from a different center. We envision this segmentation as being the first part of a fully automated prostate cancer detection and grading pipeline.
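As a reminder of how an F1-score is computed for a binary segmentation, here is a minimal sketch over flattened pixel masks. The function name and the tiny example masks are hypothetical; how the authors aggregated pixels or slides is not stated in the abstract and is not assumed here.

```python
def f1_score(predicted, reference):
    """F1-score for two equally long binary masks (1 = epithelium, 0 = other)."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of pixels")
    tp = fp = fn = 0
    for p, r in zip(predicted, reference):
        if p and r:
            tp += 1      # pixel correctly marked as foreground
        elif p and not r:
            fp += 1      # foreground predicted where the reference has none
        elif r and not p:
            fn += 1      # reference foreground that was missed
    denominator = 2 * tp + fp + fn
    # Convention chosen for this sketch: two empty masks count as perfect agreement.
    return 2 * tp / denominator if denominator else 1.0


predicted = [1, 1, 0, 0, 1]
reference = [1, 0, 0, 1, 1]
print(round(f1_score(predicted, reference), 3))
```

For binary masks this quantity is identical to the Dice coefficient, since both equal 2TP / (2TP + FP + FN).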

Unsupervised Prostate Cancer Detection on H&E using Convolutional Adversarial Autoencoders

Apr 19, 2018

Abstract: We propose an unsupervised method using self-clustering convolutional adversarial autoencoders to classify prostate tissue as tumor or non-tumor without any labeled training data. The clustering method is integrated into the training of the autoencoder and requires little post-processing. Our network trains on hematoxylin and eosin (H&E) input patches and we tested two different reconstruction targets, H&E and immunohistochemistry (IHC). We show that antibody-driven feature learning using IHC helps the network to learn relevant features for the clustering task. Our network achieves an F1 score of 0.62 using only a small set of validation labels to assign classes to clusters.
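One simple way to turn unlabeled clusters into classes with a small labeled set is a majority vote per cluster. The abstract does not say which rule the authors used, so the sketch below is an assumption for illustration only; the function name and the example data are hypothetical.

```python
from collections import Counter, defaultdict


def assign_classes_to_clusters(cluster_ids, labels):
    """Map each cluster id to the most frequent label among its labeled samples.

    Majority voting is an assumed rule, not necessarily the one used in the paper.
    """
    if len(cluster_ids) != len(labels):
        raise ValueError("each labeled sample needs exactly one cluster id")
    votes = defaultdict(Counter)
    for cluster, label in zip(cluster_ids, labels):
        votes[cluster][label] += 1
    # most_common(1) returns the top (label, count) pair for that cluster.
    return {cluster: counts.most_common(1)[0][0] for cluster, counts in votes.items()}


# Cluster ids predicted for six validation patches, with their known labels.
cluster_ids = [0, 0, 0, 1, 1, 2]
labels = ["tumor", "tumor", "non-tumor", "non-tumor", "non-tumor", "tumor"]
mapping = assign_classes_to_clusters(cluster_ids, labels)
print(mapping)
```

Once the mapping exists, any new patch can be classified by looking up the class of the cluster it falls into.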
