Christina Hulsbergen-van de Kaa

Artificial Intelligence Assistance Significantly Improves Gleason Grading of Prostate Biopsies by Pathologists

Feb 11, 2020

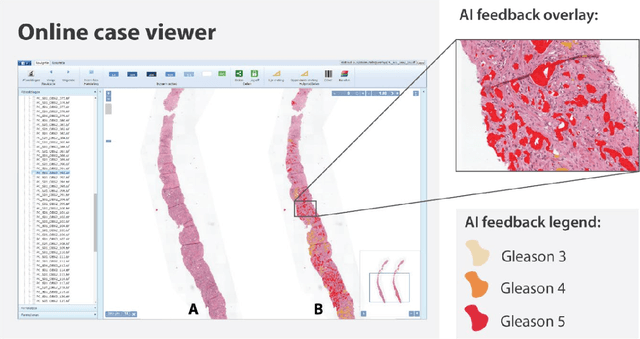

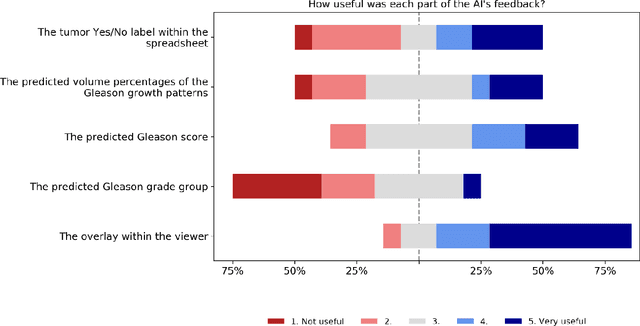

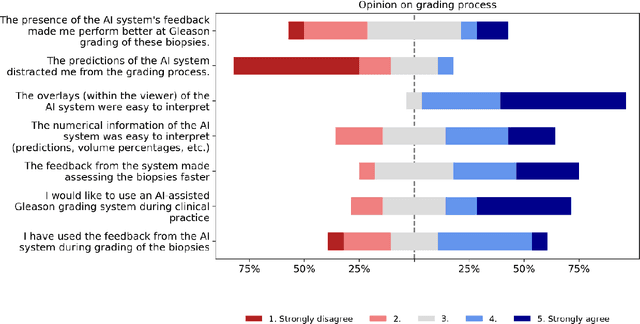

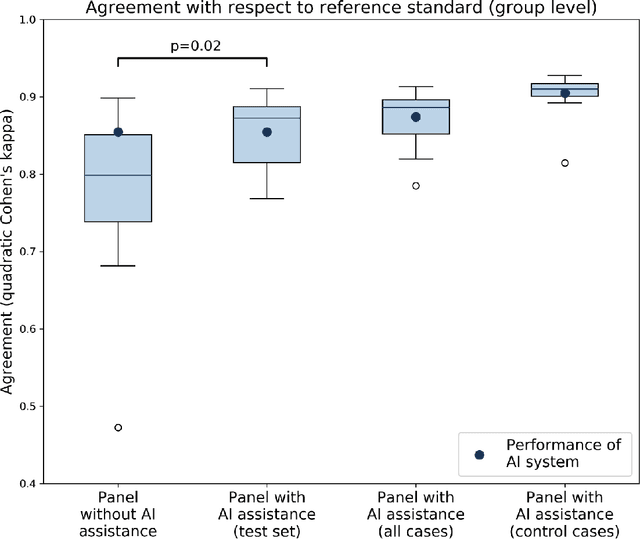

Abstract: While the Gleason score is the most important prognostic marker for prostate cancer patients, it suffers from significant observer variability. Artificial Intelligence (AI) systems, based on deep learning, have proven to achieve pathologist-level performance at Gleason grading. However, the performance of such systems can degrade in the presence of artifacts, foreign tissue, or other anomalies. Pathologists integrating their expertise with feedback from an AI system could result in a synergy that outperforms both the individual pathologist and the system. Despite the hype around AI assistance, existing literature on this topic within the pathology domain is limited. We investigated the value of AI assistance for grading prostate biopsies. A panel of fourteen observers graded 160 biopsies with and without AI assistance. With AI assistance, the agreement of the panel with an expert reference standard significantly increased (quadratically weighted Cohen's kappa, 0.799 vs 0.872; p=0.018). Our results show the added value of AI systems for Gleason grading, but more importantly, show the benefits of pathologist-AI synergy.
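The agreement metric named in this abstract, quadratically weighted Cohen's kappa, can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function name `quadratic_weighted_kappa`, the encoding of grades as integers `0..n_classes-1`, and the example labels are all assumptions made for illustration.

```python
def quadratic_weighted_kappa(rater_a, rater_b, n_classes):
    """Quadratically weighted Cohen's kappa for two lists of integer labels.

    Labels are assumed (for this sketch) to be integers in 0..n_classes-1,
    e.g. ordinal grade categories. Larger disagreements are penalised
    quadratically: w[i][j] = (i - j)^2 / (n_classes - 1)^2.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    if n_classes < 2:
        raise ValueError("need at least two categories")

    n = len(rater_a)

    # Observed confusion matrix between the two raters.
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1

    # Marginal label counts for each rater.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_classes))
              for j in range(n_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count under independence of the two raters.
            weighted_expected += weight * hist_a[i] * hist_b[j] / n

    if weighted_expected == 0:
        # Both raters used one identical category for every case.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical labels: a reference standard and one observer.
reference = [0, 1, 2, 2]
observer = [0, 1, 2, 1]
kappa = quadratic_weighted_kappa(reference, observer, n_classes=3)
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance; because of the quadratic weights, a disagreement of two categories costs four times as much as a disagreement of one.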

Automated Gleason Grading of Prostate Biopsies using Deep Learning

Jul 18, 2019

Abstract: The Gleason score is the most important prognostic marker for prostate cancer patients but suffers from significant inter-observer variability. We developed a fully automated deep learning system to grade prostate biopsies. The system was developed using 5834 biopsies from 1243 patients. A semi-automatic labeling technique was used to circumvent the need for full manual annotation by pathologists. The developed system achieved a high agreement with the reference standard. In a separate observer experiment, the deep learning system outperformed 10 out of 15 pathologists. The system has the potential to improve prostate cancer prognostics by acting as a first or second reader.

Epithelium segmentation using deep learning in H&E-stained prostate specimens with immunohistochemistry as reference standard

Aug 17, 2018

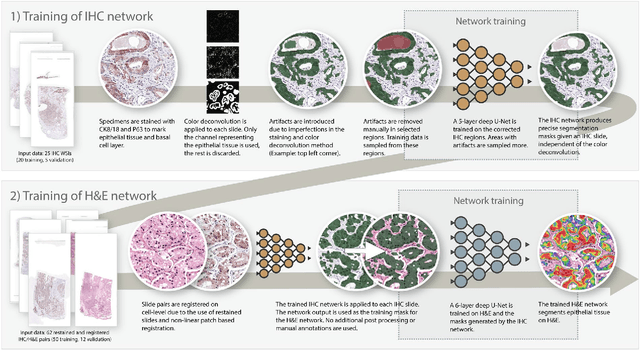

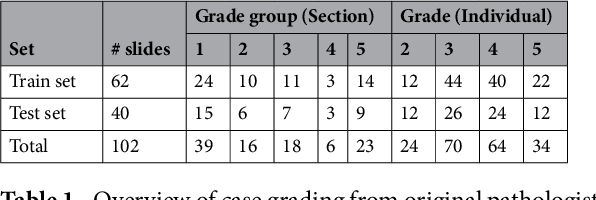

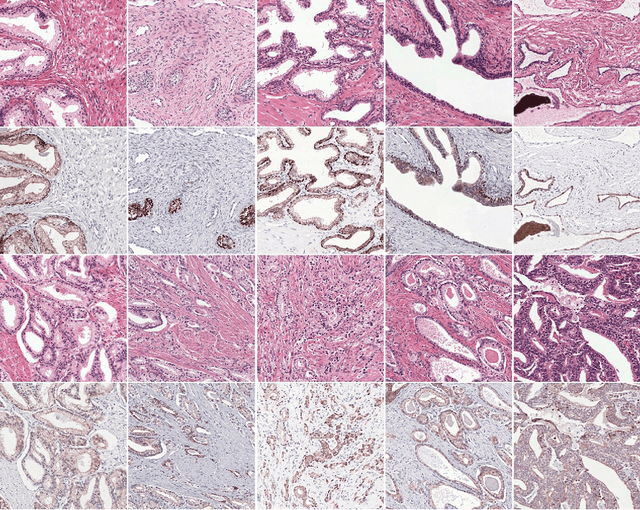

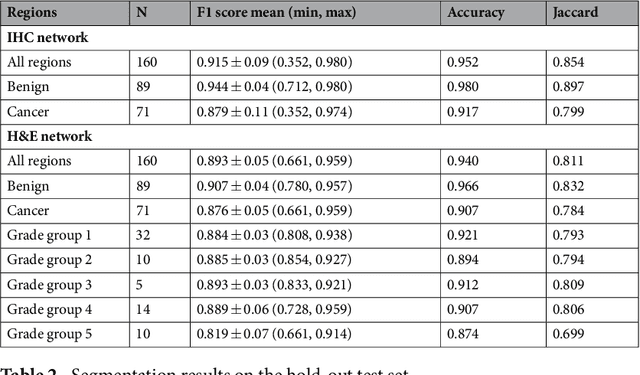

Abstract: Prostate cancer (PCa) is graded by pathologists by examining the architectural pattern of cancerous epithelial tissue on hematoxylin and eosin (H&E) stained slides. Given the importance of gland morphology, automatically differentiating between glandular epithelial tissue and other tissues is an important prerequisite for the development of automated methods for detecting PCa. We propose a new method, using deep learning, for automatically segmenting epithelial tissue in digitized prostatectomy slides. We employed immunohistochemistry (IHC) to render the ground truth less subjective and more precise compared to manual outlining on H&E slides, especially in areas with high-grade and poorly differentiated PCa. Our dataset consisted of 102 tissue blocks, including both low and high grade PCa. From each block a single new section was cut, stained with H&E, scanned, restained using P63 and CK8/18 to highlight the epithelial structure, and scanned again. The H&E slides were co-registered to the IHC slides. On a subset of the IHC slides we applied color deconvolution, corrected stain errors manually, and trained a U-Net to perform segmentation of epithelial structures. Whole-slide segmentation masks generated by the IHC U-Net were used to train a second U-Net on H&E. Our system makes precise cell-level segmentations and segments both intact glands and individual (tumor) epithelial cells. We achieved an F1-score of 0.895 on a hold-out test set and 0.827 on an external reference set from a different center. We envision this segmentation as being the first part of a fully automated prostate cancer detection and grading pipeline.
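The F1-score reported for the segmentation can be illustrated with a small sketch that compares a predicted binary mask against a reference mask. The abstract does not state how the score was aggregated, so the pixel-level formulation here, the function name `f1_score_masks`, and the toy masks are assumptions for illustration only.

```python
def f1_score_masks(predicted, reference):
    """F1-score between two binary masks given as equally shaped nested lists.

    Pixels equal to 1 are treated as epithelium, 0 as background (an
    assumption of this sketch). F1 = 2*TP / (2*TP + FP + FN).
    """
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of rows")

    true_pos = false_pos = false_neg = 0
    for pred_row, ref_row in zip(predicted, reference):
        if len(pred_row) != len(ref_row):
            raise ValueError("mask rows must have the same length")
        for pred, ref in zip(pred_row, ref_row):
            if pred and ref:
                true_pos += 1
            elif pred and not ref:
                false_pos += 1
            elif ref and not pred:
                false_neg += 1

    denominator = 2 * true_pos + false_pos + false_neg
    if denominator == 0:
        # Neither mask contains any foreground pixel.
        return 1.0
    return 2 * true_pos / denominator


# Toy 2x3 masks: 2 true positives, 1 false positive, 1 false negative.
predicted_mask = [[1, 1, 0],
                  [0, 1, 0]]
reference_mask = [[1, 0, 0],
                  [0, 1, 1]]
score = f1_score_masks(predicted_mask, reference_mask)
```

For binary masks this pixel-level F1 is identical to the Dice coefficient, which is why the two terms are often used interchangeably in segmentation work.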
