William J. Beksi

UEOF: A Benchmark Dataset for Underwater Event-Based Optical Flow

Jan 15, 2026

Abstract:Underwater imaging is fundamentally challenging due to wavelength-dependent light attenuation, strong scattering from suspended particles, turbidity-induced blur, and non-uniform illumination. These effects impair standard cameras and make ground-truth motion nearly impossible to obtain. On the other hand, event cameras offer microsecond resolution and high dynamic range. Nonetheless, progress on investigating event cameras for underwater environments has been limited due to the lack of datasets that pair realistic underwater optics with accurate optical flow. To address this problem, we introduce the first synthetic underwater benchmark dataset for event-based optical flow derived from physically-based ray-traced RGBD sequences. Using a modern video-to-event pipeline applied to rendered underwater videos, we produce realistic event data streams with dense ground-truth flow, depth, and camera motion. Moreover, we benchmark state-of-the-art learning-based and model-based optical flow prediction methods to understand how underwater light transport affects event formation and motion estimation accuracy. Our dataset establishes a new baseline for future development and evaluation of underwater event-based perception algorithms. The source code and dataset for this project are publicly available at https://robotic-vision-lab.github.io/ueof.

CropNeRF: A Neural Radiance Field-Based Framework for Crop Counting

Jan 01, 2026

Abstract:Rigorous crop counting is crucial for effective agricultural management and informed intervention strategies. However, in outdoor field environments, partial occlusions combined with inherent ambiguity in distinguishing clustered crops from individual viewpoints pose an immense challenge for image-based segmentation methods. To address these problems, we introduce a novel crop counting framework designed for exact enumeration via 3D instance segmentation. Our approach utilizes 2D images captured from multiple viewpoints and associates independent instance masks for neural radiance field (NeRF) view synthesis. We introduce crop visibility and mask consistency scores, which are incorporated alongside 3D information from a NeRF model. This results in an effective segmentation of crop instances in 3D and highly accurate crop counts. Furthermore, our method eliminates the dependence on crop-specific parameter tuning. We validate our framework on three agricultural datasets consisting of cotton bolls, apples, and pears, and demonstrate consistent counting performance despite major variations in crop color, shape, and size. A comparative analysis against the state of the art highlights superior performance on crop counting tasks. Lastly, we contribute a cotton plant dataset to advance further research on this topic.

CropTrack: A Tracking with Re-Identification Framework for Precision Agriculture

Dec 31, 2025

Abstract:Multiple-object tracking (MOT) in agricultural environments presents major challenges due to repetitive patterns, similar object appearances, sudden illumination changes, and frequent occlusions. Contemporary trackers in this domain rely on the motion of objects rather than appearance for association. Nevertheless, they struggle to maintain object identities when targets undergo frequent and strong occlusions. The high similarity of object appearances makes integrating appearance-based association nontrivial for agricultural scenarios. To solve this problem, we propose CropTrack, a novel MOT framework based on the combination of appearance and motion information. CropTrack integrates a reranking-enhanced appearance association, a one-to-many association with appearance-based conflict resolution strategy, and an exponential moving average prototype feature bank to improve appearance-based association. Evaluated on publicly available agricultural MOT datasets, CropTrack demonstrates consistent identity preservation, outperforming traditional motion-based tracking methods. Compared to the state of the art, CropTrack achieves significant gains in identification F1 and association accuracy scores with a lower number of identity switches.
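
As a rough illustration of what an exponential moving average prototype feature bank can look like, the following minimal Python sketch keeps one smoothed appearance vector per track and scores new detections by cosine similarity. It is not the CropTrack implementation: the class name `PrototypeBank`, the `momentum` value, and the use of L2-normalized features are all assumptions made for this example.

```python
import math


def l2_normalize(vec):
    """Scale a feature vector to unit length (returned unchanged if all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


class PrototypeBank:
    """Hypothetical per-track appearance memory updated with an EMA."""

    def __init__(self, momentum=0.9):
        # momentum close to 1.0 makes the prototype change slowly (assumed value)
        self.momentum = momentum
        self.prototypes = {}

    def update(self, track_id, feature):
        """Blend a new appearance feature into the stored prototype of a track."""
        feature = l2_normalize(feature)
        old = self.prototypes.get(track_id)
        if old is None:
            # First observation of this track: store the feature directly
            self.prototypes[track_id] = feature
            return
        m = self.momentum
        blended = [m * o + (1.0 - m) * f for o, f in zip(old, feature)]
        self.prototypes[track_id] = l2_normalize(blended)

    def similarity(self, track_id, feature):
        """Cosine similarity between a detection feature and a track prototype."""
        feature = l2_normalize(feature)
        return sum(p * f for p, f in zip(self.prototypes[track_id], feature))
```

With a high momentum, a single outlier feature (for example, from a partially occluded crop) shifts the prototype only slightly, which is the usual motivation for smoothing appearance features over time.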

Inertia-Informed Orientation Priors for Event-Based Optical Flow Estimation

Nov 17, 2025

Abstract:Event cameras, by virtue of their working principle, directly encode motion within a scene. Many learning-based and model-based methods exist that estimate event-based optical flow; however, the temporally dense yet spatially sparse nature of events poses significant challenges. To address these issues, contrast maximization (CM) is a prominent model-based optimization methodology that estimates the motion trajectories of events within an event volume by optimally warping them. Since its introduction, the CM framework has undergone a series of refinements by the computer vision community. Nonetheless, it remains a highly non-convex optimization problem. In this paper, we introduce a novel biologically-inspired hybrid CM method for event-based optical flow estimation that couples visual and inertial motion cues. Concretely, we propose the use of orientation maps, derived from camera 3D velocities, as priors to guide the CM process. The orientation maps provide directional guidance and constrain the space of estimated motion trajectories. We show that this orientation-guided formulation leads to improved robustness and convergence in event-based optical flow estimation. The evaluation of our approach on the MVSEC, DSEC, and ECD datasets yields superior accuracy scores over the state of the art.

Secrets of Edge-Informed Contrast Maximization for Event-Based Vision

Sep 22, 2024

Abstract:Event cameras capture the motion of intensity gradients (edges) in the image plane in the form of rapid asynchronous events. When accumulated in 2D histograms, these events depict overlays of the edges in motion, consequently obscuring the spatial structure of the generating edges. Contrast maximization (CM) is an optimization framework that can reverse this effect and produce sharp spatial structures that resemble the moving intensity gradients by estimating the motion trajectories of the events. Nonetheless, CM is still an underexplored area of research with avenues for improvement. In this paper, we propose a novel hybrid approach that extends CM from uni-modal (events only) to bi-modal (events and edges). We leverage the underpinning concept that, given a reference time, optimally warped events produce sharp gradients consistent with the moving edge at that time. Specifically, we formalize a correlation-based objective to aid CM and provide key insights into the incorporation of multiscale and multireference techniques. Moreover, our edge-informed CM method yields superior sharpness scores and establishes new state-of-the-art event optical flow benchmarks on the MVSEC, DSEC, and ECD datasets.
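
The core idea of contrast maximization described above can be sketched in a few lines of Python. This is a toy version under strong assumptions, not the edge-informed method of the paper: it assumes a single global flow for all events, nearest-pixel accumulation, image variance as the contrast measure, and an exhaustive search over a handful of candidate flows. All function names are hypothetical.

```python
def warp_events(events, flow, t_ref=0.0):
    """Move each event (x, y, t) back along a candidate flow to time t_ref."""
    vx, vy = flow
    return [(x - vx * (t - t_ref), y - vy * (t - t_ref)) for x, y, t in events]


def image_of_warped_events(warped, width, height):
    """Accumulate warped events into a 2D histogram (nearest-pixel voting)."""
    image = [[0] * width for _ in range(height)]
    for x, y in warped:
        col, row = round(x), round(y)
        if 0 <= col < width and 0 <= row < height:
            image[row][col] += 1
    return image


def contrast(image):
    """Variance of the event image; sharper (better aligned) images score higher."""
    pixels = [v for row in image for v in row]
    mean = sum(pixels) / len(pixels)
    return sum((v - mean) ** 2 for v in pixels) / len(pixels)


def best_flow(events, candidates, width, height):
    """Pick the candidate flow whose warped event image has the highest contrast."""
    def score(flow):
        warped = warp_events(events, flow)
        return contrast(image_of_warped_events(warped, width, height))
    return max(candidates, key=score)


# Synthetic example: a vertical edge starting at x = 2 and moving 1 pixel per time unit.
events = [(2 + t, y, t) for t in range(4) for y in range(5)]
candidates = [(vx, 0) for vx in (-2, -1, 0, 1, 2)]
estimated = best_flow(events, candidates, width=8, height=5)
```

In this synthetic case only the true velocity collapses all events onto a single column, so it yields the sharpest image. Practical CM methods replace the candidate search with continuous optimization over dense or parametric motion models, which is where the non-convexity arises.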

Semi-Supervised Variational Adversarial Active Learning via Learning to Rank and Agreement-Based Pseudo Labeling

Aug 23, 2024

Abstract:Active learning aims to reduce the amount of labor involved in data labeling by automating the selection of unlabeled samples via an acquisition function. For example, variational adversarial active learning (VAAL) leverages an adversarial network to discriminate unlabeled samples from labeled ones using latent space information. However, VAAL has the following shortcomings: (i) it does not exploit target task information, and (ii) unlabeled data is only used for sample selection rather than model training. To address these limitations, we introduce novel techniques that significantly improve the use of abundant unlabeled data during training and take into account the task information. Concretely, we propose an improved pseudo-labeling algorithm that leverages information from all unlabeled data in a semi-supervised manner, thus allowing a model to explore a richer data space. In addition, we develop a ranking-based loss prediction module that converts predicted relative ranking information into a differentiable ranking loss. This loss can be embedded as a rank variable into the latent space of a variational autoencoder and then trained with a discriminator in an adversarial fashion for sample selection. We demonstrate the superior performance of our approach over the state of the art on various image classification and segmentation benchmark datasets.

Few-Shot Fruit Segmentation via Transfer Learning

May 04, 2024

Abstract:Advancements in machine learning, computer vision, and robotics have paved the way for transformative solutions in various domains, particularly in agriculture. For example, accurate identification and segmentation of fruits from field images play a crucial role in automating jobs such as harvesting, disease detection, and yield estimation. However, achieving robust and precise infield fruit segmentation remains a challenging task since large amounts of labeled data are required to handle variations in fruit size, shape, color, and occlusion. In this paper, we develop a few-shot semantic segmentation framework for infield fruits using transfer learning. Concretely, our work is aimed at addressing agricultural domains that lack publicly available labeled data. Motivated by similar success in urban scene parsing, we propose specialized pre-training using a public benchmark dataset for fruit transfer learning. By leveraging pre-trained neural networks, accurate semantic segmentation of fruit in the field is achieved with only a few labeled images. Furthermore, we show that models with pre-training learn to distinguish between fruit still on the trees and fruit that have fallen on the ground, and they can effectively transfer the knowledge to the target fruit dataset.

A Human-Centered Approach for Bootstrapping Causal Graph Creation

Mar 03, 2024

Abstract:Causal inference, a cornerstone in disciplines such as economics, genomics, and medicine, is increasingly being recognized as fundamental to advancing the field of robotics. In particular, the ability to reason about cause and effect from observational data is crucial for robust generalization in robotic systems. However, the construction of a causal graphical model, a mechanism for representing causal relations, presents an immense challenge. Currently, a nuanced grasp of causal inference, coupled with an understanding of causal relationships, must be manually programmed into a causal graphical model. To address this difficulty, we present initial results towards a human-centered augmented reality framework for creating causal graphical models. Concretely, our system bootstraps the causal discovery process by involving humans in selecting variables, establishing relationships, performing interventions, generating counterfactual explanations, and evaluating the resulting causal graph at every step. We highlight the potential of our framework via a physical robot manipulator on a pick-and-place task.

NTrack: A Multiple-Object Tracker and Dataset for Infield Cotton Boll Counting

Dec 18, 2023

Abstract:In agriculture, automating the accurate tracking of fruits, vegetables, and fiber is a difficult problem. The issue becomes extremely challenging in dynamic field environments. Yet, this information is critical for making day-to-day agricultural decisions, assisting breeding programs, and much more. To tackle this dilemma, we introduce NTrack, a novel multiple-object tracking framework based on the linear relationship between the locations of neighboring tracks. NTrack computes dense optical flow and utilizes particle filtering to guide each tracker. Correspondences between detections and tracks are found through data association via direct observations and indirect cues, which are then combined to obtain an updated observation. Our modular multiple-object tracking system is independent of the underlying detection method, thus allowing for the interchangeable use of any off-the-shelf object detector. We show the efficacy of our approach on the task of tracking and counting infield cotton bolls. Experimental results show that our system exceeds contemporary tracking and cotton boll-based counting methods by a large margin. Furthermore, we publicly release the first annotated cotton boll video dataset to the research community.

IPVNet: Learning Implicit Point-Voxel Features for Open-Surface 3D Reconstruction

Nov 05, 2023

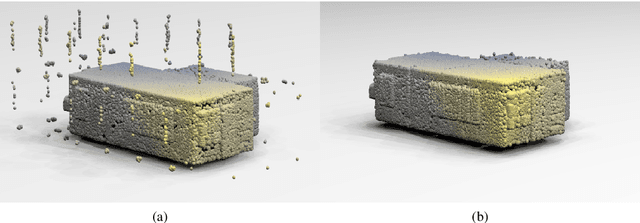

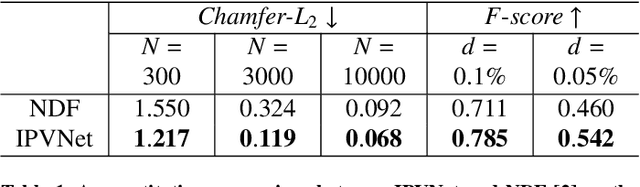

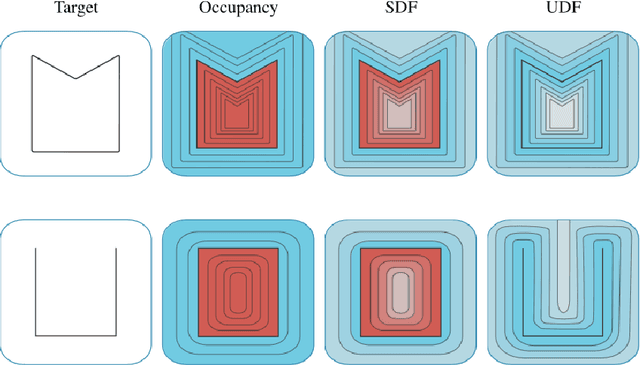

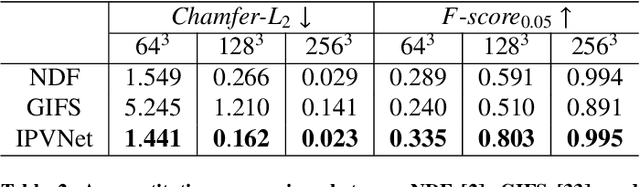

Abstract:Reconstruction of 3D open surfaces (e.g., non-watertight meshes) is an underexplored area of computer vision. Recent learning-based implicit techniques have removed previous barriers by enabling reconstruction in arbitrary resolutions. Yet, such approaches often rely on distinguishing between the inside and outside of a surface in order to extract a zero level set when reconstructing the target. In the case of open surfaces, this distinction often leads to artifacts such as the artificial closing of surface gaps. However, real-world data may contain intricate details defined by salient surface gaps. Implicit functions that regress an unsigned distance field have shown promise in reconstructing such open surfaces. Nonetheless, current unsigned implicit methods rely on a discretized representation of the raw data. This not only bounds the learning process to the representation's resolution, but it also introduces outliers in the reconstruction. To enable accurate reconstruction of open surfaces without introducing outliers, we propose a learning-based implicit point-voxel model (IPVNet). IPVNet predicts the unsigned distance between a surface and a query point in 3D space by leveraging both raw point cloud data and its discretized voxel counterpart. Experiments on synthetic and real-world public datasets demonstrate that IPVNet outperforms the state of the art while producing far fewer outliers in the resulting reconstruction.
