Jordan A. James

CropTrack: A Tracking with Re-Identification Framework for Precision Agriculture

Dec 31, 2025

Abstract: Multiple-object tracking (MOT) in agricultural environments presents major challenges due to repetitive patterns, similar object appearances, sudden illumination changes, and frequent occlusions. Contemporary trackers in this domain rely on object motion rather than appearance for association. Nevertheless, they struggle to maintain object identities when targets undergo frequent and strong occlusions. The high similarity of object appearances makes integrating appearance-based association nontrivial in agricultural scenarios. To solve this problem, we propose CropTrack, a novel MOT framework that combines appearance and motion information. CropTrack integrates a reranking-enhanced appearance association, a one-to-many association strategy with appearance-based conflict resolution, and an exponential moving average prototype feature bank to improve appearance-based association. Evaluated on publicly available agricultural MOT datasets, CropTrack demonstrates consistent identity preservation, outperforming traditional motion-based tracking methods. Compared to the state of the art, CropTrack achieves significant gains in identification F1 and association accuracy scores with fewer identity switches.
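The abstract names an exponential moving average (EMA) prototype feature bank but does not give its update rule. The following is a minimal sketch of the general idea only, not CropTrack's implementation: each track keeps one L2-normalized prototype vector that is blended with every newly matched appearance feature. The class name `EmaPrototypeBank`, the `momentum` parameter and its default of 0.9, and the cosine-similarity lookup are all assumptions made for illustration.

```python
import math


def l2_normalize(vec):
    """Return a unit-length copy of vec (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return list(vec)
    return [x / norm for x in vec]


class EmaPrototypeBank:
    """Hypothetical per-track appearance bank updated by an EMA."""

    def __init__(self, momentum=0.9):
        # momentum is an assumed hyperparameter: the weight kept on the old prototype.
        if not 0.0 <= momentum < 1.0:
            raise ValueError("momentum must be in [0, 1)")
        self.momentum = momentum
        self.prototypes = {}  # track_id -> unit-length prototype vector

    def update(self, track_id, feature):
        """Blend a newly matched feature into the prototype of track_id."""
        feature = l2_normalize(feature)
        old = self.prototypes.get(track_id)
        if old is None:
            # First observation of this track: the feature becomes the prototype.
            self.prototypes[track_id] = feature
        else:
            m = self.momentum
            blended = [m * o + (1.0 - m) * f for o, f in zip(old, feature)]
            # Re-normalize so dot products remain cosine similarities.
            self.prototypes[track_id] = l2_normalize(blended)
        return self.prototypes[track_id]

    def best_match(self, feature):
        """Return (track_id, cosine similarity) of the closest prototype."""
        feature = l2_normalize(feature)
        best_id, best_sim = None, -1.0
        for track_id, proto in self.prototypes.items():
            sim = sum(p * f for p, f in zip(proto, feature))
            if sim > best_sim:
                best_id, best_sim = track_id, sim
        return best_id, best_sim


if __name__ == "__main__":
    bank = EmaPrototypeBank(momentum=0.9)
    bank.update(1, [1.0, 0.0, 0.0])
    bank.update(2, [0.0, 1.0, 0.0])
    print(bank.best_match([0.9, 0.1, 0.0]))
```

A higher momentum makes a prototype change more slowly, which in principle limits the influence of a single occluded or badly lit detection; the right value is dataset-dependent and is not stated in the abstract.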

Few-Shot Fruit Segmentation via Transfer Learning

May 04, 2024

Abstract: Advancements in machine learning, computer vision, and robotics have paved the way for transformative solutions in various domains, particularly in agriculture. For example, accurate identification and segmentation of fruits from field images play a crucial role in automating jobs such as harvesting, disease detection, and yield estimation. However, achieving robust and precise infield fruit segmentation remains a challenging task since large amounts of labeled data are required to handle variations in fruit size, shape, color, and occlusion. In this paper, we develop a few-shot semantic segmentation framework for infield fruits using transfer learning. Concretely, our work is aimed at addressing agricultural domains that lack publicly available labeled data. Motivated by similar success in urban scene parsing, we propose specialized pre-training using a public benchmark dataset for fruit transfer learning. By leveraging pre-trained neural networks, accurate semantic segmentation of fruit in the field is achieved with only a few labeled images. Furthermore, we show that models with pre-training learn to distinguish between fruit still on the trees and fruit that has fallen on the ground, and they can effectively transfer the knowledge to the target fruit dataset.

CitDet: A Benchmark Dataset for Citrus Fruit Detection

Sep 11, 2023

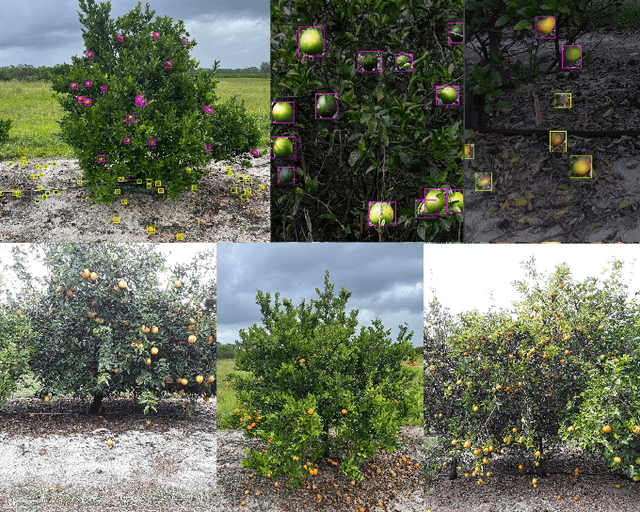

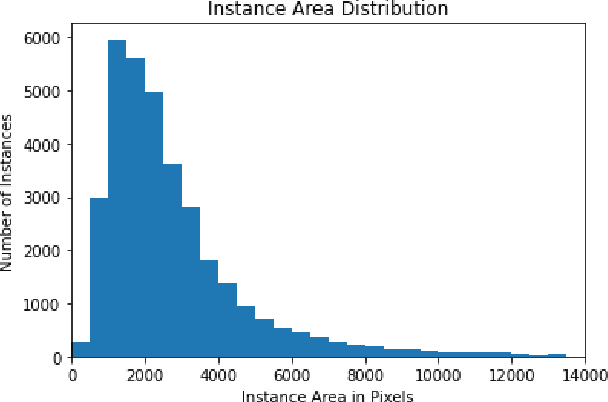

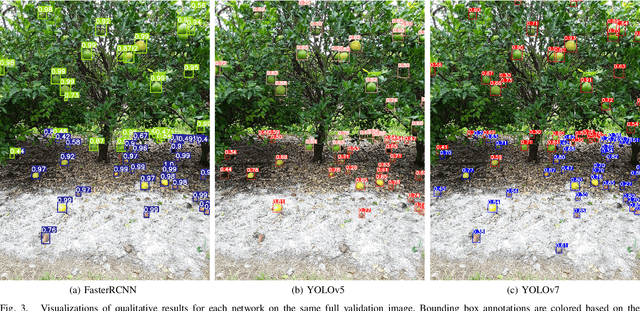

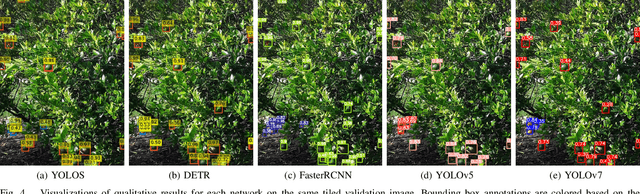

Abstract: In this letter, we present a new dataset to advance the state of the art in detecting citrus fruit and accurately estimating yield on trees affected by the Huanglongbing (HLB) disease in orchard environments via imaging. Although significant progress has been made in solving the fruit detection problem, the lack of publicly available datasets has complicated direct comparison of results. For instance, citrus detection has long been of interest in the agricultural research community, yet there is an absence of work, particularly involving public datasets of citrus affected by HLB. To address this issue, we enhance state-of-the-art object detection methods for use in typical orchard settings. Concretely, we provide high-resolution images of citrus trees located in an area known to be highly affected by HLB, along with high-quality bounding box annotations of citrus fruit. Fruit on both the trees and the ground are labeled to allow for identification of fruit location, which contributes to advancements in yield estimation and a potential measure of HLB impact via fruit drop. The dataset consists of over 32,000 bounding box annotations for fruit instances contained in 579 high-resolution images. In summary, our contributions are the following: (i) we introduce a novel dataset along with baseline performance benchmarks on multiple contemporary object detection algorithms, (ii) we show the ability to accurately capture fruit location on tree or on ground, and finally (iii) we present a correlation of our results with yield estimations.
