Nolan B. Gutierrez

A Human-Centered Approach for Bootstrapping Causal Graph Creation

Mar 03, 2024

Abstract:Causal inference, a cornerstone in disciplines such as economics, genomics, and medicine, is increasingly being recognized as fundamental to advancing the field of robotics. In particular, the ability to reason about cause and effect from observational data is crucial for robust generalization in robotic systems. However, the construction of a causal graphical model, a mechanism for representing causal relations, presents an immense challenge. Currently, a nuanced grasp of causal inference, coupled with an understanding of causal relationships, must be manually programmed into a causal graphical model. To address this difficulty, we present initial results towards a human-centered augmented reality framework for creating causal graphical models. Concretely, our system bootstraps the causal discovery process by involving humans in selecting variables, establishing relationships, performing interventions, generating counterfactual explanations, and evaluating the resulting causal graph at every step. We highlight the potential of our framework via a physical robot manipulator on a pick-and-place task.
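
The intervention step mentioned in this abstract can be illustrated with a minimal sketch of graph surgery on a causal graph. This is not the authors' framework: the parent-set representation, the `intervene` helper, and the pick-and-place variable names are all hypothetical and chosen only for illustration.

```python
def intervene(parents, variable):
    """Return a copy of the graph after do(variable).

    `parents` maps each node to the set of its direct causes.
    An intervention fixes `variable` from outside, so every edge
    pointing into it is removed; all other edges are kept.
    """
    if variable not in parents:
        raise KeyError(f"unknown variable: {variable}")
    return {
        node: set() if node == variable else set(causes)
        for node, causes in parents.items()
    }


# Hypothetical variables for a pick-and-place task (assumed names).
graph = {
    "gripper_closed": set(),
    "object_grasped": {"gripper_closed"},
    "object_at_goal": {"object_grasped"},
}

# Forcing the grasp state cuts its dependence on the gripper.
surgically_altered = intervene(graph, "object_grasped")
```

The original `graph` is left unchanged, so the observational and interventional graphs can be compared side by side.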

MetaMax: Improved Open-Set Deep Neural Networks via Weibull Calibration

Nov 20, 2022

Abstract:Open-set recognition refers to the problem in which classes that were not seen during training appear at inference time. This requires the ability to identify instances of novel classes while maintaining discriminative capability for closed-set classification. OpenMax was the first deep neural network-based approach to address open-set recognition by calibrating the predictive scores of a standard closed-set classification network. In this paper we present MetaMax, a more effective post-processing technique that improves upon contemporary methods by directly modeling class activation vectors. MetaMax removes the need for computing class mean activation vectors (MAVs) and distances between a query image and a class MAV as required in OpenMax. Experimental results show that MetaMax outperforms OpenMax and is comparable in performance to other state-of-the-art approaches.

Evaluating Uncertainty Calibration for Open-Set Recognition

May 15, 2022

Abstract:Despite achieving enormous success in predictive accuracy for visual classification problems, deep neural networks (DNNs) suffer from providing overconfident probabilities on out-of-distribution (OOD) data. Yet, accurate uncertainty estimation is crucial for safe and reliable robot autonomy. In this paper, we evaluate popular calibration techniques for open-set conditions in a way that is distinctly different from the conventional evaluation of calibration methods on OOD data. Our results show that closed-set DNN calibration approaches are much less effective for open-set recognition, which highlights the need to develop new DNN calibration methods to address this problem.
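
To make the notion of calibration concrete, the following is a minimal sketch of expected calibration error (ECE), a commonly used calibration metric. The abstract does not state which metrics the paper reports, so treating ECE as the measure here is an assumption, as are the function name and the equal-width binning.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width bins over confidence in [0, 1].

    `confidences` holds the top predicted probability per sample and
    `correct` holds whether that prediction was right.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have equal length")
    total = len(confidences)
    if total == 0:
        raise ValueError("at least one sample is required")

    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's confidence/accuracy gap by its share of samples.
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece
```

A model that reports 0.9 confidence but is right only three times out of four has a gap of 0.15 in that bin, which is the kind of overconfidence the abstract describes.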

Thermal Image Super-Resolution Using Second-Order Channel Attention with Varying Receptive Fields

Jul 30, 2021

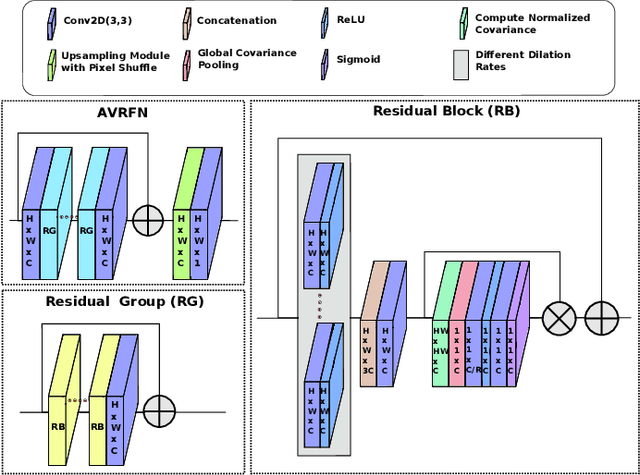

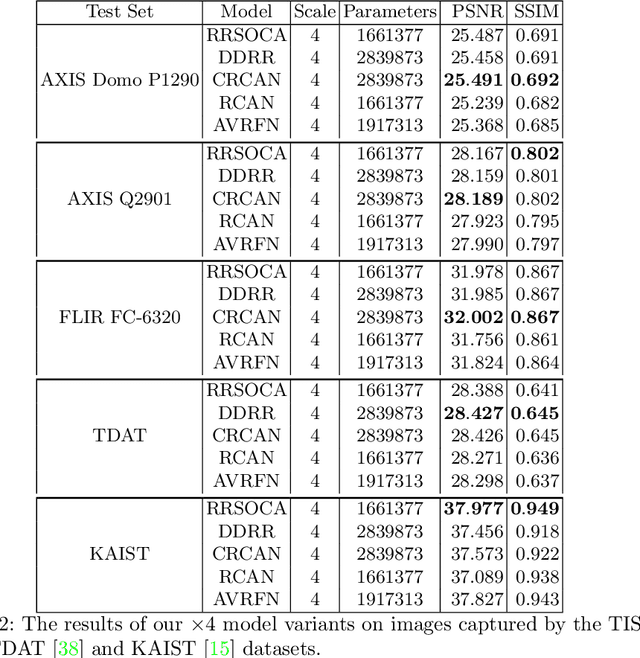

Abstract:Thermal images model the long-infrared range of the electromagnetic spectrum and provide meaningful information even when there is no visible illumination. Yet, unlike imagery that represents radiation from the visible continuum, infrared images are inherently low-resolution due to hardware constraints. The restoration of thermal images is critical for applications that involve safety, search and rescue, and military operations. In this paper, we introduce a system to efficiently reconstruct thermal images. Specifically, we explore how to effectively attend to contrasting receptive fields (RFs) where increasing the RFs of a network can be computationally expensive. For this purpose, we introduce a deep attention to varying receptive fields network (AVRFN). We supply a gated convolutional layer with higher-order information extracted from disparate RFs, whereby an RF is parameterized by a dilation rate. In this way, the dilation rate can be tuned to use fewer parameters thus increasing the efficacy of AVRFN. Our experimental results show an improvement over the state of the art when compared against competing thermal image super-resolution methods.
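
The link between dilation rate, receptive field, and parameter count can be shown with a short sketch. It uses the standard relation for a dilated convolution and is not taken from the AVRFN implementation; the function names and channel counts are assumptions for illustration.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by one dilated convolution kernel."""
    return dilation * (kernel_size - 1) + 1


def conv2d_weight_count(in_channels, out_channels, kernel_size):
    """Number of weights in a square 2D convolution, ignoring bias."""
    return in_channels * out_channels * kernel_size * kernel_size


# A 3x3 kernel with dilation 4 spans a 9x9 window ...
span = effective_kernel_size(3, 4)
# ... while keeping the weight count of an ordinary 3x3 kernel.
dilated_weights = conv2d_weight_count(64, 64, 3)
dense_weights = conv2d_weight_count(64, 64, span)
```

With 64 input and output channels (an assumed width), the dilated layer needs 36,864 weights, whereas a dense 9x9 kernel covering the same window needs 331,776.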

An Uncertainty Estimation Framework for Probabilistic Object Detection

Jun 28, 2021

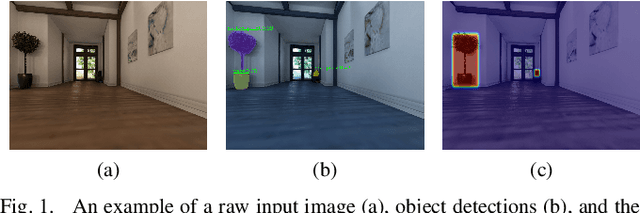

Abstract:In this paper, we introduce a new technique that combines two popular methods to estimate uncertainty in object detection. Quantifying uncertainty is critical in real-world robotic applications. Traditional detection models can be ambiguous even when they provide a high-probability output. Robot actions based on high-confidence, yet unreliable predictions, may result in serious repercussions. Our framework employs deep ensembles and Monte Carlo dropout for approximating predictive uncertainty, and it improves upon the uncertainty estimation quality of the baseline method. The proposed approach is evaluated on publicly available synthetic image datasets captured from sequences of video.
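
One simple way to combine deep ensembles with Monte Carlo dropout is to pool the class probabilities from every ensemble member and every stochastic forward pass, then measure the entropy of the pooled mean. The abstract does not say how the paper merges the two methods, so this pooling rule is an assumption, and the sketch covers only class scores, not bounding-box uncertainty. All names are hypothetical.

```python
import math


def mean_prediction(samples):
    """Element-wise mean of a list of class-probability vectors."""
    count = len(samples)
    n_classes = len(samples[0])
    return [sum(s[i] for s in samples) / count for i in range(n_classes)]


def predictive_entropy(probs):
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def combine(ensemble_samples):
    """Pool predictions from all members and all dropout passes.

    `ensemble_samples[m][t]` is the probability vector produced by
    ensemble member `m` on its `t`-th dropout-enabled forward pass.
    """
    pooled = [probs for member in ensemble_samples for probs in member]
    mean = mean_prediction(pooled)
    return mean, predictive_entropy(mean)


# Two members, two dropout passes each, that disagree completely.
mean, entropy = combine([
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [0.0, 1.0]],
])
```

Each individual prediction above is fully confident, yet the pooled mean is uniform and the entropy is maximal for two classes, which is how disagreement exposes a high-confidence but unreliable detection.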
