Wenju Zhou

A Review of Vision-Based Assistive Systems for Visually Impaired People: Technologies, Applications, and Future Directions

May 20, 2025

Abstract: Visually impaired individuals rely heavily on accurate and timely information about obstacles and their surrounding environments to achieve independent living. In recent years, significant progress has been made in the development of assistive technologies, particularly vision-based systems, that enhance mobility and facilitate interaction with the external world in both indoor and outdoor settings. This paper presents a comprehensive review of recent advances in assistive systems designed for the visually impaired, with a focus on state-of-the-art technologies in obstacle detection, navigation, and user interaction. In addition, emerging trends and future directions in visual guidance systems are discussed.

Dynamic Event-Triggered Discrete-Time Linear Time-Varying System with Privacy-Preservation

Oct 28, 2022

Abstract: This paper focuses on discrete-time wireless sensor networks with privacy-preservation. In practical applications, information exchange between sensors is subject to attacks. To counter the information leakage that such attacks cause during transmission, privacy-preservation is introduced for the system states. To use communication resources more effectively, a dynamic event-triggered set-membership estimator is designed. Moreover, the privacy of the system is analyzed to ensure the security of the real data. As a result, the set-membership estimator with differential privacy is analyzed using recursive convex optimization. Then the steady-state performance of the system is studied. Finally, an example is presented to demonstrate the feasibility of the proposed distributed filter containing privacy-preserving analysis.
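The abstract does not state the triggering condition, so the following is only a minimal sketch of a generic discrete-time dynamic event-triggered rule of the kind commonly used in this literature, not the paper's estimator. The function name, the scalar measurement model, and the parameters `sigma`, `lam`, `theta`, and `eta0` are all assumptions made for illustration. A sensor transmits only when the squared gap between its current measurement and the last transmitted one exceeds a threshold that is relaxed by an internal dynamic variable `eta`.

```python
def dynamic_event_trigger(measurements, sigma=0.1, lam=0.5, theta=2.0, eta0=1.0):
    """Return the time indices at which a sensor would transmit.

    Hypothetical illustration, not the paper's scheme.
    Trigger at step k when  eta_k / theta + sigma - e_k**2 <= 0,
    where e_k is the gap to the last transmitted measurement, and
    update the internal variable as  eta_{k+1} = lam * eta_k + sigma - e_k**2.
    """
    eta = eta0
    last_sent = measurements[0]
    sent = [0]  # the first sample is always transmitted
    for k in range(1, len(measurements)):
        err_sq = (measurements[k] - last_sent) ** 2
        if eta / theta + sigma - err_sq <= 0:
            # Gap too large: transmit, which resets the gap to zero.
            last_sent = measurements[k]
            sent.append(k)
            err_sq = 0.0
        # With lam * theta >= 1, eta stays non-negative under this update.
        eta = lam * eta + sigma - err_sq
    return sent
```

For a constant signal the rule transmits only the first sample, while a sudden jump forces a transmission at the step where it occurs. A static rule would correspond to dropping the `eta / theta` term; the dynamic term generally allows fewer transmissions for the same `sigma`.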

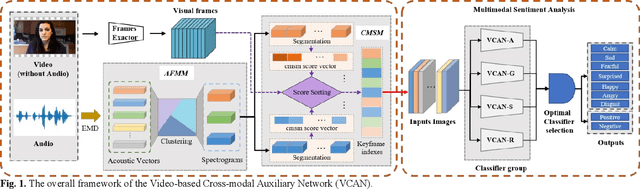

Video-based Cross-modal Auxiliary Network for Multimodal Sentiment Analysis

Aug 30, 2022

Abstract: Multimodal sentiment analysis has a wide range of applications due to its information complementarity in multimodal interactions. Previous works focus more on investigating efficient joint representations, but they rarely consider insufficient unimodal feature extraction and the data redundancy of multimodal fusion. In this paper, a Video-based Cross-modal Auxiliary Network (VCAN) is proposed, which comprises an audio features map module and a cross-modal selection module. The first module is designed to substantially increase feature diversity in audio feature extraction, aiming to improve classification accuracy by providing more comprehensive acoustic representations. To enable the model to handle redundant visual features, the second module is designed to efficiently filter redundant visual frames when integrating audiovisual data. Moreover, a classifier group consisting of several image classification networks is introduced to predict sentiment polarities and emotion categories. Extensive experimental results on the RAVDESS, CMU-MOSI, and CMU-MOSEI benchmarks indicate that VCAN is significantly superior to state-of-the-art methods in the classification accuracy of multimodal sentiment analysis.

Deep Convolution Network Based Emotion Analysis for Automatic Detection of Mild Cognitive Impairment in the Elderly

Nov 09, 2021

Abstract: A significant number of people all over the world suffer from cognitive impairment. Early detection of cognitive impairment is of great importance to both patients and caregivers. However, existing approaches have shortcomings, such as the time and financial cost of clinical visits and neuroimaging. It has been found that patients with cognitive impairment show abnormal emotion patterns. In this paper, we present a novel deep convolution network-based system to detect cognitive impairment by analyzing the evolution of facial emotions while participants watch designed video stimuli. In our proposed system, a novel facial expression recognition algorithm is developed using layers from MobileNet and a Support Vector Machine (SVM), which showed satisfactory performance on three datasets. To verify the proposed system in detecting cognitive impairment, 61 elderly people, including patients with cognitive impairment and healthy people as a control group, were invited to participate in the experiments, and a dataset was built accordingly. With this dataset, the proposed system achieved a detection accuracy of 73.3%.

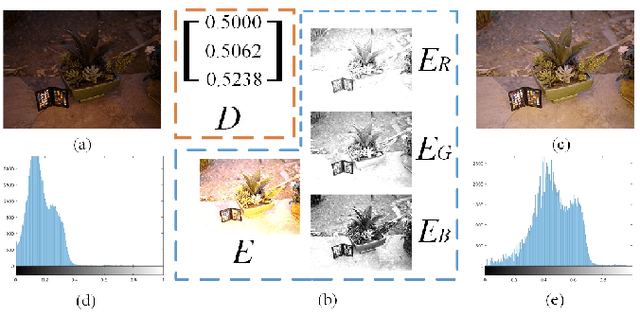

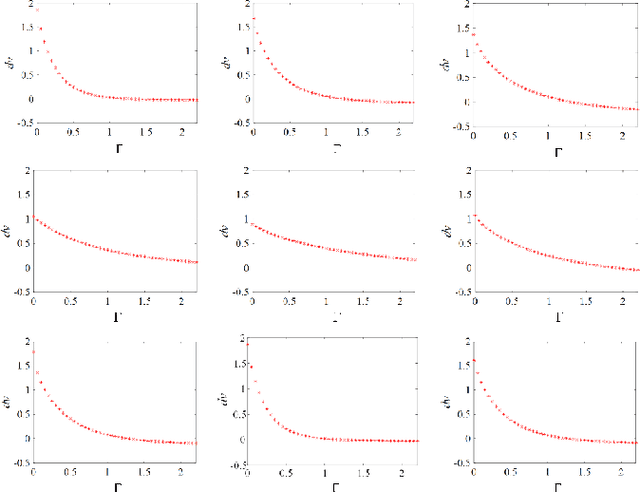

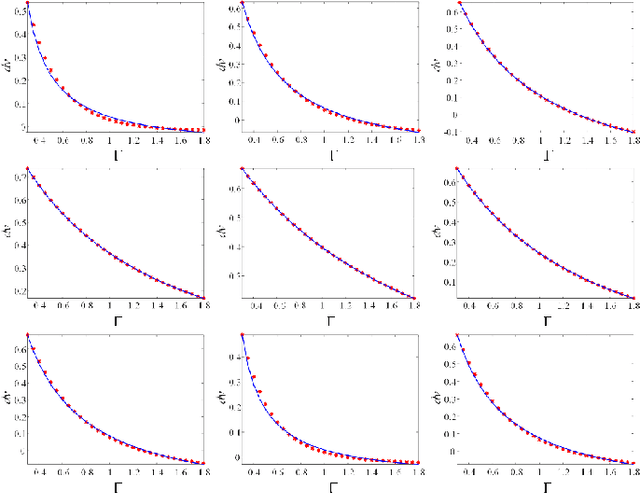

Perceiving Unknown in Dark from Perspective of Cell Vibration

Jun 03, 2020

Abstract: Low light very likely degrades image quality and can even cause visual tasks to fail. Existing image enhancement technologies are prone to over-enhancement or color distortion, and their adaptability is fairly limited. In order to deal with these problems, we utilise the mechanism of biological cell vibration to interpret the formation of color images. In particular, we propose a simple yet effective cell vibration energy (CVE) mapping method for image enhancement. Based on a hypothetical color-formation mechanism, our proposed method first uses cell vibration and photoreceptor correction to determine the photon flow energy for each color channel, and then reconstructs the color image with the maximum energy constraint of the visual system. Photoreceptor cells can adaptively adjust the feedback from the light intensity of the perceived environment. Based on this understanding, we propose a new Gamma auto-adjustment method to modify Gamma values according to individual images. Finally, a fusion method, combining CVE and Gamma auto-adjustment (CVE-G), is proposed to reconstruct the color image under the constraint of lightness. Experimental results show that the proposed algorithm is superior to six state-of-the-art methods in avoiding over-enhancement and color distortion, restoring the textures of dark areas, and reproducing natural colors. The source code will be released at https://github.com/leixiaozhou/CVE-G-Resource-Base.
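The abstract does not give the formula behind the Gamma auto-adjustment, so the sketch below is not the CVE-G method. It only illustrates the general idea of choosing a gamma value per image, using a common heuristic that maps the image's mean intensity to a target value. The function name `auto_gamma` and the parameters `target_mean` and `eps` are assumptions made for this example.

```python
import numpy as np

def auto_gamma(image, target_mean=0.5, eps=1e-6):
    """Apply gamma correction with a gamma derived from the image itself.

    Hypothetical heuristic, not the paper's rule: pick gamma so that the
    image's mean intensity m is mapped to target_mean, i.e. m ** gamma == target_mean.
    Expects intensities in [0, 1]; returns (corrected_image, gamma).
    """
    img = np.clip(np.asarray(image, dtype=np.float64), 0.0, 1.0)
    # Keep the mean strictly inside (0, 1) so both logarithms are finite and non-zero.
    mean = float(np.clip(img.mean(), eps, 1.0 - eps))
    gamma = float(np.log(target_mean) / np.log(mean))
    return img ** gamma, gamma
```

With this heuristic, a dark image (mean below `target_mean`) gets a gamma below 1 and is brightened, while an already bright image gets a gamma above 1 and is darkened, so the correction adapts to each image instead of using one fixed value.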
