Wei-Kai Liu

Monitor Placement for Fault Localization in Deep Neural Network Accelerators

Nov 28, 2023

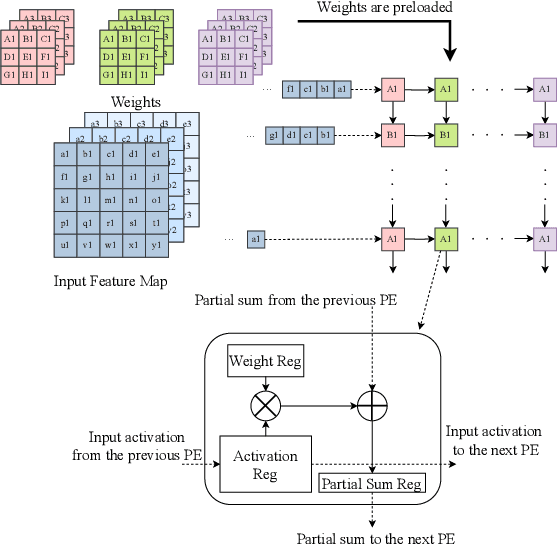

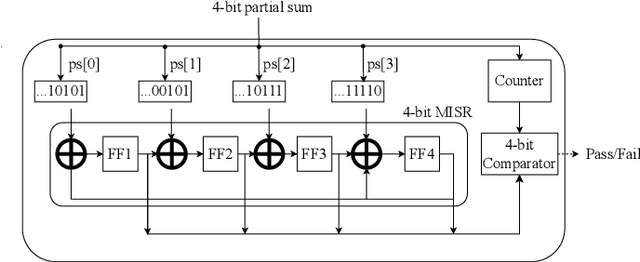

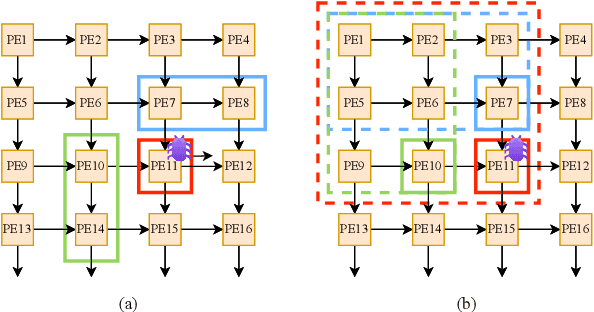

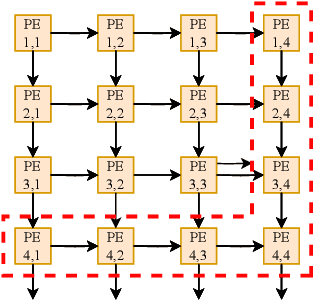

Abstract: Systolic arrays are a prominent choice for deep neural network (DNN) accelerators because they offer parallelism and efficient data reuse. Improving the reliability of DNN accelerators is crucial, as hardware faults can degrade the accuracy of DNN inference. Systolic arrays use a large number of processing elements (PEs) for parallel processing, but when one PE is faulty, the error propagates and affects the outcomes of downstream PEs. Because of the large number of PEs, hardware-based runtime monitoring of every single PE is prohibitively expensive. We present a solution to optimize the placement of hardware monitors within systolic arrays. We first prove that $2N-1$ monitors are needed to localize a single faulty PE, and we derive the corresponding monitor placement. We show that a second placement optimization problem, which minimizes the set of candidate faulty PEs for a given number of monitors, is NP-hard. We therefore propose a heuristic approach to balance reliability and hardware resource utilization in DNN accelerators when the number of monitors is limited. Experimental evaluation shows that localizing a single faulty PE incurs an area overhead of only 0.33% for a $256\times 256$ systolic array.
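To put the $2N-1$ figure in perspective, the following minimal sketch compares the stated monitor count with the total number of PEs. It assumes that $N$ is the side length of an $N\times N$ array, which the abstract suggests but does not state outright; the function names are hypothetical and are not taken from the paper.

```python
def monitors_for_single_fault(n: int) -> int:
    """Monitor count stated in the abstract, 2N - 1.

    Assumption: n is the side length of an n x n systolic array.
    """
    if n < 1:
        raise ValueError("array side length must be positive")
    return 2 * n - 1


def monitored_fraction(n: int) -> float:
    """Ratio of monitors to PEs when only 2N - 1 monitors are placed."""
    return monitors_for_single_fault(n) / (n * n)


if __name__ == "__main__":
    n = 256
    print(monitors_for_single_fault(n))  # 511 monitors
    print(n * n)                         # 65536 PEs
    print(f"{monitored_fraction(n):.4%}")
```

This ratio counts monitors per PE only. It is not the 0.33% area overhead reported in the abstract, which depends on the relative silicon area of a monitor and a PE.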

Investment Ranking Challenge: Identifying the best performing stocks based on their semi-annual returns

Jun 20, 2019

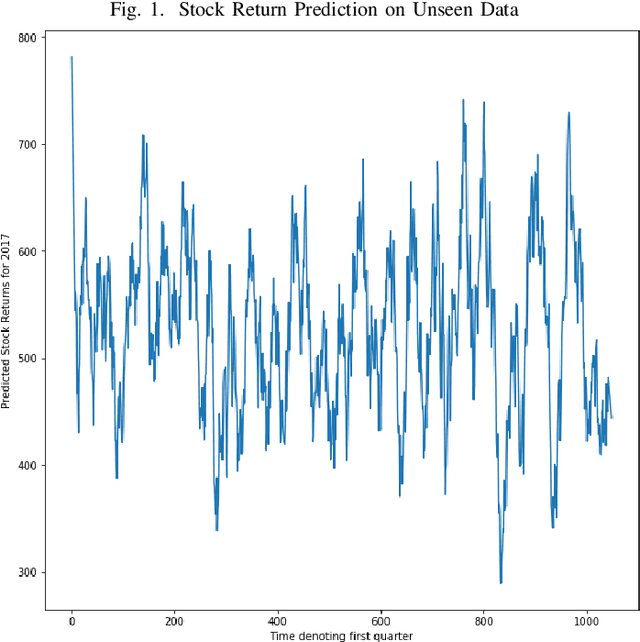

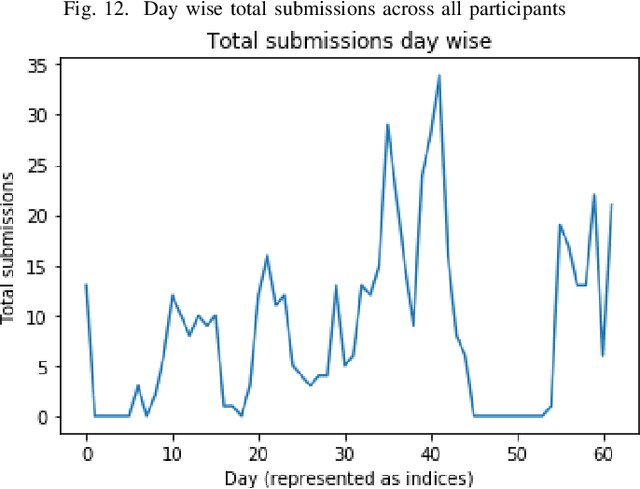

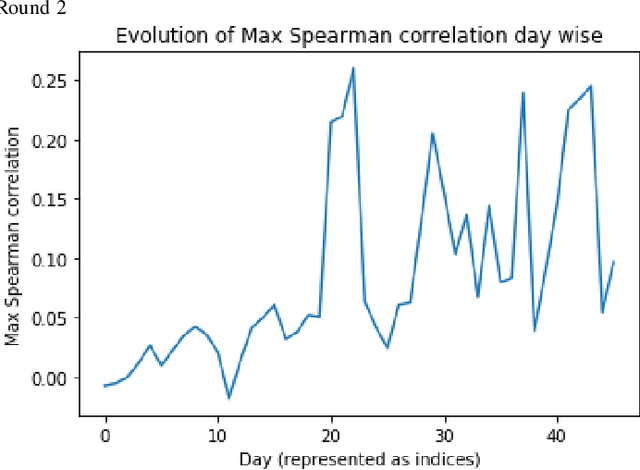

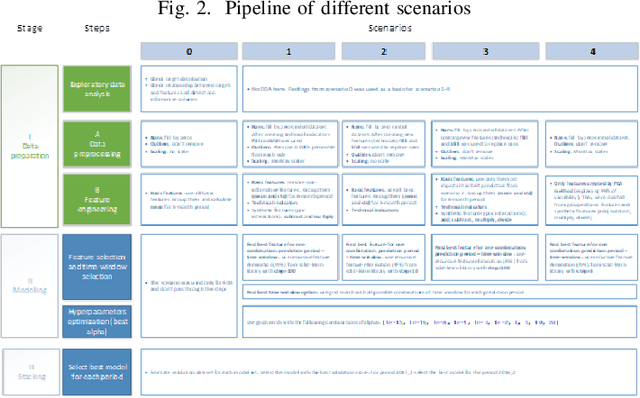

Abstract: In the IEEE Investment Ranking Challenge 2018, participants were asked to build a model that would identify the best-performing stocks based on their returns over a forward six-month window. Anonymized financial predictors and semi-annual returns were provided for a group of anonymized stocks from 1996 to 2017, divided into 42 non-overlapping six-month periods. The second half of 2017 was used as an out-of-sample test of the model's performance. The metrics used were Spearman's rank correlation coefficient and the Normalized Discounted Cumulative Gain (NDCG) of the top 20% of a model's predicted rankings. The top six participants were invited to describe their approaches. The solutions were varied and were based on selecting a subset of the data for training, combinations of deep and shallow neural networks, different boosting algorithms, different models with different sets of features, a linear support vector machine, and a combination of a convolutional neural network (CNN) and long short-term memory (LSTM).
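The two metrics named in the abstract can be sketched in plain Python as follows. This is an illustrative sketch, not the challenge's official scoring code: the cutoff rule (`k` as 20% of the list, rounded down, at least 1), the use of non-negative relevance scores as gains, and all function names are assumptions made here.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks.

    Undefined (division by zero) if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def ndcg_top_fraction(predicted, relevance, fraction=0.2):
    """NDCG over the top `fraction` of items ranked by predicted score.

    Assumption: `relevance` holds non-negative gains, one per item.
    """
    k = max(1, int(len(predicted) * fraction))
    top = sorted(range(len(predicted)),
                 key=lambda i: predicted[i], reverse=True)[:k]
    ideal = sorted(relevance, reverse=True)[:k]
    dcg = sum(relevance[i] / math.log2(pos + 2) for pos, i in enumerate(top))
    idcg = sum(r / math.log2(pos + 2) for pos, r in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0
```

Raw returns can be negative, so a real scorer would first map them to non-negative relevance values (for example, by rank); how the challenge did this is not stated in the abstract.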
