Wei-Chi Chen

Zero-Touch Network on Industrial IoT: An End-to-End Machine Learning Approach

Apr 26, 2022

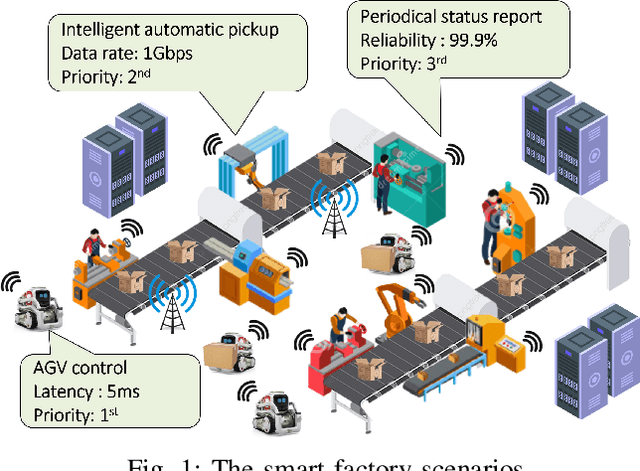

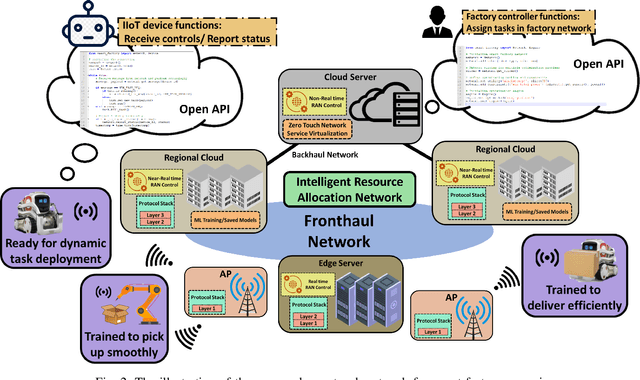

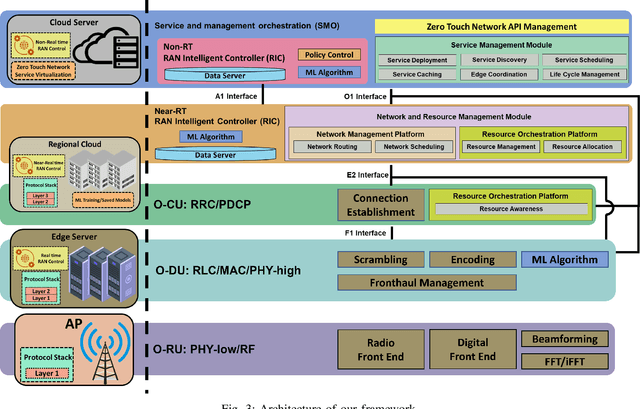

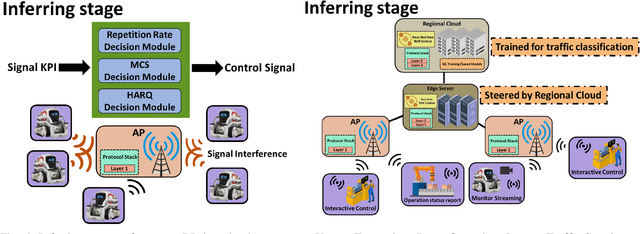

Abstract: An Industry 4.0-enabled smart factory is expected to bring about the next revolution for manufacturers. Although artificial intelligence (AI) technologies have improved productivity, current use cases are limited to small-scale, single-task operations. To unlock the potential of the smart factory, this paper develops zero-touch network systems for intelligent manufacturing that support distributed AI applications at large scale, in both the training and inference stages. The open radio access network (O-RAN) architecture is first introduced as the zero-touch platform, enabling global control of the communication and computation infrastructure in the field. The designed serverless framework enables intelligent and efficient learning-task assignment and resource allocation. Hence, requested learning tasks can be assigned to appropriate robots, and the underlying infrastructure can be used to support those tasks without expert knowledge. Moreover, owing to the flexibility of the proposed network system, powerful AI-enabled networking algorithms can be used to meet service-level agreements and deliver high performance for factory workloads. Finally, three open research directions for the zero-touch smart factory are discussed: backward compatibility, end-to-end enhancements, and cybersecurity.
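The abstract does not say how the serverless framework matches learning tasks to robots. As a purely illustrative sketch, assuming each robot advertises hypothetical free CPU and memory figures and each task declares its needs, a simple greedy heuristic (most demanding task first, placed on the least-loaded robot that can still host it) could look like the following. This is not the paper's algorithm; `Robot`, `Task`, and `assign_tasks` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Robot:
    name: str
    free_cpu: float  # hypothetical: available CPU cores
    free_mem: float  # hypothetical: available memory in GB
    tasks: List[str] = field(default_factory=list)


@dataclass
class Task:
    name: str
    cpu: float  # hypothetical: CPU cores required
    mem: float  # hypothetical: memory in GB required


def assign_tasks(tasks: List[Task], robots: List[Robot]) -> List[str]:
    """Greedy placement: handle the most demanding tasks first and put each
    one on the robot with the most free CPU among those that can host it.
    Returns the names of tasks that could not be placed anywhere."""
    unassigned = []
    for task in sorted(tasks, key=lambda t: (t.cpu, t.mem), reverse=True):
        candidates = [
            r for r in robots
            if r.free_cpu >= task.cpu and r.free_mem >= task.mem
        ]
        if not candidates:
            unassigned.append(task.name)
            continue
        best = max(candidates, key=lambda r: r.free_cpu)  # least-loaded robot
        best.free_cpu -= task.cpu
        best.free_mem -= task.mem
        best.tasks.append(task.name)
    return unassigned
```

For example, with `robots = [Robot("arm-1", 4, 8), Robot("agv-1", 2, 4)]` and tasks `train-vision` (3 cores, 6 GB), `train-path` (2, 4), and `infer-defect` (1, 1), every task fits: `arm-1` receives `train-vision` and `infer-defect`, and `agv-1` receives `train-path`. A production scheduler would also weigh network conditions and service-level targets, which this sketch ignores.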

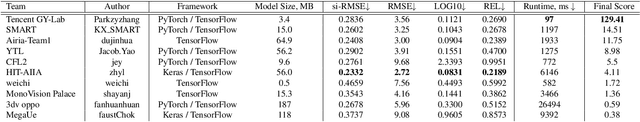

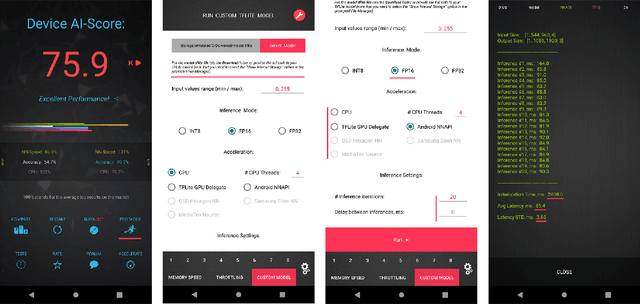

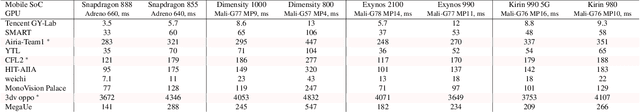

Fast and Accurate Single-Image Depth Estimation on Mobile Devices, Mobile AI 2021 Challenge: Report

May 17, 2021

Abstract: Depth estimation is an important computer vision problem with many practical applications on mobile devices. While many solutions have been proposed for this task, they are usually very computationally expensive and thus are not suitable for on-device inference. To address this problem, we introduce the first Mobile AI challenge, where the target is to develop end-to-end deep learning-based depth estimation solutions that can demonstrate near real-time performance on smartphones and IoT platforms. For this, the participants were provided with a new large-scale dataset containing RGB-depth image pairs obtained with a dedicated ZED stereo camera that produces high-resolution depth maps for objects located up to 50 meters away. The runtime of all models was evaluated on the popular Raspberry Pi 4 platform with a mobile ARM-based Broadcom chipset. The proposed solutions can generate VGA-resolution depth maps at up to 10 FPS on the Raspberry Pi 4 while achieving high-fidelity results, and are compatible with any Android or Linux-based mobile device. A detailed description of all models developed in the challenge is provided in this paper.
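To make the "up to 10 FPS at VGA resolution" figure concrete: 10 FPS leaves a budget of 100 ms per frame, and a 640×480 VGA depth map holds 307,200 values, so 10 FPS means roughly 3.07 million depth values per second. The sketch below checks a model's per-frame latency against such a budget. It is not the challenge's official benchmarking procedure; `model_fn` is a hypothetical placeholder for any depth-estimation callable, and the helper names are likewise hypothetical.

```python
import time
from statistics import mean
from typing import Callable, Iterable, List

VGA_WIDTH, VGA_HEIGHT = 640, 480  # VGA resolution mentioned in the abstract


def frame_budget_ms(target_fps: float) -> float:
    """Per-frame latency allowed at a given frame rate (10 FPS -> 100 ms)."""
    return 1000.0 / target_fps


def time_inference(model_fn: Callable, frames: Iterable) -> List[float]:
    """Run model_fn (hypothetical placeholder) on each frame and return
    per-frame wall-clock latency in milliseconds."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        model_fn(frame)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def meets_target(latencies_ms: List[float], target_fps: float) -> bool:
    """True if the mean latency fits inside the per-frame budget."""
    return mean(latencies_ms) <= frame_budget_ms(target_fps)
```

For instance, measured latencies of 95, 102, and 98 ms average about 98.3 ms and pass a 10 FPS target, while a constant 120 ms does not. A real evaluation on the Raspberry Pi 4 would also discard warm-up runs and fix the input resolution to match the deployed model.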