Wansong Liu

A Hybrid Task-Constrained Motion Planning for Collaborative Robots in Intelligent Remanufacturing

Jun 12, 2024

Abstract: Industrial manipulators have extensively collaborated with human operators to execute tasks, e.g., disassembly of end-of-use products, in intelligent remanufacturing. Safe task execution requires real-time path planning for the manipulator's end-effector to autonomously avoid human operators. This is even more challenging when the end-effector needs to follow a planned path while the manipulator body avoids collision with human operators, which is usually computationally expensive and limits real-time application. This paper proposes an efficient hybrid motion planning algorithm that consists of an A$^*$ algorithm and an online manipulator reconfiguration mechanism (OMRM) to tackle such challenges in task and configuration spaces, respectively. The A$^*$ algorithm is first leveraged to plan the shortest collision-free path of the end-effector in task space. When the manipulator body poses a collision risk to the human operator, our OMRM then selects an alternative joint configuration with minimum reconfiguration effort from a database to help the manipulator follow the planned path and avoid the human operator simultaneously. The database of manipulator reconfigurations establishes the relationship between the task and configuration spaces offline using forward kinematics, and is able to provide multiple reconfiguration candidates for a desired end-effector position. The proposed hybrid algorithm plans safe manipulator motion during the whole task execution. Extensive numerical and experimental studies, as well as comparisons between the proposed algorithm and state-of-the-art ones, have been conducted to validate the proposed motion planning algorithm.
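
The task-space planning step described in this abstract can be illustrated with a minimal A$^*$ sketch. It assumes a 2D occupancy grid with unit-cost, 4-connected moves and a Manhattan-distance heuristic. The grid representation and the function name `astar` are illustrative assumptions, not the paper's implementation, and the OMRM step is not modeled.

```python
import heapq


def astar(grid, start, goal):
    """Return the shortest 4-connected path on a grid of 0 (free) / 1 (blocked).

    Hypothetical helper for illustration; cells are (row, col) tuples.
    Returns a list of cells from start to goal, or None if no path exists.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cell[0] + d_row, cell[1] + d_col)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue  # occupied cell, e.g. space taken by the operator
            new_g = g + 1
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


demo_grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
demo_path = astar(demo_grid, (0, 0), (3, 3))
```

In a real planner the grid would be three-dimensional and the blocked cells would be updated online from the tracked human pose; the search itself stays the same.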

Integrating Uncertainty-Aware Human Motion Prediction into Graph-Based Manipulator Motion Planning

May 16, 2024

Abstract: There has been a growing utilization of industrial robots as complementary collaborators for human workers in remanufacturing sites. Such a human-robot collaboration (HRC) aims to assist human workers in improving the flexibility and efficiency of labor-intensive tasks. In this paper, we propose a human-aware motion planning framework for HRC to effectively compute collision-free motions for manipulators when conducting collaborative tasks with humans. We employ a neural human motion prediction model to enable proactive planning for manipulators. In particular, rather than blindly trusting and utilizing predicted human trajectories in the manipulator planning, we quantify uncertainties of the neural prediction model to further ensure human safety. Moreover, we integrate the uncertainty-aware prediction into a graph that captures key workspace elements and illustrates their interconnections. Then a graph neural network is leveraged to operate on the constructed graph. Consequently, robot motion planning considers both the dependencies among all the elements in the workspace and the potential influence of future movements of human workers. We experimentally validate the proposed planning framework using a 6-degree-of-freedom manipulator in a shared workspace where a human is performing disassembly tasks. The results demonstrate the benefits of our approach in terms of improving the smoothness and safety of HRC. A brief video introduction of this work is available in the supplemental materials.

KG-Planner: Knowledge-Informed Graph Neural Planning for Collaborative Manipulators

May 13, 2024

Abstract: This paper presents a novel knowledge-informed graph neural planner (KG-Planner) to address the challenge of efficiently planning collision-free motions for robots in high-dimensional spaces, considering both static and dynamic environments involving humans. Unlike traditional motion planners that struggle with finding a balance between efficiency and optimality, the KG-Planner takes a different approach. Instead of relying solely on a neural network or imitating the motions of an oracle planner, our KG-Planner integrates explicit physical knowledge from the workspace. The integration of knowledge has two key aspects: (1) we present an approach to design a graph that can comprehensively model the workspace's compositional structure. The designed graph explicitly incorporates critical elements such as robot joints, obstacles, and their interconnections. This representation allows us to capture the intricate relationships between these elements. (2) We train a Graph Neural Network (GNN) that excels at generating nearly optimal robot motions. In particular, the GNN employs a layer-wise propagation rule to facilitate the exchange and update of information among workspace elements based on their connections. This propagation emphasizes the influence of these elements throughout the planning process. To validate the efficacy and efficiency of our KG-Planner, we conduct extensive experiments in both static and dynamic environments. These experiments include scenarios with and without human workers. The results of our approach are compared against existing methods, showcasing the superior performance of the KG-Planner. A short video introduction of this work is available (video link provided in the paper).
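
The abstract does not state the exact propagation rule. As a hedged illustration of what a layer-wise rule over workspace elements can look like, the sketch below implements the widely used graph-convolution form, ReLU(D^{-1/2} (A + I) D^{-1/2} H W), in plain Python. The function name `gcn_layer`, the three-node toy graph, and the feature values are assumptions made for this example only.

```python
import math


def gcn_layer(adj, feats, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adj    : n x n adjacency matrix (nested lists of 0/1)
    feats  : n x f node feature matrix H
    weight : f x o weight matrix W
    Illustrative only; this is the standard GCN rule, assumed here because
    the paper's own rule is not given in the abstract.
    """
    n = len(adj)
    # Self-loops let every node keep part of its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization by node degree.
    norm = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]
    n_feat, n_out = len(weight), len(weight[0])
    # Aggregate neighbor features: norm @ feats.
    agg = [[sum(norm[i][k] * feats[k][f] for k in range(n))
            for f in range(n_feat)] for i in range(n)]
    # Linear map followed by ReLU: max(0, agg @ weight).
    return [[max(0.0, sum(agg[i][f] * weight[f][o] for f in range(n_feat)))
             for o in range(n_out)] for i in range(n)]


# Toy workspace graph: node 0 (a joint) is linked to node 1 (an obstacle);
# node 2 is an isolated element.
toy_adj = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
toy_feats = [[1.0], [3.0], [5.0]]
toy_out = gcn_layer(toy_adj, toy_feats, [[1.0]])
```

After one step the two connected nodes share an averaged feature while the isolated node is unchanged, which is the sense in which connections determine how information is exchanged.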

A Recurrent Neural Network Enhanced Unscented Kalman Filter for Human Motion Prediction

Feb 20, 2024

Abstract: This paper presents a deep learning enhanced adaptive unscented Kalman filter (UKF) for predicting human arm motion in the context of manufacturing. Unlike previous network-based methods that rely solely on captured human motion data, which is represented as bone vectors in this paper, we incorporate a human arm dynamic model into the motion prediction algorithm and use the UKF to iteratively forecast human arm motions. Specifically, a Lagrangian-mechanics-based physical model is employed to correlate arm motions with associated muscle forces. Then a Recurrent Neural Network (RNN) is integrated into the framework to predict future muscle forces, which are converted back to future arm motions based on the dynamic model. Given the absence of measurement data for future human motions that can be input into the UKF to update the state, we integrate another RNN to directly predict future human motions and treat the prediction as surrogate measurement data fed into the UKF. A noteworthy aspect of this study is the quantification of uncertainties associated with both the data-driven and physical models in one unified framework. These quantified uncertainties are used to dynamically adapt the measurement and process noises of the UKF over time. This adaptation, driven by the uncertainties of the RNN models, addresses inaccuracies stemming from the data-driven model and mitigates discrepancies between the assumed and true physical models, ultimately enhancing the accuracy and robustness of our predictions. Compared to the traditional RNN-based prediction, our method demonstrates improved accuracy and robustness in extensive experimental validations of various types of human motions.
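
The core operation inside any UKF is the unscented transform, which pushes a small set of sigma points through a nonlinear model and re-estimates the mean and variance from them. The sketch below shows it for a scalar state only. The function name `unscented_transform_1d`, the parameter defaults, and the test function are assumptions for illustration; the arm dynamics, RNN predictions, and noise adaptation described in the abstract are not modeled.

```python
import math


def unscented_transform_1d(mean, var, func, alpha=1.0, beta=2.0, kappa=2.0):
    """Propagate a scalar Gaussian (mean, var) through func via sigma points.

    Hypothetical helper using the standard scaled unscented transform
    with state dimension n = 1.
    """
    n = 1
    lam = alpha ** 2 * (n + kappa) - n
    spread = math.sqrt((n + lam) * var)
    # Three sigma points: the mean and one point on each side of it.
    sigma_points = [mean, mean + spread, mean - spread]
    # Weights for the mean estimate and for the variance estimate.
    w_mean = [lam / (n + lam)] + [1.0 / (2.0 * (n + lam))] * 2
    w_cov = [lam / (n + lam) + (1.0 - alpha ** 2 + beta)] + w_mean[1:]
    # Push each sigma point through the (possibly nonlinear) model.
    mapped = [func(p) for p in sigma_points]
    new_mean = sum(w * y for w, y in zip(w_mean, mapped))
    new_var = sum(w * (y - new_mean) ** 2 for w, y in zip(w_cov, mapped))
    return new_mean, new_var


# For a linear map y = 2x + 1 the result should match the exact answer:
# mean 2*3 + 1 = 7 and variance 2^2 * 4 = 16.
ut_mean, ut_var = unscented_transform_1d(3.0, 4.0, lambda x: 2.0 * x + 1.0)
```

In a full filter the same transform is applied to a state vector using a matrix square root of the covariance, and the process and measurement noise terms are added to the propagated covariances; those noise terms are the quantities the abstract describes adapting over time.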

DE-TGN: Uncertainty-Aware Human Motion Forecasting using Deep Ensembles

Jul 07, 2023

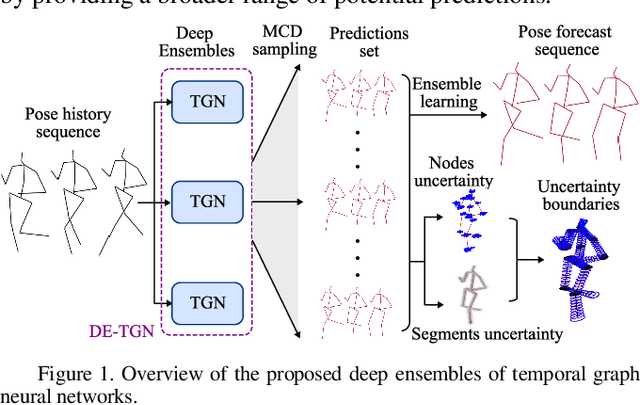

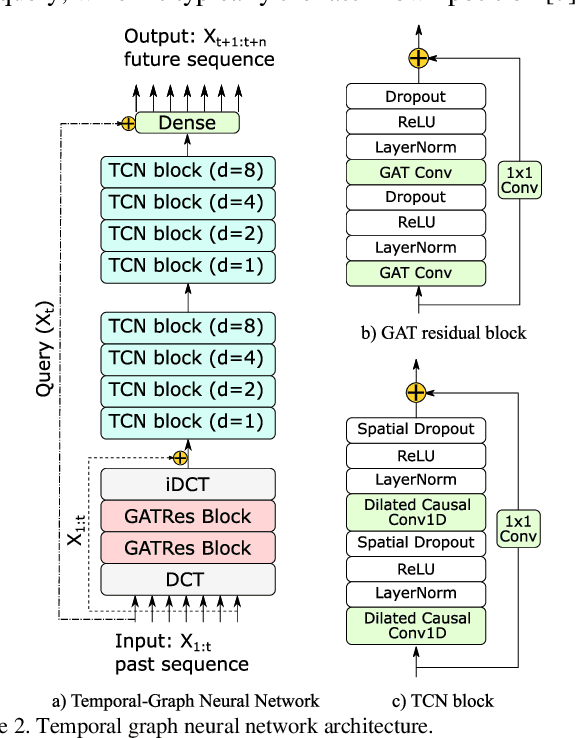

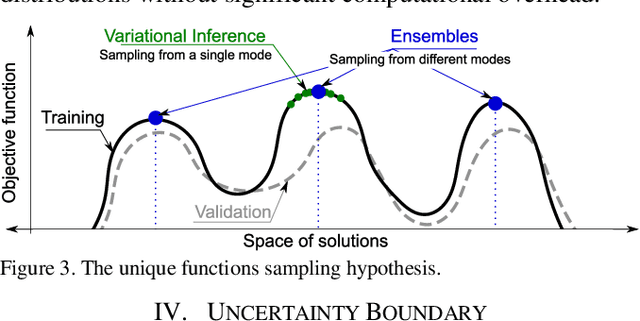

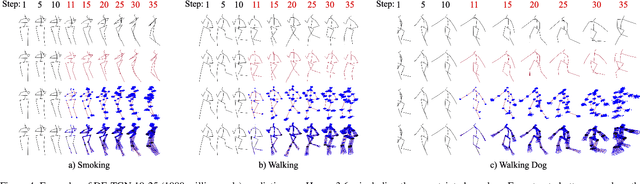

Abstract: Ensuring the safety of human workers in a collaborative environment with robots is of utmost importance. Although accurate pose prediction models can help prevent collisions between human workers and robots, they are still susceptible to critical errors. In this study, we propose a novel approach called deep ensembles of temporal graph neural networks (DE-TGN) that not only accurately forecasts human motion but also provides a measure of prediction uncertainty. By leveraging deep ensembles and employing stochastic Monte-Carlo dropout sampling, we construct a volumetric field representing a range of potential future human poses based on covariance ellipsoids. To validate our framework, we conducted experiments using three motion capture datasets, including Human3.6M, and two human-robot interaction scenarios, achieving state-of-the-art prediction error. Moreover, we discovered that deep ensembles not only enable us to quantify uncertainty but also improve the accuracy of our predictions.
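
As a rough illustration of how a set of ensemble or dropout predictions can be turned into an uncertainty estimate, the sketch below computes the sample mean and unbiased sample covariance of several predicted 3D positions for one joint. The function name `mean_and_covariance` and the sample values are assumptions for this example, not data or code from the paper.

```python
def mean_and_covariance(samples):
    """Return the sample mean and unbiased covariance of d-dimensional points.

    samples : list of equal-length tuples, e.g. one predicted joint position
              per ensemble member (hypothetical input format).
    """
    count = len(samples)
    dim = len(samples[0])
    mean = [sum(s[i] for s in samples) / count for i in range(dim)]
    cov = [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples)
            / (count - 1)
            for j in range(dim)] for i in range(dim)]
    return mean, cov


# Four hypothetical predictions of the same joint at one future time step.
joint_samples = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0),
                 (0.0, 2.0, 0.0), (2.0, 2.0, 0.0)]
joint_mean, joint_cov = mean_and_covariance(joint_samples)
```

A covariance ellipsoid for the joint would then follow from the eigen-decomposition of the covariance matrix, with eigenvectors giving the axis directions and scaled square roots of the eigenvalues giving the axis lengths; that step is omitted here.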

Vehicle-Human Interactive Behaviors in Emergency: Data Extraction from Traffic Accident Videos

Mar 02, 2020

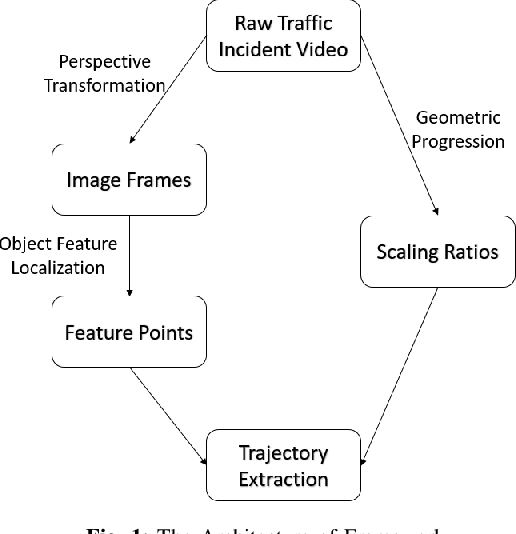

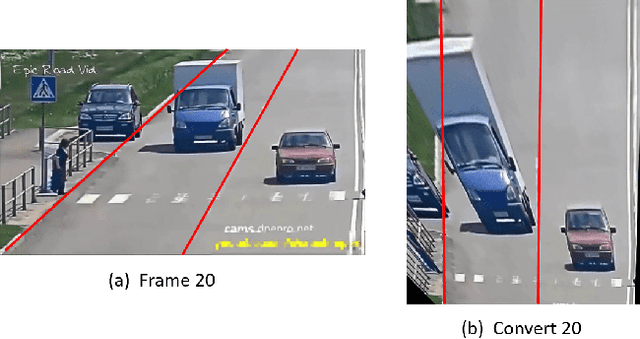

Abstract: Currently, studying vehicle-human interactive behavior in emergencies requires large datasets from actual emergency situations, which are almost unavailable. Existing public data sources on autonomous vehicles (AVs) mainly focus either on normal driving scenarios or on emergency situations without human involvement. To fill this gap and facilitate related research, this paper provides a new yet convenient way to extract interactive behavior data (i.e., the trajectories of vehicles and humans) from actual accident videos that were captured by both surveillance cameras and driving recorders. The main challenge for data extraction from real-time accident video lies in the fact that the recording cameras are uncalibrated and the angles of surveillance are unknown. The approach proposed in this paper employs image processing to obtain a new perspective that differs from the original video's perspective. Meanwhile, we manually detect and mark object feature points in each image frame. In order to acquire a gradient of reference ratios, a geometric model is implemented in the analysis of reference pixel values, and the feature points are then scaled to the object trajectory based on the gradient of ratios. The generated trajectories not only restore the object movements completely but also reflect changes in vehicle velocity and rotation based on the feature point distributions.
