DE-TGN: Uncertainty-Aware Human Motion Forecasting using Deep Ensembles

Jul 07, 2023

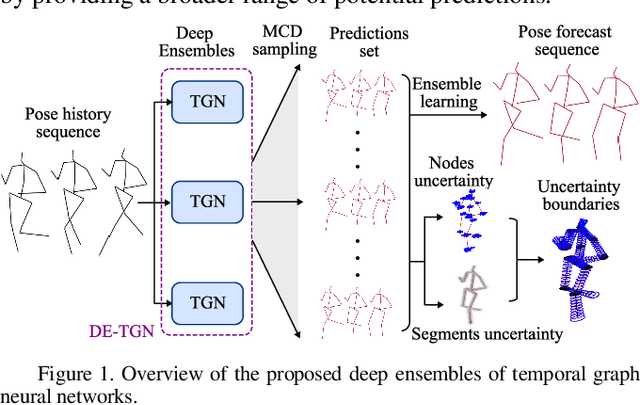

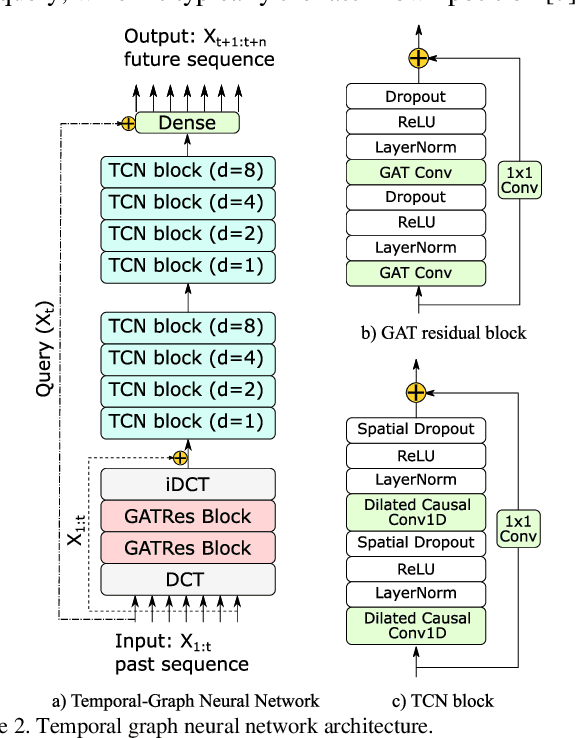

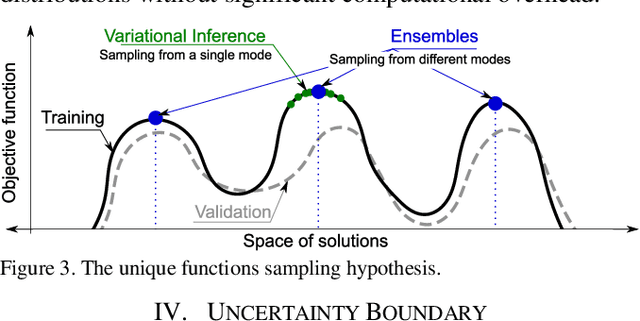

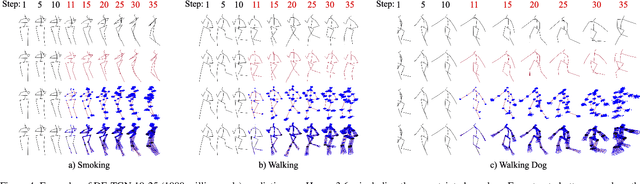

Ensuring the safety of human workers in environments shared with robots is of utmost importance. Although accurate pose prediction models can help prevent collisions between human workers and robots, they remain susceptible to critical errors. In this study, we propose a novel approach called deep ensembles of temporal graph neural networks (DE-TGN) that not only forecasts human motion accurately but also provides a measure of prediction uncertainty. By combining deep ensembles with stochastic Monte-Carlo dropout sampling, we construct a volumetric field, based on covariance ellipsoids, that represents the range of potential future human poses. To validate our framework, we conducted experiments on three motion capture datasets, including Human3.6M, and on two human-robot interaction scenarios, achieving state-of-the-art prediction error. Moreover, we found that deep ensembles not only enable us to quantify uncertainty but also improve the accuracy of our predictions.
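To make the idea of a covariance ellipsoid concrete, the following is a minimal sketch of how one might be computed for a single joint from a set of sampled predictions (for example, one sample per ensemble member or per dropout forward pass). It is an illustration under stated assumptions, not the paper's implementation: the function names `covariance_ellipsoid` and `inside_ellipsoid`, the scaling factor `n_std`, and the synthetic samples are all hypothetical.

```python
import numpy as np

def covariance_ellipsoid(samples, n_std=2.0):
    """Fit an ellipsoid to sampled 3D positions of one joint.

    samples: array of shape (S, 3), one predicted position per sample.
    n_std:   assumed scaling of the semi-axes in standard deviations.
    Returns the mean, the semi-axis lengths, and the axis directions
    (columns of the returned 3x3 matrix).
    """
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean(axis=0)
    cov = np.cov(samples, rowvar=False)      # 3x3 sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)   # symmetric eigendecomposition
    semi_axes = n_std * np.sqrt(np.clip(eigvals, 0.0, None))
    return mean, semi_axes, eigvecs

def inside_ellipsoid(point, mean, semi_axes, eigvecs, eps=1e-9):
    """Check whether a 3D point lies inside the fitted ellipsoid."""
    # Express the point in the ellipsoid's principal-axis frame.
    local = eigvecs.T @ (np.asarray(point, dtype=float) - mean)
    return bool(np.sum((local / (semi_axes + eps)) ** 2) <= 1.0)

# Synthetic stand-in for sampled predictions of one joint (hypothetical data).
rng = np.random.default_rng(0)
samples = rng.normal(loc=[0.5, 1.2, 0.9], scale=[0.02, 0.05, 0.01], size=(50, 3))

mean, semi_axes, eigvecs = covariance_ellipsoid(samples)
print("mean position:", mean)
print("semi-axis lengths:", semi_axes)
```

Repeating this per joint and per forecast time step would give a set of ellipsoids that together cover the region the sampled poses occupy; a planner could then use a test such as `inside_ellipsoid` to check whether a robot waypoint falls within that region.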
