Wanli Xing

EROAM: Event-based Camera Rotational Odometry and Mapping in Real-time

Nov 17, 2024

Abstract:This paper presents EROAM, a novel event-based rotational odometry and mapping system that achieves real-time, accurate camera rotation estimation. Unlike existing approaches that rely on event generation models or contrast maximization, EROAM employs a spherical event representation by projecting events onto a unit sphere and introduces Event Spherical Iterative Closest Point (ES-ICP), a novel geometric optimization framework designed specifically for event camera data. The spherical representation simplifies rotational motion formulation while enabling continuous mapping for enhanced spatial resolution. Combined with parallel point-to-line optimization, EROAM achieves efficient computation without compromising accuracy. Extensive experiments on both synthetic and real-world datasets show that EROAM significantly outperforms state-of-the-art methods in terms of accuracy, robustness, and computational efficiency. Our method maintains consistent performance under challenging conditions, including high angular velocities and extended sequences, where other methods often fail or show significant drift. Additionally, EROAM produces high-quality panoramic reconstructions with preserved fine structural details.
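The spherical event representation mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an ideal pinhole camera without lens distortion, and the function name `events_to_sphere` and the intrinsic matrix `K` are hypothetical values chosen for illustration. Each event pixel is back-projected to a viewing ray, which is then normalized to unit length.

```python
import numpy as np

def events_to_sphere(xy, K):
    """Back-project event pixel coordinates onto the unit sphere.

    Assumes an undistorted pinhole model; K is a 3x3 intrinsic matrix.
    """
    xy = np.asarray(xy, dtype=float)
    ones = np.ones((xy.shape[0], 1))
    pixels_h = np.hstack([xy, ones])       # homogeneous pixels, shape (N, 3)
    rays = pixels_h @ np.linalg.inv(K).T   # viewing rays in the camera frame
    # Normalizing each ray places the event on the unit sphere.
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)

# Hypothetical intrinsics: focal length 200 px, principal point (120, 90).
K = np.array([[200.0, 0.0, 120.0],
              [0.0, 200.0, 90.0],
              [0.0, 0.0, 1.0]])
points = events_to_sphere([[120.0, 90.0], [320.0, 90.0]], K)
```

An event at the principal point maps to the optical axis `(0, 0, 1)`. Under a pure rotation `R`, every point on the sphere moves to `R @ p`, which is one reason a spherical representation suits rotation-only motion.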

Retrieval-augmented Generation to Improve Math Question-Answering: Trade-offs Between Groundedness and Human Preference

Oct 04, 2023

Abstract:For middle-school math students, interactive question-answering (QA) with tutors is an effective way to learn. The flexibility and emergent capabilities of generative large language models (LLMs) have led to a surge of interest in automating portions of the tutoring process - including interactive QA to support conceptual discussion of mathematical concepts. However, LLM responses to math questions can be incorrect or mismatched to the educational context - such as being misaligned with a school's curriculum. One potential solution is retrieval-augmented generation (RAG), which involves incorporating a vetted external knowledge source in the LLM prompt to increase response quality. In this paper, we designed prompts that retrieve and use content from a high-quality open-source math textbook to generate responses to real student questions. We evaluate the efficacy of this RAG system for middle-school algebra and geometry QA by administering a multi-condition survey, finding that humans prefer responses generated using RAG, but not when responses are too grounded in the textbook content. We argue that while RAG is able to improve response quality, designers of math QA systems must consider trade-offs between generating responses preferred by students and responses closely matched to specific educational resources.
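The general RAG pattern described in the abstract, retrieving vetted text and placing it in the LLM prompt, can be sketched as follows. This is an illustrative assumption rather than the paper's system: the word-overlap retriever, the prompt wording, the sample passages, and the names `tokenize`, `retrieve`, and `build_prompt` are all hypothetical, and no LLM is called.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages):
    """Return the passage sharing the most tokens with the question."""
    q = tokenize(question)
    return max(passages, key=lambda p: len(q & tokenize(p)))

def build_prompt(question, passages):
    """Insert the retrieved passage into a prompt for a language model."""
    context = retrieve(question, passages)
    return (
        "Use the textbook excerpt to answer the student's question.\n"
        f"Excerpt: {context}\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Hypothetical stand-ins for textbook content.
passages = [
    "The slope of a line measures its steepness: rise divided by run.",
    "The area of a triangle is one half of the base times the height.",
]
prompt = build_prompt("How do I find the area of a triangle?", passages)
```

How strongly the prompt instructs the model to stay with the excerpt is one way to vary groundedness, which is the trade-off the abstract highlights.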

Simultaneous Synchronization and Calibration for Wide-baseline Stereo Event Cameras

Sep 29, 2023

Abstract:Event-based cameras are increasingly utilized in various applications, owing to their high temporal resolution and low power consumption. However, a fundamental challenge arises when deploying multiple such cameras: they operate on independent time systems, leading to temporal misalignment. This misalignment can significantly degrade performance in downstream applications. Traditional solutions, which often rely on hardware-based synchronization, face limitations in compatibility and are impractical for long-distance setups. To address these challenges, we propose a novel algorithm that exploits the motion of objects in the shared field of view to achieve millisecond-level synchronization among multiple event-based cameras. Our method also concurrently estimates extrinsic parameters. We validate our approach in both simulated and real-world indoor/outdoor scenarios, demonstrating successful synchronization and accurate extrinsic parameter estimation.

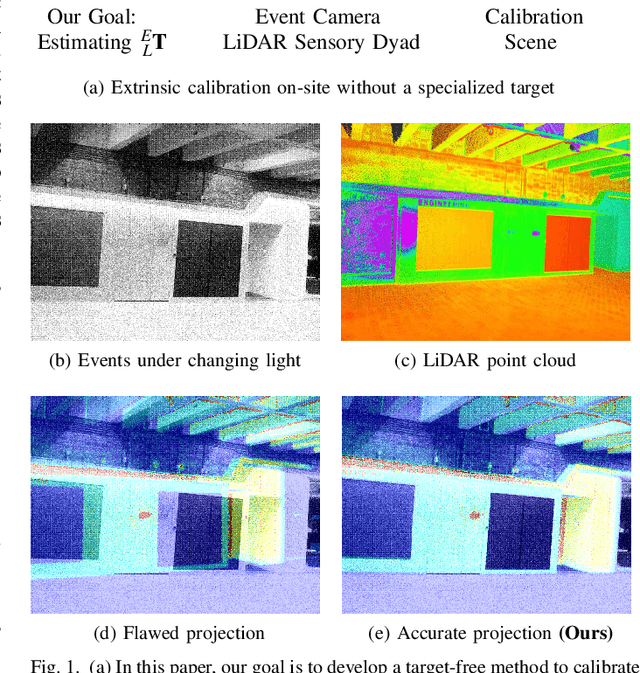

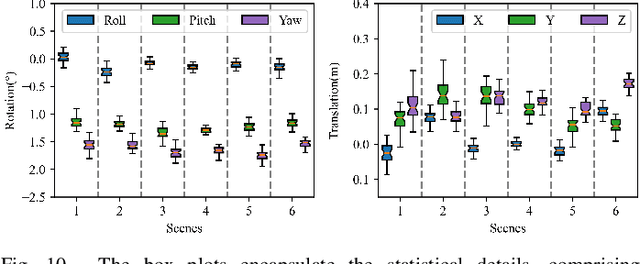

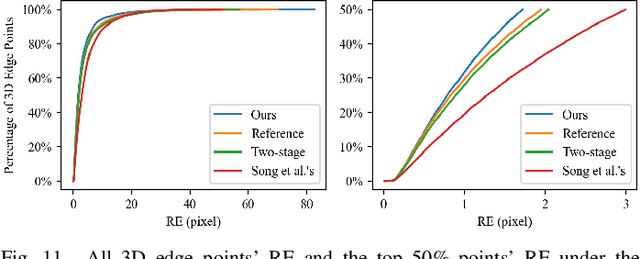

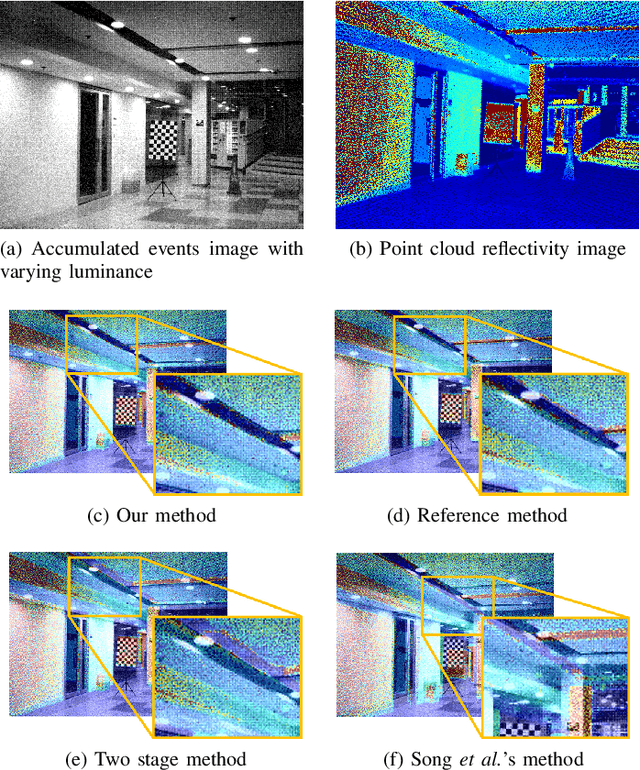

Target-free Extrinsic Calibration of Event-LiDAR Dyad using Edge Correspondences

May 06, 2023

Abstract:Calibrating the extrinsic parameters of sensory devices is crucial for fusing multi-modal data. Recently, event cameras have emerged as a promising type of neuromorphic sensor, with many potential applications in fields such as mobile robotics and autonomous driving. When combined with LiDAR, they can provide more comprehensive information about the surrounding environment. Nonetheless, due to the distinctive representation of event cameras compared to traditional frame-based cameras, calibrating them with LiDAR presents a significant challenge. In this paper, we propose a novel method to calibrate the extrinsic parameters between a dyad of an event camera and a LiDAR without the need for a calibration board or other equipment. Our approach takes advantage of the fact that when an event camera is in motion, changes in reflectivity and geometric edges in the environment trigger numerous events, which can also be captured by LiDAR. Our proposed method leverages the edges extracted from events and point clouds and correlates them to estimate extrinsic parameters. Experimental results demonstrate that our proposed method is highly robust and effective in various scenes.
