Waad Subber

Data-based Discovery of Governing Equations

Dec 21, 2020

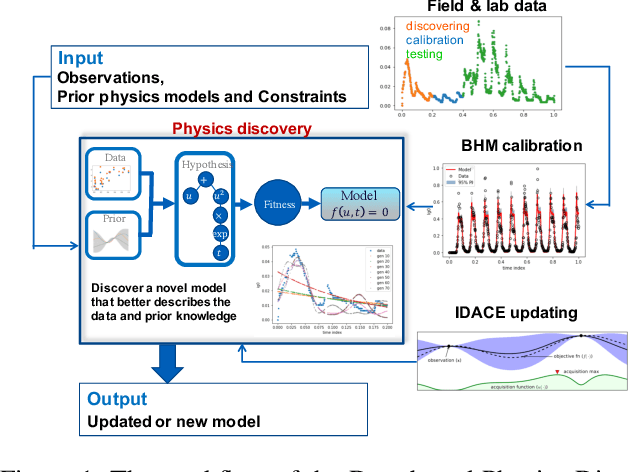

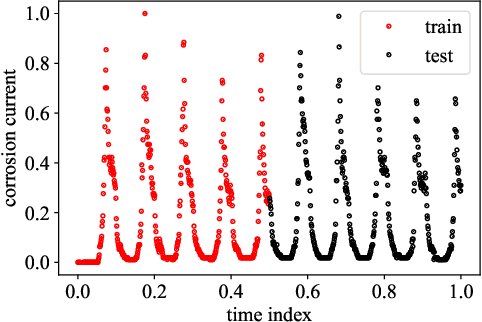

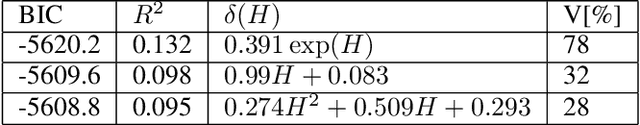

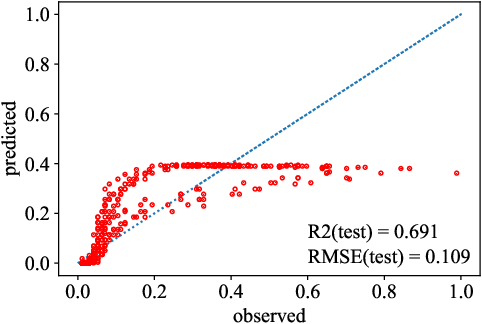

Abstract: Most common mechanistic models are traditionally presented in mathematical forms to explain a given physical phenomenon. Machine learning algorithms, on the other hand, provide a mechanism to map the input data to output without explicitly describing the underlying physical process that generated the data. We propose a Data-based Physics Discovery (DPD) framework for automatic discovery of governing equations from observed data. Without a prior definition of the model structure, a free-form equation is first discovered and then calibrated and validated against the available data. In addition to the observed data, the DPD framework can utilize available prior physical models and domain expert feedback. When prior models are available, the DPD framework can discover an additive or multiplicative correction term represented symbolically. The correction term can be a function of the existing input variables of the prior model or of a newly introduced variable. When a prior model is not available, the DPD framework discovers a new data-based standalone model governing the observations. We demonstrate the performance of the proposed framework on a real-world application in the aerospace industry.
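As a rough illustration of the additive-correction idea only, the sketch below picks a single symbolic correction term by least squares on the residual of a prior model. It is not the DPD framework itself: the prior model `2*x`, the synthetic data, and the small candidate library are all assumptions made for this example, and a simple library search stands in for the free-form discovery the abstract describes.

```python
import numpy as np

# Hypothetical setup: a prior model y = 2*x and observations that also
# contain an unmodeled quadratic effect. All names here are illustrative.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0, 50)
y_obs = 2.0 * x + 0.5 * x**2 + rng.normal(0.0, 0.01, x.size)

def prior_model(x):
    return 2.0 * x

# Candidate symbolic terms for the additive correction (assumed library).
candidates = {"x": x, "x**2": x**2, "sin(x)": np.sin(x), "exp(x)": np.exp(x)}

# Fit each single-term correction c * term(x) to the prior-model residual
# and keep the term with the smallest sum of squared errors.
residual = y_obs - prior_model(x)
best_name, best_coef, best_sse = None, 0.0, np.inf
for name, term in candidates.items():
    coef = float(term @ residual / (term @ term))  # one-parameter least squares
    sse = float(np.sum((residual - coef * term) ** 2))
    if sse < best_sse:
        best_name, best_coef, best_sse = name, coef, sse

print(f"corrected model: prior(x) + {best_coef:.3f} * {best_name}")
```

A multiplicative correction could be handled the same way by fitting the ratio `y_obs / prior_model(x)` instead of the difference, provided the prior model is nonzero on the data.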

Data-Informed Decomposition for Localized Uncertainty Quantification of Dynamical Systems

Aug 14, 2020

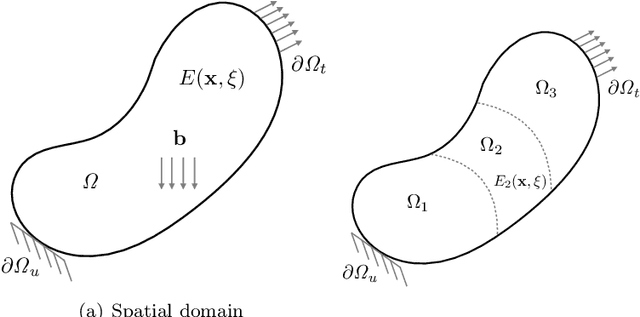

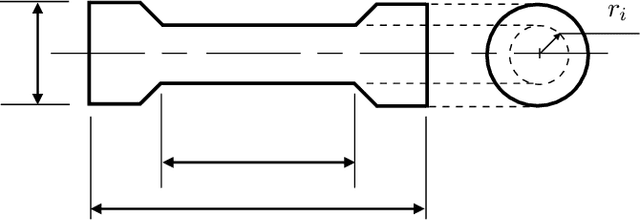

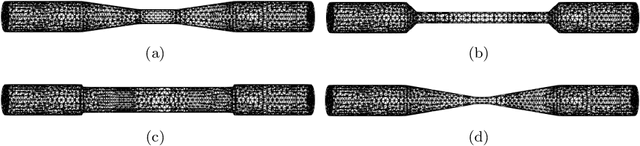

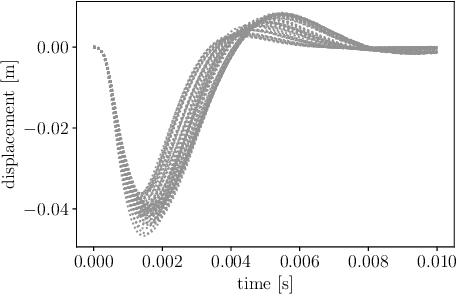

Abstract: Industrial dynamical systems often exhibit multi-scale response due to material heterogeneities, operating conditions and complex environmental loadings. In such problems, the smallest length-scale of the system's dynamics controls the numerical resolution required to effectively resolve the embedded physics. In practice, however, high numerical resolution is only required in a confined region of the system where fast dynamics or localized material variability are exhibited, whereas a coarser discretization can be sufficient in the rest of the system. Consequently, a unified computational scheme with uniform spatio-temporal resolution for uncertainty quantification can be very computationally demanding. Partitioning the complex dynamical system into smaller easier-to-solve problems based on the localized dynamics and material variability can reduce the overall computational cost. However, identifying the region of interest for high-resolution and intensive uncertainty quantification can be problem dependent. The region of interest can be specified based on the localization features of the solution, user interest, and correlation length of the random material properties. For problems where a region of interest is not evident, Bayesian inference can provide a feasible solution. In this work, we employ a Bayesian framework to update our prior knowledge on the localized region of interest using measurements and system response. To address the computational cost of the Bayesian inference, we construct a Gaussian process surrogate for the forward model. Once the localized region of interest is identified, we use polynomial chaos expansion to propagate the localization uncertainty. We demonstrate our framework through numerical experiments on a three-dimensional elastodynamic problem.
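To make the polynomial chaos step concrete, here is a minimal one-dimensional sketch, assuming a single standard normal input and a toy response `exp(0.3 * xi)`. Both are placeholders chosen for this example and have nothing to do with the paper's elastodynamic model. The expansion coefficients are computed by Gauss-Hermite quadrature, and the mean and variance follow directly from them.

```python
import numpy as np
from math import factorial
from numpy.polynomial.hermite_e import hermegauss, hermeval

def forward_model(xi):
    # Hypothetical response depending on one standard normal input xi.
    return np.exp(0.3 * xi)

order = 6                                   # truncation order of the expansion
nodes, weights = hermegauss(20)             # quadrature for weight exp(-x^2 / 2)
weights = weights / np.sqrt(2.0 * np.pi)    # normalize to an N(0, 1) expectation

f_vals = forward_model(nodes)
coeffs = np.empty(order + 1)
for n in range(order + 1):
    basis = np.zeros(n + 1)
    basis[n] = 1.0
    he_n = hermeval(nodes, basis)           # probabilists' Hermite polynomial He_n
    # Projection: c_n = E[f(xi) He_n(xi)] / E[He_n(xi)^2], with E[He_n^2] = n!
    coeffs[n] = np.sum(weights * f_vals * he_n) / factorial(n)

# Moments follow from orthogonality of the basis.
pce_mean = coeffs[0]
pce_var = sum(coeffs[n] ** 2 * factorial(n) for n in range(1, order + 1))
print(pce_mean, pce_var)
```

For this toy response the exact moments are known in closed form (mean `exp(0.045)`, variance `exp(0.09) * (exp(0.09) - 1)`), which gives a quick sanity check on the truncation order.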

Advances in Bayesian Probabilistic Modeling for Industrial Applications

Mar 26, 2020

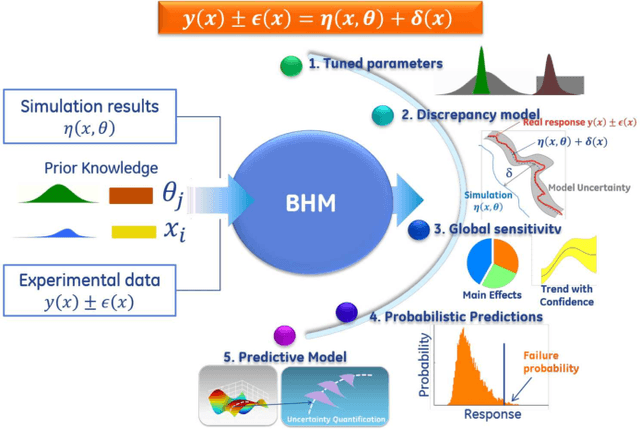

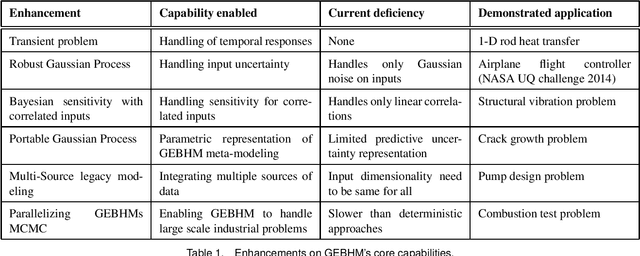

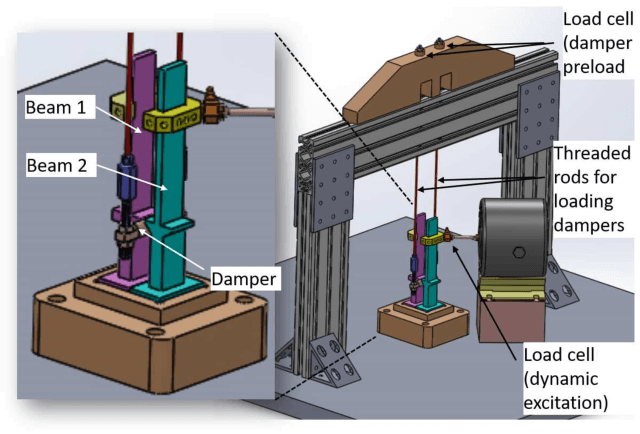

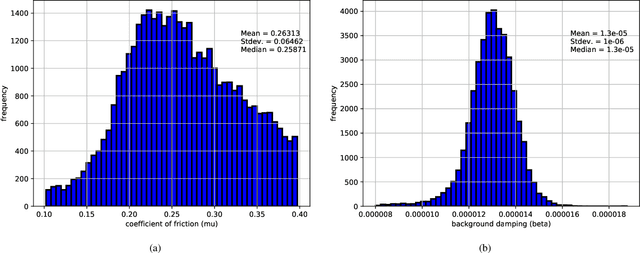

Abstract: Industrial applications frequently pose a notorious challenge for state-of-the-art methods in the contexts of optimization, design of experiments, and modeling of unknown physical responses. This problem is aggravated by the limited availability of clean data, uncertainty in available physics-based models, and the additional logistic and computational expense associated with experiments. In such a scenario, Bayesian methods have played an impactful role in alleviating these obstacles by quantifying uncertainty of different types under limited resources. These methods, usually deployed as a framework, allow decision makers to make informed choices under uncertainty while incorporating information on the fly, usually in the form of data from multiple sources, and remaining consistent with the physical intuition about the problem. This is a major advantage that Bayesian methods bring, especially in the industrial context. This paper is a compendium of the Bayesian modeling methodology that is being consistently developed at GE Research. The methodology, called GE's Bayesian Hybrid Modeling (GEBHM), is a probabilistic modeling method, based on the Kennedy and O'Hagan framework, that has been continuously scaled up and industrialized over several years. In this work, we explain the various advancements in GEBHM's methods and demonstrate their impact on several challenging industrial problems.

Data-driven discovery of free-form governing differential equations

Nov 11, 2019

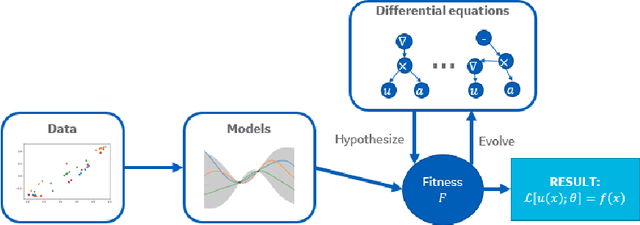

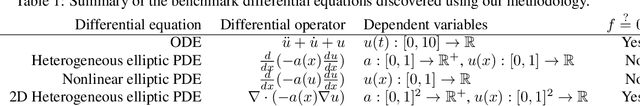

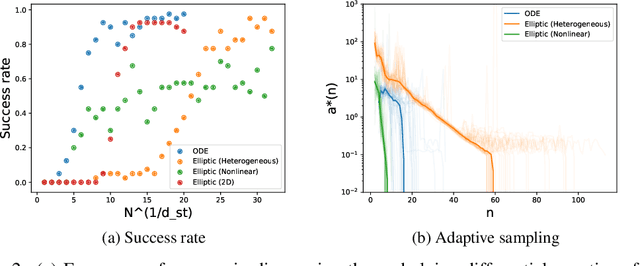

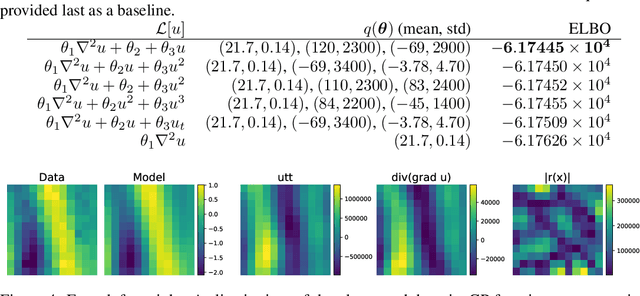

Abstract: We present a method of discovering governing differential equations from data without the need to specify a priori the terms to appear in the equation. The input to our method is a dataset (or ensemble of datasets) corresponding to a particular solution (or ensemble of particular solutions) of a differential equation. The output is a human-readable differential equation with parameters calibrated to the individual particular solutions provided. The key to our method is to learn differentiable models of the data that subsequently serve as inputs to a genetic programming algorithm in which graphs specify computation over arbitrary compositions of functions, parameters, and (potentially differential) operators on functions. Differential operators are composed and evaluated using recursive application of automatic differentiation, allowing our algorithm to explore arbitrary compositions of operators without the need for human intervention. We also demonstrate an active learning process to identify and remedy deficiencies in the proposed governing equations.
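The two stages described above (fit a differentiable model of the data, then score and calibrate candidate equations against it) can be sketched in a heavily simplified form. In the example below, a polynomial fit stands in for the learned differentiable model, its analytic derivative stands in for automatic differentiation, and a fixed list of three candidate right-hand sides stands in for the genetic programming search. The data `exp(-2 t)` and all names are assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Hypothetical data: samples of one particular solution of du/dt = -2 u.
t = np.linspace(0.0, 1.0, 100)
u_data = np.exp(-2.0 * t)

# Stage 1: a differentiable model of the data (polynomial stand-in).
u_model = Polynomial.fit(t, u_data, deg=8)
du_model = u_model.deriv()          # exact derivative of the fitted model

u, du = u_model(t), du_model(t)

# Stage 2: candidate equations du/dt = k * rhs, each calibrated by
# one-parameter least squares and scored by its squared residual.
candidates = {"k * u": u, "k * t": t, "k": np.ones_like(t)}
results = {}
for name, rhs in candidates.items():
    k = float(rhs @ du / (rhs @ rhs))
    results[name] = (k, float(np.sum((du - k * rhs) ** 2)))

best = min(results, key=lambda name: results[name][1])
best_k = results[best][0]
print(f"du/dt = {best}  with k = {best_k:.4f}")
```

Higher-order operators could be obtained in the same spirit by calling `deriv()` repeatedly on the fitted model, which loosely mirrors the recursive composition of differential operators mentioned in the abstract.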

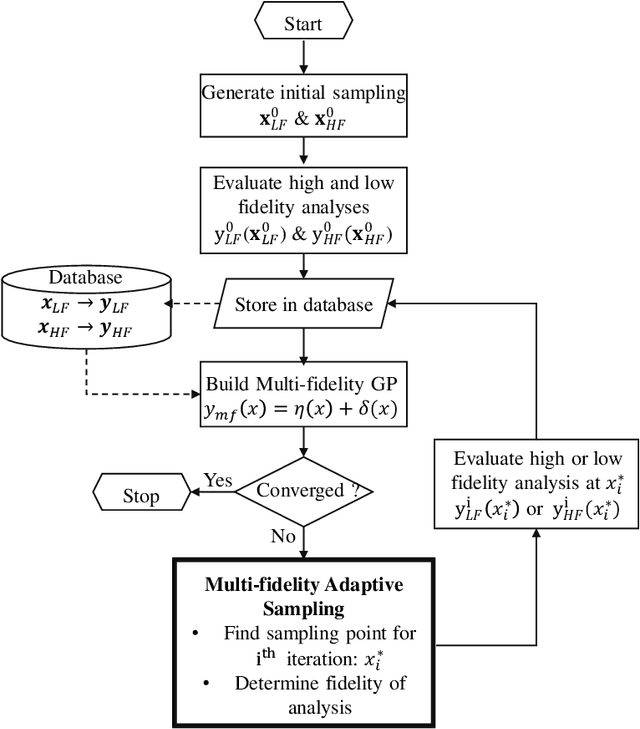

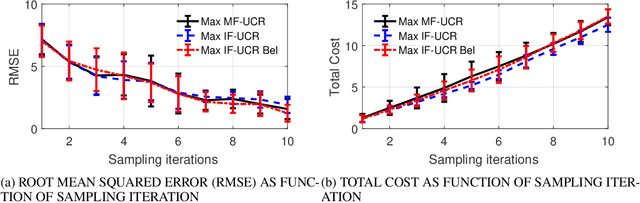

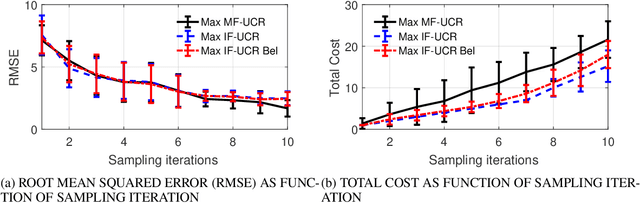

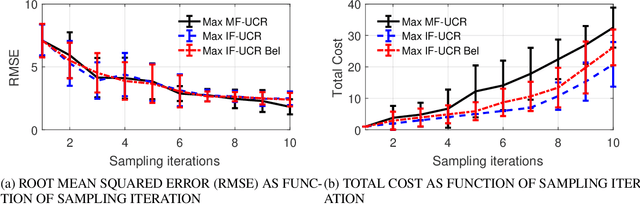

A Strategy for Adaptive Sampling of Multi-fidelity Gaussian Process to Reduce Predictive Uncertainty

Jul 26, 2019

Abstract: Multi-fidelity Gaussian processes are a common approach to addressing computationally demanding tasks such as optimization, calibration and uncertainty quantification. Adaptive sampling for a multi-fidelity Gaussian process is a challenging task because we seek to estimate not only the next sampling location of the design variable, but also the level of the simulator fidelity. This issue is often addressed by including the cost of the simulator as another factor in the search criterion, in conjunction with the uncertainty reduction metric. In this work, we extend the traditional design of experiments framework for the multi-fidelity Gaussian process by partitioning the prediction uncertainty based on the fidelity level and the associated cost of execution. In addition, we utilize the concept of Believer, which quantifies the effect of adding an exploratory design point on the Gaussian process uncertainty prediction. We demonstrate our framework using academic examples as well as an industrial application to the steady-state thermodynamic operating point of a fluidized bed process.
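The idea of scoring an exploratory design point by its effect on predictive uncertainty can be illustrated with a single-fidelity toy example. The sketch below is not the paper's multi-fidelity criterion: it assumes a unit-variance RBF kernel with fixed hyperparameters, ignores simulator cost, and uses made-up training locations. Under those assumptions the posterior variance depends only on the input locations, so the response value assigned to the tentative point does not affect the score.

```python
import numpy as np

def rbf(a, b, length=0.2):
    # Squared-exponential kernel with unit prior variance (assumed).
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def posterior_var(x_train, x_test, noise=1e-6):
    # GP posterior variance at x_test given training inputs only.
    K = rbf(x_train, x_train) + noise * np.eye(x_train.size)
    Ks = rbf(x_train, x_test)
    v = np.linalg.solve(K, Ks)
    return 1.0 - np.sum(Ks * v, axis=0)

x_train = np.array([0.0, 0.3, 1.0])        # hypothetical existing design
x_test = np.linspace(0.0, 1.0, 101)        # where uncertainty is measured
candidates = np.linspace(0.05, 0.95, 19)   # exploratory points to score

# Average predictive variance before adding any point.
base = posterior_var(x_train, x_test).mean()

# Reduction in average variance if each candidate were tentatively added.
reduction = np.array([
    base - posterior_var(np.append(x_train, xc), x_test).mean()
    for xc in candidates
])
x_next = candidates[np.argmax(reduction)]
print(f"next sample at x = {x_next:.2f}")
```

A cost-aware variant might divide each reduction by an assumed per-fidelity execution cost before taking the maximum, which is one simple way to trade uncertainty reduction against simulator expense.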
