Vivek Roy

Learnable Spatio-Temporal Map Embeddings for Deep Inertial Localization

Nov 14, 2022

Abstract: Indoor localization systems often fuse inertial odometry with map information via hand-defined methods to reduce odometry drift, but such methods are sensitive to noise and struggle to generalize across odometry sources. To address the robustness problem in map utilization, we propose a data-driven prior on possible user locations in a map by combining learned spatial map embeddings and temporal odometry embeddings. Our prior learns to encode which map regions are feasible locations for a user more accurately than previous hand-defined methods. This prior leads to a 49% improvement in inertial-only localization accuracy when used in a particle filter. This result is significant, as it shows that our relative positioning method can match the performance of absolute positioning using Bluetooth beacons. To demonstrate the generalizability of our method, we also show similar improvements using wheel encoder odometry.
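As a rough illustration of how a location prior can be used inside a particle filter, the sketch below multiplies each particle's weight by the prior value of the map cell it falls in, then resamples. This is a minimal sketch under stated assumptions, not the paper's implementation: `prior_grid` is a hypothetical hand-filled grid standing in for the learned prior, and the function names and `cell_size` parameter are invented for illustration.

```python
import random

def reweight_with_map_prior(particles, weights, prior_grid, cell_size=1.0):
    """Scale each particle weight by the prior of its map cell, then normalize.

    particles:  list of (x, y) positions in meters.
    weights:    list of non-negative particle weights.
    prior_grid: 2D list indexed [row][col] with values in [0, 1].
                Hypothetical stand-in for a learned prior.
    """
    rows, cols = len(prior_grid), len(prior_grid[0])
    new_weights = []
    for (x, y), w in zip(particles, weights):
        col, row = int(x // cell_size), int(y // cell_size)
        inside = 0 <= row < rows and 0 <= col < cols
        # Particles outside the map are treated as infeasible.
        prior = prior_grid[row][col] if inside else 0.0
        new_weights.append(w * prior)
    total = sum(new_weights)
    if total == 0.0:
        # Every particle is infeasible: keep the old weights (assumed fallback).
        return list(weights)
    return [w / total for w in new_weights]

def resample(particles, weights, rng):
    """Draw a new particle set with probability proportional to weight."""
    return rng.choices(particles, weights=weights, k=len(particles))

# Toy 2x2 map: the cell at row 0, col 1 is a wall (prior 0).
prior_grid = [[1.0, 0.0],
              [0.5, 1.0]]
particles = [(0.5, 0.5), (1.5, 0.5), (0.5, 1.5)]
weights = reweight_with_map_prior(particles, [1.0, 1.0, 1.0], prior_grid)
survivors = resample(particles, weights, random.Random(0))
```

In this toy run the particle inside the wall cell receives zero weight, so it cannot survive resampling, which is the sense in which a map prior limits odometry drift.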

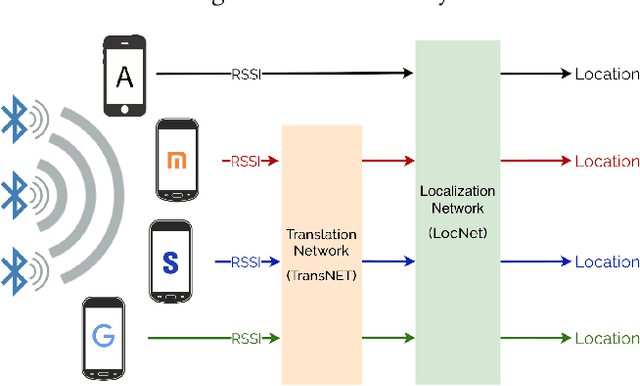

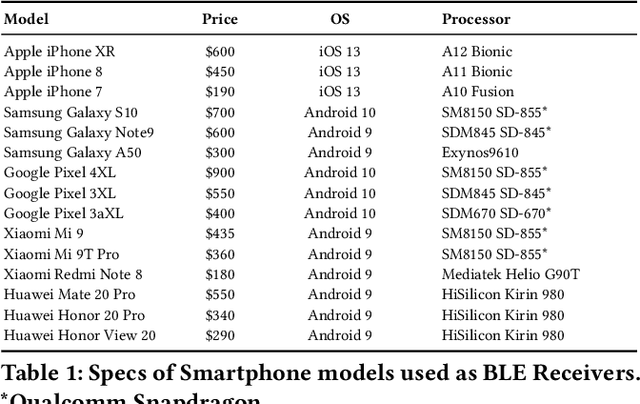

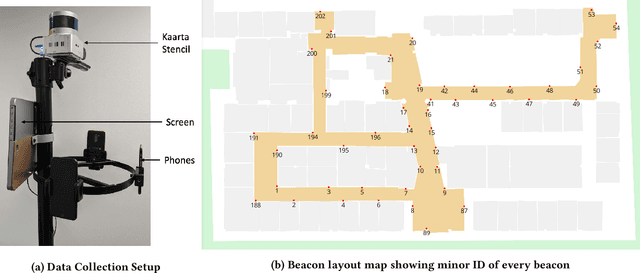

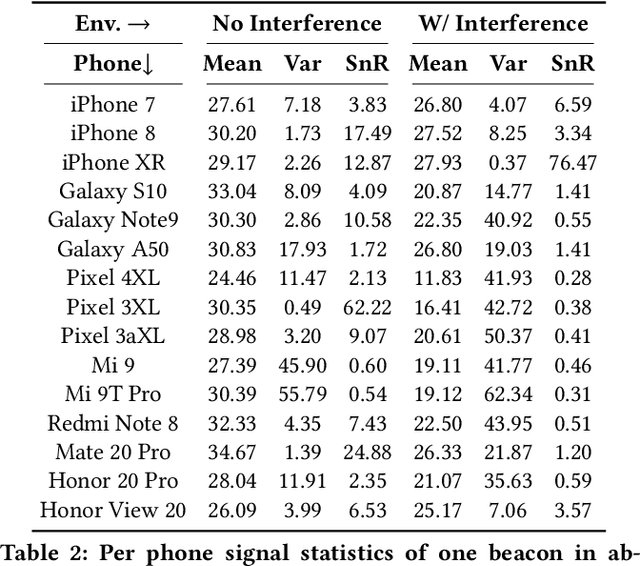

DeepBLE: Generalizing RSSI-based Localization Across Different Devices

Feb 27, 2021

Abstract: Accurate smartphone localization (< 1-meter error) for indoor navigation using only RSSI received from a set of BLE beacons remains a challenging problem, due to the inherent noise of RSSI measurements. To overcome the large variance in RSSI measurements, we propose a data-driven approach that uses a deep recurrent network, DeepBLE, to localize the smartphone using RSSI measured from multiple beacons in an environment. In particular, we focus on the ability of our approach to generalize across many smartphone brands (e.g., Apple, Samsung) and models (e.g., iPhone 8, S10). To this end, we collect a large-scale dataset of 15 hours of smartphone data, which consists of over 50,000 BLE beacon RSSI measurements collected from 47 beacons in a single building using 15 different popular smartphone models, along with precise 2D location annotations. Our experiments show that RSSI measurements vary widely across smartphone models (especially across brands), making it very difficult to apply supervised learning using only a subset of smartphone models. To address this challenge, we propose a novel statistic similarity loss (SSL) which enables our model to generalize to unseen phones using a semi-supervised learning approach. For known phones, the iPhone XR achieves the best mean distance error of 0.84 meters. For unknown phones, the Huawei Mate20 Pro shows the greatest improvement, cutting error by over 38% from 2.62 meters to 1.63 meters using our semi-supervised adaptation method.

Few-Shot Learning with Intra-Class Knowledge Transfer

Aug 22, 2020

Abstract: We consider the few-shot classification task with an unbalanced dataset, in which some classes have sufficient training samples while other classes have only limited training samples. Recent works have proposed to solve this task by augmenting the training data of the few-shot classes using generative models with the few-shot training samples as the seeds. However, due to the limited number of few-shot seeds, the generated samples usually have low diversity, making it difficult to train a discriminative classifier for the few-shot classes. To enrich the diversity of the generated samples, we propose to leverage the intra-class knowledge from the neighbor many-shot classes, with the intuition that neighbor classes share similar statistical information. Such intra-class information is obtained with a two-step mechanism. First, a regressor trained only on the many-shot classes is used to estimate the few-shot class means from only a few samples. Second, superclasses are clustered, and the statistical mean and feature variance of each superclass are used as transferable knowledge inherited by the child few-shot classes. Such knowledge is then used by a generator to augment the sparse training data to help the downstream classification tasks. Extensive experiments show that our method achieves state-of-the-art results across different datasets and $n$-shot settings.
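The idea of borrowing variance from a neighboring superclass can be sketched in a few lines. The example below is only an illustration under simplifying assumptions: it replaces the learned regressor with a plain sample mean and the generator with independent per-dimension Gaussian sampling, and the names `augment_few_shot` and `superclass_var` are hypothetical.

```python
import random

def augment_few_shot(few_shot_feats, superclass_var, n_new, rng):
    """Generate synthetic features for a few-shot class.

    few_shot_feats: list of feature vectors (lists of floats) for one class.
    superclass_var: per-dimension variance borrowed from the parent
                    superclass (assumed to be precomputed elsewhere).
    n_new:          number of synthetic feature vectors to draw.
    """
    dim = len(few_shot_feats[0])
    count = len(few_shot_feats)
    # Simplification: sample mean instead of a learned mean regressor.
    mean = [sum(f[d] for f in few_shot_feats) / count for d in range(dim)]
    # Simplification: diagonal Gaussian instead of a learned generator.
    return [
        [rng.gauss(mean[d], superclass_var[d] ** 0.5) for d in range(dim)]
        for _ in range(n_new)
    ]

seeds = [[1.0, 2.0], [3.0, 4.0]]
synthetic = augment_few_shot(seeds, [0.25, 0.25], n_new=5, rng=random.Random(0))
```

The point of the sketch is that the spread of the synthetic samples comes from the superclass statistics rather than from the two seed samples, whose own variance would be an unreliable estimate.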

Estimating 3D Camera Pose from 2D Pedestrian Trajectories

Jan 02, 2020

Abstract: We consider the task of re-calibrating the 3D pose of a static surveillance camera, whose pose may change due to external forces, such as birds, wind, falling objects or earthquakes. Conventionally, camera pose estimation can be solved with a PnP (Perspective-n-Point) method using 2D-to-3D feature correspondences, when 3D points are known. However, 3D point annotations are not always available or practical to obtain in real-world applications. We propose an alternative strategy for extracting 3D information to solve for camera pose by using pedestrian trajectories. We observe that 2D pedestrian trajectories indirectly contain useful 3D information that can be used for inferring camera pose. To leverage this information, we propose a data-driven approach by training a neural network (NN) regressor to model a direct mapping from 2D pedestrian trajectories projected on the image plane to 3D camera pose. We demonstrate that our regressor trained only on synthetic data can be directly applied to real data, thus eliminating the need to label any real data. We evaluate our method across six different scenes from the Town Centre Street and DUKEMTMC datasets. Our method achieves an improvement of $\sim50\%$ in both position and orientation prediction accuracy when compared to other SOTA methods.
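To see why 2D trajectories carry information about camera pose, it helps to project a ground-plane point through a simple pinhole model: the same walking path lands on different pixels when the camera height or tilt changes. The sketch below is an illustrative assumption, not the paper's synthetic data pipeline. It assumes a camera at height `height` above the ground, looking along the +Y axis and pitched down by `pitch` radians, with a hypothetical focal length `focal` in pixels and the principal point at the image origin.

```python
import math

def project_ground_point(x, y, height, pitch, focal=100.0):
    """Project the ground point (x, y, 0) into image coordinates (u, v).

    Camera center is at (0, 0, height). Camera axes in world coordinates:
      right   = (1, 0, 0)
      forward = (0, cos(pitch), -sin(pitch))
      down    = (0, -sin(pitch), -cos(pitch))
    Returns None when the point is behind the camera.
    """
    dx, dy, dz = x, y, -height            # vector from camera to point
    x_c = dx                              # component along right
    y_c = -dy * math.sin(pitch) - dz * math.cos(pitch)   # along down
    z_c = dy * math.cos(pitch) - dz * math.sin(pitch)    # along forward
    if z_c <= 0.0:
        return None
    return (focal * x_c / z_c, focal * y_c / z_c)

# A straight walking path 4 m to 8 m in front of the camera.
path = [(0.0, 4.0 + step) for step in range(5)]
level = [project_ground_point(px, py, height=2.0, pitch=0.0) for px, py in path]
tilted = [project_ground_point(px, py, height=2.0, pitch=0.3) for px, py in path]
```

Comparing `level` and `tilted` shows the image-plane trajectory shifting with pitch alone, which is the kind of signal a regressor could learn to invert.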
