Vincenzo Petrone

Simplifying ROS2 controllers with a modular architecture for robot-agnostic reference generation

Jan 13, 2026

Abstract:This paper introduces a novel modular architecture for ROS2 that decouples the logic required to acquire, validate, and interpolate references from the control laws that track them. The design includes a dedicated component, named Reference Generator, that receives references, in the form of either single points or trajectories, from external nodes (e.g., planners), and writes single-point references at the controller's sampling period via the existing ros2_control chaining mechanism to downstream controllers. This separation removes duplicated reference-handling code from controllers and improves reusability across robot platforms. We implement two reference generators: one for handling joint-space references and one for Cartesian references, along with a set of new controllers (PD with gravity compensation, Cartesian pose, and admittance controllers) and validate the approach on simulated and real Universal Robots and Franka Emika manipulators. Results show that (i) references are tracked reliably in all tested scenarios, (ii) reference generators reduce duplicated reference-handling code across chained controllers to favor the construction and reuse of complex controller pipelines, and (iii) controller implementations remain focused only on control laws.
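As a rough illustration of the idea of turning a trajectory into single-point references at the controller's sampling period, the following minimal sketch linearly interpolates joint-space waypoints. It is not the paper's Reference Generator: the function names `sample_reference` and `generate_references`, the choice of linear interpolation, and the example waypoints are all assumptions made for illustration, and no ROS2 or ros2_control API is used.

```python
from bisect import bisect_right


def sample_reference(times, points, t):
    """Linearly interpolate a joint-space trajectory at time t.

    Hypothetical helper: times are strictly increasing waypoint stamps [s],
    points are the matching joint vectors. Queries outside the trajectory
    are clamped to the first or last waypoint.
    """
    if t <= times[0]:
        return list(points[0])
    if t >= times[-1]:
        return list(points[-1])
    i = bisect_right(times, t) - 1  # index of the segment containing t
    alpha = (t - times[i]) / (times[i + 1] - times[i])
    return [a + alpha * (b - a) for a, b in zip(points[i], points[i + 1])]


def generate_references(times, points, period):
    """Yield one single-point reference per controller cycle (assumed fixed period)."""
    n_cycles = int(round((times[-1] - times[0]) / period))
    for k in range(n_cycles + 1):
        yield sample_reference(times, points, times[0] + k * period)


# Example: a two-joint trajectory with three waypoints, sampled every 0.5 s.
waypoint_times = [0.0, 1.0, 2.0]
waypoints = [[0.0, 0.0], [1.0, -0.5], [1.0, 0.5]]
refs = list(generate_references(waypoint_times, waypoints, 0.5))
```

In a chained setup, each element of `refs` would correspond to the single-point reference handed to the downstream controller at one control cycle.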

Augmenting Neural Networks-based Model Approximators in Robotic Force-tracking Tasks

Sep 10, 2025

Abstract:As robotics gains popularity, interaction control becomes crucial for ensuring force tracking in manipulator-based tasks. Typically, traditional interaction controllers either require extensive tuning, or demand expert knowledge of the environment, which is often impractical in real-world applications. This work proposes a novel control strategy leveraging Neural Networks (NNs) to enhance the force-tracking behavior of a Direct Force Controller (DFC). Unlike similar previous approaches, it accounts for the manipulator's tangential velocity, a critical factor in force exertion, especially during fast motions. The method employs an ensemble of feedforward NNs to predict contact forces, then exploits the prediction to solve an optimization problem and generate an optimal residual action, which is added to the DFC output and applied to an impedance controller. The proposed Velocity-augmented Artificial intelligence Interaction Controller for Ambiguous Models (VAICAM) is validated in the Gazebo simulator on a Franka Emika Panda robot. Against a vast set of trajectories, VAICAM achieves superior performance compared to two baseline controllers.

A ROS2-based software library for inverse dynamics computation

Apr 08, 2025

Abstract:Inverse dynamics computation is a critical component in robot control, planning and simulation, enabling the calculation of joint torques required to achieve a desired motion. This paper presents a ROS2-based software library designed to solve the inverse dynamics problem for robotic systems. The library is built around an abstract class with three concrete implementations: one for simulated robots and two for real UR10 and Franka robots. This contribution aims to provide a flexible, extensible, robot-agnostic solution to inverse dynamics, suitable for both simulation and real-world scenarios involving planning and control applications. The related software is available at https://github.com/ros2-gbp/ros2-gbp-github-org/issues/732.
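The structure described here, an abstract class with robot-specific concrete implementations, can be sketched in a few lines. This is only a hypothetical illustration: the names `InverseDynamicsSolver`, `PendulumSolver`, and `compute_torques` are invented, and a single-joint pendulum stands in for a real manipulator model; the actual library's interface is not reproduced.

```python
from abc import ABC, abstractmethod
import math


class InverseDynamicsSolver(ABC):
    """Hypothetical robot-agnostic interface: joint motion in, joint torques out."""

    @abstractmethod
    def compute_torques(self, q, qd, qdd):
        """Return the torques needed to realize accelerations qdd at state (q, qd)."""


class PendulumSolver(InverseDynamicsSolver):
    """Toy concrete implementation: frictionless point-mass pendulum, one joint."""

    def __init__(self, mass, length, gravity=9.81):
        self.mass = mass
        self.length = length
        self.gravity = gravity

    def compute_torques(self, q, qd, qdd):
        # tau = m * l^2 * qdd + m * g * l * sin(q); qd is unused (no friction term).
        inertia = self.mass * self.length ** 2
        gravity_torque = self.mass * self.gravity * self.length * math.sin(q[0])
        return [inertia * qdd[0] + gravity_torque]


solver = PendulumSolver(mass=1.0, length=1.0)
tau = solver.compute_torques([0.0], [0.0], [2.0])
```

Code written against the abstract interface does not need to know which robot model sits behind it, which is the kind of decoupling the abstract describes.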

The Dynamic Model of the UR10 Robot and its ROS2 Integration

Feb 17, 2025

Abstract:This paper presents the full dynamic model of the UR10 industrial robot. A triple-stage identification approach is adopted to estimate the manipulator's dynamic coefficients. First, linear parameters are computed using a standard linear regression algorithm. Subsequently, nonlinear friction parameters are estimated according to a sigmoidal model. Lastly, motor drive gains are devised to map estimated joint currents to torques. The overall identified model can be used for both control and planning purposes, as the accompanying ROS2 software can be easily reconfigured to account for a generic payload. The estimated robot model is experimentally validated against a set of exciting trajectories and compared to the state-of-the-art model for the same manipulator, achieving higher current prediction accuracy (up to a factor of 4.43) and more precise motor gains. The related software is available at https://codeocean.com/capsule/8515919/tree/v2.

On the role of Artificial Intelligence methods in modern force-controlled manufacturing robotic tasks

Sep 25, 2024

Abstract:This position paper explores the integration of Artificial Intelligence (AI) into force-controlled robotic tasks within the scope of advanced manufacturing, a cornerstone of Industry 4.0. AI's role in enhancing robotic manipulators - key drivers in the Fourth Industrial Revolution - is rapidly leading to significant innovations in smart manufacturing. The objective of this article is to frame these innovations in practical force-controlled applications - e.g. deburring, polishing, and assembly tasks like peg-in-hole (PiH) - highlighting their necessity for maintaining high-quality production standards. By reporting on recent AI-based methodologies, this article contrasts them and identifies current challenges to be addressed in future research. The analysis concludes with a perspective on future research directions, emphasizing the need for common performance metrics to validate AI techniques, integration of various enhancements for performance optimization, and the importance of validating them in relevant scenarios. These future directions aim to provide consistency with already adopted approaches, so as to be compatible with manufacturing standards, increasing the relevance of AI-driven methods in both academic and industrial contexts.

Human-Robot Interface for Teleoperated Robotized Planetary Sample Collection and Assembly

Jun 13, 2024

Abstract:As human space exploration evolves toward longer voyages farther from our home planet, in-situ resource utilization (ISRU) becomes increasingly important. Haptic teleoperation is one of the technologies by which such activities can be carried out remotely by humans, whose expertise is still necessary for complex activities. In order to perform precision tasks effectively, the operator must experience ease of use and accuracy. The same features are demanded to reduce the complexity of the training procedures and the associated learning time for operators without a specific background in robotic teleoperation. Haptic teleoperation systems, which allow for a natural feeling of forces, need to cope with the trade-off between accurate movements and workspace extension. Clearly, both of them are required for typical ISRU tasks. In this work, we develop a new concept of operations and suitable human-robot interfaces to achieve sample collection and assembly with ease of use and accuracy. In the proposed operational concept, the teleoperation space is extended by executing automated trajectories, planned offline at the control station. In three different experimental scenarios, we validate the end-to-end system involving the control station and the robotic asset, by assessing the contribution of haptics to mission success, the system robustness to consistent delays, and the ease of training new operators.

Scaling Population-Based Reinforcement Learning with GPU Accelerated Simulation

Apr 08, 2024

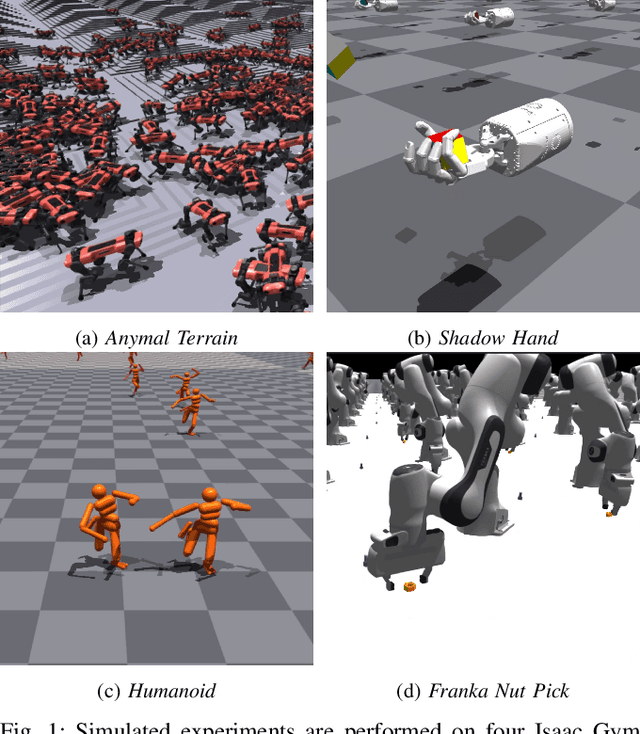

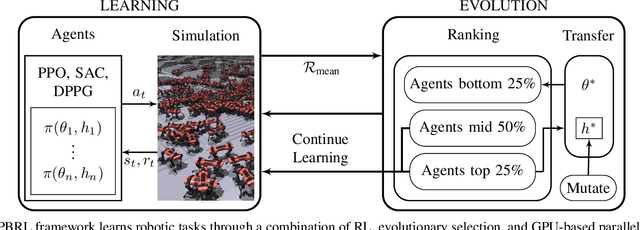

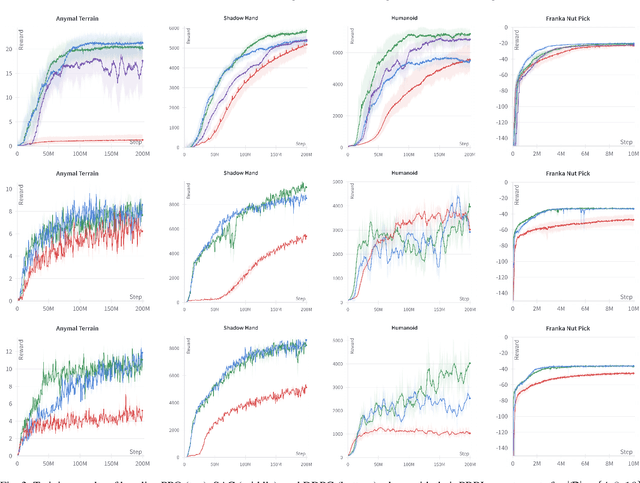

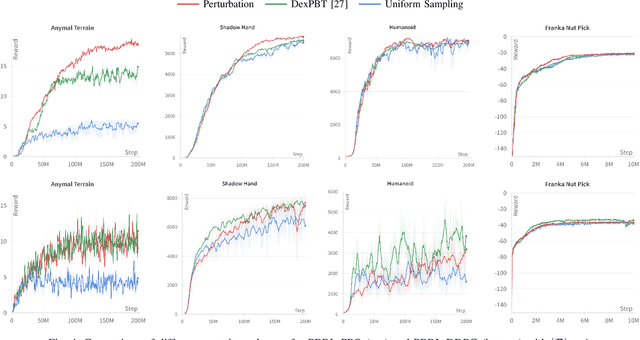

Abstract:In recent years, deep reinforcement learning (RL) has shown its effectiveness in solving complex continuous control tasks like locomotion and dexterous manipulation. However, this comes at the cost of an enormous amount of experience required for training, exacerbated by the sensitivity of learning efficiency and policy performance to hyperparameter selection, which often requires numerous trials of time-consuming experiments. This work introduces a Population-Based Reinforcement Learning (PBRL) approach that exploits a GPU-accelerated physics simulator to enhance the exploration capabilities of RL by training multiple policies in parallel. The PBRL framework is applied to three state-of-the-art RL algorithms -- PPO, SAC, and DDPG -- dynamically adjusting hyperparameters based on the performance of learning agents. The experiments are performed on four challenging tasks in Isaac Gym -- Anymal Terrain, Shadow Hand, Humanoid, Franka Nut Pick -- by analyzing the effect of population size and mutation mechanisms for hyperparameters. The results show that PBRL agents achieve superior performance, in terms of cumulative reward, compared to non-evolutionary baseline agents. The trained agents are finally deployed in the real world for a Franka Nut Pick task, demonstrating successful sim-to-real transfer. Code and videos of the learned policies are available on our project website.
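To make the idea of adjusting hyperparameters based on agent performance concrete, here is a minimal sketch of a generic population-based exploit-and-explore step. It is an assumption-laden illustration, not the paper's mutation mechanism: the function `pbt_step`, the 25% replacement fraction, and the 0.8/1.2 perturbation factors are hypothetical choices, and copying of network weights is omitted.

```python
import random


def pbt_step(population, rng, fraction=0.25, perturb=(0.8, 1.2)):
    """One exploit/explore step over a list of agents.

    Each agent is a dict with a scalar "reward" and a dict of numeric "hparams".
    The worst-performing fraction inherits perturbed hyperparameters from a
    randomly chosen top performer. A full implementation would also copy the
    parent's policy weights; that part is left out of this sketch.
    """
    ranked = sorted(population, key=lambda agent: agent["reward"], reverse=True)
    n = max(1, int(len(ranked) * fraction))
    top, bottom = ranked[:n], ranked[-n:]
    for agent in bottom:
        parent = rng.choice(top)
        agent["hparams"] = {
            name: value * rng.choice(perturb)  # explore around the parent's value
            for name, value in parent["hparams"].items()
        }
    return population


# Example: four agents whose learning rates differ; the worst one is replaced.
rng = random.Random(0)
population = [
    {"reward": 1.0, "hparams": {"lr": 1e-2}},
    {"reward": 2.0, "hparams": {"lr": 3e-3}},
    {"reward": 3.0, "hparams": {"lr": 3e-4}},
    {"reward": 4.0, "hparams": {"lr": 1e-3}},
]
pbt_step(population, rng)
```

After the step, the lowest-reward agent carries a learning rate within 20% of the best agent's, while the other agents keep their own settings.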

Time-Optimal Trajectory Planning with Interaction with the Environment

Jul 12, 2022

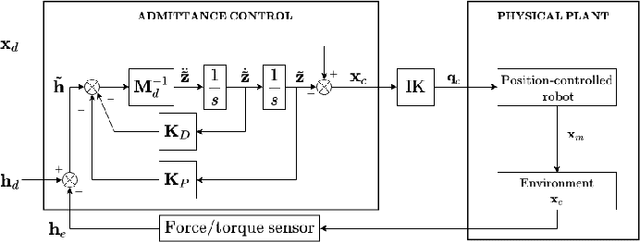

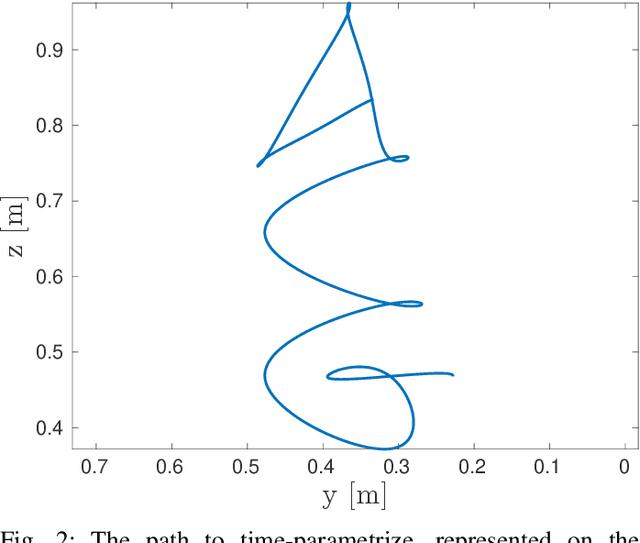

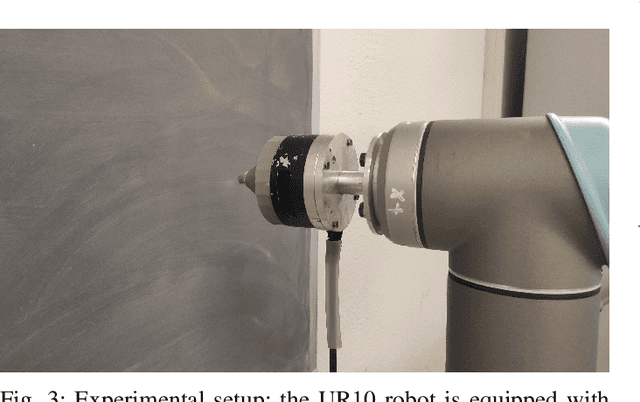

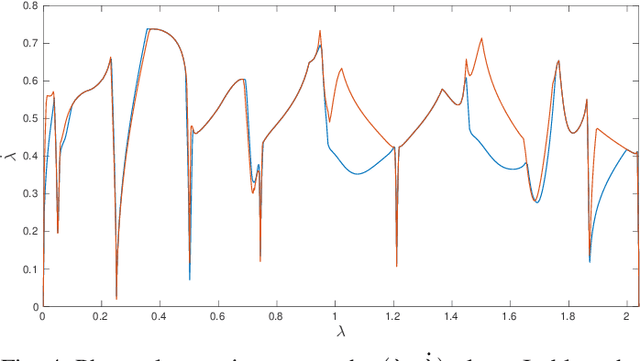

Abstract:Optimal motion planning along prescribed paths can be solved with several techniques, but most of them do not take into account the wrenches exerted by the end-effector when in contact with the environment. When a dynamic model of the environment is not available, no consolidated methodology exists to consider the effect of the interaction. Regardless of the specific performance index to optimize, this article proposes a strategy to include external wrenches in the optimal planning algorithm, considering the task specifications. This procedure is instantiated for minimum-time trajectories and validated on a real robot performing an interaction task under admittance control. The results show that including end-effector wrenches affects the planned trajectory, because it modifies the manipulator's dynamic capability.