Lorenzo Pagliara

Simplifying ROS2 controllers with a modular architecture for robot-agnostic reference generation

Jan 13, 2026

Abstract:This paper introduces a novel modular architecture for ROS2 that decouples the logic required to acquire, validate, and interpolate references from the control laws that track them. The design includes a dedicated component, named Reference Generator, that receives references, in the form of either single points or trajectories, from external nodes (e.g., planners), and writes single-point references at the controller's sampling period via the existing ros2_control chaining mechanism to downstream controllers. This separation removes duplicated reference-handling code from controllers and improves reusability across robot platforms. We implement two reference generators: one for handling joint-space references and one for Cartesian references, along with a set of new controllers (PD with gravity compensation, Cartesian pose, and admittance controllers) and validate the approach on simulated and real Universal Robots and Franka Emika manipulators. Results show that (i) references are tracked reliably in all tested scenarios, (ii) reference generators reduce duplicated reference-handling code across chained controllers to favor the construction and reuse of complex controller pipelines, and (iii) controller implementations remain focused only on control laws.
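As a rough illustration of the idea described in this abstract, the sketch below validates a joint-space trajectory and resamples it into one single-point reference per control period. It is not the paper's implementation and does not use the ros2_control API: the function names (`validate_trajectory`, `sample_reference`, `generate_references`), the linear interpolation scheme, and the list-based data layout are all assumptions made for this example.

```python
from bisect import bisect_right


def validate_trajectory(times, points):
    """Reject trajectories that are empty, mismatched, or not strictly increasing in time."""
    if not times or len(times) != len(points):
        raise ValueError("times and points must be non-empty and of equal length")
    if any(t1 <= t0 for t0, t1 in zip(times, times[1:])):
        raise ValueError("time stamps must be strictly increasing")


def sample_reference(times, points, t):
    """Linearly interpolate the trajectory at time t, holding the end points outside its span."""
    if t <= times[0]:
        return list(points[0])
    if t >= times[-1]:
        return list(points[-1])
    i = bisect_right(times, t) - 1  # index of the waypoint at or before t
    alpha = (t - times[i]) / (times[i + 1] - times[i])
    return [a + alpha * (b - a) for a, b in zip(points[i], points[i + 1])]


def generate_references(times, points, period):
    """Yield one single-point reference per control period (hypothetical helper)."""
    validate_trajectory(times, points)
    steps = int(round((times[-1] - times[0]) / period))
    for k in range(steps + 1):
        yield sample_reference(times, points, times[0] + k * period)


if __name__ == "__main__":
    # Two-joint trajectory with two waypoints, resampled every 0.5 s.
    for ref in generate_references([0.0, 1.0], [[0.0, 0.0], [1.0, 2.0]], 0.5):
        print(ref)
```

In this sketch a downstream controller would consume only the yielded single-point references, so it never needs to know whether the upstream node sent a point or a full trajectory.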

Improving dependability in robotized bolting operations

Nov 13, 2025

Abstract:Bolting operations are critical in industrial assembly and in the maintenance of scientific facilities, requiring high precision and robustness to faults. Although robotic solutions have the potential to improve operational safety and effectiveness, current systems still lack reliable autonomy and fault management capabilities. To address this gap, we propose a control framework for dependable robotized bolting tasks and instantiate it on a specific robotic system. The system features a control architecture ensuring accurate driving torque control and active compliance throughout the entire operation, enabling safe interaction even under fault conditions. By designing a multimodal human-robot interface (HRI) providing real-time visualization of relevant system information and supporting seamless transitions between automatic and manual control, we improve operator situation awareness and fault detection capabilities. A high-level supervisor (SV) coordinates the execution and manages transitions between control modes, ensuring consistency with the supervisory control (SVC) paradigm, while preserving the human operator's authority. The system is validated in a representative bolting operation involving pipe flange joining, under several fault conditions. The results demonstrate improved fault detection capabilities, enhanced operator situational awareness, and accurate and compliant execution of the bolting operation. However, they also reveal the limitations of relying on a single camera to achieve full situational awareness.

Haptic bilateral teleoperation system for free-hand dental procedures

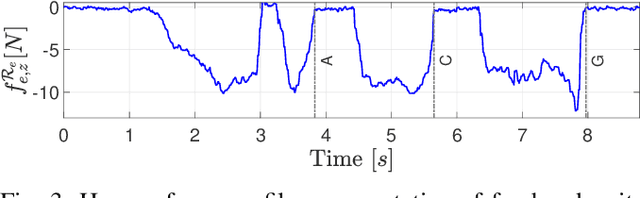

Mar 27, 2025

Abstract:Free-hand dental procedures are typically repetitive, time-consuming and require high precision and manual dexterity. Dental robots can play a key role in improving procedural accuracy and safety, enhancing patient comfort, and reducing operator workload. However, robotic solutions for free-hand procedures remain limited or completely lacking, and their acceptance is still low. To address this gap, we develop a haptic bilateral teleoperation system (HBTS) for free-hand dental procedures. The system includes a dedicated mechanical end-effector, compatible with standard clinical tools, and equipped with an endoscopic camera for improved visibility of the intervention site. By ensuring motion and force correspondence between the operator's actions and the robot's movements, monitored through visual feedback, we enhance the operator's sensory awareness and motor accuracy. Furthermore, recognizing the need to ensure procedural safety, we limit interaction forces by scaling the motion references provided to the admittance controller based solely on measured contact forces. This ensures effective force limitation in all contact states without requiring prior knowledge of the environment. The proposed HBTS is validated in a dental scaling procedure using a dental phantom. The results show that the system improves the naturalness, safety, and accuracy of teleoperation, highlighting its potential to enhance free-hand dental procedures.

Human-Robot Interface for Teleoperated Robotized Planetary Sample Collection and Assembly

Jun 13, 2024

Abstract:As human space exploration evolves toward longer voyages farther from our home planet, in-situ resource utilization (ISRU) becomes increasingly important. Haptic teleoperations are one of the technologies by which such activities can be carried out remotely by humans, whose expertise is still necessary for complex activities. In order to perform precision tasks with effectiveness, the operator must experience ease of use and accuracy. The same features are demanded to reduce the complexity of the training procedures and the associated learning time for operators without a specific background in robotic teleoperations. Haptic teleoperation systems, that allow for a natural feeling of forces, need to cope with the trade-off between accurate movements and workspace extension. Clearly, both of them are required for typical ISRU tasks. In this work, we develop a new concept of operations and suitable human-robot interfaces to achieve sample collection and assembly with ease of use and accuracy. In the proposed operational concept, the teleoperation space is extended by executing automated trajectories, offline planned at the control station. In three different experimental scenarios, we validate the end-to-end system involving the control station and the robotic asset, by assessing the contribution of haptics to mission success, the system robustness to consistent delays, and the ease of training new operators.

Safe haptic teleoperations of admittance controlled robots with virtualization of the force feedback

Apr 11, 2024

Abstract:Haptic teleoperations play a key role in extending human capabilities to perform complex tasks remotely, employing a robotic system. The impact of haptics is far-reaching and can improve the sensory awareness and motor accuracy of the operator. In this context, a key challenge is attaining a natural, stable and safe haptic human-robot interaction. Achieving these conflicting requirements is particularly crucial for complex procedures, e.g. medical ones. To address this challenge, in this work we develop a novel haptic bilateral teleoperation system (HBTS), featuring a virtualized force feedback, based on the motion error generated by an admittance controlled robot. This approach allows decoupling the force rendering system from the control of the interaction: the rendered force is assigned with the desired dynamics, while the admittance control parameters are separately tuned to maximize interaction performance. Furthermore, recognizing the necessity to limit the forces exerted by the robot on the environment, to ensure a safe interaction, we embed a saturation strategy of the motion references provided by the haptic device to admittance control. We validate the different aspects of the proposed HBTS, through a teleoperated blackboard writing experiment, against two other architectures. The results indicate that the proposed HBTS improves the naturalness of teleoperation, as well as safety and accuracy of the interaction.
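The two mechanisms named in this abstract, saturating the motion reference and rendering force from motion error, can be pictured with a one-degree-of-freedom toy model. The sketch below is only an assumption-laden illustration, not the authors' formulation: it assumes a spring-like admittance stiffness `stiffness`, a force limit `f_max`, and a virtual spring gain `k_virtual`, all hypothetical names and choices introduced here.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def saturate_reference(x_ref, x_robot, f_max, stiffness):
    """Keep the reference within f_max / stiffness of the robot position.

    Assumption: with a spring-like admittance term, the steady-state contact
    force is roughly stiffness * (x_ref - x_robot), so bounding the offset
    bounds that force by f_max.
    """
    max_offset = f_max / stiffness
    return x_robot + clamp(x_ref - x_robot, -max_offset, max_offset)


def virtual_feedback_force(x_ref, x_robot, k_virtual):
    """Render a spring-like force from the motion error (assumed form).

    The sign is chosen so the force opposes the operator's commanded offset.
    """
    return k_virtual * (x_robot - x_ref)


if __name__ == "__main__":
    x_robot = 0.0           # robot blocked by the environment
    x_operator = 1.0        # operator keeps pushing the haptic device
    x_ref = saturate_reference(x_operator, x_robot, f_max=10.0, stiffness=100.0)
    print(x_ref)                                         # saturated reference
    print(virtual_feedback_force(x_ref, x_robot, 200.0)) # rendered force
```

Because `k_virtual` is independent of `stiffness` in this toy model, the rendered force can be tuned separately from the interaction behavior, which mirrors the decoupling the abstract describes.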
