Vincent Hugel

COSMER

Eiffel Tower: A Deep-Sea Underwater Dataset for Long-Term Visual Localization

May 09, 2023

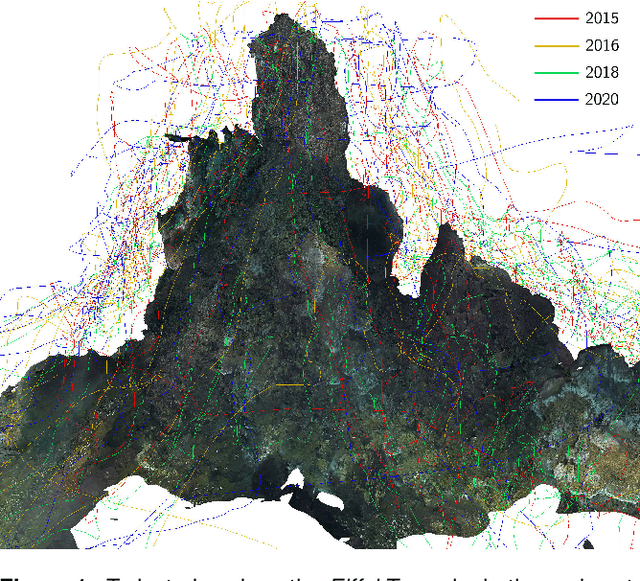

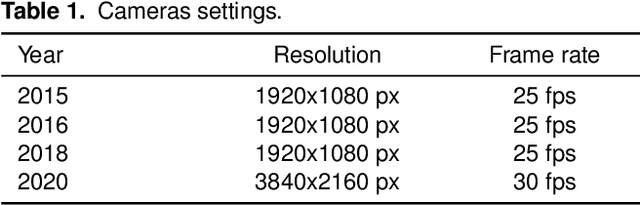

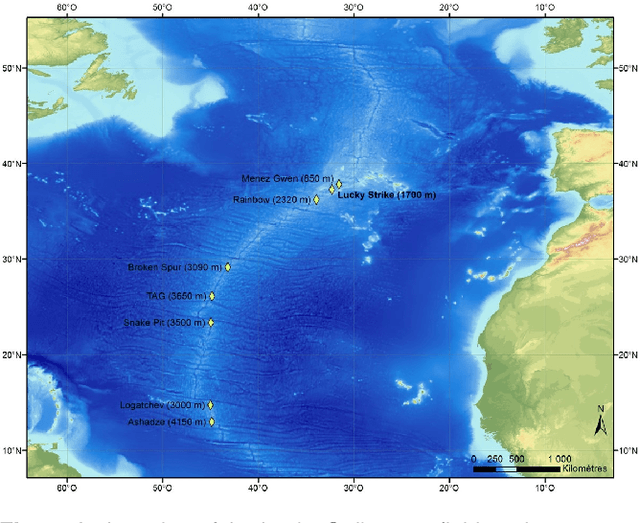

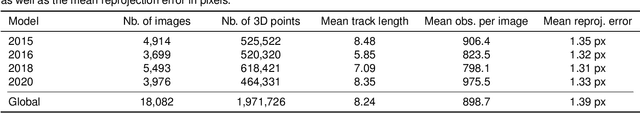

Abstract: Visual localization plays an important role in the positioning and navigation of robotics systems within previously visited environments. When visits occur over long periods of time, changes in the environment related to seasons or day-night cycles present a major challenge. Under water, the variability comes from other factors, such as water conditions or the growth of marine organisms. Yet this variability remains a major obstacle, and a much less studied one, partly due to the lack of data. This paper presents a new deep-sea dataset to benchmark underwater long-term visual localization. The dataset is composed of images from four visits to the same hydrothermal vent edifice over the course of five years. Camera poses and a common geometry of the scene were estimated using navigation data and Structure-from-Motion. This serves as a reference when evaluating visual localization techniques. An analysis of the data provides insights about the major changes observed throughout the years. Furthermore, several well-established visual localization methods are evaluated on the dataset, showing there is still room for improvement in underwater long-term visual localization. The data is made publicly available at https://www.seanoe.org/data/00810/92226/.
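Evaluating a localization method against reference camera poses usually comes down to a position error and an orientation error per query image. The sketch below illustrates that idea only; the function names, the (w, x, y, z) quaternion convention, and the thresholds are assumptions for illustration and are not taken from the dataset or the paper.

```python
import math

def translation_error(t_est, t_ref):
    """Euclidean distance between estimated and reference camera positions."""
    return math.dist(t_est, t_ref)

def rotation_error_deg(q_est, q_ref):
    """Angle in degrees between two unit quaternions given as (w, x, y, z)."""
    # abs() handles the fact that q and -q encode the same rotation.
    dot = abs(sum(a * b for a, b in zip(q_est, q_ref)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))

def is_localized(t_est, q_est, t_ref, q_ref, max_dist=0.25, max_angle_deg=2.0):
    """True if both errors fall under the (hypothetical) thresholds."""
    return (translation_error(t_est, t_ref) <= max_dist
            and rotation_error_deg(q_est, q_ref) <= max_angle_deg)

# Example: a query that is 5 units away from its reference pose is rejected.
identity = (1.0, 0.0, 0.0, 0.0)
print(is_localized((0, 0, 0), identity, (3, 4, 0), identity))
```

Reporting the fraction of queries that pass several threshold pairs is a common way to summarize such errors, though the exact protocol used for this dataset is not stated in the abstract.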

SUCRe: Leveraging Scene Structure for Underwater Color Restoration

Dec 18, 2022

Abstract: Underwater images are altered by the physical characteristics of the medium through which light rays pass before reaching the optical sensor. Scattering and strong wavelength-dependent absorption significantly modify the captured colors depending on the distance of observed elements to the image plane. In this paper, we aim to recover the original colors of the scene as if the water had no effect on them. We propose two novel methods that rely on different sets of inputs. The first assumes that pixel intensities in the restored image are normally distributed within each color channel, leading to an alternative optimization of the well-known Sea-thru method which acts on single images and their distance maps. We additionally introduce SUCRe, a new method that further exploits the scene's 3D Structure for Underwater Color Restoration. By following points in multiple images and tracking their intensities at different distances to the sensor we constrain the optimization of the image formation model parameters. When compared to similar existing approaches, SUCRe provides clear improvements in a variety of scenarios ranging from natural light to deep-sea environments. The code for both approaches is publicly available at https://github.com/clementinboittiaux/sucre.
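To make the role of distance in the image formation model concrete, here is a minimal sketch of a commonly used simplified underwater model for one color channel: an attenuated direct signal plus distance-dependent backscatter. It is not the SUCRe or Sea-thru implementation. The parameter names (`beta_d`, `beta_b`, `b_inf`) and the choice of constant coefficients per channel are assumptions made for illustration.

```python
import math

def degrade(j, z, beta_d, beta_b, b_inf):
    """Forward model for one channel of one pixel.

    j      : restored (water-free) intensity
    z      : distance from the scene point to the camera
    beta_d : attenuation coefficient of the direct signal (assumed constant)
    beta_b : backscatter coefficient (assumed constant)
    b_inf  : backscatter intensity at infinite distance (veiling light)
    """
    direct = j * math.exp(-beta_d * z)
    backscatter = b_inf * (1.0 - math.exp(-beta_b * z))
    return direct + backscatter

def restore(i, z, beta_d, beta_b, b_inf):
    """Invert the forward model: remove backscatter, then undo attenuation."""
    backscatter = b_inf * (1.0 - math.exp(-beta_b * z))
    return (i - backscatter) * math.exp(beta_d * z)

# The same surface color observed from farther away looks closer to b_inf.
near = degrade(0.8, 1.0, beta_d=0.4, beta_b=0.3, b_inf=0.2)
far = degrade(0.8, 5.0, beta_d=0.4, beta_b=0.3, b_inf=0.2)
print(near, far)
```

Observing one scene point at several distances gives several equations in the same unknown parameters, which is the kind of constraint the abstract describes when it mentions tracking intensities across images.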

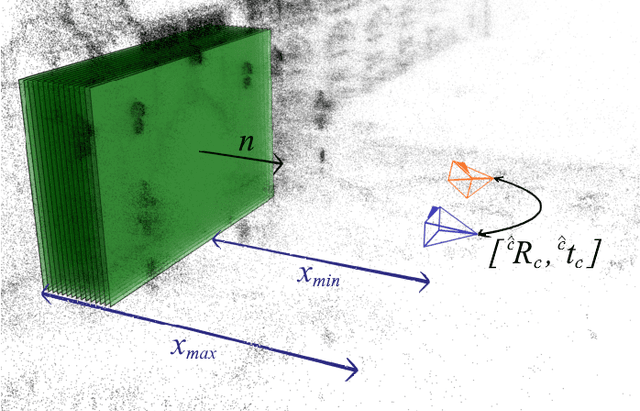

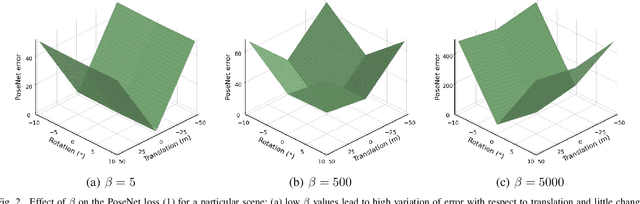

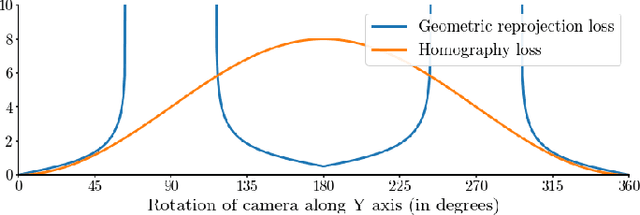

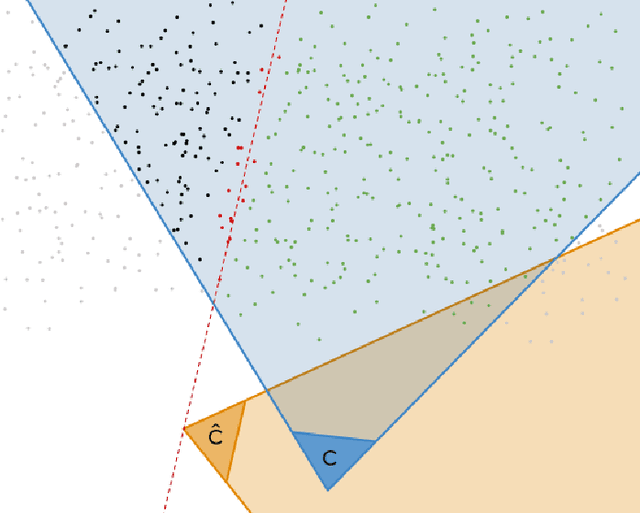

Homography-Based Loss Function for Camera Pose Regression

May 04, 2022

Abstract: Some recent visual-based relocalization algorithms rely on deep learning methods to perform camera pose regression from image data. This paper focuses on the loss functions that embed the error between two poses to perform deep learning based camera pose regression. Existing loss functions are either difficult-to-tune multi-objective functions or present unstable reprojection errors that rely on ground truth 3D scene points and require a two-step training. To deal with these issues, we introduce a novel loss function which is based on a multiplane homography integration. This new function does not require prior initialization and only depends on physically interpretable hyperparameters. Furthermore, the experiments carried out on well-established relocalization datasets show that it best minimizes the mean squared reprojection error during training when compared with existing loss functions.
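As background for the homography idea, the sketch below builds the homography induced by a single plane between two calibrated views and compares two such homographies with a Frobenius distance. This is only a building block under stated assumptions, not the paper's loss: the convention `X2 = R @ X1 + t`, the plane `n . X1 = d` expressed in the first camera frame, normalized image coordinates, and all function names are illustrative choices.

```python
import math

def plane_homography(R, t, n, d):
    """H = R + t n^T / d, mapping points of the plane n . X = d to the second view."""
    return [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]

def mat_vec(M, x):
    """Product of a 3x3 matrix (nested lists) and a 3-vector."""
    return [sum(M[i][j] * x[j] for j in range(3)) for i in range(3)]

def frobenius_distance(A, B):
    """Frobenius norm of the difference between two 3x3 matrices."""
    return math.sqrt(sum((A[i][j] - B[i][j]) ** 2
                         for i in range(3) for j in range(3)))

# Reference pose: 90 degree rotation about the optical axis plus a small translation.
R_ref = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
t_ref = [0.1, 0.2, 0.3]
# Estimated pose: same rotation, slightly wrong translation.
t_est = [0.1, 0.2, 0.5]

n, d = [0.0, 0.0, 1.0], 2.0  # fronto-parallel plane at distance 2
H_ref = plane_homography(R_ref, t_ref, n, d)
H_est = plane_homography(R_ref, t_est, n, d)
print(frobenius_distance(H_ref, H_est))
```

Because the plane distance enters the comparison explicitly, quantities like `d` have a direct physical meaning, which is in the spirit of the "physically interpretable hyperparameters" the abstract mentions.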

Exploiting Bird Locomotion Kinematics Data for Robotics Modeling

Sep 21, 2008

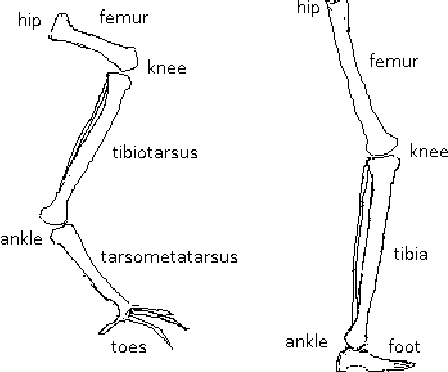

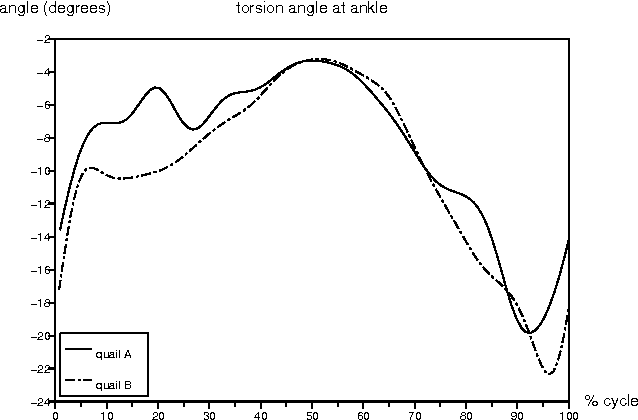

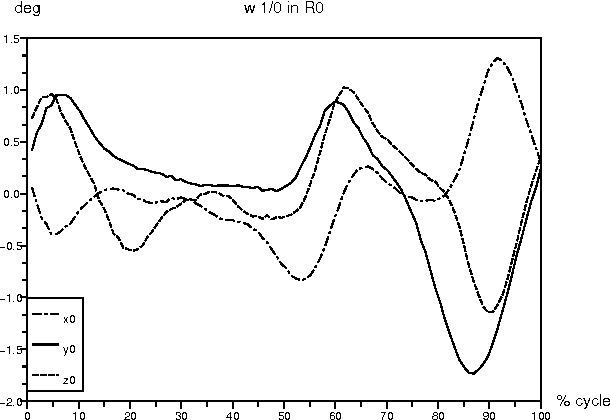

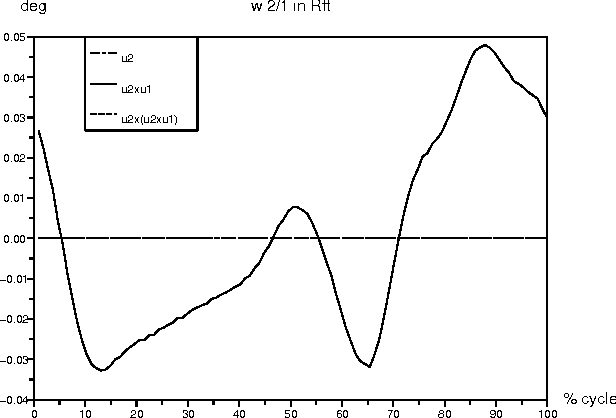

Abstract: We present here the results of an analysis carried out by biologists and roboticists with the aim of modeling bird locomotion kinematics for robotics purposes. The aim was to develop a bio-inspired kinematic model of the bird leg from biological data. We first acquired and processed kinematic data for sagittal and top views obtained by X-ray radiography of quails walking. Data processing involved filtering and specific data reconstruction in three dimensions, as two-dimensional views cannot be synchronized. We then designed a robotic model of a bird-like leg based on a kinematic analysis of the biological data. Angular velocity vectors were calculated to define the number of degrees of freedom (DOF) at each joint and the orientation of the rotation axes.

The NAO humanoid: a combination of performance and affordability

Sep 21, 2008

Abstract: This article presents the design of the autonomous humanoid robot called NAO that is built by the French company Aldebaran-Robotics. With a height of 0.57 m and a weight of about 4.5 kg, this innovative robot is lightweight and compact. It distinguishes itself from its existing Japanese, American, and other counterparts thanks to its pelvis kinematics design, its proprietary actuation system based on brush DC motors, and its electronic, computer and distributed software architectures. This robot has been designed to be affordable without sacrificing quality and performance. It is an open and easy-to-handle platform where the user can change all the embedded system software or just add some applications to make the robot adopt specific behaviours. The robot's head and forearms are modular and can be changed to promote further evolution. The comprehensive and functional design is one of the reasons that helped select NAO to replace the AIBO quadrupeds in the 2008 RoboCup standard league.
