Victoria Chen

MEMO: Dataset and Methods for Robust Multimodal Retinal Image Registration with Large or Small Vessel Density Differences

Sep 25, 2023

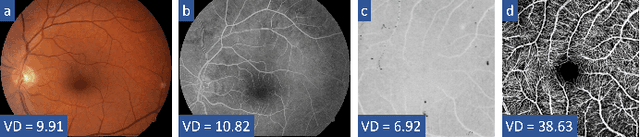

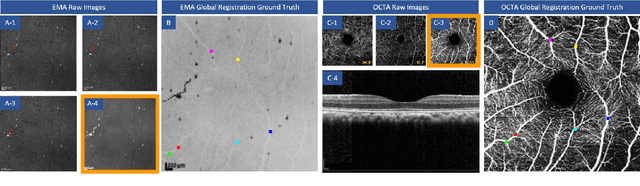

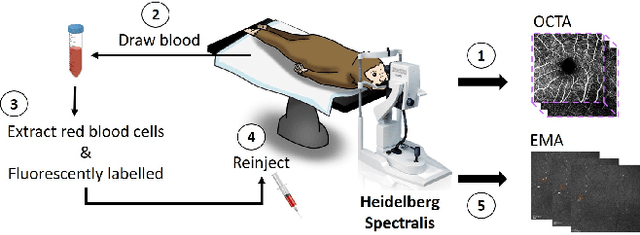

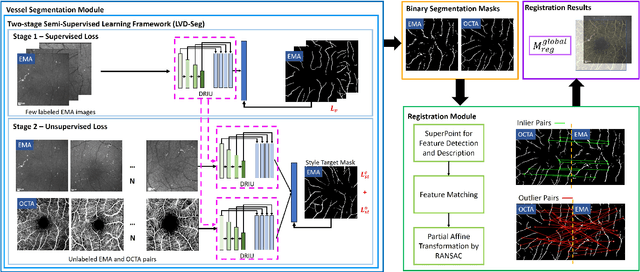

Abstract: The measurement of retinal blood flow (RBF) in capillaries can provide a powerful biomarker for the early diagnosis and treatment of ocular diseases. However, no single modality can determine capillary flow rates with high precision. Combining erythrocyte-mediated angiography (EMA) with optical coherence tomography angiography (OCTA) has the potential to achieve this goal, since EMA can measure the absolute 2D RBF of the retinal microvasculature and OCTA can provide 3D structural images of capillaries. Yet multimodal retinal image registration between these two modalities remains largely unexplored. To fill this gap, we establish MEMO, the first public multimodal EMA and OCTA retinal image dataset. A unique challenge in multimodal registration between these modalities is the relatively large difference in vessel density (VD). To address this challenge, we propose a segmentation-based deep-learning framework (VDD-Reg) and a new evaluation metric (MSD), which provide robust results despite differences in vessel density. VDD-Reg consists of a vessel segmentation module and a registration module. To train the vessel segmentation module, we further design a two-stage semi-supervised learning framework (LVD-Seg) that combines supervised and unsupervised losses. We demonstrate that VDD-Reg outperforms baseline methods quantitatively and qualitatively both for cases of small VD differences (using the CF-FA dataset) and for cases of large VD differences (using our MEMO dataset). Moreover, VDD-Reg requires as few as three annotated vessel segmentation masks to maintain its accuracy, demonstrating its feasibility.
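The abstract does not define how vessel density is computed. As a minimal sketch, assuming the common OCTA convention that VD is the fraction of pixels labeled as vessel in a binary segmentation mask, the VD difference between an EMA mask and an OCTA mask could be quantified as below. The helper names `vessel_density` and `vd_difference` are hypothetical and are not part of VDD-Reg or MEMO.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # binary mask: 1 = vessel pixel, 0 = background


def vessel_density(mask: Mask) -> float:
    """Fraction of pixels labeled as vessel (assumed VD definition)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask is empty")
    vessel = sum(1 for row in mask for value in row if value)
    return vessel / total


def vd_difference(mask_a: Mask, mask_b: Mask) -> float:
    """Absolute difference in vessel density between two segmentation masks."""
    return abs(vessel_density(mask_a) - vessel_density(mask_b))


# Toy masks: a sparse EMA-like mask versus a dense OCTA-like mask.
ema_mask = [[0, 1, 0, 0],
            [0, 1, 0, 0]]
octa_mask = [[1, 1, 0, 1],
             [0, 1, 1, 1]]
print(vd_difference(ema_mask, octa_mask))
```

Under this assumed definition, a sparse EMA mask paired with a dense OCTA mask produces a large VD difference. That is the regime the abstract singles out as the harder registration case.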

HAVEN: A Unity-based Virtual Robot Environment to Showcase HRI-based Augmented Reality

Nov 06, 2020

Abstract: Because of the COVID-19 pandemic, in-person Human-Robot Interaction (HRI) studies are not permissible under the social distancing practices intended to limit the spread of the virus. A virtual reality (VR) simulation with a virtual robot may therefore offer an alternative to real-life HRI studies. Like a real intelligent robot, a virtual robot can use the same advanced algorithms to behave autonomously. This paper introduces HAVEN (HRI-based Augmentation in a Virtual robot Environment using uNity), a VR simulation that enables users to interact with a virtual robot. The goal of this system design is to enable researchers to conduct HRI augmented reality studies with a virtual robot without being in a real environment. The framework also introduces two common HRI experiment designs: a hallway passing scenario and a human-robot team object retrieval scenario. Both reflect HAVEN's potential as a tool for future AR-based HRI studies.

High-dimensional Black-box Optimization Under Uncertainty

Nov 08, 2019

Abstract: Limited informative data remains the primary challenge in optimizing expensive, complex systems. Learning from limited data and finding the set of variables that optimizes an expected output arise in practice in design problems. In such situations, the underlying function is complex and unknown, many variables are involved though not all of them are important, and the interactions between variables are significant. Moreover, collecting more data is usually expensive, and the outcome is uncertain. Although these are real-world challenges, existing works have not addressed them jointly. In this article, we propose a new surrogate optimization approach to tackle these challenges. We design a flexible, non-interpolating, and parsimonious surrogate model using a partitioning technique. The proposed model bends at near-optimal locations and identifies the peaks and valleys for optimization purposes. To discover new candidate points, an exploration-exploitation Pareto method is implemented as the sampling strategy. Furthermore, we develop a smart replication approach based on hypothesis testing to overcome the uncertainty associated with the black-box outcome. The Smart-Replication approach identifies promising points to replicate rather than wasting evaluations on less informative data points. We conduct a comprehensive set of experiments on challenging global optimization test functions to evaluate the performance of our proposal.
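The abstract does not specify how the exploration-exploitation Pareto method scores candidate points. The sketch below is a minimal illustration built on one assumption: exploitation means a lower surrogate prediction (a minimization problem), and exploration means a larger distance to the nearest already-evaluated point. Under that assumption, the candidates worth sampling are the non-dominated ones. The names `min_distance` and `pareto_candidates` are hypothetical and do not come from the paper.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def min_distance(x: Point, evaluated: Sequence[Point]) -> float:
    """Euclidean distance from x to its nearest already-evaluated point."""
    return min(math.dist(x, e) for e in evaluated)


def pareto_candidates(candidates: Sequence[Point],
                      predicted: Sequence[float],
                      evaluated: Sequence[Point]) -> List[Point]:
    """Return the candidates not dominated on the two assumed objectives:
    minimize the surrogate prediction (exploitation) and
    maximize the distance to evaluated points (exploration)."""
    dists = [min_distance(c, evaluated) for c in candidates]
    front = []
    for i, c in enumerate(candidates):
        dominated = False
        for j in range(len(candidates)):
            if j == i:
                continue
            # j dominates i if it is no worse on both objectives
            # and strictly better on at least one of them.
            no_worse = predicted[j] <= predicted[i] and dists[j] >= dists[i]
            strictly_better = predicted[j] < predicted[i] or dists[j] > dists[i]
            if no_worse and strictly_better:
                dominated = True
                break
        if not dominated:
            front.append(c)
    return front


candidates = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (0.5, 0.5)]
predicted = [1.0, 2.0, 3.0, 5.0]  # hypothetical surrogate predictions
evaluated = [(0.0, 0.0)]
print(pareto_candidates(candidates, predicted, evaluated))
```

The all-pairs dominance check is quadratic in the number of candidates. That is usually acceptable for the small candidate pools typical of expensive black-box problems, but larger pools would call for a sort-based non-dominated filter.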
