Andre Cleaver

SENSAR: A Visual Tool for Intelligent Robots for Collaborative Human-Robot Interaction

Nov 09, 2020

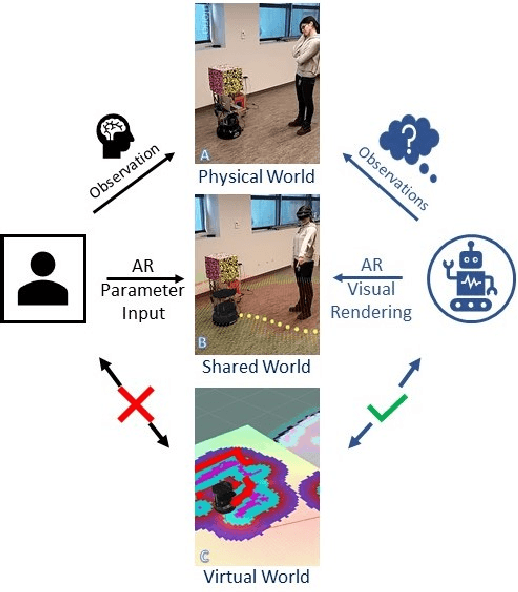

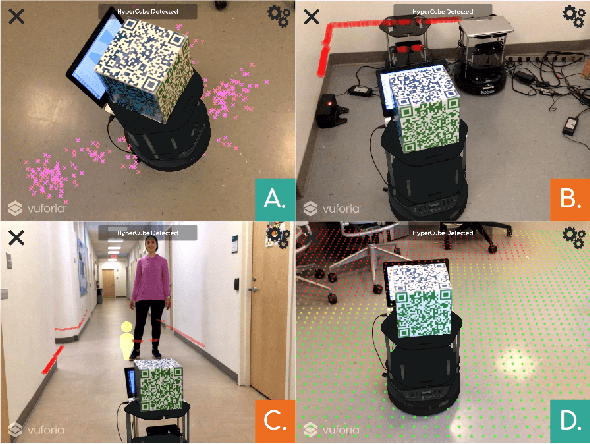

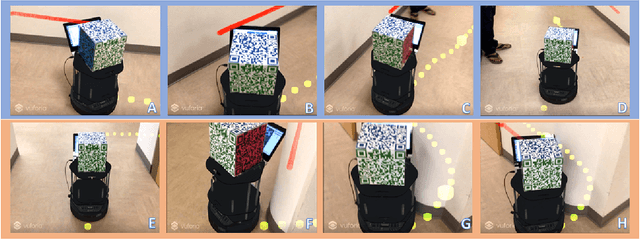

Abstract: Establishing common ground between an intelligent robot and a human requires communicating the robot's intention, behavior, and knowledge to the human in order to build trust and assure safety in a shared environment. This paper introduces SENSAR (Seeing Everything iN Situ with Augmented Reality), an augmented reality robotic system that enables robots to communicate their sensory and cognitive data in context, as graphics rendered over the real world, allowing a user to understand, correct, and validate the robot's perception of the world. Our system aims to support human-robot interaction research by establishing common ground where the perceptions of the human and the robot align.

HAVEN: A Unity-based Virtual Robot Environment to Showcase HRI-based Augmented Reality

Nov 06, 2020

Abstract: Due to the COVID-19 pandemic, in-person Human-Robot Interaction (HRI) studies are not permissible under the social distancing practices adopted to limit the spread of the virus. Therefore, a virtual reality (VR) simulation with a virtual robot may offer an alternative to real-life HRI studies. Like a real intelligent robot, a virtual robot can use the same advanced algorithms to behave autonomously. This paper introduces HAVEN (HRI-based Augmentation in a Virtual robot Environment using uNity), a VR simulation that enables users to interact with a virtual robot. The goal of this system design is to enable researchers to conduct HRI Augmented Reality studies using a virtual robot without being in a real environment. This framework also introduces two common HRI experiment designs: a hallway passing scenario and a human-robot team object retrieval scenario. Both reflect HAVEN's potential as a tool for future AR-based HRI studies.
