Faizan Muhammad

Gemini: A Family of Highly Capable Multimodal Models

Dec 19, 2023

Abstract: This report introduces a new family of multimodal models, Gemini, that exhibit remarkable capabilities across image, audio, video, and text understanding. The Gemini family consists of Ultra, Pro, and Nano sizes, suitable for applications ranging from complex reasoning tasks to on-device memory-constrained use-cases. Evaluation on a broad range of benchmarks shows that our most-capable Gemini Ultra model advances the state of the art in 30 of 32 of these benchmarks - notably being the first model to achieve human-expert performance on the well-studied exam benchmark MMLU, and improving the state of the art in every one of the 20 multimodal benchmarks we examined. We believe that the new capabilities of Gemini models in cross-modal reasoning and language understanding will enable a wide variety of use cases and we discuss our approach toward deploying them responsibly to users.

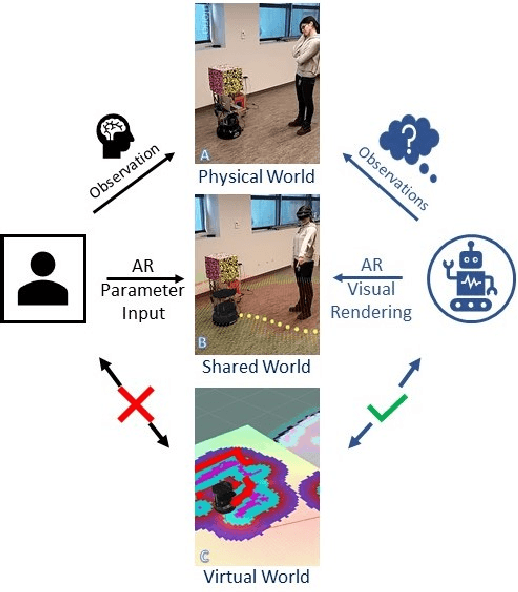

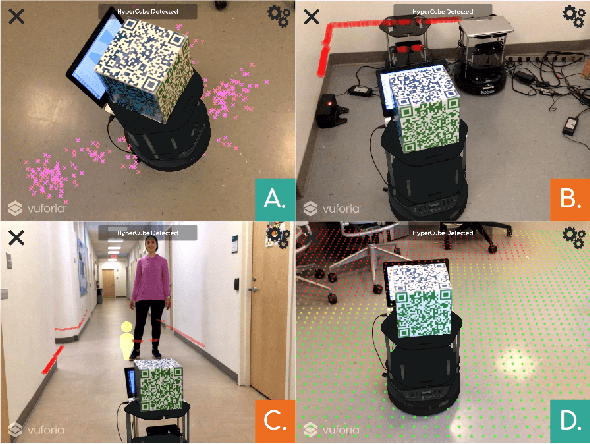

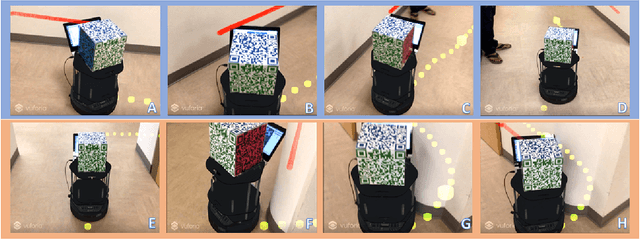

SENSAR: A Visual Tool for Intelligent Robots for Collaborative Human-Robot Interaction

Nov 09, 2020

Abstract: Establishing common ground between an intelligent robot and a human requires communication of the robot's intention, behavior, and knowledge to the human to build trust and assure safety in a shared environment. This paper introduces SENSAR (Seeing Everything iN Situ with Augmented Reality), an augmented reality robotic system that enables robots to communicate their sensory and cognitive data in context over the real world with rendered graphics, allowing a user to understand, correct, and validate the robot's perception of the world. Our system aims to support human-robot interaction research by establishing common ground where the perceptions of the human and the robot align.
