Varun Singh

KG-QAGen: A Knowledge-Graph-Based Framework for Systematic Question Generation and Long-Context LLM Evaluation

May 18, 2025

Abstract: The increasing context length of modern language models has created a need to evaluate their ability to retrieve and process information across extensive documents. While existing benchmarks test long-context capabilities, they often lack a structured way to systematically vary question complexity. We introduce KG-QAGen (Knowledge-Graph-based Question-Answer Generation), a framework that (1) extracts QA pairs at multiple complexity levels, (2) by leveraging structured representations of financial agreements, (3) along three key dimensions (multi-hop retrieval, set operations, and answer plurality), enabling fine-grained assessment of model performance across controlled difficulty levels. Using this framework, we construct a dataset of 20,139 QA pairs (the largest number among the long-context benchmarks) and open-source a part of it. We evaluate 13 proprietary and open-source LLMs and observe that even the best-performing models struggle with set-based comparisons and multi-hop logical inference. Our analysis reveals systematic failure modes tied to semantic misinterpretation and an inability to handle implicit relations.
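To make the three complexity dimensions concrete, here is a minimal sketch over a toy knowledge graph. The triples, entity names, relation names, and the `build_index` and `hop` helpers are all hypothetical illustrations, not taken from the KG-QAGen dataset or code.

```python
from collections import defaultdict

# Hypothetical triples (head, relation, tail); not from the actual dataset.
TRIPLES = [
    ("Agreement A", "has_lender", "Bank X"),
    ("Agreement A", "has_lender", "Bank Y"),
    ("Agreement B", "has_lender", "Bank Y"),
    ("Agreement B", "has_lender", "Bank Z"),
    ("Bank X", "located_in", "New York"),
    ("Bank Y", "located_in", "London"),
    ("Bank Z", "located_in", "London"),
]


def build_index(triples):
    """Map (head, relation) to the set of tails for fast lookup."""
    index = defaultdict(set)
    for head, relation, tail in triples:
        index[(head, relation)].add(tail)
    return index


def hop(index, entities, relation):
    """Follow one relation from every entity in the input set."""
    result = set()
    for entity in entities:
        result |= index.get((entity, relation), set())
    return result


INDEX = build_index(TRIPLES)

# Answer plurality: a single question can have several correct answers.
one_hop = hop(INDEX, {"Agreement A"}, "has_lender")

# Multi-hop retrieval: "Where are the lenders of Agreement A located?"
two_hop = hop(INDEX, one_hop, "located_in")

# Set operation: "Which lenders appear in both agreements?"
shared = one_hop & hop(INDEX, {"Agreement B"}, "has_lender")
```

Each additional hop or set operation composes on the previous result, which is one plausible way to vary question difficulty in a controlled manner.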

Nemotron-H: A Family of Accurate and Efficient Hybrid Mamba-Transformer Models

Apr 10, 2025

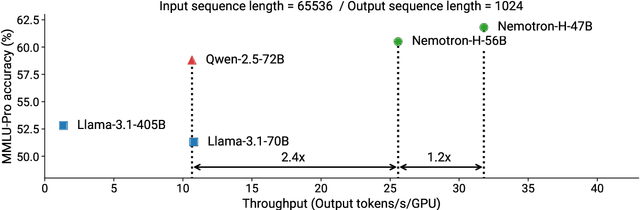

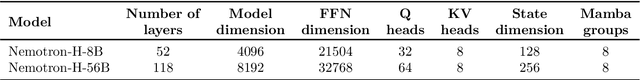

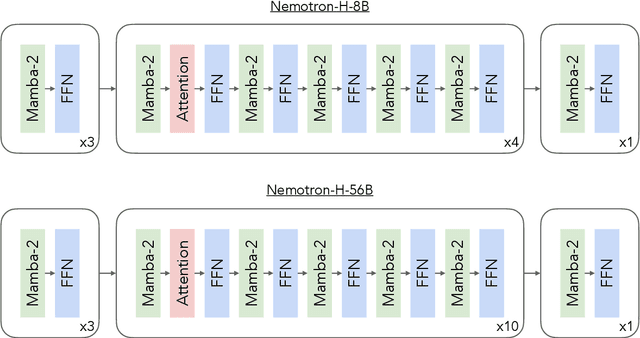

Abstract: As inference-time scaling becomes critical for enhanced reasoning capabilities, it is becoming increasingly important to build models that are efficient at inference. We introduce Nemotron-H, a family of 8B and 56B/47B hybrid Mamba-Transformer models designed to reduce inference cost for a given accuracy level. To achieve this goal, we replace the majority of self-attention layers in the common Transformer model architecture with Mamba layers that perform constant computation and require constant memory per generated token. We show that Nemotron-H models offer either better or on-par accuracy compared to other similarly sized state-of-the-art open-source Transformer models (e.g., Qwen-2.5-7B/72B and Llama-3.1-8B/70B), while being up to 3$\times$ faster at inference. To further increase inference speed and reduce the memory required at inference time, we created Nemotron-H-47B-Base from the 56B model using a new compression technique based on pruning and distillation, called MiniPuzzle. Nemotron-H-47B-Base achieves similar accuracy to the 56B model but is 20% faster at inference. In addition, we introduce an FP8-based training recipe and show that it can achieve results on par with BF16-based training. This recipe is used to train the 56B model. All Nemotron-H models will be released, with support in Hugging Face, NeMo, and Megatron-LM.

A Scalable System for Visual Analysis of Ocean Data

Jan 09, 2025

Abstract: Oceanographers rely on visual analysis to interpret model simulations, identify events and phenomena, and track dynamic ocean processes. The ever-increasing resolution and complexity of ocean data, due to its dynamic nature and multivariate relationships, demand a scalable and adaptable visualization tool for interactive exploration. We introduce pyParaOcean, a scalable and interactive visualization system designed specifically for ocean data analysis. pyParaOcean offers specialized modules for common oceanographic analysis tasks, including eddy identification and salinity movement tracking. These modules integrate with ParaView as filters, providing a user-friendly system while leveraging the parallelization capabilities of ParaView and its many built-in general-purpose visualization functionalities. The creation of an auxiliary dataset stored as a Cinema database helps address I/O and network bandwidth bottlenecks while supporting the generation of quick overview visualizations. We present a case study on the Bay of Bengal (BoB) to demonstrate the utility of the system, and scaling studies to evaluate its efficiency.

Low Complexity High Speed Deep Neural Network Augmented Wireless Channel Estimation

Nov 15, 2023

Abstract: Channel estimation (CE) in wireless receivers is one of the most critical and computationally complex signal processing operations. Recently, various works have shown that deep learning (DL) based CE outperforms conventional minimum mean square error (MMSE) based CE and is hardware-friendly. However, DL-based CE has higher complexity and latency than the widely used least-squares (LS) based CE. In this work, we propose a novel low-complexity, high-speed Deep Neural Network-Augmented Least Square (LC-LSDNN) algorithm for the IEEE 802.11p wireless physical layer and efficiently implement it on a Zynq system on chip (ZSoC). The novelty of LC-LSDNN is its use of different DNNs for the real and imaginary values of received complex symbols. This helps reduce the size of the DL model by 59% and optimize the critical path, allowing it to operate at a 60% higher clock frequency. We also explore three different architectures for MMSE-based CE. We show that LC-LSDNN significantly outperforms MMSE and state-of-the-art DL-based CE for a wide range of signal-to-noise ratios (SNR) and different wireless channels. It is also computationally efficient, using around 50% fewer resources than existing DL-based CE.
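As a rough illustration of the LS step that the DNNs augment, the sketch below computes the textbook per-subcarrier LS estimate (received symbol divided by the known pilot) and then splits it into real and imaginary parts, as the input to two separate networks would require. The function names and the sample values are assumptions for illustration; the DNNs themselves and the authors' hardware design are not shown.

```python
def ls_estimate(received, pilots):
    """Textbook LS channel estimate: H[k] = Y[k] / X[k] for each pilot k."""
    return [y / x for y, x in zip(received, pilots)]


def split_real_imag(values):
    """Separate complex estimates into real and imaginary streams,
    one stream per (hypothetical) downstream DNN."""
    return [v.real for v in values], [v.imag for v in values]


# Hypothetical noiseless example: known pilots passed through a known channel.
pilots = [1 + 0j, -1 + 0j]
channel = [0.5 + 0.5j, 2 - 1j]
received = [h * x for h, x in zip(channel, pilots)]

estimate = ls_estimate(received, pilots)
real_part, imag_part = split_real_imag(estimate)
```

In the noiseless case the LS estimate recovers the channel exactly; with noise, the estimate is perturbed, which is the error a learned refinement stage would aim to reduce.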

Automated Segmentation of Vertebrae on Lateral Chest Radiography Using Deep Learning

Jan 05, 2020

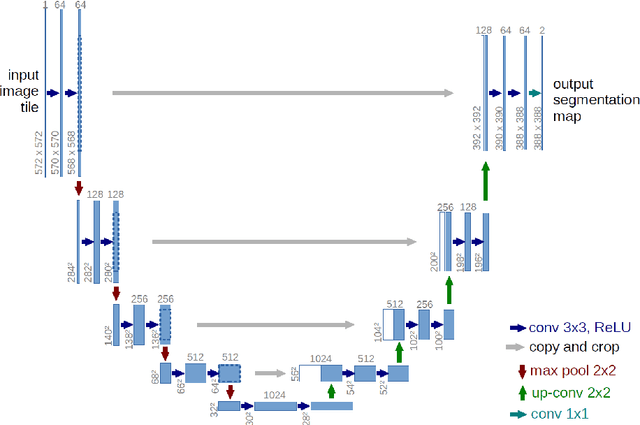

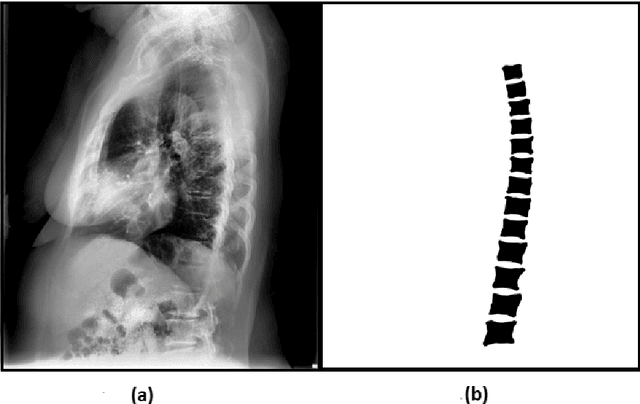

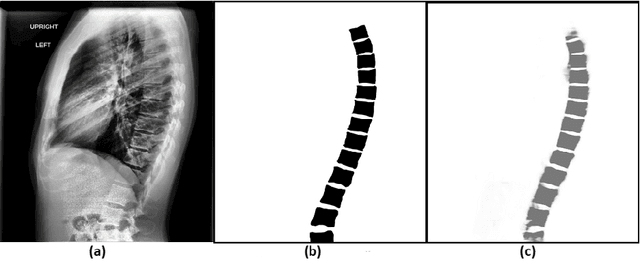

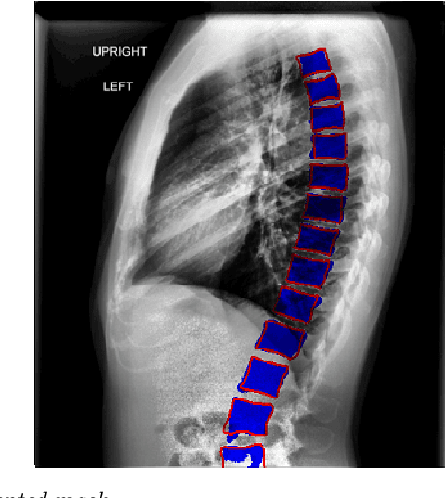

Abstract: The purpose of this study is to develop an automated algorithm for thoracic vertebral segmentation on chest radiography using deep learning. 124 de-identified lateral chest radiographs from unique patients were obtained. Segmentations of visible vertebrae were manually performed by a medical student and verified by a board-certified radiologist. 74 images were used for training, 10 for validation, and 40 were held out for testing. A U-Net deep convolutional neural network was employed for segmentation, using the sum of Dice coefficient and binary cross-entropy as the loss function. On the test set, the algorithm demonstrated an average Dice coefficient value of 90.5 and an average intersection-over-union (IoU) of 81.75. Deep learning demonstrates promise in the segmentation of vertebrae on lateral chest radiography.
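The metrics and loss named in the abstract can be sketched as follows on flattened masks. This is an illustrative sketch, not the authors' implementation: it assumes the Dice term enters the loss as `1 - Dice` (a common convention that the abstract does not spell out), and the function names and the `eps` smoothing constant are hypothetical.

```python
import math


def dice_coefficient(pred, target, eps=1e-7):
    """Dice = 2 * |A ∩ B| / (|A| + |B|), on flattened masks in [0, 1]."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return (2.0 * intersection + eps) / (sum(pred) + sum(target) + eps)


def iou(pred, target, eps=1e-7):
    """Intersection-over-union = |A ∩ B| / |A ∪ B|."""
    intersection = sum(p * t for p, t in zip(pred, target))
    union = sum(pred) + sum(target) - intersection
    return (intersection + eps) / (union + eps)


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean pixel-wise BCE, with predictions clipped away from 0 and 1."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(pred)


def combined_loss(pred, target):
    """Assumed form of the loss: (1 - Dice) + BCE."""
    return (1.0 - dice_coefficient(pred, target)) + binary_cross_entropy(pred, target)
```

For a single mask pair, Dice and IoU are related by IoU = Dice / (2 - Dice), so Dice is always at least as large as IoU. Averages taken over a test set need not satisfy this identity exactly, because the mean of per-image ratios is not the ratio of the means.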
