Sanket Badhe

Taxonomy of the Retrieval System Framework: Pitfalls and Paradigms

Jan 27, 2026

Abstract: Designing an embedding retrieval system requires navigating a complex design space of conflicting trade-offs between efficiency and effectiveness. This work structures these decisions as a vertical traversal of the system design stack. We begin with the Representation Layer by examining how loss functions and architectures, specifically Bi-encoders and Cross-encoders, define semantic relevance and geometric projection. Next, we analyze the Granularity Layer and evaluate how segmentation strategies like Atomic and Hierarchical chunking mitigate information bottlenecks in long-context documents. Moving to the Orchestration Layer, we discuss methods that transcend the single-vector paradigm, including hierarchical retrieval, agentic decomposition, and multi-stage reranking pipelines to resolve capacity limitations. Finally, we address the Robustness Layer by identifying architectural mitigations for domain generalization failures, lexical blind spots, and the silent degradation of retrieval quality due to temporal drift. By categorizing these limitations and design choices, we provide a comprehensive framework for practitioners to optimize the efficiency-effectiveness frontier in modern neural search systems.
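The multi-stage reranking idea mentioned in this abstract can be illustrated with a toy two-stage pipeline. This is a minimal sketch and not the paper's implementation: the document vectors are hand-written stand-ins for bi-encoder embeddings, and `token_overlap` is a hypothetical placeholder for a cross-encoder that scores a query and a document jointly. All names and data below are assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve(query_vec, doc_vecs, k):
    """Stage 1 (bi-encoder style): rank precomputed document vectors
    by cosine similarity to the query vector and keep the top k ids."""
    ranked = sorted(
        doc_vecs.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


def token_overlap(query, text):
    """Hypothetical stand-in for a cross-encoder: Jaccard overlap of
    lowercase whitespace tokens, computed on the (query, text) pair."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def rerank(query, candidates, docs, score_pair):
    """Stage 2: re-order the short candidate list with a pairwise scorer."""
    return sorted(candidates, key=lambda d: score_pair(query, docs[d]), reverse=True)


# Toy corpus; the vectors are invented, not produced by a real encoder.
docs = {
    "a": "chunking long documents for retrieval",
    "b": "reranking with cross-encoders",
    "c": "baking sourdough bread",
}
doc_vecs = {"a": [0.9, 0.1, 0.0], "b": [0.8, 0.3, 0.0], "c": [0.0, 0.1, 0.9]}
query = "cross-encoders for reranking"
query_vec = [1.0, 0.2, 0.0]

candidates = retrieve(query_vec, doc_vecs, k=2)
reranked = rerank(query, candidates, docs, token_overlap)
print(candidates, reranked)
```

The first stage is cheap because document vectors are computed once and compared independently of the query text. The second stage is more expensive per pair, so it runs only on the short candidate list, which is where the efficiency-effectiveness trade-off shows up.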

ScamAgents: How AI Agents Can Simulate Human-Level Scam Calls

Aug 08, 2025

Abstract: Large Language Models (LLMs) have demonstrated impressive fluency and reasoning capabilities, but their potential for misuse has raised growing concern. In this paper, we present ScamAgent, an autonomous multi-turn agent built on top of LLMs, capable of generating highly realistic scam call scripts that simulate real-world fraud scenarios. Unlike prior work focused on single-shot prompt misuse, ScamAgent maintains dialogue memory, adapts dynamically to simulated user responses, and employs deceptive persuasion strategies across conversational turns. We show that current LLM safety guardrails, including refusal mechanisms and content filters, are ineffective against such agent-based threats. Even models with strong prompt-level safeguards can be bypassed when prompts are decomposed, disguised, or delivered incrementally within an agent framework. We further demonstrate the transformation of scam scripts into lifelike voice calls using modern text-to-speech systems, completing a fully automated scam pipeline. Our findings highlight an urgent need for multi-turn safety auditing, agent-level control frameworks, and new methods to detect and disrupt conversational deception powered by generative AI.

Automated Segmentation of Vertebrae on Lateral Chest Radiography Using Deep Learning

Jan 05, 2020

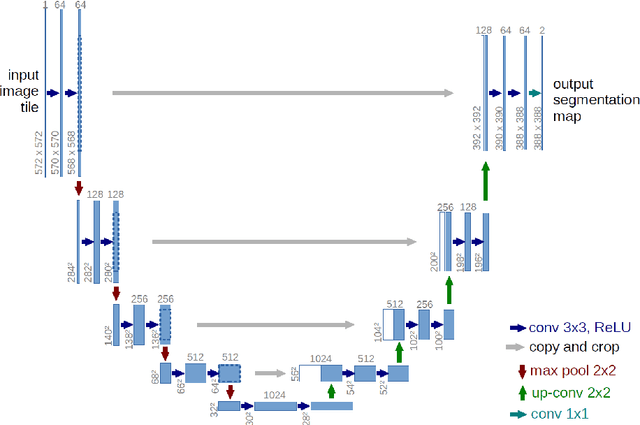

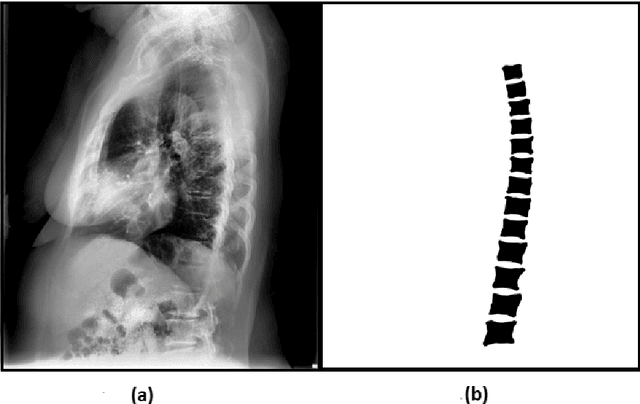

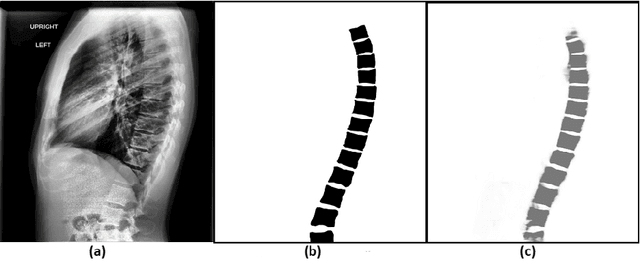

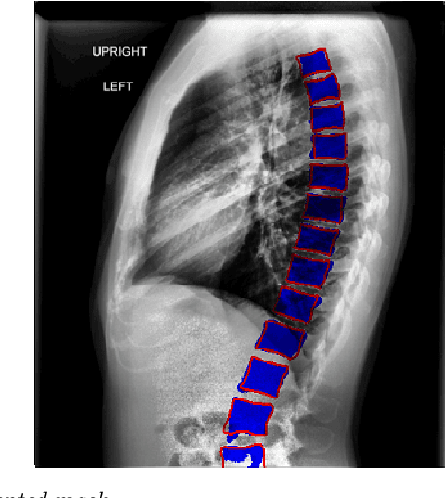

Abstract: The purpose of this study is to develop an automated algorithm for thoracic vertebral segmentation on chest radiography using deep learning. A total of 124 de-identified lateral chest radiographs from unique patients were obtained. Segmentations of visible vertebrae were manually performed by a medical student and verified by a board-certified radiologist. Of these images, 74 were used for training, 10 for validation, and 40 were held out for testing. A U-Net deep convolutional neural network was employed for segmentation, using the sum of Dice coefficient and binary cross-entropy as the loss function. On the test set, the algorithm demonstrated an average Dice coefficient of 90.5 and an average intersection-over-union (IoU) of 81.75. Deep learning demonstrates promise in the segmentation of vertebrae on lateral chest radiography.
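The loss and the two evaluation metrics named in this abstract can be written out on flattened masks. This is a minimal sketch in plain Python, not the study's code. It assumes the Dice term enters the loss as `1 - Dice` (a common convention that the abstract does not spell out), and the function names and the smoothing constant `eps` are illustrative choices.

```python
import math


def dice_coefficient(pred, target, eps=1e-7):
    """Dice = 2|A ∩ B| / (|A| + |B|) for flat masks with values in [0, 1]."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return (2.0 * intersection + eps) / (total + eps)


def iou(pred, target, eps=1e-7):
    """Intersection-over-union = |A ∩ B| / |A ∪ B| for flat masks."""
    intersection = sum(p * t for p, t in zip(pred, target))
    union = sum(pred) + sum(target) - intersection
    return (intersection + eps) / (union + eps)


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean per-pixel binary cross-entropy; predictions are clamped
    away from 0 and 1 so the logarithm stays finite."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(pred)


def dice_bce_loss(pred, target):
    """Assumed combination: (1 - Dice) + BCE, so lower is better."""
    return (1.0 - dice_coefficient(pred, target)) + binary_cross_entropy(pred, target)


# Toy 6-pixel masks: 2 pixels overlap, 3 predicted, 3 in the ground truth.
pred_mask = [1, 1, 1, 0, 0, 0]
true_mask = [1, 1, 0, 0, 0, 1]
print(dice_coefficient(pred_mask, true_mask))  # close to 2/3
print(iou(pred_mask, true_mask))               # close to 1/2
```

For a single pair of masks the two metrics are tied by IoU = Dice / (2 - Dice), which is why Dice is always at least as large as IoU. Averages over a test set need not satisfy that identity exactly, since the mean is taken per image.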
