Vanessa Böhm

SAR-based landslide classification pretraining leads to better segmentation

Nov 17, 2022

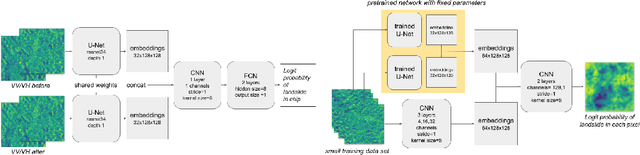

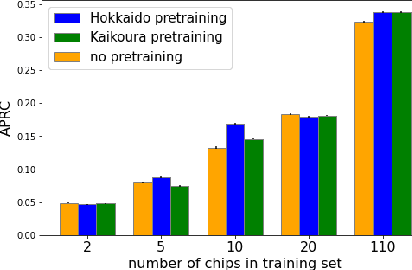

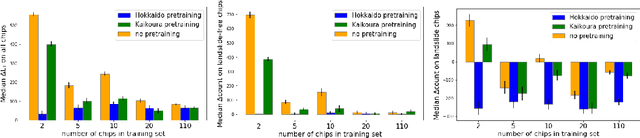

Abstract: Rapid assessment after a natural disaster is key for prioritizing emergency resources. In the case of landslides, rapid assessment involves determining the extent of the affected area and measuring the size and location of individual landslides. Synthetic Aperture Radar (SAR) is an active remote sensing technique that is unaffected by weather conditions. Deep learning algorithms can be applied to SAR data, but training them requires large labeled datasets. In the case of landslides, such datasets are laborious to produce for segmentation, and they are often not available for the specific region in which the event occurred. Here, we study how deep learning algorithms for landslide segmentation on SAR products can benefit from pretraining on a simpler task and from data from different regions. The method we explore consists of two training stages. First, we learn to identify whether or not a SAR image contains any landslides. Then, we learn to segment landslides in a sparsely labeled scenario where half of the data do not contain landslides. We test whether including feature embeddings derived from stage 1 helps with landslide detection in stage 2. We find that it leads to minor improvements in the Area Under the Precision-Recall Curve, but also to a significantly lower false positive rate in areas without landslides and an improved estimate of the average number of landslide pixels in a chip. A more accurate pixel count makes it possible to identify the most affected areas with higher confidence. This could be valuable in rapid response scenarios where prioritizing resources at a global scale is important. We make our code publicly available at https://github.com/VMBoehm/SAR-landslide-detection-pretraining.
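The abstract evaluates the stage-2 models with three quantities: the Area Under the Precision-Recall Curve, the false positive rate in landslide-free areas, and the average number of landslide pixels per chip. The sketch below shows one plausible way to compute them from per-pixel scores in plain Python. It is not the evaluation code from the linked repository: the step-wise average-precision estimate of the AUPRC, the pixel-level definition of the false positive rate, the 0.5 threshold, and the function names `average_precision` and `chip_stats` are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical chip representation: (per-pixel scores, per-pixel 0/1 landslide labels)
Chip = Tuple[Sequence[float], Sequence[int]]


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Step-wise estimate of the area under the precision-recall curve.

    Pixels are ranked by descending score; precision is accumulated at the
    rank of every true landslide pixel and averaged over all positives.
    Ties are broken by input order.
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AUPRC is undefined when there are no positive pixels")
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    true_pos, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            true_pos += 1
            total += true_pos / rank  # precision at this recall step
    return total / n_pos


def chip_stats(chips: List[Chip], threshold: float = 0.5) -> Tuple[float, float, float]:
    """Return (pixel false positive rate on landslide-free chips,
    mean predicted landslide pixels per chip, mean true landslide pixels per chip).

    The threshold and the pixel-level FPR definition are assumptions.
    """
    false_pos = negatives = 0
    pred_counts: List[int] = []
    true_counts: List[int] = []
    for scores, labels in chips:
        n_pred = sum(1 for s in scores if s >= threshold)
        n_true = sum(labels)
        pred_counts.append(n_pred)
        true_counts.append(n_true)
        if n_true == 0:  # chip has no landslides: every positive prediction is false
            false_pos += n_pred
            negatives += len(labels)
    fpr = false_pos / negatives if negatives else float("nan")
    return fpr, sum(pred_counts) / len(chips), sum(true_counts) / len(chips)


if __name__ == "__main__":
    chips = [([0.9, 0.2, 0.7, 0.1], [1, 0, 1, 0]),   # chip with two landslide pixels
             ([0.6, 0.1, 0.2, 0.3], [0, 0, 0, 0])]   # landslide-free chip
    all_scores = [s for scores, _ in chips for s in scores]
    all_labels = [y for _, labels in chips for y in labels]
    print(average_precision(all_scores, all_labels))  # 1.0
    print(chip_stats(chips))                          # (0.25, 1.5, 1.0)
```

In this toy run the predicted mean pixel count (1.5) overshoots the true one (1.0) entirely because of the false positive in the landslide-free chip, which illustrates why a lower false positive rate on such chips also improves the per-chip pixel-count estimate.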

Probabilistic Auto-Encoder

Jun 22, 2020

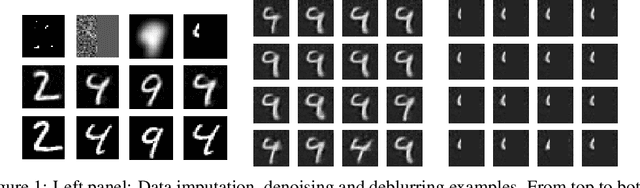

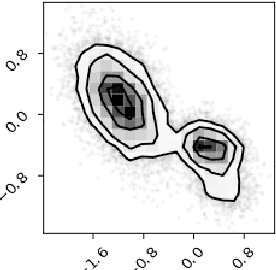

Abstract: We introduce the Probabilistic Auto-Encoder (PAE), a generative model with a lower-dimensional latent space that is based on an Auto-Encoder which is interpreted probabilistically after training using a Normalizing Flow. The PAE combines the advantages of an Auto-Encoder, i.e. it is fast and easy to train and achieves small reconstruction error, with the desired properties of a generative model, such as high sample quality and good performance in downstream tasks. Compared to a VAE and its common variants, the PAE trains faster, reaches lower reconstruction error and produces state-of-the-art samples without parameter fine-tuning or annealing schemes. We further demonstrate that the PAE is a powerful model for the downstream tasks of outlier detection and probabilistic image reconstruction: 1) Starting from the Laplace approximation to the marginal likelihood, we identify a PAE-based outlier detection metric which achieves state-of-the-art results in Out-of-Distribution detection, outperforming other likelihood-based estimators. 2) Using posterior analysis in the PAE latent space, we perform high-dimensional data inpainting and denoising with uncertainty quantification.
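The two-stage recipe, first an Auto-Encoder and then a Normalizing Flow fitted to its latent codes, can be sketched as follows. This is a minimal illustration under strong assumptions: the encoder and decoder are taken as already trained and are not shown, the latent codes are toy values, and the flow is a hypothetical elementwise affine map (`AffineFlow`) whose maximum-likelihood fit has a closed form. The PAE itself uses a far more expressive flow; only the change-of-variables density and the sample-then-invert pattern carry over.

```python
import math
import random
from typing import List, Sequence


class AffineFlow:
    """Hypothetical elementwise affine flow u = (z - mu) / sigma.

    A deliberately simple stand-in for an expressive Normalizing Flow: it maps
    latent codes z to a standard normal base variable u. For this flow the
    maximum-likelihood fit is the per-dimension mean and standard deviation.
    """

    def __init__(self, mu: List[float], sigma: List[float]):
        self.mu, self.sigma = mu, sigma

    @classmethod
    def fit(cls, codes: Sequence[Sequence[float]]) -> "AffineFlow":
        # Stage 2: fit the flow to encoder outputs of the (already trained) Auto-Encoder.
        # Assumes every latent dimension has nonzero spread across the codes.
        n, d = len(codes), len(codes[0])
        mu = [sum(c[j] for c in codes) / n for j in range(d)]
        sigma = [math.sqrt(sum((c[j] - mu[j]) ** 2 for c in codes) / n) for j in range(d)]
        return cls(mu, sigma)

    def log_prob(self, z: Sequence[float]) -> float:
        # Change of variables: log p(z) = log N(u; 0, I) + log|det du/dz|,
        # where log|det du/dz| = -sum_j log sigma_j for this affine map.
        lp = 0.0
        for zj, m, s in zip(z, self.mu, self.sigma):
            u = (zj - m) / s
            lp += -0.5 * (u * u + math.log(2 * math.pi)) - math.log(s)
        return lp

    def sample(self, rng: random.Random) -> List[float]:
        # Draw u from the base distribution and invert the flow: z = mu + sigma * u.
        return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(self.mu, self.sigma)]


if __name__ == "__main__":
    codes = [[0.0, 1.0], [2.0, 3.0]]      # toy stand-in for encoder outputs on training data
    flow = AffineFlow.fit(codes)
    print(flow.mu, flow.sigma)            # [1.0, 2.0] [1.0, 1.0]
    print(flow.log_prob([1.0, 2.0]))      # ≈ -1.838, i.e. -log(2*pi)
    z_new = flow.sample(random.Random(0)) # would be passed through the trained decoder
    print(len(z_new))                     # 2
```

Because the flow is fitted after the Auto-Encoder has finished training, the reconstruction objective is never traded off against the density objective, which is the design choice the abstract credits for fast training and low reconstruction error.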

Uncertainty Quantification with Generative Models

Oct 22, 2019

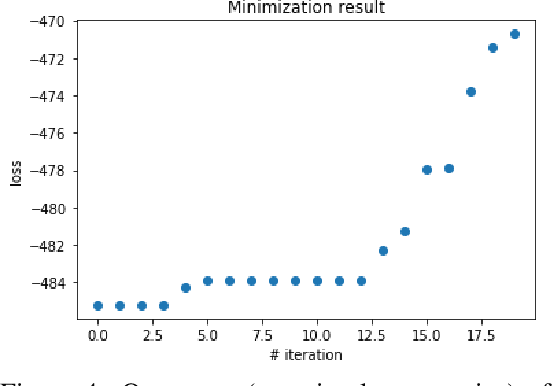

Abstract: We develop a generative-model-based approach to Bayesian inverse problems, such as image reconstruction from noisy and incomplete images. Our framework addresses two common challenges of Bayesian reconstructions: 1) It makes use of complex, data-driven priors that comprise all available information about the uncorrupted data distribution. 2) It enables computationally tractable uncertainty quantification in the form of posterior analysis in latent and data space. The method is very efficient in that the generative model only has to be trained once on an uncorrupted data set; after that, the procedure can be used for arbitrary corruption types.
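Posterior analysis in latent space can be illustrated with a linear-Gaussian toy problem. Assume, purely for illustration, a hypothetical linear generator x = W z + b with a standard normal prior on z, Gaussian pixel noise, and a mask marking the observed pixels. In that special case the latent posterior is exactly Gaussian and has a closed form; with a trained deep generative model, as in the paper, it must instead be explored numerically. The names `latent_posterior` and `reconstruct` are invented for this sketch. Note that the corruption (mask and noise level) enters only at reconstruction time while the generator W stays fixed, mirroring the train-once property described in the abstract.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def invert(a: Matrix) -> Matrix:
    """Gauss-Jordan inverse of a small square matrix with partial pivoting."""
    n = len(a)
    m = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def latent_posterior(W: Matrix, b: Sequence[float], y: Sequence[float],
                     mask: Sequence[bool], noise_std: float) -> Tuple[List[float], Matrix]:
    """Exact Gaussian posterior over z for the hypothetical linear generator x = W z + b,
    prior z ~ N(0, I), and observations y = x + N(0, noise_std^2) on masked pixels only."""
    d = len(W[0])
    obs = [i for i, keep in enumerate(mask) if keep]  # missing pixels are ignored
    s2 = noise_std ** 2
    # Posterior precision: H = I + W_obs^T W_obs / s2
    H = [[float(j == k) + sum(W[i][j] * W[i][k] for i in obs) / s2 for k in range(d)]
         for j in range(d)]
    cov = invert(H)
    # Posterior mean: cov @ W_obs^T (y_obs - b_obs) / s2
    rhs = [sum(W[i][j] * (y[i] - b[i]) for i in obs) / s2 for j in range(d)]
    mean = [sum(cov[j][k] * rhs[k] for k in range(d)) for j in range(d)]
    return mean, cov


def reconstruct(W: Matrix, b: Sequence[float], mean: Sequence[float],
                cov: Matrix) -> Tuple[List[float], List[float]]:
    """Push the latent posterior to data space: per-pixel mean and variance of W z + b,
    including pixels that were never observed (inpainting with uncertainty)."""
    n, d = len(W), len(mean)
    x_mean = [sum(W[i][j] * mean[j] for j in range(d)) + b[i] for i in range(n)]
    x_var = [sum(W[i][j] * cov[j][k] * W[i][k] for j in range(d) for k in range(d))
             for i in range(n)]
    return x_mean, x_var


if __name__ == "__main__":
    W = [[1.0], [2.0], [3.0]]   # hypothetical 3-pixel "images" generated from a 1-D latent
    b = [0.0, 0.0, 0.0]
    y = [1.0, 2.0, 0.0]         # third pixel is missing; its value is ignored
    mask = [True, True, False]
    mean, cov = latent_posterior(W, b, y, mask, noise_std=1.0)
    x_mean, x_var = reconstruct(W, b, mean, cov)
    print(x_mean[2], x_var[2])  # inpainted pixel: ≈ 2.5 with variance ≈ 1.5
```

The missing pixel receives the largest variance because nothing constrains it directly; its estimate comes only through the latent posterior, which is the kind of uncertainty the abstract refers to.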
