Vadim Arzamasov

Adversarial Subspace Generation for Outlier Detection in High-Dimensional Data

Apr 10, 2025

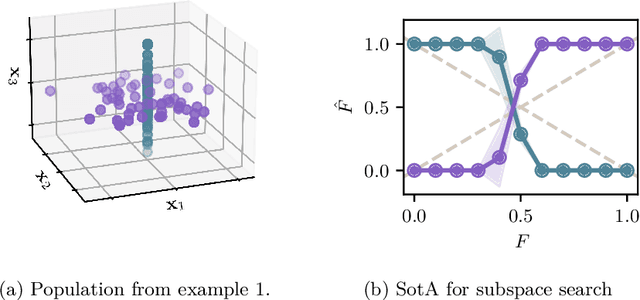

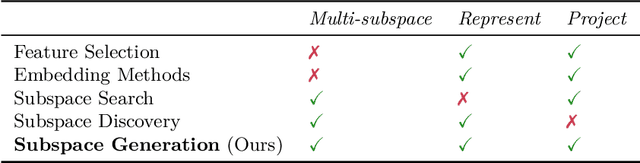

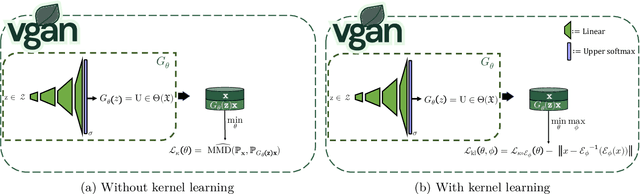

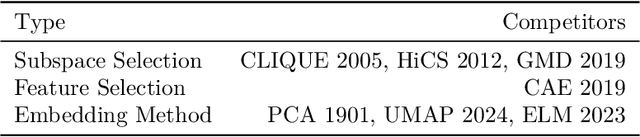

Abstract: Outlier detection in high-dimensional tabular data is challenging since data is often distributed across multiple lower-dimensional subspaces -- a phenomenon known as the Multiple Views effect (MV). This effect led to a large body of research focused on mining such subspaces, known as subspace selection. However, as the precise nature of the MV effect was not well understood, traditional methods had to rely on heuristic-driven search schemes that struggle to accurately capture the true structure of the data. Properly identifying these subspaces is critical for unsupervised tasks such as outlier detection or clustering, where misrepresenting the underlying data structure can hinder performance. We introduce Myopic Subspace Theory (MST), a new theoretical framework that mathematically formulates the Multiple Views effect and writes subspace selection as a stochastic optimization problem. Based on MST, we introduce V-GAN, a generative method trained to solve this optimization problem. This approach avoids any exhaustive search over the feature space while ensuring that the intrinsic data structure is preserved. Experiments on 42 real-world datasets show that using V-GAN subspaces to build ensemble methods leads to a significant increase in one-class classification performance compared to existing subspace selection, feature selection, and embedding methods. Further experiments on synthetic data show that V-GAN identifies subspaces more accurately while scaling better than other relevant subspace selection methods. These results confirm the theoretical guarantees of our approach and also highlight its practical viability in high-dimensional settings.
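To make the idea of a subspace ensemble concrete, here is a minimal Python sketch. It is not V-GAN: the subspaces are hand-picked rather than learned, and a plain k-nearest-neighbor distance stands in for the base detector. The function names `knn_distance` and `subspace_ensemble_scores` and the toy data are assumptions made for illustration. The planted outlier has ordinary values in every single feature and only stands out in the joint view of features 0 and 1, which is the kind of situation the Multiple Views effect describes.

```python
import math

def knn_distance(points, index, k=1):
    """Distance from points[index] to its k-th nearest other point."""
    dists = sorted(
        math.dist(points[index], other)
        for j, other in enumerate(points) if j != index
    )
    return dists[k - 1]

def subspace_ensemble_scores(data, subspaces, k=1):
    """Average the k-NN distance score of every point over the given subspaces."""
    scores = [0.0] * len(data)
    for dims in subspaces:
        # Project every row onto the features of this subspace.
        projected = [tuple(row[d] for d in dims) for row in data]
        for i in range(len(data)):
            scores[i] += knn_distance(projected, i, k) / len(subspaces)
    return scores

# Toy data: features 0 and 1 are perfectly correlated, feature 2 is unrelated.
inliers = [(i / 20, i / 20, ((i * 8) % 21) / 20) for i in range(21)]
# Each coordinate of the outlier lies in the normal range; only the
# combination of features 0 and 1 is unusual.
outlier = (0.2, 0.8, 0.5)
data = inliers + [outlier]

# Hand-picked subspaces (an assumption; V-GAN would learn these).
scores = subspace_ensemble_scores(data, subspaces=[(0, 1), (2,)])
top = max(range(len(data)), key=scores.__getitem__)
print("index of highest score:", top)
```

Averaging over subspaces is only one possible aggregation; taking the maximum score per point is another common choice.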

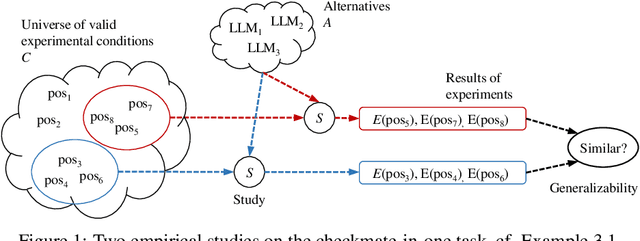

Generalizability of experimental studies

Jun 25, 2024

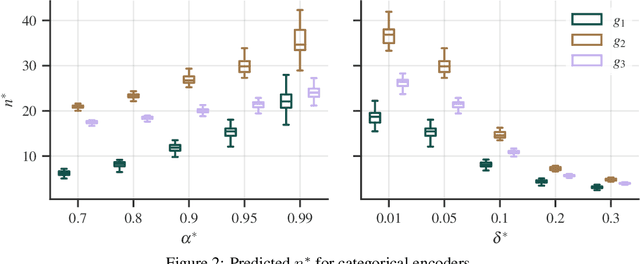

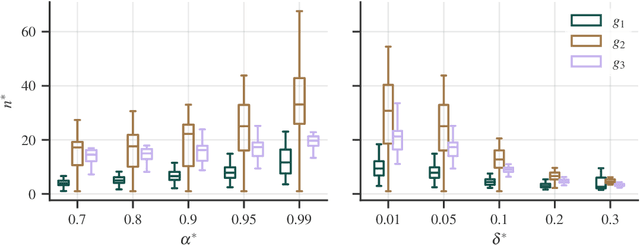

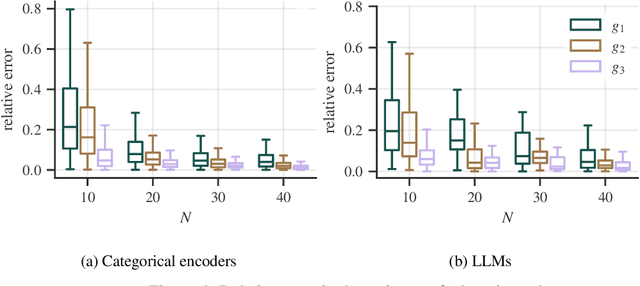

Abstract: Experimental studies are a cornerstone of machine learning (ML) research. A common, but often implicit, assumption is that the results of a study will generalize beyond the study itself, e.g., to new data. That is, there is a high probability that repeating the study under different conditions will yield similar results. Despite the importance of the concept, the problem of measuring generalizability remains open. This is probably due to the lack of a mathematical formalization of experimental studies. In this paper, we propose such a formalization and develop a quantifiable notion of generalizability. This notion allows one to explore the generalizability of existing studies and to estimate the number of experiments needed to achieve the generalizability of new studies. To demonstrate its usefulness, we apply it to two recently published benchmarks to discern generalizable and non-generalizable results. We also publish a Python module that allows our analysis to be repeated for other experimental studies.

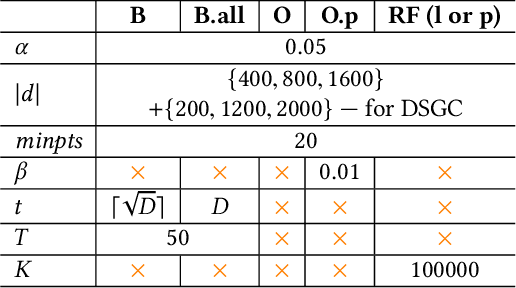

Generative Subspace Adversarial Active Learning for Outlier Detection in Multiple Views of High-dimensional Data

Apr 20, 2024

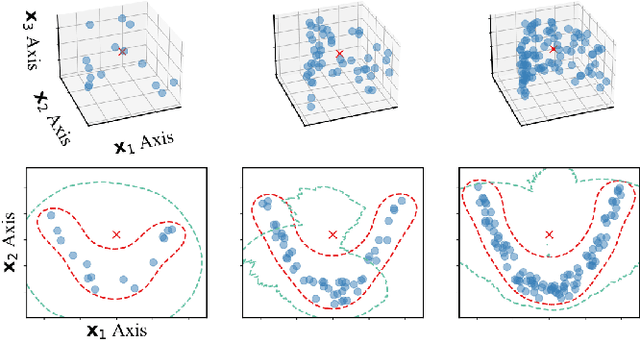

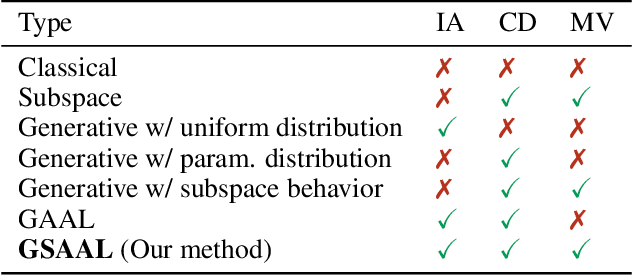

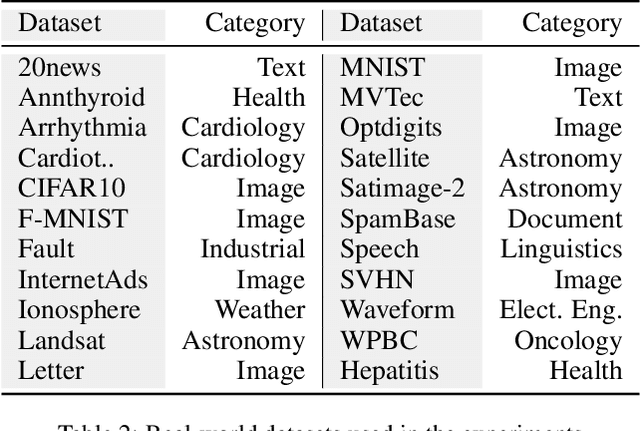

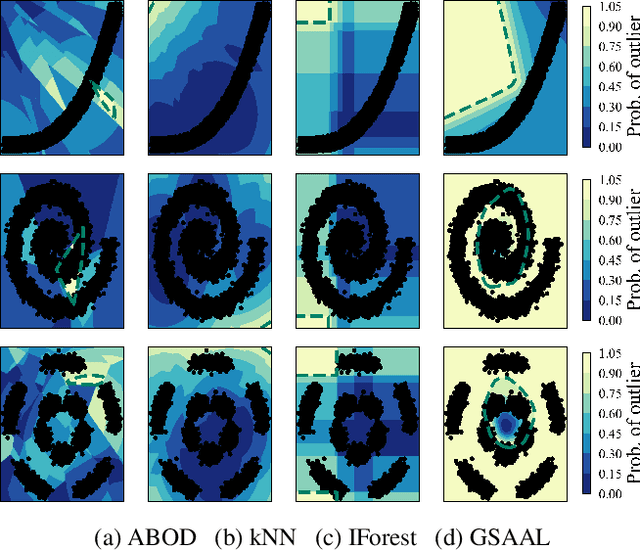

Abstract: Outlier detection in high-dimensional tabular data is an important task in data mining, essential for many downstream tasks and applications. Existing unsupervised outlier detection algorithms face one or more problems, including inlier assumption (IA), curse of dimensionality (CD), and multiple views (MV). To address these issues, we introduce Generative Subspace Adversarial Active Learning (GSAAL), a novel approach that uses a Generative Adversarial Network with multiple adversaries. These adversaries learn the marginal class probability functions over different data subspaces, while a single generator in the full space models the entire distribution of the inlier class. GSAAL is specifically designed to address the MV limitation while also handling the IA and CD, being the only method to do so. We provide a comprehensive mathematical formulation of MV, convergence guarantees for the discriminators, and scalability results for GSAAL. Our extensive experiments demonstrate the effectiveness and scalability of GSAAL, highlighting its superior performance compared to other popular OD methods, especially in MV scenarios.

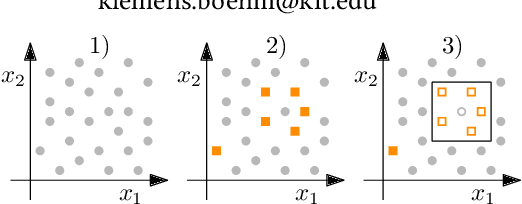

Efficient Generation of Hidden Outliers for Improved Outlier Detection

Feb 06, 2024

Abstract: Outlier generation is a popular technique used for solving important outlier detection tasks. Generating outliers with realistic behavior is challenging. Popular existing methods tend to disregard the 'multiple views' property of outliers in high-dimensional spaces. The only existing method accounting for this property falls short in efficiency and effectiveness. We propose BISECT, a new outlier generation method that creates realistic outliers mimicking said property. To do so, BISECT employs a novel proposition introduced in this article stating how to efficiently generate said realistic outliers. Our method has better guarantees and complexity than the current methodology for recreating 'multiple views'. We use the synthetic outliers generated by BISECT to effectively enhance outlier detection in diverse datasets, for multiple use cases. For instance, oversampling with BISECT reduced the error by up to a factor of 3 when compared with the baselines.

A benchmark of categorical encoders for binary classification

Jul 19, 2023

Abstract: Categorical encoders transform categorical features into numerical representations that are indispensable for a wide range of machine learning models. Existing encoder benchmark studies lack generalizability because of their limited choice of (1) encoders, (2) experimental factors, and (3) datasets. Additionally, inconsistencies arise from the adoption of varying aggregation strategies. This paper is the most comprehensive benchmark of categorical encoders to date, including an extensive evaluation of 32 configurations of encoders from diverse families, with 36 combinations of experimental factors, and on 50 datasets. The study shows the profound influence of dataset selection, experimental factors, and aggregation strategies on the benchmark's conclusions -- aspects disregarded in previous encoder benchmarks.
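For readers unfamiliar with categorical encoders, the following Python sketch shows two widely used families: one-hot encoding and a smoothed target (mean) encoding for a binary label. It does not reproduce any of the 32 encoder configurations of the benchmark; the function names, the `smoothing` parameter, and the toy data are assumptions chosen for illustration.

```python
from collections import defaultdict

def one_hot_encode(values):
    """Map each category to an indicator vector over the sorted categories."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return rows, categories

def target_encode(values, targets, smoothing=1.0):
    """Replace each category by a smoothed mean of the binary target.

    The category mean is shrunk toward the global mean (the prior), so that
    rare categories do not receive extreme values.
    """
    prior = sum(targets) / len(targets)
    sums, counts = defaultdict(float), defaultdict(int)
    for v, y in zip(values, targets):
        sums[v] += y
        counts[v] += 1
    mapping = {
        c: (sums[c] + smoothing * prior) / (counts[c] + smoothing)
        for c in counts
    }
    return [mapping[v] for v in values], mapping

colors = ["red", "blue", "red", "green", "blue", "red"]
labels = [1, 0, 1, 0, 1, 1]

one_hot_rows, categories = one_hot_encode(colors)
encoded, mapping = target_encode(colors, labels)
print(categories)
print(mapping)
```

Note that fitting a target encoder on the same rows it later encodes leaks label information; in practice the mapping is usually fitted on training folds only.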

Adaptive Bernstein Change Detector for High-Dimensional Data Streams

Jun 22, 2023

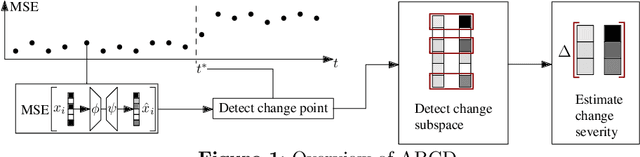

Abstract: Change detection is of fundamental importance when analyzing data streams. Detecting changes both quickly and accurately enables monitoring and prediction systems to react, e.g., by issuing an alarm or by updating a learning algorithm. However, detecting changes is challenging when observations are high-dimensional. In high-dimensional data, change detectors should not only be able to identify when changes happen, but also in which subspace they occur. Ideally, one should also quantify how severe they are. Our approach, ABCD, has these properties. ABCD learns an encoder-decoder model and monitors its accuracy over a window of adaptive size. ABCD derives a change score based on Bernstein's inequality to detect deviations in terms of accuracy, which indicate changes. Our experiments demonstrate that ABCD outperforms its best competitor by at least 8% and up to 23% in F1-score on average. It also accurately identifies the subspace in which a change occurs and provides a severity measure that correlates with the ground truth.
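As a rough illustration of how Bernstein's inequality can turn a gap between two window means into a change score, consider the Python sketch below. It is not the ABCD score itself. It assumes reconstruction errors lie in [0, 1], plugs the sample variance in for the true variance, and splits the gap evenly between the two sub-windows via a union bound. The names `bernstein_tail` and `no_change_bound` are hypothetical.

```python
import math
from statistics import fmean, pvariance

def bernstein_tail(eps, n, variance, bound):
    """Two-sided Bernstein bound on P(|sample mean - true mean| >= eps).

    Holds for n independent variables with the given variance whose
    deviation from the mean is at most `bound` in absolute value.
    """
    if eps <= 0:
        return 1.0
    exponent = -n * eps ** 2 / (2.0 * variance + 2.0 * bound * eps / 3.0)
    return min(1.0, 2.0 * math.exp(exponent))

def no_change_bound(errors, split, bound=1.0):
    """Upper-bound the probability of the observed mean gap if nothing changed.

    If both sub-windows share one true mean, a gap of eps between their
    sample means requires at least one of them to deviate by eps / 2.
    The sample variance is used in place of the unknown true variance,
    which is an approximation.
    """
    left, right = errors[:split], errors[split:]
    eps = abs(fmean(left) - fmean(right))
    p_left = bernstein_tail(eps / 2, len(left), pvariance(left), bound)
    p_right = bernstein_tail(eps / 2, len(right), pvariance(right), bound)
    return min(1.0, p_left + p_right)

# Hypothetical reconstruction errors of an encoder-decoder model.
stable = [0.10, 0.12, 0.09, 0.11] * 25
shifted = stable[:50] + [e + 0.5 for e in stable[:50]]

p_stable = no_change_bound(stable, split=50)
p_shifted = no_change_bound(shifted, split=50)
print(p_stable, p_shifted)
```

A small bound means the observed gap would be very unlikely without a change, so a detector could raise an alarm once the bound drops below a chosen threshold.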

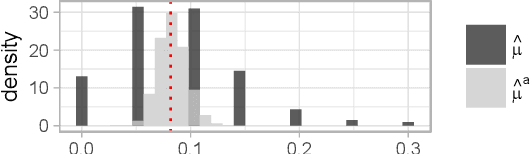

Budgeted Multi-Armed Bandits with Asymmetric Confidence Intervals

Jun 12, 2023

Abstract: We study the stochastic Budgeted Multi-Armed Bandit (MAB) problem, where a player chooses from $K$ arms with unknown expected rewards and costs. The goal is to maximize the total reward under a budget constraint. A player thus seeks to choose the arm with the highest reward-cost ratio as often as possible. Current state-of-the-art policies for this problem have several issues, which we illustrate. To overcome them, we propose a new upper confidence bound (UCB) sampling policy, $\omega$-UCB, that uses asymmetric confidence intervals. These intervals scale with the distance between the sample mean and the bounds of a random variable, yielding a more accurate and tight estimation of the reward-cost ratio compared to our competitors. We show that our approach has logarithmic regret and consistently outperforms existing policies in synthetic and real settings.
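The budgeted setting can be sketched in Python with a generic ratio-based UCB policy. This is not $\omega$-UCB: it uses symmetric Hoeffding-style confidence radii rather than the asymmetric intervals the abstract describes. Rewards and costs are assumed to be Bernoulli, and the names `ratio_index`, `run_budgeted_ucb`, and `min_cost` are hypothetical.

```python
import math
import random

def ratio_index(reward_mean, cost_mean, pulls, t, min_cost=1e-3):
    """Optimistic reward-cost ratio: upper reward bound over lower cost bound."""
    radius = math.sqrt(2.0 * math.log(t) / pulls)
    upper_reward = min(1.0, reward_mean + radius)
    # Clip the cost bound away from zero to keep the ratio finite.
    lower_cost = max(min_cost, cost_mean - radius)
    return upper_reward / lower_cost

def run_budgeted_ucb(arms, budget, seed=0):
    """Play Bernoulli arms given as (reward_prob, cost_prob) until the budget is spent."""
    rng = random.Random(seed)
    k = len(arms)
    pulls = [0] * k
    reward_sum = [0.0] * k
    cost_sum = [0.0] * k
    spent, t = 0.0, 0
    while spent < budget:
        t += 1
        if t <= k:
            arm = t - 1  # play every arm once to initialise the estimates
        else:
            arm = max(range(k), key=lambda a: ratio_index(
                reward_sum[a] / pulls[a], cost_sum[a] / pulls[a], pulls[a], t))
        reward_prob, cost_prob = arms[arm]
        reward_sum[arm] += 1.0 if rng.random() < reward_prob else 0.0
        cost = 1.0 if rng.random() < cost_prob else 0.0
        cost_sum[arm] += cost
        spent += cost
        pulls[arm] += 1
    return pulls, sum(reward_sum)

# Arm 0 has a much better reward-cost ratio than arm 1.
pulls, total_reward = run_budgeted_ucb([(0.9, 0.2), (0.3, 0.8)], budget=200)
print(pulls, total_reward)
```

With symmetric radii the cost bound is clipped for a long time when the mean cost is small, which makes the index overly optimistic in early rounds. This is one kind of looseness that tighter intervals aim to reduce.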

Pedagogical Rule Extraction for Learning Interpretable Models

Dec 25, 2021

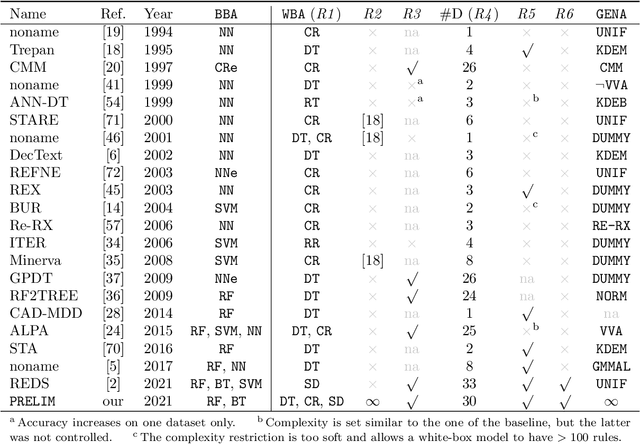

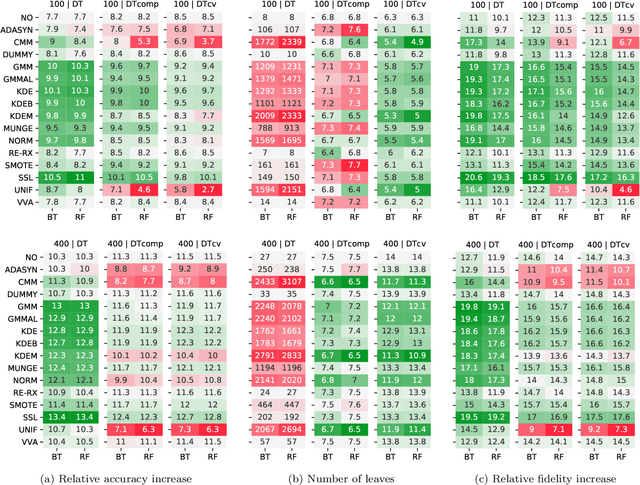

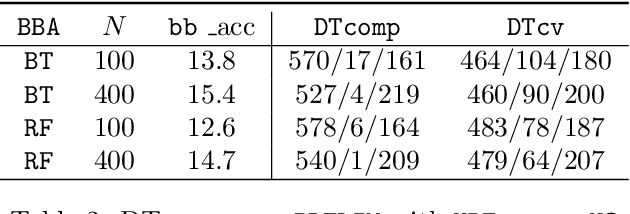

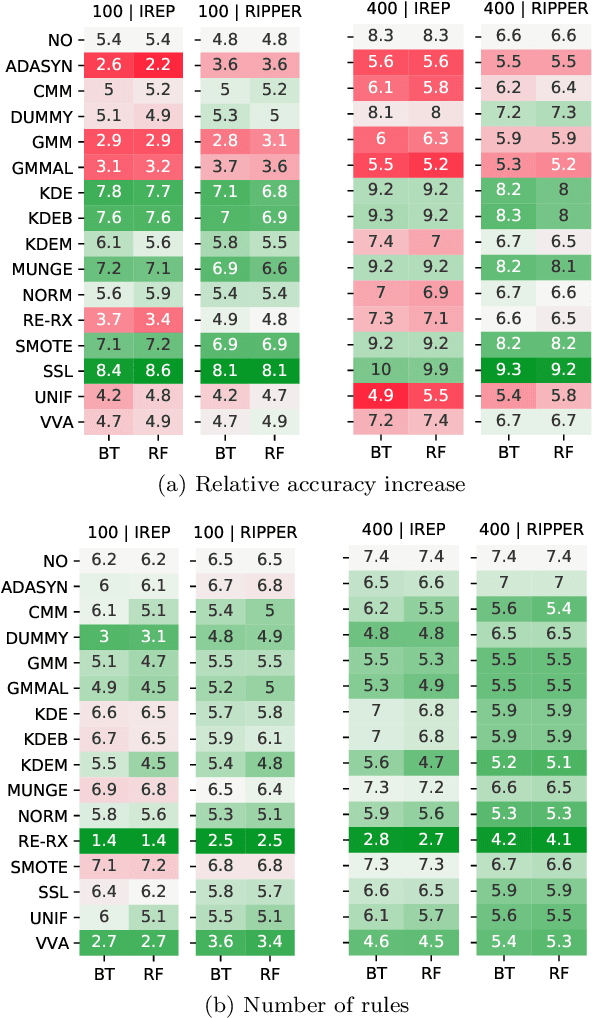

Abstract: Machine-learning models are ubiquitous. In some domains, for instance, in medicine, the models' predictions must be interpretable. Decision trees, classification rules, and subgroup discovery are three broad categories of supervised machine-learning models presenting knowledge in the form of interpretable rules. The accuracy of these models learned from small datasets is usually low. Obtaining larger datasets is often hard to impossible. We propose a framework dubbed PRELIM to learn better rules from small data. It augments data using statistical models and uses the augmented data to learn a rule-based model. In our extensive experiments, we identified PRELIM configurations that outperform the state of the art.

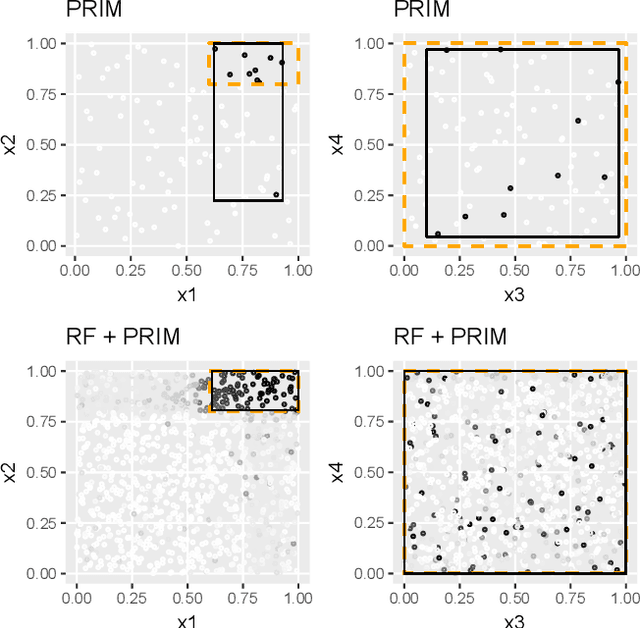

Scenario Discovery via Rule Extraction

Oct 03, 2019

Abstract: Scenario discovery is the process of finding areas of interest, commonly referred to as scenarios, in data spaces resulting from simulations. For instance, one might search for conditions - which are inputs of the simulation model - where the system under investigation is unstable. A commonly used algorithm for scenario discovery is PRIM. It yields scenarios in the form of hyper-rectangles which are human-comprehensible. When the simulation model has many inputs, and the simulations are computationally expensive, PRIM may not produce good results, given the affordable volume of data. So we propose a new procedure for scenario discovery - we train an intermediate statistical model which generalizes fast, and use it to label (a lot of) data for PRIM. We provide the statistical intuition behind our idea. Our experimental study shows that this method is much better than PRIM itself. Specifically, our method reduces the number of simulation runs necessary by 75% on average.
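The two-step procedure can be sketched in Python under strong simplifications. The simulator `simulate` is a made-up toy, a 1-nearest-neighbor lookup stands in for the intermediate statistical model, and `peel` is a simplified greedy peeling routine in the spirit of PRIM (it trims a fixed share of the box width rather than a quantile of the points). All names and parameters here are assumptions.

```python
import random

def simulate(x):
    """Hypothetical expensive simulation: unstable (1) in one corner of the input space."""
    return 1 if x[0] > 0.6 and x[1] < 0.4 else 0

def nearest_neighbor_label(x, labeled):
    """Metamodel stand-in: copy the label of the closest simulated point."""
    closest = min(
        labeled,
        key=lambda item: (item[0][0] - x[0]) ** 2 + (item[0][1] - x[1]) ** 2,
    )
    return closest[1]

def density(points, box):
    """Share of label 1 among the points inside the box, and the point count."""
    inside = [y for x, y in points
              if all(lo <= v <= hi for v, (lo, hi) in zip(x, box))]
    return (sum(inside) / len(inside) if inside else 0.0), len(inside)

def peel(points, alpha=0.1, min_support=20):
    """Greedy peeling: shrink the box while the share of label 1 grows."""
    box = [(0.0, 1.0), (0.0, 1.0)]
    best, _ = density(points, box)
    while True:
        candidates = []
        for d, (lo, hi) in enumerate(box):
            step = alpha * (hi - lo)
            # Try trimming the lower or the upper end of dimension d.
            for new_range in ((lo + step, hi), (lo, hi - step)):
                trial = box[:d] + [new_range] + box[d + 1:]
                dens, support = density(points, trial)
                if support >= min_support:
                    candidates.append((dens, trial))
        if not candidates:
            return box, best
        dens, trial = max(candidates, key=lambda c: c[0])
        if dens <= best:
            return box, best
        box, best = trial, dens

rng = random.Random(0)
# Step 1: a small number of affordable simulation runs.
labeled = [(x, simulate(x)) for x in
           ((rng.random(), rng.random()) for _ in range(40))]
# Step 2: the metamodel labels many cheap new points.
dense = [(rng.random(), rng.random()) for _ in range(2000)]
relabeled = [(x, nearest_neighbor_label(x, labeled)) for x in dense]
# Step 3: run the peeling on the large, metamodel-labeled sample.
initial_density, _ = density(relabeled, [(0.0, 1.0), (0.0, 1.0)])
box, box_density = peel(relabeled)
print(box, box_density)
```

The resulting box is a hyper-rectangle, so it can be read directly as a rule over the inputs, for example a lower bound on the first input and an upper bound on the second.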
