Ulpu Remes

Amortized Probabilistic Conditioning for Optimization, Simulation and Inference

Oct 20, 2024

Abstract:Amortized meta-learning methods based on pre-training have propelled fields like natural language processing and vision. Transformer-based neural processes and their variants are leading models for probabilistic meta-learning with a tractable objective. Often trained on synthetic data, these models implicitly capture essential latent information in the data-generation process. However, existing methods do not allow users to flexibly inject (condition on) and extract (predict) this probabilistic latent information at runtime, which is key to many tasks. We introduce the Amortized Conditioning Engine (ACE), a new transformer-based meta-learning model that explicitly represents latent variables of interest. ACE affords conditioning on both observed data and interpretable latent variables, the inclusion of priors at runtime, and outputs predictive distributions for discrete and continuous data and latents. We show ACE's modeling flexibility and performance in diverse tasks such as image completion and classification, Bayesian optimization, and simulation-based inference.

Practical Equivariances via Relational Conditional Neural Processes

Jun 19, 2023

Abstract:Conditional Neural Processes (CNPs) are a class of metalearning models popular for combining the runtime efficiency of amortized inference with reliable uncertainty quantification. Many relevant machine learning tasks, such as spatio-temporal modeling, Bayesian Optimization and continuous control, contain equivariances -- for example to translation -- which the model can exploit for maximal performance. However, prior attempts to include equivariances in CNPs do not scale effectively beyond two input dimensions. In this work, we propose Relational Conditional Neural Processes (RCNPs), an effective approach to incorporate equivariances into any neural process model. Our proposed method extends the applicability and impact of equivariant neural processes to higher dimensions. We empirically demonstrate the competitive performance of RCNPs on a large array of tasks naturally containing equivariances.

Likelihood-free Model Choice for Simulator-based Models with the Jensen--Shannon Divergence

Jun 08, 2022

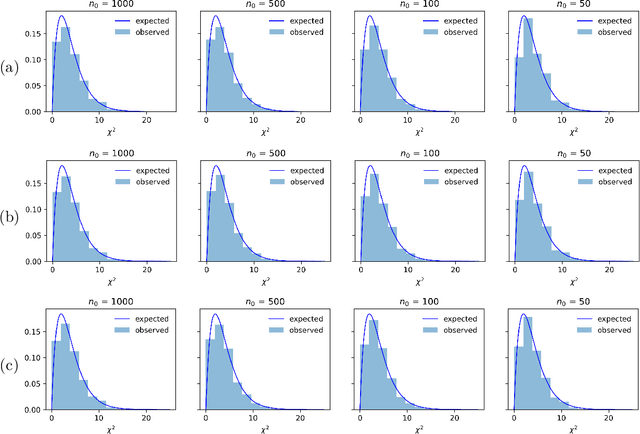

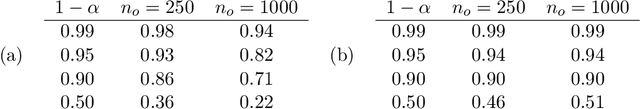

Abstract:Choosing the appropriate structure and parametric dimension of a model in the light of data has a rich history in statistical research. The first seminal approaches were developed in the 1970s, such as Akaike's and Schwarz's model scoring criteria, which were inspired by information theory and embodied the rationale known as Occam's razor. After those pioneering works, model choice was quickly established as its own field of research, gaining considerable attention in both computer science and statistics. However, to date, there have been limited attempts to derive scoring criteria for simulator-based models lacking a likelihood expression. Bayes factors have been considered for such models, but arguments have been made both for and against their use, and around issues related to their consistency. Here we use the asymptotic properties of the Jensen-Shannon divergence (JSD) to derive a consistent model scoring criterion for the likelihood-free setting, called JSD-Razor. We analyze the relationships of JSD-Razor with established scoring criteria for the likelihood-based approach, and we demonstrate the favorable properties of our criterion using both synthetic and real modeling examples.

Nonparametric likelihood-free inference with Jensen-Shannon divergence for simulator-based models with categorical output

May 26, 2022

Abstract:Likelihood-free inference for simulator-based statistical models has recently attracted a surge of interest, both in the machine learning and statistics communities. The primary focus of these research fields has been to approximate the posterior distribution of model parameters, either by various types of Monte Carlo sampling algorithms or by deep neural network-based surrogate models. Frequentist inference for simulator-based models has received much less attention to date, even though it would be particularly amenable to applications with big data, where implicit asymptotic approximation of the likelihood is expected to be accurate and can leverage computationally efficient strategies. Here we derive a set of theoretical results to enable estimation, hypothesis testing and construction of confidence intervals for model parameters using asymptotic properties of the Jensen-Shannon divergence. Such asymptotic approximation offers a rapid alternative to more computation-intensive approaches and can be attractive for diverse applications of simulator-based models.
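
For readers unfamiliar with the quantity the two abstracts above rely on, the following is a minimal sketch of the standard Jensen-Shannon divergence between two categorical distributions, JSD(P, Q) = ½ KL(P ‖ M) + ½ KL(Q ‖ M) with M = ½ (P + Q). It is not the papers' estimator or test procedure; the function names `jensen_shannon_divergence` and `empirical_distribution` are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def jensen_shannon_divergence(p, q):
    """JSD (natural log) between two discrete distributions.

    p and q are equal-length lists of probabilities over the same categories.
    """
    def kl(a, b):
        # Terms with a_i == 0 contribute zero by convention.
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    # Mixture distribution; m_i > 0 wherever p_i > 0 or q_i > 0.
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def empirical_distribution(samples, categories):
    """Relative frequency of each category in a list of categorical samples."""
    counts = Counter(samples)
    n = len(samples)
    return [counts[c] / n for c in categories]


# Illustrative data only: compare observed categorical outputs with
# outputs from a (hypothetical) simulator run.
categories = ["a", "b", "c"]
observed = ["a", "b", "a", "c", "a", "b"]
simulated = ["a", "a", "b", "c", "c", "b"]
p_obs = empirical_distribution(observed, categories)
p_sim = empirical_distribution(simulated, categories)
divergence = jensen_shannon_divergence(p_obs, p_sim)
```

With the natural logarithm the divergence is bounded between 0 (identical distributions) and log 2 (distributions with disjoint support), which makes it convenient as a discrepancy between observed and simulated category frequencies.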
