Tshilidzi Marwala

The Use of Synthetic Data to Train AI Models: Opportunities and Risks for Sustainable Development

Aug 31, 2023

Abstract:In the current data-driven era, synthetic data, artificially generated data that resembles the characteristics of real-world data without containing actual personal information, is gaining prominence. This is due to its potential to safeguard privacy, increase the availability of data for research, and reduce bias in machine learning models. This paper investigates the policies governing the creation, utilization, and dissemination of synthetic data. Synthetic data can be a powerful instrument for protecting the privacy of individuals, but it also presents challenges, such as ensuring its quality and authenticity. A well-crafted synthetic data policy must strike a balance between privacy concerns and the utility of data, ensuring that it can be utilized effectively without compromising ethical or legal standards. Organizations and institutions must develop standardized guidelines and best practices in order to capitalize on the benefits of synthetic data while addressing its inherent challenges.

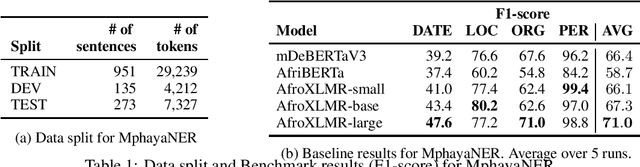

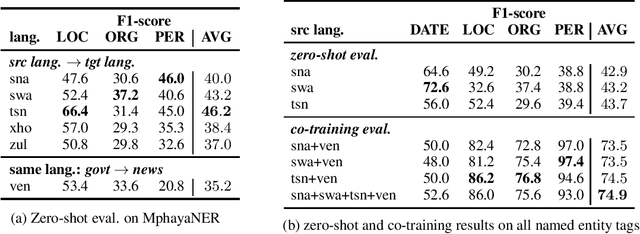

MphayaNER: Named Entity Recognition for Tshivenda

Apr 08, 2023

Abstract:Named Entity Recognition (NER) plays a vital role in various Natural Language Processing tasks such as information retrieval, text classification, and question answering. However, NER can be challenging, especially in low-resource languages with limited annotated datasets and tools. This paper adds to the effort of addressing these challenges by introducing MphayaNER, the first Tshivenda NER corpus in the news domain. We establish NER baselines by fine-tuning state-of-the-art models on MphayaNER. The study also explores zero-shot transfer between Tshivenda and other related Bantu languages, with chiShona and Kiswahili showing the best results. Augmenting MphayaNER with chiShona data was also found to improve model performance significantly. Both MphayaNER and the baseline models are made publicly available.

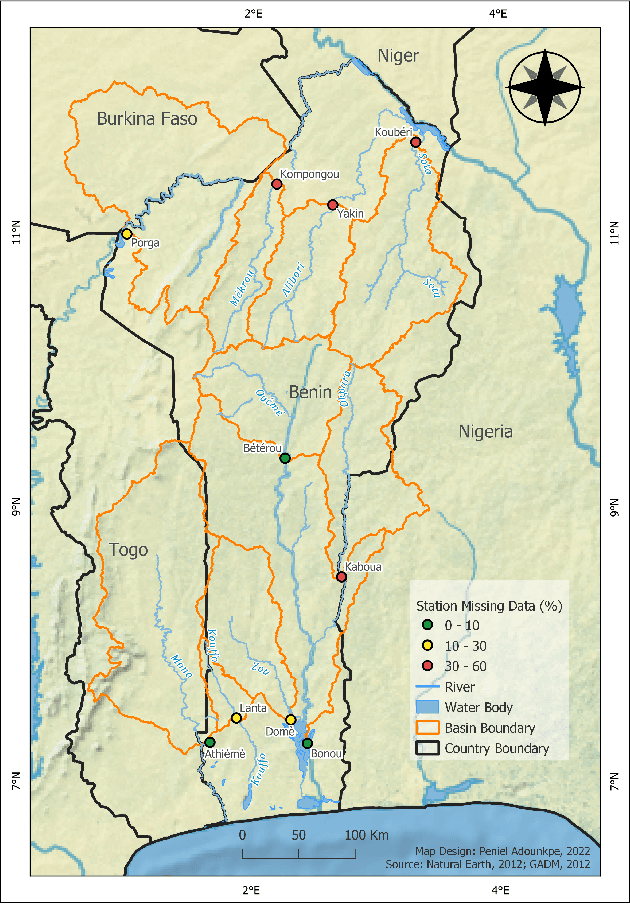

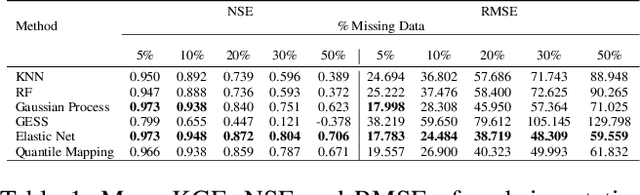

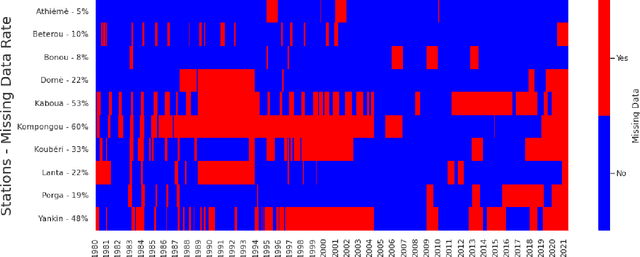

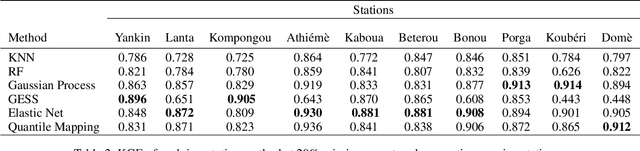

Imputation of Missing Streamflow Data at Multiple Gauging Stations in Benin Republic

Nov 17, 2022

Abstract:Streamflow observation data are vital for flood monitoring and for agricultural and settlement planning. However, such streamflow data are commonly plagued with missing observations due to various causes such as harsh environmental conditions and constrained operational resources. This problem is often more pervasive in under-resourced areas such as Sub-Saharan Africa. In this work, we reconstruct streamflow time series data through bias correction of the GEOGloWS ECMWF streamflow service (GESS) forecasts at ten river gauging stations in Benin Republic. We perform bias correction by fitting Quantile Mapping, Gaussian Process, and Elastic Net regression in a constrained training period. We show by simulating missingness in a testing period that GESS forecasts have a significant bias that results in low predictive skill over the ten Beninese stations. Our findings suggest that overall bias correction by Elastic Net and Gaussian Process regression achieves superior skill relative to traditional imputation by Random Forest, k-Nearest Neighbour, and GESS lookup. The findings of this work provide a basis for integrating global GESS streamflow data into operational early-warning decision-making systems (e.g., flood alert) in countries vulnerable to drought and flooding due to extreme weather events.
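To make the bias-correction idea concrete, the following is a minimal sketch of empirical quantile mapping, one of the three methods named in the abstract. It is not the authors' implementation: the function name `quantile_map`, its arguments, and the toy data are assumptions chosen for illustration, and the sketch assumes the training forecasts contain no long runs of tied values.

```python
import numpy as np

def quantile_map(forecast_train, observed_train, forecast_new):
    """Empirical quantile mapping (illustrative sketch, hypothetical helper).

    Each new forecast is located at its quantile within the training
    forecasts, then replaced by the observed value at that same quantile.
    """
    forecast_sorted = np.sort(np.asarray(forecast_train, dtype=float))
    observed_sorted = np.sort(np.asarray(observed_train, dtype=float))
    # Evenly spaced probabilities attached to the sorted training samples.
    probs_forecast = np.linspace(0.0, 1.0, forecast_sorted.size)
    probs_observed = np.linspace(0.0, 1.0, observed_sorted.size)
    # Step 1: forecast value -> quantile under the forecast distribution.
    quantiles = np.interp(forecast_new, forecast_sorted, probs_forecast)
    # Step 2: quantile -> value under the observed distribution.
    return np.interp(quantiles, probs_observed, observed_sorted)

# Toy data (made up): the forecast overestimates flow as 2 * observed + 1.
observed = np.arange(0.0, 11.0)
forecast = 2.0 * observed + 1.0
corrected = quantile_map(forecast, observed, [1.0, 11.0, 21.0])
```

On this toy example the systematic bias is monotone, so the mapping recovers the underlying observed values; real streamflow series would need a held-out period to check skill, as the abstract describes.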

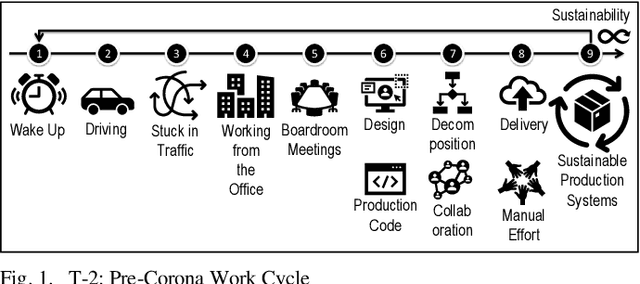

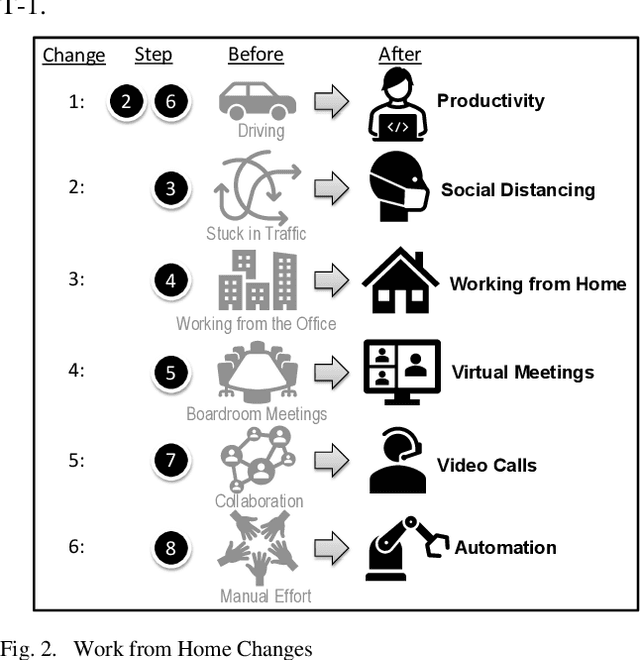

Nano Version Control and Robots of Robots: Data Driven, Regenerative Production Code

Oct 10, 2021

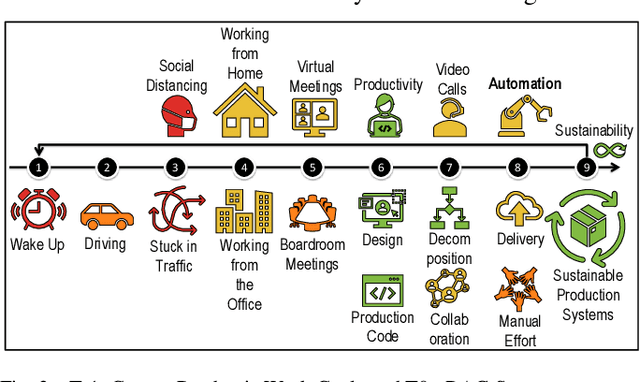

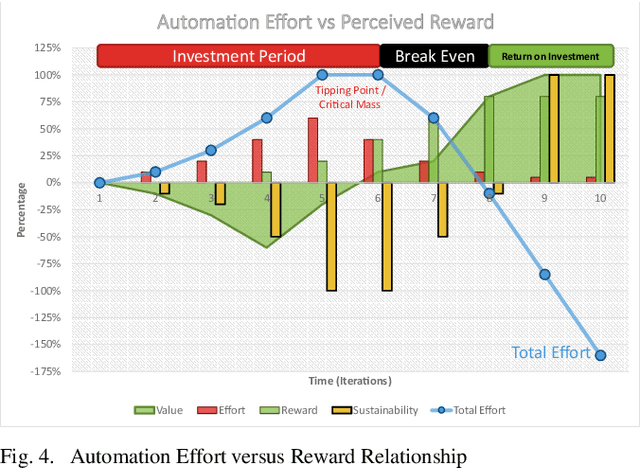

Abstract:A reflection on the Corona pandemic highlights the need for more sustainable production systems using automation. The goal is to retain automation of repetitive tasks while allowing complex parts to come together. We recognize how fragile traditional automation is and how hard it is to create. We introduce a method which converts one really hard problem, producing sustainable production code, into three simpler problems: data, patterns and working prototypes. We use developer seniority as a metric to measure whether the proposed method is easier. By using agent-based simulation and NanoVC repos for agent arbitration, we are able to create a simulated environment where patterns developed by people are used to transform working prototypes into templates that data can be fed through to create the robots that create the production code. Having two layers of robots allows early implementation choices to be replaced as we gather more feedback from the working system. Several benefits of this approach have been discovered, with the most notable being that the Robot of Robots encodes a legacy of the person that designed it in the form of the 3 ingredients (data, patterns and working prototypes). This method allows us to achieve our goal of reducing the fragility of the production code while removing the difficulty of getting there.

Antithetic Riemannian Manifold And Quantum-Inspired Hamiltonian Monte Carlo

Jul 05, 2021

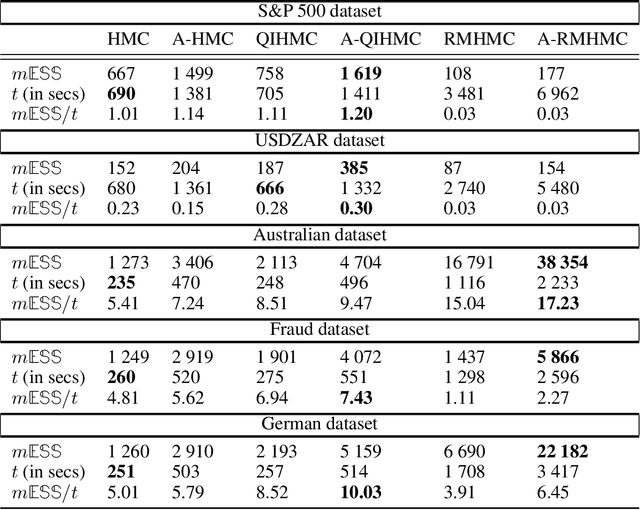

Abstract:Markov Chain Monte Carlo inference of target posterior distributions in machine learning is predominantly conducted via Hamiltonian Monte Carlo and its variants. This is due to the ability of Hamiltonian Monte Carlo based samplers to suppress random-walk behaviour. As with other Markov Chain Monte Carlo methods, Hamiltonian Monte Carlo produces auto-correlated samples, which result in high variance in the estimators and low effective sample size rates in the generated samples. Adding antithetic sampling to Hamiltonian Monte Carlo has been previously shown to produce higher effective sample rates compared to vanilla Hamiltonian Monte Carlo. In this paper, we present new algorithms which are antithetic versions of Riemannian Manifold Hamiltonian Monte Carlo and Quantum-Inspired Hamiltonian Monte Carlo. The Riemannian Manifold Hamiltonian Monte Carlo algorithm improves on Hamiltonian Monte Carlo by taking into account the local geometry of the target, which is beneficial for target densities that may exhibit strong correlations in the parameters. Quantum-Inspired Hamiltonian Monte Carlo is based on quantum particles that can have random mass. Quantum-Inspired Hamiltonian Monte Carlo uses a random mass matrix, which results in better sampling than Hamiltonian Monte Carlo on spiky and multi-modal distributions such as jump diffusion processes. The analysis is performed on jump diffusion processes using real-world financial market data, as well as on real-world benchmark classification tasks using Bayesian logistic regression.
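The variance-reduction idea behind antithetic sampling can be illustrated outside of Hamiltonian Monte Carlo. The sketch below uses plain Monte Carlo, not any of the samplers in the paper, to estimate E[exp(U)] for U uniform on (0, 1); the function name and the choice of integrand are assumptions made purely for illustration.

```python
import numpy as np

def plain_and_antithetic(n_pairs, rng):
    """Return per-pair estimates of E[exp(U)], U ~ Uniform(0, 1).

    plain: average of two independent draws.
    anti:  average of a draw and its mirrored (antithetic) partner 1 - U,
           which is negatively correlated with the original draw.
    """
    u = rng.random(n_pairs)
    v = rng.random(n_pairs)
    plain = 0.5 * (np.exp(u) + np.exp(v))
    anti = 0.5 * (np.exp(u) + np.exp(1.0 - u))
    return plain, anti

rng = np.random.default_rng(0)
plain, anti = plain_and_antithetic(10_000, rng)
# Both estimators target e - 1; the antithetic one has much lower variance.
plain_var, anti_var = plain.var(), anti.var()
```

Both estimators use the same number of function evaluations per pair. The negative correlation between exp(U) and exp(1 - U) is what shrinks the variance, and antithetic HMC variants aim for the same effect by coupling two chains.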

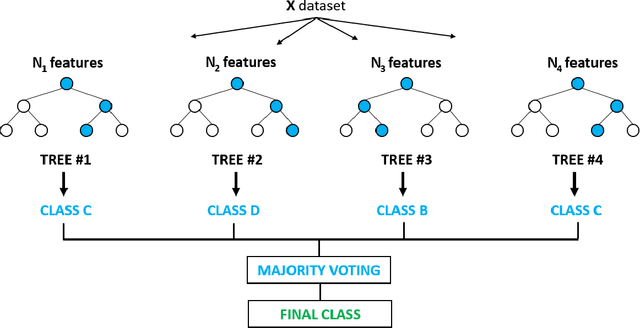

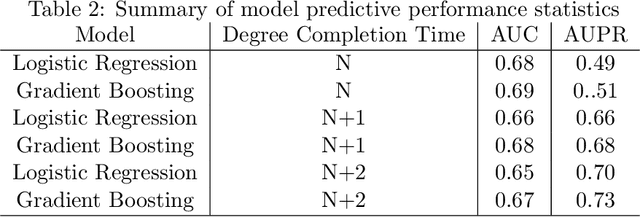

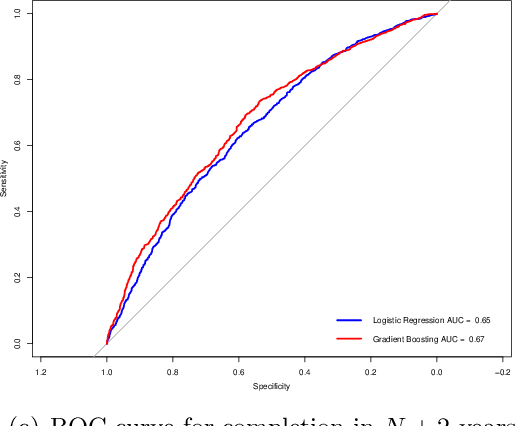

Predicting Higher Education Throughput in South Africa Using a Tree-Based Ensemble Technique

Jun 12, 2021

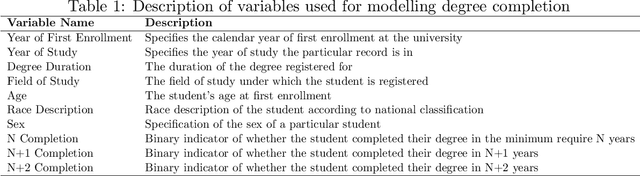

Abstract:We use gradient boosting machines and logistic regression to predict academic throughput at a South African university. The results highlight the significant influence of socio-economic factors and field of study as predictors of throughput. We further find that socio-economic factors become less of a predictor relative to the field of study as the time to completion increases. We provide recommendations on interventions to counteract the identified effects, which include academic, psychosocial and financial support.

Healing Products of Gaussian Processes

Feb 14, 2021

Abstract:Gaussian processes (GPs) are nonparametric Bayesian models that have been applied to regression and classification problems. One of the approaches to alleviate their cubic training cost is the use of local GP experts trained on subsets of the data. In particular, product-of-expert models combine the predictive distributions of local experts through a tractable product operation. While these expert models allow for massively distributed computation, their predictions typically suffer from erratic behaviour of the mean or uncalibrated uncertainty quantification. By calibrating predictions via a tempered softmax weighting, we provide a solution to these problems for multiple product-of-expert models, including the generalised product of experts and the robust Bayesian committee machine. Furthermore, we leverage the optimal transport literature and propose a new product-of-expert model that combines predictions of local experts by computing their Wasserstein barycenter, which can be applied to both regression and classification.
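As a rough illustration of how local expert predictions can be combined at a single test point, the sketch below implements two textbook rules for one-dimensional Gaussian predictions: a weighted (generalised) product of experts, and the 2-Wasserstein barycenter of Gaussians. The function names and the fixed weights are assumptions; the tempered softmax weighting proposed in the paper is not reproduced here.

```python
import numpy as np

def generalised_poe(means, variances, weights):
    """Weighted product of Gaussian experts at one test point.

    Precisions add (scaled by the weights) and the combined mean is the
    precision-weighted average of the expert means.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    weights = np.asarray(weights, dtype=float)
    precision = np.sum(weights / variances)
    mean = np.sum(weights * means / variances) / precision
    return mean, 1.0 / precision

def wasserstein_barycenter_1d(means, variances, weights):
    """2-Wasserstein barycenter of 1-D Gaussians.

    With weights normalised to sum to one, the barycenter is Gaussian with
    the weighted average of the means and of the standard deviations.
    """
    means = np.asarray(means, dtype=float)
    stds = np.sqrt(np.asarray(variances, dtype=float))
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return np.sum(w * means), np.sum(w * stds) ** 2

poe_mean, poe_var = generalised_poe([0.0, 2.0], [1.0, 1.0], [1.0, 1.0])
bary_mean, bary_var = wasserstein_barycenter_1d([0.0, 2.0], [1.0, 4.0], [1.0, 1.0])
```

Note the contrast: with unit weights the product rule always shrinks the variance as experts are added, which is one source of the miscalibrated uncertainty the abstract mentions, whereas the barycenter keeps the variance on the scale of the individual experts.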

An Automatic Relevance Determination Prior Bayesian Neural Network for Controlled Variable Selection

Jan 06, 2020

Abstract:We present an Automatic Relevance Determination prior Bayesian Neural Network (BNN-ARD) weight l2-norm measure as a feature importance statistic for the model-x knockoff filter. We show on both simulated data and the Norwegian wind farm dataset that the proposed feature importance statistic yields statistically significant improvements relative to similar feature importance measures in both variable selection power and predictive performance on a real-world dataset.

Relative Net Utility and the Saint Petersburg Paradox

Oct 24, 2019

Abstract:The famous St Petersburg Paradox shows that the theory of expected value does not capture the real-world economics of decision-making problems. Over the years, many economic theories were developed to resolve the paradox and explain the subjective utility of the expected outcomes and risk aversion. In this paper, we use the concept of the net utility to resolve the St Petersburg paradox. The principle of absolute rather than net utility does not work because it is a first-order approximation of some unknown utility function. Because the net utility concept is able to explain both behavioral economics and the St Petersburg paradox, it is deemed a universal approach to handling utility. Finally, this paper explores how an artificially intelligent (AI) agent will make choices and observes that if an AI agent uses the nominal utility approach it will see infinite reward, while if it uses the net utility approach it will see the limited reward that human beings see.
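The paradox itself can be checked numerically. The sketch below assumes the standard formulation of the game (a payoff of 2^k with probability 2^-k when the first head appears on toss k) and truncates it at a maximum number of rounds. The second function uses Bernoulli's classical logarithmic utility only as a familiar point of comparison; it is not the net utility defined in the paper, and both function names are hypothetical.

```python
import math

def truncated_expected_payoff(max_rounds):
    """Expected payoff of the game cut off after max_rounds tosses.

    Every round contributes 2**-k * 2**k = 1, so the sum grows without bound.
    """
    return sum((0.5 ** k) * (2 ** k) for k in range(1, max_rounds + 1))

def truncated_expected_log_utility(max_rounds):
    """Expected log-utility of the payoff (classical Bernoulli resolution).

    The terms k * log(2) / 2**k shrink fast enough for the sum to converge
    to 2 * log(2).
    """
    return sum((0.5 ** k) * math.log(2 ** k) for k in range(1, max_rounds + 1))

payoff_10 = truncated_expected_payoff(10)
payoff_50 = truncated_expected_payoff(50)
log_utility_60 = truncated_expected_log_utility(60)
```

The expected payoff equals the number of rounds allowed, which is the "infinite reward" an agent maximising nominal expected value would see, while a concave utility yields a finite valuation.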

Automatic Relevance Determination Bayesian Neural Networks for Credit Card Default Modelling

Jun 14, 2019

Abstract:Credit risk modelling is an integral part of the global financial system. While there has been great attention paid to neural network models for credit default prediction, such models often lack the required interpretation mechanisms and measures of the uncertainty around their predictions. This work develops and compares Bayesian Neural Networks (BNNs) for credit card default modelling. This includes BNNs trained by Gaussian approximation and the first implementation of BNNs trained by Hybrid Monte Carlo (HMC) in credit risk modelling. The results on the Taiwan Credit Dataset show that BNNs with Automatic Relevance Determination (ARD) outperform normal BNNs without ARD. The results also show that BNNs trained by Gaussian approximation display similar predictive performance to those trained by HMC. The results further show that BNNs with ARD can be used to draw inferences about the relative importance of different features, thus critically aiding decision makers in explaining model output to consumers. The robustness of this result is reinforced by high levels of congruence between the features identified as important using the two different approaches for training BNNs.
