Trilce Estrada

University of New Mexico

Global explainability of a deep abstaining classifier

Apr 01, 2025

Abstract: We present a global explainability method to characterize sources of error in the histology prediction task of our real-world multitask convolutional neural network (MTCNN)-based deep abstaining classifier (DAC), for automated annotation of cancer pathology reports from NCI-SEER registries. Our classifier was trained and evaluated on 1.04 million hand-annotated samples and makes simultaneous predictions of cancer site, subsite, histology, laterality, and behavior for each report. The DAC framework enables the model to abstain on ambiguous reports and/or confusing classes to achieve a target accuracy on the retained (non-abstained) samples, but at the cost of decreased coverage. Requiring 97% accuracy on the histology task caused our model to retain only 22% of all samples, mostly from the less ambiguous and more common classes. Local explainability with the GradInp technique provided a computationally efficient way of obtaining contextual reasoning for thousands of individual predictions. Our method, involving dimensionality reduction of approximately 13,000 aggregated local explanations, enabled global identification of the sources of error: hierarchical complexity among classes, label noise, insufficient information, and conflicting evidence. These findings suggest several strategies to iteratively improve our DAC in this complex real-world implementation, such as exclusion criteria, focused annotation, and reduced penalties for errors involving hierarchically related classes.
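The accuracy/coverage trade-off described above can be made concrete with a small sketch. The DAC's own abstention mechanism is not detailed here, so this example substitutes a simple post-hoc confidence threshold, assuming only a per-sample confidence score and a correct/incorrect flag. It keeps the most confident samples and finds the largest retained set whose accuracy meets the target, then reports the resulting coverage. `coverage_at_target_accuracy` is a hypothetical name, and the threshold rule is an illustrative stand-in, not the DAC's method.

```python
from typing import Sequence, Tuple


def coverage_at_target_accuracy(confidences: Sequence[float], correct: Sequence[bool],
                                target: float) -> Tuple[float, float]:
    """Return (threshold, coverage) for the largest set of most-confident samples
    whose accuracy is at least `target`; everything ranked below is abstained on.

    Illustrative post-hoc thresholding only (an assumption), not the DAC itself.
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("expected equal-length, non-empty inputs")
    # Rank samples from most to least confident (stable sort keeps input order on ties).
    order = sorted(range(len(confidences)), key=lambda i: confidences[i], reverse=True)
    best_k, hits = 0, 0
    for k, i in enumerate(order, start=1):
        hits += int(correct[i])
        # Prefix accuracy is not monotonic, so keep scanning and remember the largest k.
        if hits / k >= target:
            best_k = k
    if best_k == 0:
        return float("inf"), 0.0  # no prefix reaches the target: abstain on everything
    return confidences[order[best_k - 1]], best_k / len(confidences)


if __name__ == "__main__":
    conf = [0.99, 0.95, 0.90, 0.80, 0.60, 0.55]
    correct = [True, True, True, False, True, False]
    print(coverage_at_target_accuracy(conf, correct, 0.90))  # (0.9, 0.5)
    print(coverage_at_target_accuracy(conf, correct, 0.75))
```

In this toy example, raising the target from 0.75 to 0.90 drops coverage from 5/6 to 1/2. This is the same direction of trade-off the abstract reports for the histology task, where 97% accuracy came at 22% coverage.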

Girasol, a Sky Imaging and Global Solar Irradiance Dataset

Feb 26, 2021

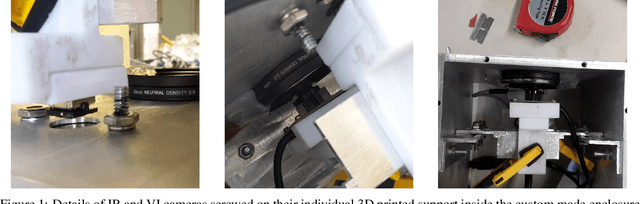

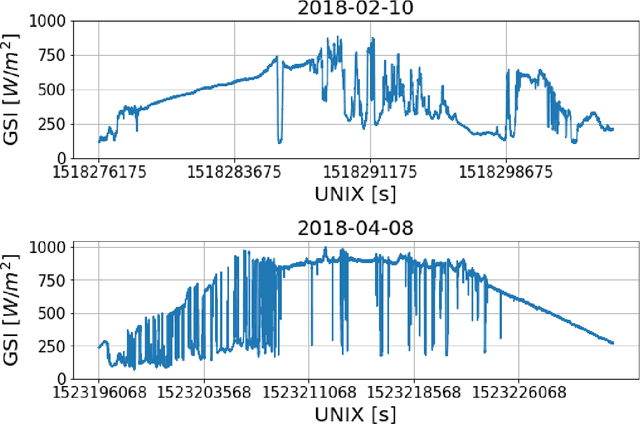

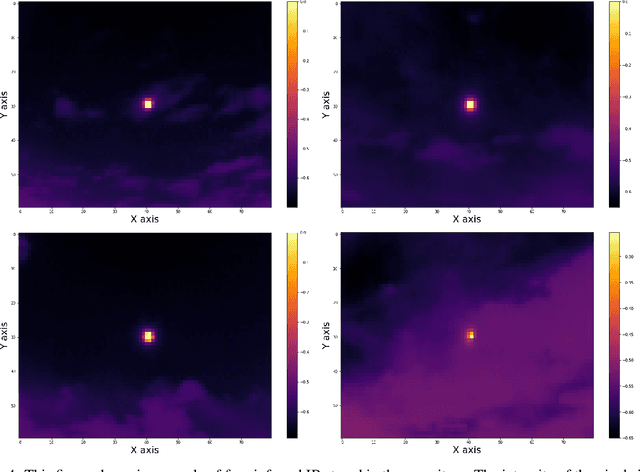

Abstract: The energy available in a Micro Grid (MG) powered by solar energy is tightly related to the weather conditions at the moment of generation. Very short-term forecasting of solar irradiance gives the MG the capability to automatically control the dispatch of energy. We propose to achieve this using a data acquisition system (DAQ) that simultaneously records sky images and Global Solar Irradiance (GSI) measurements, with the objective of extracting features from clouds and using them to forecast the power produced by a Photovoltaic (PV) system. The DAQ system is nicknamed the *Girasol Machine* (Girasol means sunflower in Spanish). The sky imaging system consists of a longwave infrared (IR) camera and a visible (VI) light camera with a fisheye lens. The cameras are installed inside a weatherproof enclosure mounted on an outdoor tracker. Every second, the tracker updates its pan and tilt using a solar position algorithm to keep the Sun in the center of the IR and VI images. A pyranometer on a horizontal support next to the DAQ system measures GSI. The dataset, composed of IR images, VI images, GSI measurements, and Sun positions, has been tagged with timestamps.
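The tracker relies on a solar position algorithm to keep the Sun centered, but the abstract does not say which algorithm is used. The sketch below substitutes a low-accuracy textbook approximation: Cooper's declination formula, a simple equation-of-time fit, and Meeus's azimuth formula. This is adequate for illustration but less precise than dedicated algorithms such as NREL's Solar Position Algorithm (SPA). `approx_sun_position` is a hypothetical name. Mapping elevation and azimuth onto the tracker's tilt and pan axes is an assumption that depends on the mount.

```python
import math
from datetime import datetime, timezone
from typing import Tuple


def approx_sun_position(lat_deg: float, lon_deg: float, when_utc: datetime) -> Tuple[float, float]:
    """Approximate solar (elevation, azimuth) in degrees.

    Assumptions: `when_utc` holds UTC wall-clock time, longitude is positive east,
    and azimuth is measured clockwise from north. Low accuracy: fine for
    illustration, not for precise tracking.
    """
    n = when_utc.timetuple().tm_yday  # day of year, 1..366
    hours = when_utc.hour + when_utc.minute / 60 + when_utc.second / 3600
    # Solar declination (Cooper's approximation), in radians.
    decl = math.radians(23.44) * math.sin(math.radians(360 / 365 * (284 + n)))
    # Equation of time in minutes (simple empirical fit).
    b = math.radians(360 / 365 * (n - 81))
    eot = 9.87 * math.sin(2 * b) - 7.53 * math.cos(b) - 1.5 * math.sin(b)
    # Apparent solar time in hours, then hour angle (negative before solar noon).
    solar_time = hours + lon_deg / 15 + eot / 60
    hour_angle = math.radians(15 * (solar_time - 12))
    lat = math.radians(lat_deg)
    sin_elev = (math.sin(lat) * math.sin(decl)
                + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    elev = math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))
    # Azimuth measured westward from south (Meeus), shifted to clockwise-from-north.
    az_south = math.atan2(math.sin(hour_angle),
                          math.cos(hour_angle) * math.sin(lat) - math.tan(decl) * math.cos(lat))
    az = (math.degrees(az_south) + 180.0) % 360.0
    return elev, az


if __name__ == "__main__":
    # Near the March equinox at (0 deg, 0 deg) around 12:00 UTC the Sun is close to the zenith.
    elev, az = approx_sun_position(0.0, 0.0, datetime(2021, 3, 21, 12, 0, tzinfo=timezone.utc))
    print(f"elevation={elev:.1f} deg, azimuth={az:.1f} deg")
```

A tracker loop built on this sketch would call the function once per second. It would then convert the result into pan and tilt setpoints for its particular mount.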

In situ TensorView: In situ Visualization of Convolutional Neural Networks

Jun 16, 2018

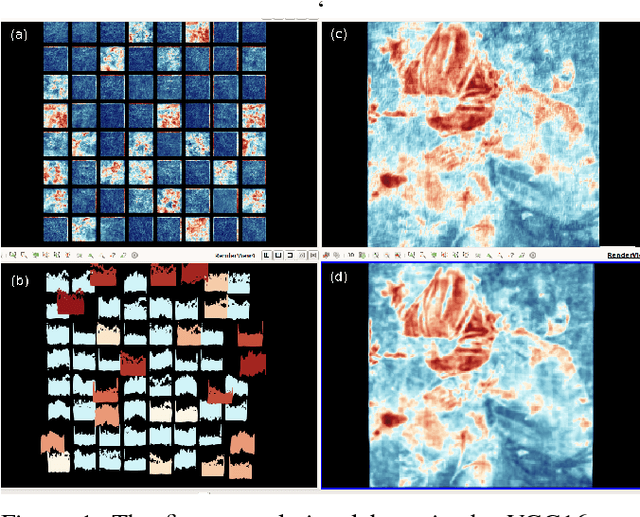

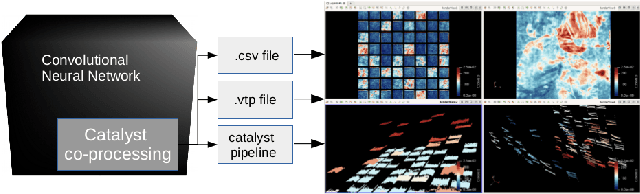

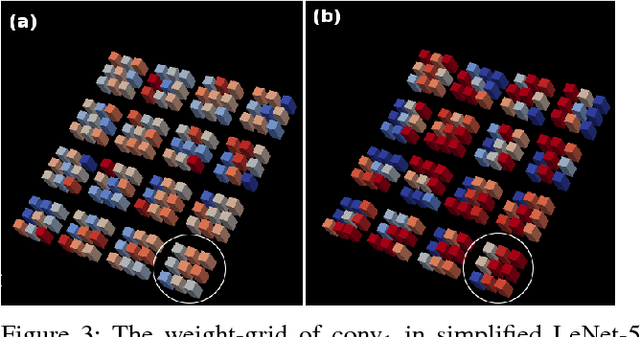

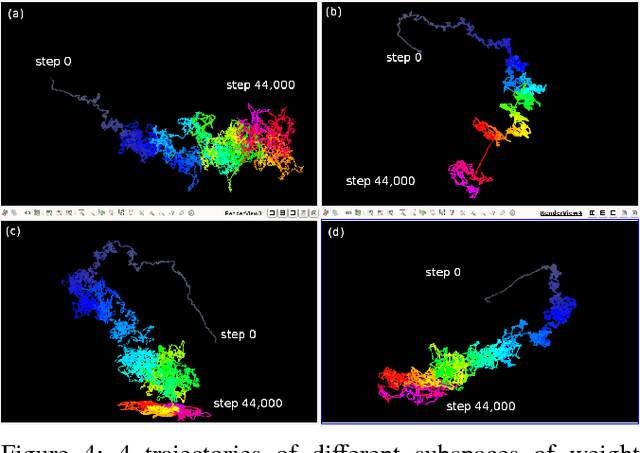

Abstract: Convolutional Neural Networks (CNNs) are complex systems. They are trained to adapt their internal connections so that they can recognize images, text, and more. Visualizing the dynamics within such deep artificial neural networks is both interesting and helpful, because it lets people understand how these networks learn and make predictions. In the field of scientific simulation, visualization tools such as ParaView have long been used to provide insight and understanding. We present in situ TensorView, which visualizes the training and functioning of CNNs as if they were scientific simulations. In situ TensorView is a loosely coupled, open framework for in situ visualization that provides multiple viewers to help users visualize and understand their networks. It leverages ParaView's co-processing capability to provide real-time visualization during the training and prediction phases, avoiding the heavy I/O overhead of visualizing large dynamic systems. Only a few lines of code need to be injected into the TensorFlow framework. The visualizations can guide adjustments to the network architecture or the compression of pre-trained networks. We showcase in situ TensorView by visualizing the training of LeNet-5 and VGG16.
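The abstract describes a loosely coupled, in situ design in which the training loop hands data to the viewers without heavy I/O. The sketch below illustrates that general idea in plain Python with a bounded, non-blocking queue between a training loop and a viewer thread. It does not use ParaView's co-processing API or TensorView's actual code. `InSituChannel`, `maybe_publish`, and `run_viewer` are hypothetical names, and dropping snapshots when the viewer lags is an assumed policy.

```python
import queue
import threading
from typing import Any, Callable, List, Tuple


class InSituChannel:
    """Hypothetical bounded, non-blocking hand-off from a training loop to a viewer.

    Every `every` steps the trainer offers a snapshot. If the viewer has fallen
    behind and the queue is full, the snapshot is dropped (and counted) so the
    training loop never waits on visualization, and nothing is written to disk.
    """

    def __init__(self, every: int = 10, capacity: int = 4) -> None:
        self.every = every
        self._queue: "queue.Queue[Tuple[int, Any]]" = queue.Queue(maxsize=capacity)
        self.dropped = 0

    def maybe_publish(self, step: int, snapshot_fn: Callable[[], Any]) -> bool:
        """The single call a training loop would add; returns True if queued."""
        if step % self.every != 0:
            return False  # not a sampled step: skip building a snapshot
        try:
            self._queue.put_nowait((step, snapshot_fn()))
            return True
        except queue.Full:
            self.dropped += 1  # viewer is behind: drop rather than block training
            return False

    def drain(self) -> List[Tuple[int, Any]]:
        """Viewer side: take everything currently queued without blocking."""
        items: List[Tuple[int, Any]] = []
        while True:
            try:
                items.append(self._queue.get_nowait())
            except queue.Empty:
                return items


def run_viewer(channel: InSituChannel, stop: threading.Event,
               render: Callable[[int, Any], None]) -> None:
    """Poll the channel until `stop` is set, then flush whatever is left."""
    while not stop.is_set():
        for step, snapshot in channel.drain():
            render(step, snapshot)
        stop.wait(0.05)
    for step, snapshot in channel.drain():
        render(step, snapshot)


if __name__ == "__main__":
    channel = InSituChannel(every=10, capacity=4)
    stop = threading.Event()
    rendered: List[int] = []
    viewer = threading.Thread(target=run_viewer,
                              args=(channel, stop, lambda s, _snap: rendered.append(s)))
    viewer.start()
    weights = [0.0] * 8
    for step in range(100):
        weights = [w + 0.01 for w in weights]  # stand-in for an optimizer update
        channel.maybe_publish(step, lambda: list(weights))  # copy, not a live reference
    stop.set()
    viewer.join()
    print(len(rendered) + channel.dropped)  # 10: every sampled step was rendered or dropped
```

Dropping snapshots trades completeness of the visualization for a guarantee that training never blocks on the viewer. This choice is in the spirit of avoiding heavy I/O overhead, but it is an assumption of this sketch rather than a documented TensorView behavior.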
