Simon Su

Accelerating Binarized Neural Networks via Bit-Tensor-Cores in Turing GPUs

Jun 30, 2020

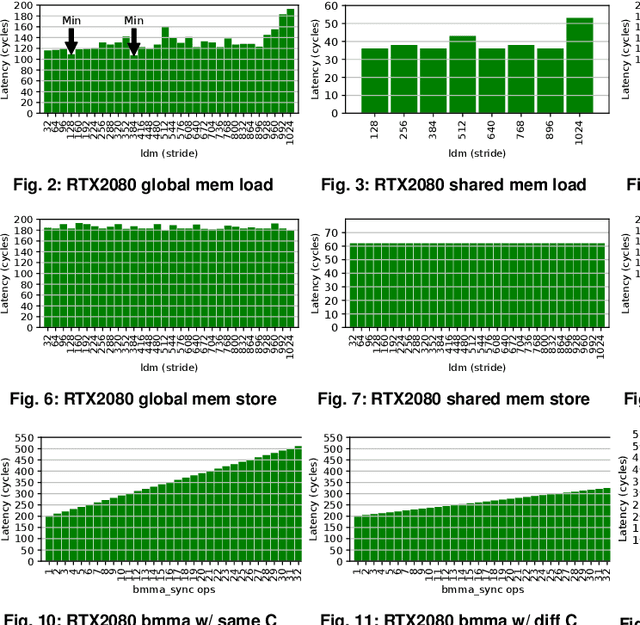

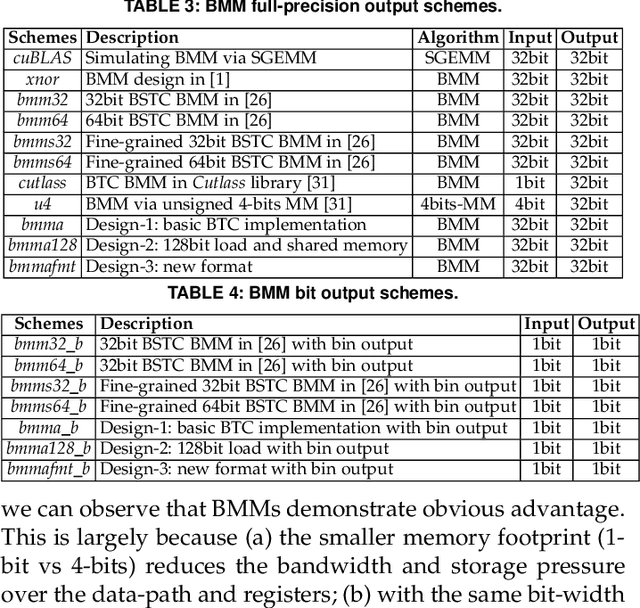

Abstract: Despite the promise of tremendous speedups over conventional deep neural networks, the performance advantage of binarized neural networks (BNNs) has rarely been demonstrated on general-purpose processors such as CPUs and GPUs. In fact, because a word-based architecture cannot exploit bit-level parallelism, GPUs have been criticized for extremely low utilization (1%) when executing BNNs. Consequently, the latest tensorcores in NVIDIA Turing GPUs have begun to support bit computation experimentally. In this work, we look into this brand-new bit computation capability and characterize its unique features. We show that the stride of memory access can significantly affect delivered performance, and that a data-format co-design is needed for the tensorcores to outperform existing software solutions that do not use tensorcores. We realize the tensorcore-accelerated BNN design, particularly the major functions for fully connected and convolution layers: bit matrix multiplication and bit convolution. Evaluations on two NVIDIA Turing GPUs show that, with ResNet-18, our BTC-BNN design can process ImageNet at a rate of 5.6K images per second, 77% faster than the state of the art. Our BNN approach is released at https://github.com/pnnl/TCBNN.
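To make "bit matrix multiplication" concrete, the following is a minimal CPU-side sketch in Python, not the paper's tensorcore implementation. It assumes the common BNN encoding in which +1 is stored as bit 1 and -1 as bit 0, so that a dot product of two length-n vectors equals n minus twice the number of mismatching bits. The helper names `pack_bits`, `bit_dot`, and `bit_matmul` are hypothetical and chosen only for illustration.

```python
def pack_bits(values):
    """Pack a sequence of +1/-1 values into an int (bit i is set when values[i] == +1)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def bit_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n.

    XOR marks positions where the signs differ; each mismatch contributes -1
    and each match contributes +1, so the result is n - 2 * mismatches.
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return n - 2 * mismatches


def bit_matmul(A, B):
    """Multiply an m x n matrix A by an n x p matrix B, both with +1/-1 entries."""
    n = len(B)
    rows = [pack_bits(row) for row in A]
    # Pack each column of B so that every output entry is one XOR plus one popcount.
    cols = [pack_bits([B[k][j] for k in range(n)]) for j in range(len(B[0]))]
    return [[bit_dot(r, c, n) for c in cols] for r in rows]


A = [[1, -1, 1], [-1, -1, 1]]
B = [[1, 1], [-1, 1], [1, -1]]
result = bit_matmul(A, B)
```

In this sketch each output entry costs one XOR and one population count over a packed word instead of n multiply-adds, which is the bit-level parallelism the abstract refers to.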

In situ TensorView: In situ Visualization of Convolutional Neural Networks

Jun 16, 2018

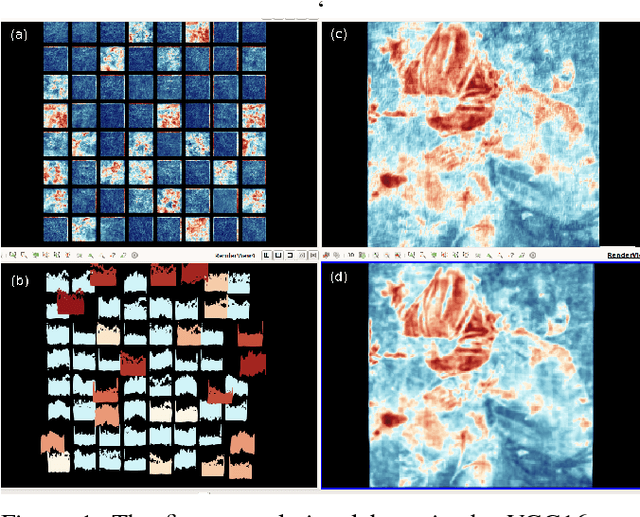

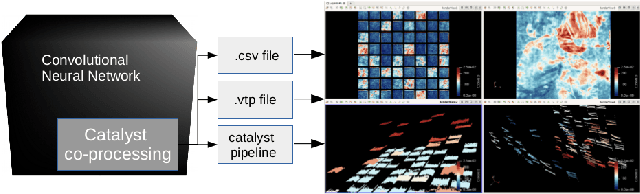

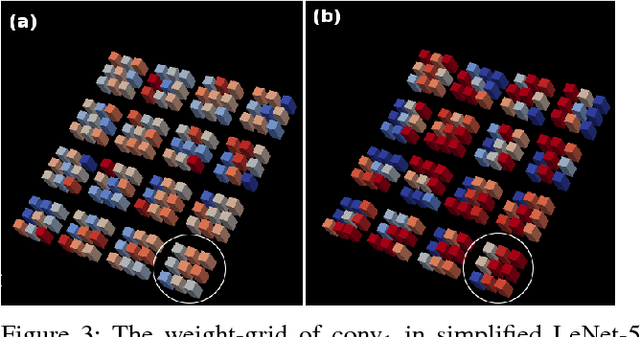

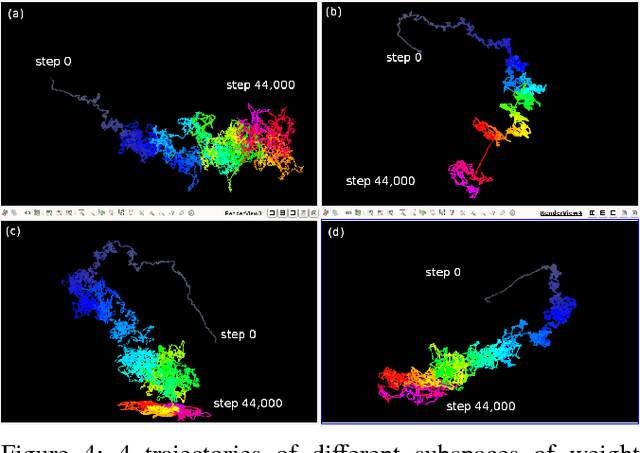

Abstract: Convolutional Neural Networks (CNNs) are complex systems. They are trained so they can adapt their internal connections to recognize images, text, and more. It is both interesting and helpful to visualize the dynamics within such deep artificial neural networks so that people can understand how these networks learn and make predictions. In the field of scientific simulations, visualization tools like Paraview have long been used to provide insight and understanding. We present in situ TensorView to visualize the training and functioning of CNNs as if they were scientific simulations. In situ TensorView is a loosely coupled, open, in situ visualization framework that provides multiple viewers to help users visualize and understand their networks. It leverages the co-processing capability of Paraview to provide real-time visualization during the training and prediction phases. This avoids heavy I/O overhead when visualizing large dynamic systems. Only a small number of lines of code are injected into the TensorFlow framework. The visualization can provide guidance for adjusting the architecture of networks or compressing pre-trained networks. We showcase visualizing the training of LeNet-5 and VGG16 using in situ TensorView.
