Travis E. Gibson

Regret Analysis: a control perspective

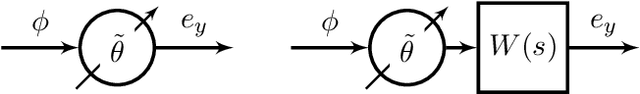

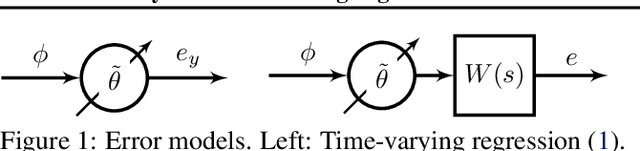

Jan 08, 2025

Abstract:Online learning and model reference adaptive control have many interesting intersections. One area where they differ however is in how the algorithms are analyzed and what objective or metric is used to discriminate "good" algorithms from "bad" algorithms. In adaptive control there are usually two objectives: 1) prove that all time varying parameters/states of the system are bounded, and 2) that the instantaneous error between the adaptively controlled system and a reference system converges to zero over time (or at least a compact set). For online learning the performance of algorithms is often characterized by the regret the algorithm incurs. Regret is defined as the cumulative loss (cost) over time from the online algorithm minus the cumulative loss (cost) of the single optimal fixed parameter choice in hindsight. Another significant difference between the two areas of research is with regard to the assumptions made in order to obtain said results. Adaptive control makes assumptions about the input-output properties of the control problem and derives solutions for a fixed error model or optimization task. In the online learning literature results are derived for classes of loss functions (i.e. convex) while a priori assuming that all time varying parameters are bounded, which for many optimization tasks is not unrealistic, but is a non starter in control applications. In this work we discuss these differences in detail through the regret based analysis of gradient descent for convex functions and the control based analysis of a streaming regression problem. We close with a discussion about the newly defined paradigm of online adaptive control and ask the following question "Are regret optimal control strategies deployable?"
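The regret definition in the abstract above can be made concrete with a small sketch. This is not the paper's analysis or code; it is a minimal illustration that assumes a scalar parameter, a squared loss, and plain online gradient descent on a deterministic synthetic data stream. All function names, the step size, and the data are hypothetical choices made for the example.

```python
import math

def squared_loss(theta, x, y):
    """Per-step loss for a scalar linear predictor theta * x."""
    return 0.5 * (theta * x - y) ** 2

def online_gradient_descent(data, step=0.1, theta0=0.0):
    """Run online gradient descent; return (cumulative loss, final theta)."""
    theta = theta0
    total = 0.0
    for x, y in data:
        # The loss is incurred with the current parameter, before the update.
        total += squared_loss(theta, x, y)
        grad = (theta * x - y) * x
        theta -= step * grad
    return total, theta

def best_fixed_in_hindsight(data):
    """Cumulative loss of the single best fixed theta (scalar least squares)."""
    sxx = sum(x * x for x, _ in data)
    sxy = sum(x * y for x, y in data)
    theta_star = sxy / sxx
    total = sum(squared_loss(theta_star, x, y) for x, y in data)
    return total, theta_star

def regret(data, step=0.1):
    """Cumulative online loss minus cumulative loss of the best fixed theta."""
    alg_loss, _ = online_gradient_descent(data, step)
    best_loss, _ = best_fixed_in_hindsight(data)
    return alg_loss - best_loss

# Hypothetical stream: y is roughly 2 * x plus a small deterministic disturbance.
data = [
    (1.0 + 0.5 * math.sin(t),
     2.0 * (1.0 + 0.5 * math.sin(t)) + 0.1 * math.cos(3 * t))
    for t in range(200)
]

if __name__ == "__main__":
    print("regret after", len(data), "steps:", regret(data))
```

Note that the comparator is computed only after the whole stream has been seen, which is what "in hindsight" refers to. The sketch says nothing about boundedness of the parameter for arbitrary regressors; the step size here was simply picked small enough for this particular data.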

On the stability of second order gradient descent for time varying convex functions

May 22, 2024

Abstract:Gradient based optimization algorithms deployed in Machine Learning (ML) applications are often analyzed and compared by their convergence rates or regret bounds. While these rates and bounds convey valuable information they don't always directly translate to stability guarantees. Stability and similar concepts, like robustness, will become ever more important as we move towards deploying models in real-time and safety critical systems. In this work we build upon the results in Gaudio et al. 2021 and Moreu and Annaswamy 2022 for second order gradient descent when applied to explicitly time varying cost functions and provide more general stability guarantees. These more general results can aid in the design and certification of these optimization schemes so as to help ensure safe and reliable deployment for real-time learning applications. We also hope that the techniques provided here will stimulate and cross-fertilize the analysis that occurs on the same algorithms from the online learning and stochastic optimization communities.

Accelerated Learning with Robustness to Adversarial Regressors

May 04, 2020

Abstract:High order iterative momentum-based parameter update algorithms have seen widespread applications in training machine learning models. Recently, connections with variational approaches and continuous dynamics have led to the derivation of new classes of high order learning algorithms with accelerated learning guarantees. Such methods however, have only considered the case of static regressors. There is a significant need in continual/lifelong learning applications for parameter update algorithms which can be proven stable in the presence of adversarial time-varying regressors. In such settings, the learning algorithm must continually adapt to changes in the distribution of regressors. In this paper, we propose a new discrete time algorithm which: 1) provides stability and asymptotic convergence guarantees in the presence of adversarial regressors by leveraging insights from adaptive control theory and 2) provides non-asymptotic accelerated learning guarantees leveraging insights from convex optimization. In particular, our algorithm reaches an $\epsilon$ sub-optimal point in at most $\tilde{\mathcal{O}}(1/\sqrt{\epsilon})$ iterations when regressors are constant - matching lower bounds due to Nesterov of $\Omega(1/\sqrt{\epsilon})$, up to a $\log(1/\epsilon)$ factor and provides guaranteed bounds for stability when regressors are time-varying.

Connections Between Adaptive Control and Optimization in Machine Learning

Apr 11, 2019

Abstract:This paper demonstrates many immediate connections between adaptive control and optimization methods commonly employed in machine learning. Starting from common output error formulations, similarities in update law modifications are examined. Concepts in stability, performance, and learning, common to both fields are then discussed. Building on the similarities in update laws and common concepts, new intersections and opportunities for improved algorithm analysis are provided. In particular, a specific problem related to higher order learning is solved through insights obtained from these intersections.

Accelerated Learning in the Presence of Time Varying Features with Applications to Machine Learning and Adaptive Control

Mar 12, 2019

Abstract:Features in machine learning problems are often time varying and may be related to outputs in an algebraic or dynamical manner. The dynamic nature of these machine learning problems renders current accelerated gradient descent methods unstable or weakens their convergence guarantees. This paper proposes algorithms for the case when time varying features are present, and demonstrates provable performance guarantees. We develop a variational perspective within a continuous time algorithm. This variational perspective includes, among other things, higher-order learning concepts and normalization, both of which stem from adaptive control, and allows stability to be established for dynamical machine learning problems. These higher-order algorithms are also examined for achieving accelerated learning in adaptive control. Simulations are provided to verify the theoretical results.
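To illustrate why normalization matters when features vary in time, here is a minimal sketch of a standard first-order normalized gradient update for noise-free linear regression. It is not the higher-order algorithm proposed in the paper. The function names, the gain `gamma`, and the regressor sequence are assumptions made for the example. For a gain between 0 and 2, this update never increases the distance to the true parameter, however large the regressor becomes.

```python
import math

def dot(a, b):
    return sum(u * v for u, v in zip(a, b))

def normalized_gradient_step(theta, phi, y, gamma=1.0):
    """One normalized gradient update: the error gradient is divided by 1 + |phi|^2."""
    err = dot(theta, phi) - y
    norm = 1.0 + dot(phi, phi)
    return [t - gamma * err * p / norm for t, p in zip(theta, phi)]

def run(theta_true, regressors, gamma=1.0):
    """Apply the update along a regressor sequence; track distance to theta_true."""
    theta = [0.0] * len(theta_true)
    dists = [math.dist(theta, theta_true)]
    for phi in regressors:
        y = dot(theta_true, phi)  # noise-free measurement (assumption)
        theta = normalized_gradient_step(theta, phi, y, gamma)
        dists.append(math.dist(theta, theta_true))
    return theta, dists

# Hypothetical time-varying regressors whose magnitude grows with time.
regressors = [
    [(1 + k) * math.sin(0.7 * k), (1 + k) * math.cos(1.3 * k)]
    for k in range(200)
]
theta_true = [1.5, -0.5]
theta_final, dists = run(theta_true, regressors)

if __name__ == "__main__":
    print("initial distance:", dists[0], "final distance:", dists[-1])
```

Without the `1 + |phi|^2` term, a fixed step size that is stable for small regressors can diverge once the regressor magnitude grows, which is the kind of failure the abstract attributes to time-varying features.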

Robust and Scalable Models of Microbiome Dynamics

Jun 08, 2018

Abstract:Microbes are everywhere, including in and on our bodies, and have been shown to play key roles in a variety of prevalent human diseases. Consequently, there has been intense interest in the design of bacteriotherapies or "bugs as drugs," which are communities of bacteria administered to patients for specific therapeutic applications. Central to the design of such therapeutics is an understanding of the causal microbial interaction network and the population dynamics of the organisms. In this work we present a Bayesian nonparametric model and associated efficient inference algorithm that addresses the key conceptual and practical challenges of learning microbial dynamics from time series microbe abundance data. These challenges include high-dimensional (300+ strains of bacteria in the gut) but temporally sparse and non-uniformly sampled data; high measurement noise; and, nonlinear and physically non-negative dynamics. Our contributions include a new type of dynamical systems model for microbial dynamics based on what we term interaction modules, or learned clusters of latent variables with redundant interaction structure (reducing the expected number of interaction coefficients from $O(n^2)$ to $O((\log n)^2)$); a fully Bayesian formulation of the stochastic dynamical systems model that propagates measurement and latent state uncertainty throughout the model; and introduction of a temporally varying auxiliary variable technique to enable efficient inference by relaxing the hard non-negativity constraint on states. We apply our method to simulated and real data, and demonstrate the utility of our technique for system identification from limited data and gaining new biological insights into bacteriotherapy design.

* ICML 2018
