Michael A. Bolender

Accelerated Learning with Robustness to Adversarial Regressors

May 04, 2020

Abstract: High-order iterative momentum-based parameter update algorithms have seen widespread applications in training machine learning models. Recently, connections with variational approaches and continuous dynamics have led to the derivation of new classes of high-order learning algorithms with accelerated learning guarantees. Such methods, however, have only considered the case of static regressors. There is a significant need in continual/lifelong learning applications for parameter update algorithms which can be proven stable in the presence of adversarial time-varying regressors. In such settings, the learning algorithm must continually adapt to changes in the distribution of regressors. In this paper, we propose a new discrete-time algorithm which: 1) provides stability and asymptotic convergence guarantees in the presence of adversarial regressors by leveraging insights from adaptive control theory and 2) provides non-asymptotic accelerated learning guarantees by leveraging insights from convex optimization. In particular, our algorithm reaches an $\epsilon$ sub-optimal point in at most $\tilde{\mathcal{O}}(1/\sqrt{\epsilon})$ iterations when regressors are constant, matching the lower bound of $\Omega(1/\sqrt{\epsilon})$ due to Nesterov up to a $\log(1/\epsilon)$ factor, and provides guaranteed bounds for stability when regressors are time-varying.

Parameter Estimation in Adaptive Control of Time-Varying Systems Under a Range of Excitation Conditions

Nov 10, 2019

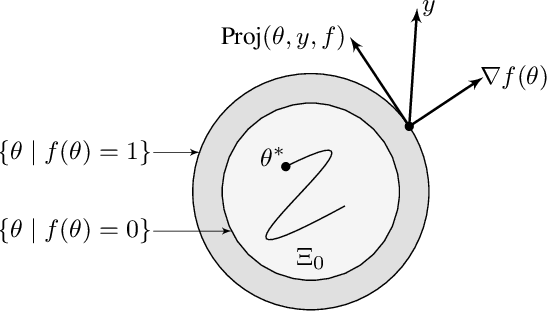

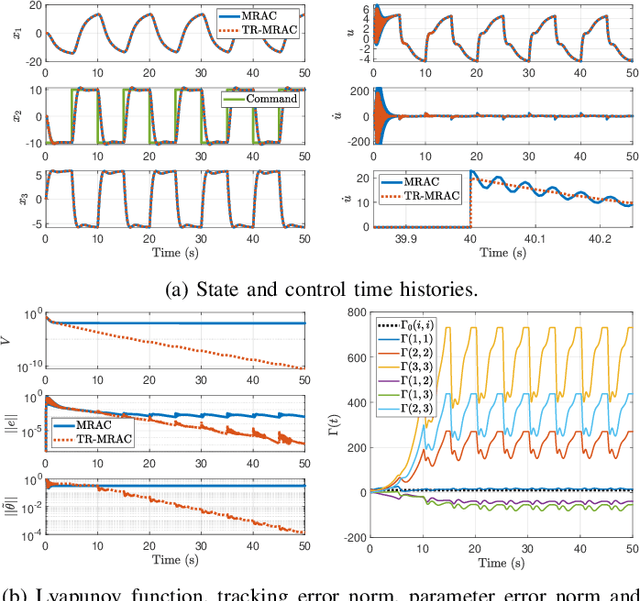

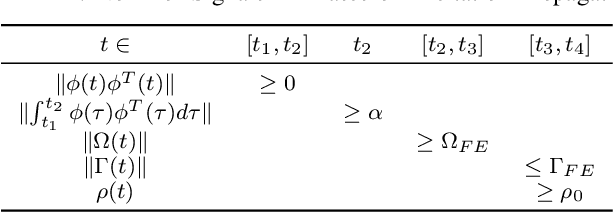

Abstract: This paper presents a new parameter estimation algorithm for the adaptive control of a class of time-varying plants. The main feature of this algorithm is a matrix of time-varying learning rates, which enables parameter estimation error trajectories to tend exponentially fast towards a compact set whenever excitation conditions are satisfied. This algorithm is employed in a large class of problems where unknown parameters are present and are time-varying. It is shown that this algorithm guarantees global boundedness of the state and parameter errors of the system, and avoids an often-used filtering approach for constructing key regressor signals. In addition, intervals of time over which these errors tend exponentially fast toward a compact set are provided, both in the presence of finite and persistent excitation. A projection operator is used to ensure the boundedness of the learning rate matrix, as compared to a time-varying forgetting factor. Numerical simulations are provided to complement the theoretical analysis.

Connections Between Adaptive Control and Optimization in Machine Learning

Apr 11, 2019

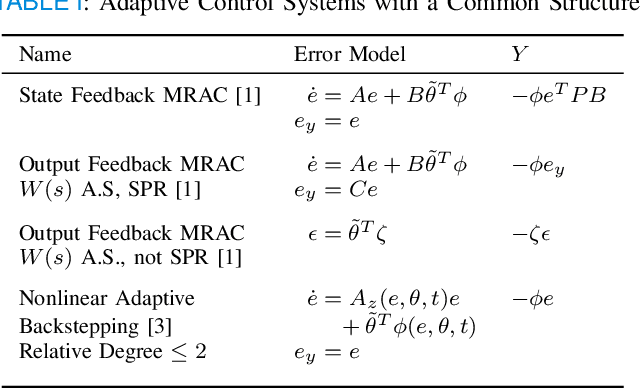

Abstract: This paper demonstrates many immediate connections between adaptive control and optimization methods commonly employed in machine learning. Starting from common output error formulations, similarities in update law modifications are examined. Concepts in stability, performance, and learning, common to both fields, are then discussed. Building on the similarities in update laws and common concepts, new intersections and opportunities for improved algorithm analysis are provided. In particular, a specific problem related to higher-order learning is solved through insights obtained from these intersections.

Accelerated Learning in the Presence of Time Varying Features with Applications to Machine Learning and Adaptive Control

Mar 12, 2019

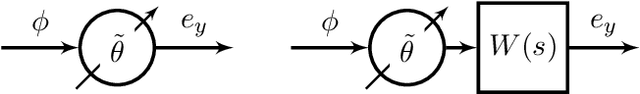

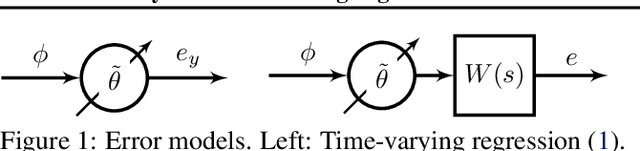

Abstract: Features in machine learning problems are often time varying and may be related to outputs in an algebraic or dynamical manner. The dynamic nature of these machine learning problems renders current accelerated gradient descent methods unstable or weakens their convergence guarantees. This paper proposes algorithms for the case when time-varying features are present, and demonstrates provable performance guarantees. We develop a variational perspective within a continuous-time algorithm. This variational perspective includes, among other things, higher-order learning concepts and normalization, both of which stem from adaptive control, and allows stability to be established for dynamical machine learning problems. These higher-order algorithms are also examined for achieving accelerated learning in adaptive control. Simulations are provided to verify the theoretical results.
