Accelerated Learning in the Presence of Time Varying Features with Applications to Machine Learning and Adaptive Control

Paper and Code

Mar 12, 2019

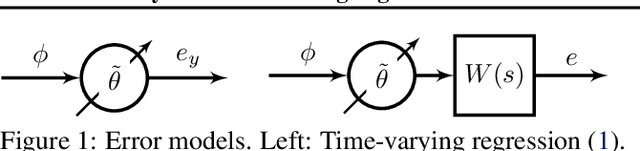

Features in machine learning problems are often time-varying and may be related to outputs in an algebraic or dynamical manner. The dynamic nature of these machine learning problems renders current accelerated gradient descent methods unstable or weakens their convergence guarantees. This paper proposes algorithms for the case when time-varying features are present and establishes provable performance guarantees for them. We develop a variational perspective within a continuous-time algorithm. This variational perspective includes, among other things, higher-order learning concepts and normalization, both of which stem from adaptive control, and allows stability to be established for dynamical machine learning problems. These higher-order algorithms are also examined for achieving accelerated learning in adaptive control. Simulations are provided to verify the theoretical results.
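
To make the role of normalization concrete, the following is a minimal sketch of a first-order normalized gradient update from the adaptive control literature, applied to a linear regression with time-varying features. It is not the higher-order algorithm proposed in the paper. The function names, the feature schedule, the true parameter vector, and the step size `gamma` are all illustrative assumptions.

```python
import numpy as np


def normalized_gradient_step(theta, phi, y, gamma=1.0):
    """One normalized gradient step on the loss 0.5 * (phi @ theta - y)**2.

    Dividing by (1 + phi @ phi) keeps the effective step size bounded
    regardless of how large the current feature vector is.
    """
    error = phi @ theta - y
    return theta - gamma * error * phi / (1.0 + phi @ phi)


def run_demo(steps=200, seed=0):
    """Track the parameter error norm under features whose scale varies in time."""
    rng = np.random.default_rng(seed)
    theta_star = np.array([1.0, -2.0, 0.5])  # hypothetical true parameters
    theta = np.zeros(3)
    norms = [np.linalg.norm(theta - theta_star)]
    for k in range(steps):
        # Hypothetical schedule: feature magnitude swings between 1x and 51x.
        scale = 1.0 + 50.0 * abs(np.sin(0.1 * k))
        phi = scale * rng.standard_normal(3)
        y = phi @ theta_star  # noiseless output, algebraically related to phi
        theta = normalized_gradient_step(theta, phi, y)
        norms.append(np.linalg.norm(theta - theta_star))
    return np.array(norms)


param_error = run_demo()
```

In this noiseless setting with `0 < gamma < 2`, the parameter error norm in `param_error` never increases from one step to the next, however large the features become. An unnormalized step with a fixed learning rate offers no such guarantee once the feature magnitude grows.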
