Traian Iliescu

Defining Foundation Models for Computational Science: A Call for Clarity and Rigor

May 28, 2025

Abstract:The widespread success of foundation models in natural language processing and computer vision has inspired researchers to extend the concept to scientific machine learning and computational science. However, this position paper argues that as the term "foundation model" is an evolving concept, its application in computational science is increasingly used without a universally accepted definition, potentially creating confusion and diluting its precise scientific meaning. In this paper, we address this gap by proposing a formal definition of foundation models in computational science, grounded in the core values of generality, reusability, and scalability. We articulate a set of essential and desirable characteristics that such models must exhibit, drawing parallels with traditional foundational methods, like the finite element and finite volume methods. Furthermore, we introduce the Data-Driven Finite Element Method (DD-FEM), a framework that fuses the modular structure of classical FEM with the representational power of data-driven learning. We demonstrate how DD-FEM addresses many of the key challenges in realizing foundation models for computational science, including scalability, adaptability, and physics consistency. By bridging traditional numerical methods with modern AI paradigms, this work provides a rigorous foundation for evaluating and developing novel approaches toward future foundation models in computational science.

Symbolic Regression of Data-Driven Reduced Order Model Closures for Under-Resolved, Convection-Dominated Flows

Feb 07, 2025

Abstract:Data-driven closures correct the standard reduced order models (ROMs) to increase their accuracy in under-resolved, convection-dominated flows. There are two types of data-driven ROM closures in current use: (i) structural, with simple ansatzes (e.g., linear or quadratic); and (ii) machine learning-based, with neural network ansatzes. We propose a novel symbolic regression (SR) data-driven ROM closure strategy, which combines the advantages of current approaches and eliminates their drawbacks. As a result, the new data-driven SR closures yield ROMs that are interpretable, parsimonious, accurate, generalizable, and robust. To compare the data-driven SR-ROM closures with the structural and machine learning-based ROM closures, we consider the data-driven variational multiscale ROM framework and two under-resolved, convection-dominated test problems: the flow past a cylinder and the lid-driven cavity flow at Reynolds numbers Re = 10000, 15000, and 20000. This numerical investigation shows that the new data-driven SR-ROM closures yield more accurate and robust ROMs than the structural and machine learning ROM closures.
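To make the "structural" closure type mentioned in the abstract concrete, the following is a minimal sketch of fitting a linear ansatz, tau ≈ A a, by least squares. It is not the paper's SR-ROM method or code: the function name `fit_linear_closure`, the array names, and the synthetic data are all assumptions made for illustration, and the closure terms here are generated from a known matrix rather than from flow data.

```python
import numpy as np

def fit_linear_closure(coeffs, closure_terms):
    """Least-squares fit of the matrix A in the linear ansatz tau ≈ A a.

    coeffs:        (n_samples, r) array of ROM coefficients a (hypothetical data)
    closure_terms: (n_samples, r) array of target closure terms tau
    """
    # Solve coeffs @ A.T ≈ closure_terms in the least-squares sense.
    A_T, *_ = np.linalg.lstsq(coeffs, closure_terms, rcond=None)
    return A_T.T

# Synthetic, noise-free data generated from a known linear closure (assumption).
rng = np.random.default_rng(0)
r, n_samples = 4, 200
A_true = rng.normal(size=(r, r))
coeffs = rng.normal(size=(n_samples, r))
closure_terms = coeffs @ A_true.T

A_fit = fit_linear_closure(coeffs, closure_terms)
```

With noise-free synthetic data the fitted matrix should match the generating one up to round-off. A quadratic ansatz would add products of the coefficients as extra regression features in the same least-squares problem.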

Physics Guided Machine Learning for Variational Multiscale Reduced Order Modeling

May 25, 2022

Abstract:We propose a new physics guided machine learning (PGML) paradigm that leverages the variational multiscale (VMS) framework and available data to dramatically increase the accuracy of reduced order models (ROMs) at a modest computational cost. The hierarchical structure of the ROM basis and the VMS framework enable a natural separation of the resolved and unresolved ROM spatial scales. Modern PGML algorithms are used to construct novel models for the interaction among the resolved and unresolved ROM scales. Specifically, the new framework builds ROM operators that are closest to the true interaction terms in the VMS framework. Finally, machine learning is used to reduce the projection error and further increase the ROM accuracy. Our numerical experiments for a two-dimensional vorticity transport problem show that the novel PGML-VMS-ROM paradigm maintains the low computational cost of current ROMs, while significantly increasing the ROM accuracy.

Nonlinear proper orthogonal decomposition for convection-dominated flows

Nov 05, 2021

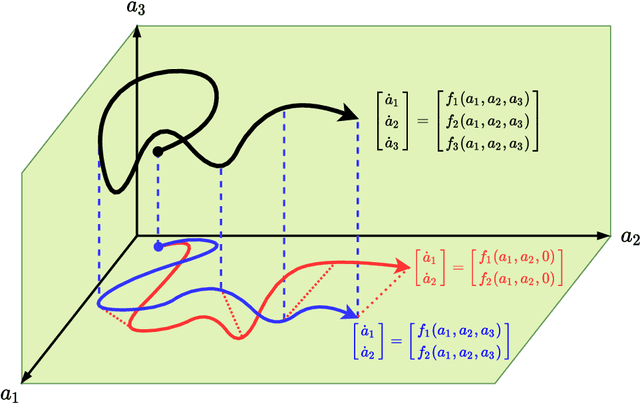

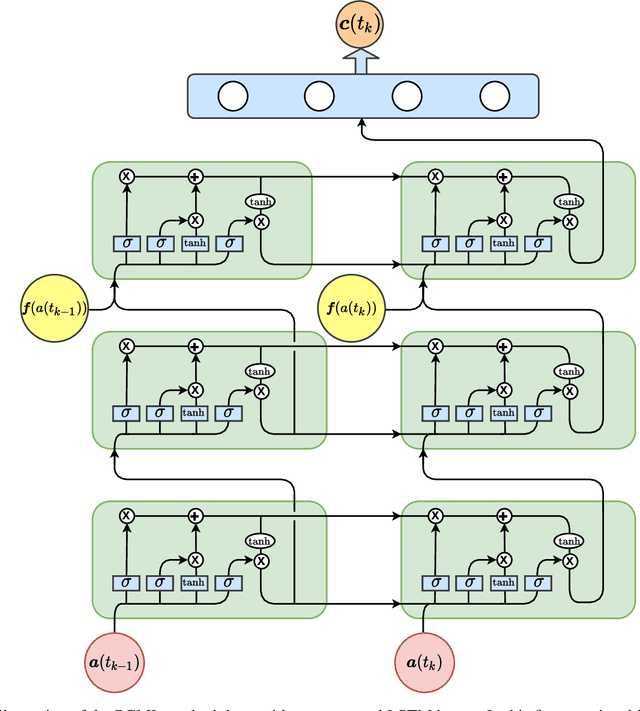

Abstract:Autoencoder techniques find increasingly common use in reduced order modeling as a means to create a latent space. This reduced order representation offers a modular data-driven modeling approach for nonlinear dynamical systems when integrated with a time series predictive model. In this letter, we put forth a nonlinear proper orthogonal decomposition (POD) framework, which is an end-to-end Galerkin-free model combining autoencoders with long short-term memory networks for dynamics. By eliminating the projection error due to the truncation of Galerkin models, a key enabler of the proposed nonintrusive approach is the kinematic construction of a nonlinear mapping between the full-rank expansion of the POD coefficients and the latent space where the dynamics evolve. We test our framework for model reduction of a convection-dominated system, which is generally challenging for reduced order models. Our approach not only improves the accuracy, but also significantly reduces the computational cost of training and testing.
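For context on the projection error the abstract refers to, the sketch below computes the relative error of a truncated linear POD basis (obtained from an SVD of the snapshot matrix) for an advected pulse. This is the classical linear baseline, not the autoencoder and LSTM framework proposed in the letter. The test problem, the pulse width, and the name `pod_projection_error` are assumptions chosen for illustration.

```python
import numpy as np

def pod_projection_error(snapshots, r):
    """Relative Frobenius error of projecting snapshots onto the first r POD modes."""
    # Columns of U are the POD modes, ordered by decreasing singular value.
    U, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    basis = U[:, :r]
    projected = basis @ (basis.T @ snapshots)
    return np.linalg.norm(snapshots - projected) / np.linalg.norm(snapshots)

# Hypothetical convection-dominated data: a narrow Gaussian pulse
# advected at unit speed across a periodic domain [0, 1).
x = np.linspace(0.0, 1.0, 200, endpoint=False)
times = np.linspace(0.0, 1.0, 100, endpoint=False)
width = 0.02
snapshots = np.stack(
    [np.exp(-((((x - t) + 0.5) % 1.0 - 0.5) ** 2) / (2 * width**2)) for t in times],
    axis=1,
)  # shape: (n_space, n_time)

errors = {r: pod_projection_error(snapshots, r) for r in (2, 5, 10, 20)}
for r, err in errors.items():
    print(f"r = {r:2d}: relative projection error = {err:.3e}")
```

The error cannot increase as modes are added, but for a translating pulse like this one it typically decays slowly with r, which is the difficulty that motivates nonlinear latent representations.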
