Tongyao Wang

ZiGong 1.0: A Large Language Model for Financial Credit

Feb 22, 2025

Abstract:Large Language Models (LLMs) have demonstrated strong performance across various general Natural Language Processing (NLP) tasks. However, their effectiveness in financial credit assessment applications remains suboptimal, primarily due to the specialized financial expertise required for these tasks. To address this limitation, we propose ZiGong, a Mistral-based model enhanced through multi-task supervised fine-tuning. To specifically combat model hallucination in financial contexts, we introduce a novel data pruning methodology. Our approach utilizes a proxy model to score training samples, subsequently combining filtered data with original datasets for model training. This data refinement strategy effectively reduces hallucinations in LLMs while maintaining reliability in downstream financial applications. Experimental results show our method significantly enhances model robustness and prediction accuracy in real-world financial scenarios.

FinLangNet: A Novel Deep Learning Framework for Credit Risk Prediction Using Linguistic Analogy in Financial Data

Apr 19, 2024

Abstract:Recent industrial applications in risk prediction still rely heavily on extensively hand-tuned statistical learning methods. Real-world financial data, characterized by high dimensionality, sparsity, high noise levels, and significant class imbalance, poses unique challenges for the effective application of deep neural network models. In this work, we introduce a novel deep learning risk prediction framework, FinLangNet, which conceptualizes credit loan trajectories in a structure that mirrors linguistic constructs. This framework is tailored for credit risk prediction using real-world financial data, drawing on structural similarities to language by adapting natural language processing techniques. It focuses on analyzing the evolution and predictability of credit histories through detailed financial event sequences. Our research demonstrates that FinLangNet surpasses traditional statistical methods in predicting credit risk and that its integration with these methods enhances credit card fraud prediction models, achieving a significant improvement of over 1.5 points in the Kolmogorov-Smirnov metric.
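
For readers unfamiliar with the Kolmogorov-Smirnov (KS) metric mentioned in the abstract, the following is a minimal sketch of how it is commonly computed for a binary risk model: the largest gap between the cumulative score distributions of the two classes. The function name `ks_statistic` and the toy data are illustrative assumptions, not taken from the paper. Credit-risk work often reports KS multiplied by 100, which is presumably what "1.5 points" refers to, though the abstract does not say so.

```python
def ks_statistic(scores, labels):
    """Two-sample KS statistic between scores of class 1 and class 0.

    scores: list of model scores; labels: list of 0/1 class labels.
    Hypothetical helper for illustration only.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")

    pairs = sorted(zip(scores, labels))
    cum_pos = cum_neg = 0
    best = 0.0
    i = 0
    while i < len(pairs):
        # Consume all samples sharing the same score before comparing,
        # so ties do not create an artificial gap.
        j = i
        while j < len(pairs) and pairs[j][0] == pairs[i][0]:
            if pairs[j][1] == 1:
                cum_pos += 1
            else:
                cum_neg += 1
            j += 1
        best = max(best, abs(cum_pos / n_pos - cum_neg / n_neg))
        i = j
    return best


# Toy data (made up): perfectly separated scores give the maximum value.
separated = ks_statistic([0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1])
overlapping = ks_statistic([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

A value near 0 means the score distributions of the two classes are nearly identical; a value of 1 means the scores separate the classes completely.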

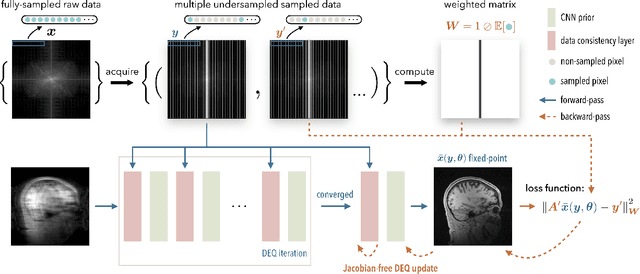

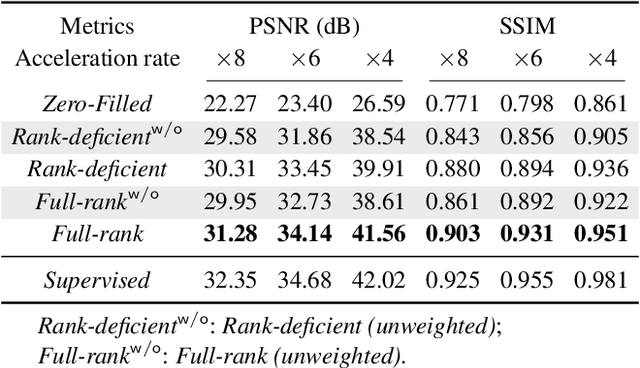

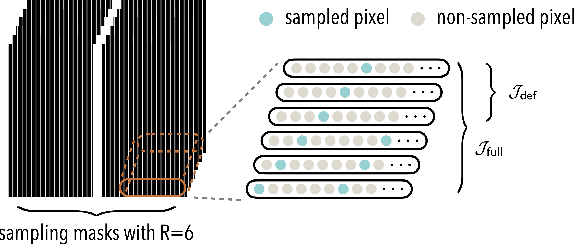

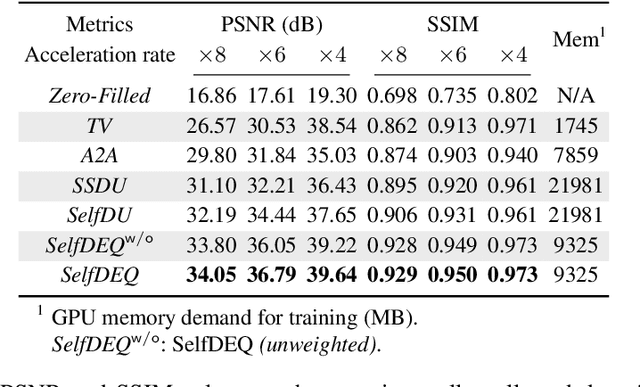

Self-Supervised Deep Equilibrium Models for Inverse Problems with Theoretical Guarantees

Oct 07, 2022

Abstract:Deep equilibrium models (DEQ) have emerged as a powerful alternative to deep unfolding (DU) for image reconstruction. DEQ models, implicit neural networks with an effectively infinite number of layers, were shown to achieve state-of-the-art image reconstruction without the memory complexity associated with DU. While the performance of DEQ has been widely investigated, the existing work has primarily focused on settings where groundtruth data is available for training. We present the self-supervised deep equilibrium model (SelfDEQ) as the first self-supervised reconstruction framework for training model-based implicit networks from undersampled and noisy MRI measurements. Our theoretical results show that SelfDEQ can compensate for unbalanced sampling across multiple acquisitions and match the performance of fully supervised DEQ. Our numerical results on in-vivo MRI data show that SelfDEQ leads to state-of-the-art performance using only undersampled and noisy training data.
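
The phrase "effectively infinite number of layers" can be illustrated with a toy sketch: a DEQ-style forward pass applies the same layer repeatedly until the output stops changing, that is, until it reaches a fixed point. The scalar map `layer` below is a made-up contraction chosen only so the iteration converges; it is not the SelfDEQ operator, and the names `fixed_point` and `layer` are assumptions for illustration.

```python
import math


def fixed_point(f, x0, tol=1e-10, max_iter=1000):
    """Iterate x <- f(x) until successive iterates differ by less than tol.

    Returns the approximate fixed point and the number of iterations used.
    """
    x = x0
    for k in range(1, max_iter + 1):
        x_next = f(x)
        if abs(x_next - x) < tol:
            return x_next, k
        x = x_next
    raise RuntimeError("fixed-point iteration did not converge")


def layer(x):
    # Hypothetical stand-in for one network layer. Its slope is bounded
    # by 0.5 in magnitude, so it is a contraction and the iteration converges.
    return 0.5 * math.cos(x)


x_star, n_iter = fixed_point(layer, x0=0.0)
```

Only the converged value `x_star` needs to be kept, not the intermediate iterates, which is the intuition behind the memory advantage over deep unfolding that the abstract describes.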

SPICE: Self-Supervised Learning for MRI with Automatic Coil Sensitivity Estimation

Oct 05, 2022

Abstract:Deep model-based architectures (DMBAs) integrating physical measurement models and learned image regularizers are widely used in parallel magnetic resonance imaging (PMRI). Traditional DMBAs for PMRI rely on pre-estimated coil sensitivity maps (CSMs) as a component of the measurement model. However, estimation of accurate CSMs is a challenging problem when measurements are highly undersampled. Additionally, traditional training of DMBAs requires high-quality groundtruth images, limiting their use in applications where groundtruth is difficult to obtain. This paper addresses these issues by presenting SPICE as a new method that integrates self-supervised learning and automatic coil sensitivity estimation. Instead of using pre-estimated CSMs, SPICE simultaneously reconstructs accurate MR images and estimates high-quality CSMs. SPICE also enables learning from undersampled noisy measurements without any groundtruth. We validate SPICE on experimentally collected data, showing that it can achieve state-of-the-art performance in highly accelerated data acquisition settings (up to 10x).
