Tomas F. Yago Vicente

ABO: Dataset and Benchmarks for Real-World 3D Object Understanding

Oct 12, 2021

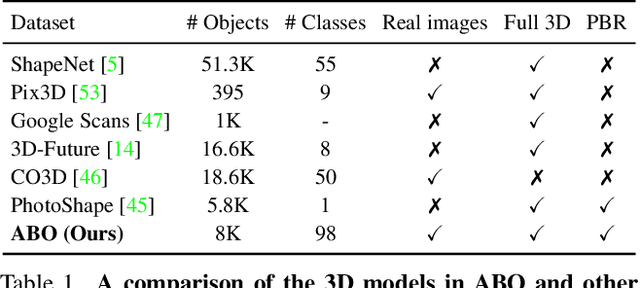

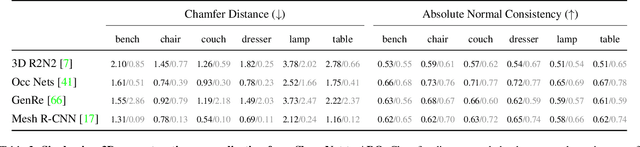

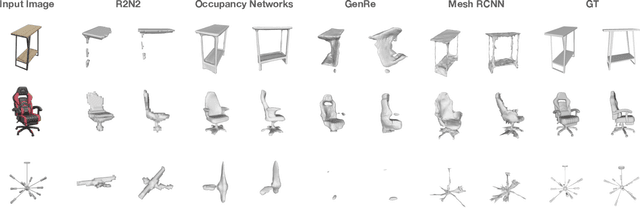

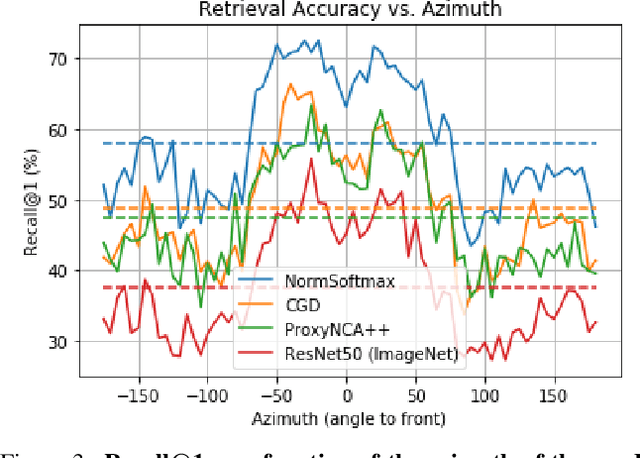

Abstract: We introduce Amazon-Berkeley Objects (ABO), a new large-scale dataset of product images and 3D models corresponding to real household objects. We use this realistic, object-centric 3D dataset to measure the domain gap for single-view 3D reconstruction networks trained on synthetic objects. We also use multi-view images from ABO to measure the robustness of state-of-the-art metric learning approaches to different camera viewpoints. Finally, leveraging the physically-based rendering materials in ABO, we perform single- and multi-view material estimation for a variety of complex, real-world geometries. The full dataset is available for download at https://amazon-berkeley-objects.s3.amazonaws.com/index.html.

A+D Net: Training a Shadow Detector with Adversarial Shadow Attenuation

Jul 27, 2018

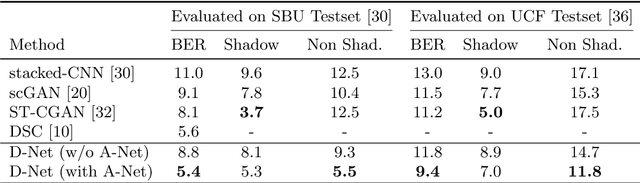

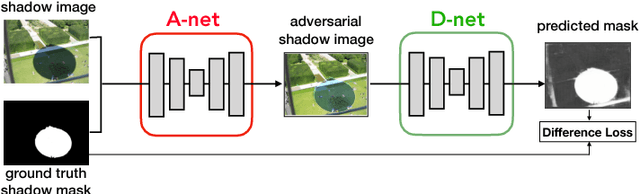

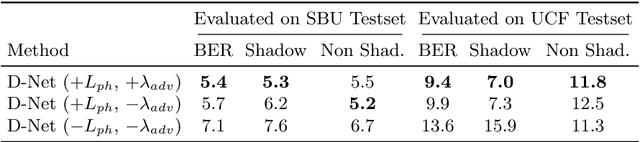

Abstract: We propose a novel GAN-based framework for detecting shadows in images, in which a shadow detection network (D-Net) is trained together with a shadow attenuation network (A-Net) that generates adversarial training examples. The A-Net modifies the original training images, constrained by a simplified physical shadow model, with the aim of fooling the D-Net's shadow predictions. In effect, it augments the training data for the D-Net with hard-to-predict cases. The D-Net is trained to predict shadows in both the original images and the images generated by the A-Net. Our experimental results show that the additional training data from the A-Net significantly improves the shadow detection accuracy of the D-Net. Our method outperforms state-of-the-art methods on the most challenging shadow detection benchmark (SBU) and also obtains state-of-the-art results on a cross-dataset task, testing on UCF. Furthermore, the proposed method achieves accurate real-time shadow detection at 45 frames per second.
