Tom P. Huck

Reinforcement Learning for Safety Testing: Lessons from A Mobile Robot Case Study

Nov 06, 2023

Abstract:Safety-critical robot systems need thorough testing to expose design flaws and software bugs which could endanger humans. Testing in simulation is becoming increasingly popular, as it can be applied early in the development process and does not endanger any real-world operators. However, not all safety-critical flaws become immediately observable in simulation. Some may only become observable under certain critical conditions. If these conditions are not covered, safety flaws may remain undetected. Creating critical tests is therefore crucial. In recent years, there has been a trend towards using Reinforcement Learning (RL) for this purpose. Guided by domain-specific reward functions, RL algorithms are used to learn critical test strategies. This paper presents a case study in which the collision avoidance behavior of a mobile robot is subjected to RL-based testing. The study confirms prior research which shows that RL can be an effective testing tool. However, the study also highlights certain challenges associated with RL-based testing, namely (i) a possible lack of diversity in test conditions and (ii) the phenomenon of reward hacking where the RL agent behaves in undesired ways due to a misalignment of reward and test specification. The challenges are illustrated with data and examples from the experiments, and possible mitigation strategies are discussed.

Uncertainty-aware Risk Assessment of Robotic Systems via Importance Sampling

Aug 27, 2023

Abstract:In this paper, we introduce a probabilistic approach to risk assessment of robot systems by focusing on the impact of uncertainties. While various approaches to identifying systematic hazards (e.g., bugs, design flaws, etc.) can be found in current literature, little attention has been devoted to evaluating risks in robot systems in a probabilistic manner. Existing methods rely on discrete notions for dangerous events and assume that the consequences of these can be described by simple logical operations. In this work, we consider measurement uncertainties as one main contributor to the evolvement of risks. Specifically, we study the impact of temporal and spatial uncertainties on the occurrence probability of dangerous failures, thereby deriving an approach for an uncertainty-aware risk assessment. Secondly, we introduce a method to improve the statistical significance of our results: While the rare occurrence of hazardous events makes it challenging to draw conclusions with reliable accuracy, we show that importance sampling -- a technique that successively generates samples in regions with sparse probability densities -- allows for overcoming this issue. We demonstrate the validity of our novel uncertainty-aware risk assessment method in three simulation scenarios from the domain of human-robot collaboration. Finally, we show how the results can be used to evaluate arbitrary safety limits of robot systems.
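As a rough illustration of why importance sampling helps with rare hazardous events, the sketch below estimates a small tail probability in a toy setting. It is not the paper's method or scenario: the standard normal measurement error, the threshold of 4.0, the shifted-normal proposal, and all function names are assumptions chosen only to keep the example self-contained.

```python
import math
import random


def naive_estimate(threshold, n, rng):
    """Plain Monte Carlo: fraction of N(0, 1) samples above the threshold."""
    hits = sum(1 for _ in range(n) if rng.gauss(0.0, 1.0) > threshold)
    return hits / n


def importance_sampling_estimate(threshold, n, rng):
    """Estimate P(X > threshold) for X ~ N(0, 1) using a shifted proposal.

    Samples are drawn from the proposal N(threshold, 1), which places about
    half of its mass in the rare region. Each hit is reweighted by the
    density ratio p(x) / q(x) = exp(-threshold * x + threshold**2 / 2).
    """
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)
        if x > threshold:
            total += math.exp(-threshold * x + 0.5 * threshold ** 2)
    return total / n


def exact_tail(threshold):
    """Closed-form tail probability of the standard normal, for comparison."""
    return 0.5 * math.erfc(threshold / math.sqrt(2.0))


if __name__ == "__main__":
    rng = random.Random(0)
    t = 4.0  # hypothetical safety limit on the measurement error
    print("exact              :", exact_tail(t))
    print("naive Monte Carlo  :", naive_estimate(t, 100_000, rng))
    print("importance sampling:", importance_sampling_estimate(t, 100_000, rng))
```

With the same sample budget, the naive estimator sees only a handful of hits (often none) for such a rare event, while the reweighted estimator draws roughly half of its samples from the region of interest, which is what makes its estimate far more stable.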

Hazard Analysis of Collaborative Automation Systems: A Two-layer Approach based on Supervisory Control and Simulation

Sep 26, 2022

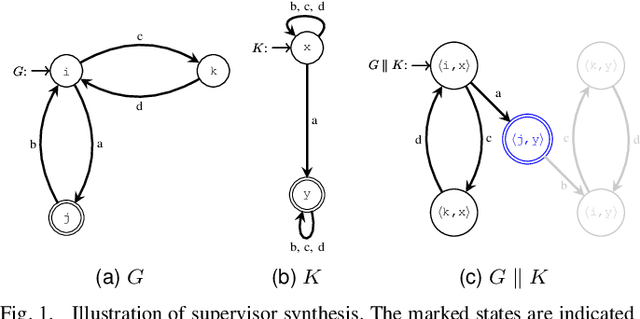

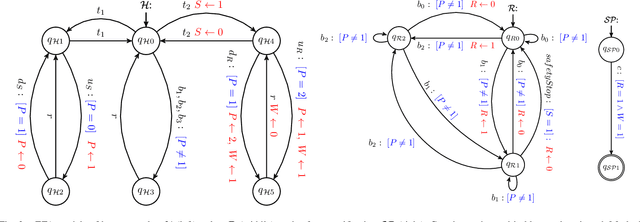

Abstract:Safety critical systems are typically subjected to hazard analysis before commissioning to identify and analyse potentially hazardous system states that may arise during operation. Currently, hazard analysis is mainly based on human reasoning, past experiences, and simple tools such as checklists and spreadsheets. Increasing system complexity makes such approaches decreasingly suitable. Furthermore, testing-based hazard analysis is often not suitable due to high costs or dangers of physical faults. A remedy for this are model-based hazard analysis methods, which either rely on formal models or on simulation models, each with their own benefits and drawbacks. This paper proposes a two-layer approach that combines the benefits of exhaustive analysis using formal methods with detailed analysis using simulation. Unsafe behaviours that lead to unsafe states are first synthesised from a formal model of the system using Supervisory Control Theory. The result is then input to the simulation where detailed analyses using domain-specific risk metrics are performed. Though the presented approach is generally applicable, this paper demonstrates the benefits of the approach on an industrial human-robot collaboration system.

Testing Robot System Safety by creating Hazardous Human Worker Behavior in Simulation

Nov 29, 2021

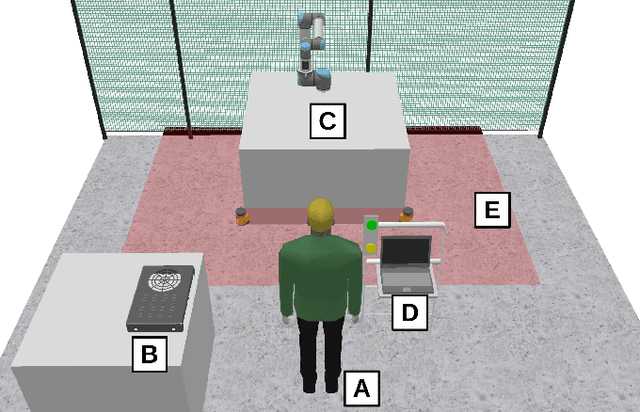

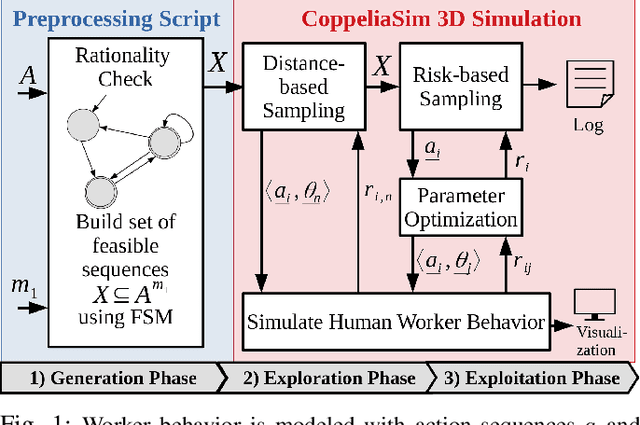

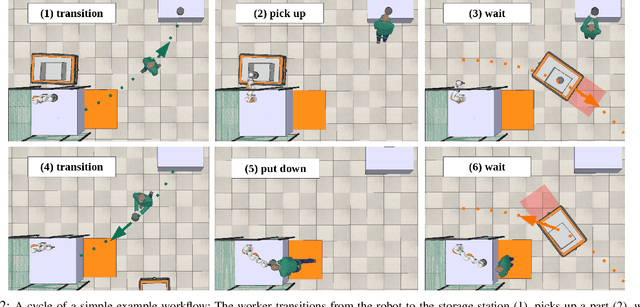

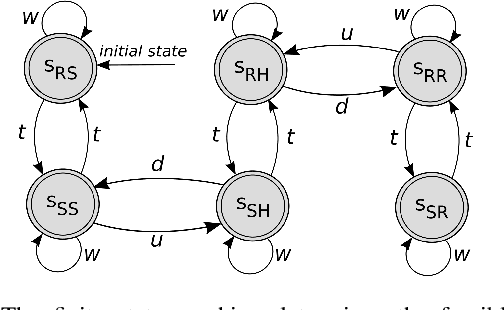

Abstract:We introduce a novel simulation-based approach to identify hazards that result from unexpected worker behavior in human-robot collaboration. Simulation-based safety testing must take into account the fact that human behavior is variable and that human error can occur. When only the expected worker behavior is simulated, critical hazards can remain undiscovered. On the other hand, simulating all possible worker behaviors is computationally infeasible. This raises the problem of how to find interesting (i.e., potentially hazardous) worker behaviors given a limited number of simulation runs. We frame this as a search problem in the space of possible worker behaviors. Because this search space can get quite complex, we introduce the following measures: (1) Search space restriction based on workflow-constraints, (2) prioritization of behaviors based on how far they deviate from the nominal behavior, and (3) the use of a risk metric to guide the search towards high-risk behaviors which are more likely to expose hazards. We demonstrate the approach in a collaborative workflow scenario that involves a human worker, a robot arm, and a mobile robot.
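The three measures named in the abstract can be sketched as a small best-first search. This is a toy under stated assumptions, not the authors' implementation: the action names, the single workflow constraint, the Hamming-distance deviation measure, the choice to prefer smaller deviations on ties, and the `toy_risk` function (a stand-in for a simulation run) are all hypothetical.

```python
import heapq

# Hypothetical nominal workflow of a human worker.
NOMINAL = ("pick", "place", "inspect", "handover")


def satisfies_workflow(seq):
    """(1) Search space restriction: a part must be picked before it is placed."""
    return seq.index("pick") < seq.index("place")


def deviation(seq):
    """(2) Deviation from nominal behavior: number of differing positions."""
    return sum(a != b for a, b in zip(seq, NOMINAL))


def toy_risk(seq):
    """(3) Stand-in risk metric: earlier handovers are assumed riskier."""
    return len(seq) - 1 - seq.index("handover")


def neighbors(seq):
    """Behaviors reachable by swapping two adjacent actions."""
    for i in range(len(seq) - 1):
        swapped = list(seq)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        yield tuple(swapped)


def search(budget):
    """Best-first search; each risk evaluation counts as one simulation run."""
    best = (toy_risk(NOMINAL), NOMINAL)
    runs = 1
    seen = {NOMINAL}
    # Expand high-risk behaviors first; break ties by smaller deviation.
    frontier = [(-best[0], deviation(NOMINAL), NOMINAL)]
    while frontier and runs < budget:
        _, _, seq = heapq.heappop(frontier)
        for nxt in neighbors(seq):
            if nxt in seen or not satisfies_workflow(nxt):
                continue
            seen.add(nxt)
            risk = toy_risk(nxt)
            runs += 1
            best = max(best, (risk, nxt))
            heapq.heappush(frontier, (-risk, deviation(nxt), nxt))
            if runs >= budget:
                break
    return best


if __name__ == "__main__":
    print(search(budget=20))
```

In a real setting, `toy_risk` would be replaced by a simulation run that returns a domain-specific risk value, and the budget would cap the number of such runs.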

Virtual Adversarial Humans finding Hazards in Robot Workplaces

Mar 01, 2021

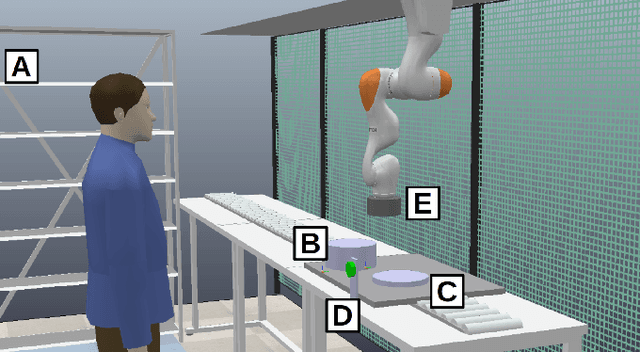

Abstract:During the planning phase of industrial robot workplaces, hazard analyses are required so that potential hazards for human workers can be identified and appropriate safety measures can be implemented. Existing hazard analysis methods use human reasoning, checklists and/or abstract system models, which limit the level of detail. We propose a new approach that frames hazard analysis as a search problem in a dynamic simulation environment. Our goal is to identify workplace hazards by searching for simulation sequences that result in hazardous situations. We solve this search problem by placing virtual humans into workplace simulation models. These virtual humans act in an adversarial manner: They learn to provoke unsafe situations, and thereby uncover workplace hazards. Although this approach cannot replace a thorough hazard analysis, it can help uncover hazards that otherwise may have been overlooked, especially in early development stages. Thus, it helps to prevent costly re-designs at later development stages. For validation, we performed hazard analyses in six different example scenarios that reflect typical industrial robot workplaces.

Simulation-based Testing for Early Safety-Validation of Robot Systems

Nov 20, 2020

Abstract:Industrial human-robot collaborative systems must be validated thoroughly with regard to safety. The sooner potential hazards for workers can be exposed, the less costly is the implementation of necessary changes. Due to the complexity of robot systems, safety flaws often stay hidden, especially at early design stages, when a physical implementation is not yet available for testing. Simulation-based testing is a possible way to identify hazards in an early stage. However, creating simulation conditions in which hazards become observable can be difficult. Brute-force or Monte-Carlo-approaches are often not viable for hazard identification, due to large search spaces. This work addresses this problem by using a human model and an optimization algorithm to generate high-risk human behavior in simulation, thereby exposing potential hazards. A proof of concept is shown in an application example where the method is used to find hazards in an industrial robot cell.
