Bengt Lennartson

Reinforcement Learning for Safety Testing: Lessons from A Mobile Robot Case Study

Nov 06, 2023

Abstract: Safety-critical robot systems need thorough testing to expose design flaws and software bugs which could endanger humans. Testing in simulation is becoming increasingly popular, as it can be applied early in the development process and does not endanger any real-world operators. However, not all safety-critical flaws become immediately observable in simulation. Some may only become observable under certain critical conditions. If these conditions are not covered, safety flaws may remain undetected. Creating critical tests is therefore crucial. In recent years, there has been a trend towards using Reinforcement Learning (RL) for this purpose. Guided by domain-specific reward functions, RL algorithms are used to learn critical test strategies. This paper presents a case study in which the collision avoidance behavior of a mobile robot is subjected to RL-based testing. The study confirms prior research which shows that RL can be an effective testing tool. However, the study also highlights certain challenges associated with RL-based testing, namely (i) a possible lack of diversity in test conditions and (ii) the phenomenon of reward hacking where the RL agent behaves in undesired ways due to a misalignment of reward and test specification. The challenges are illustrated with data and examples from the experiments, and possible mitigation strategies are discussed.

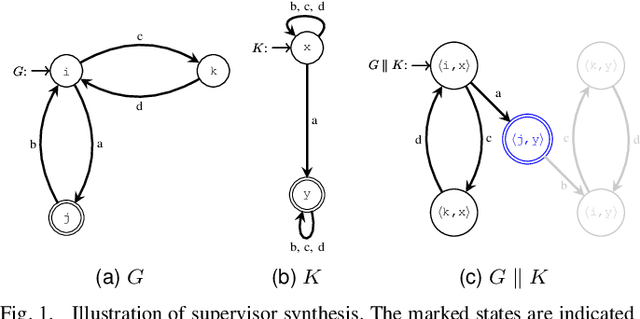

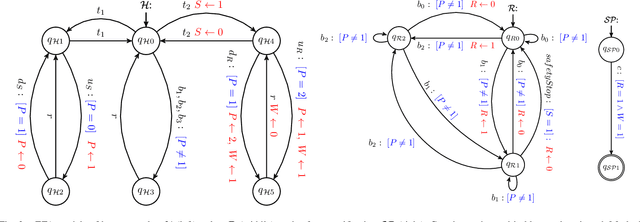

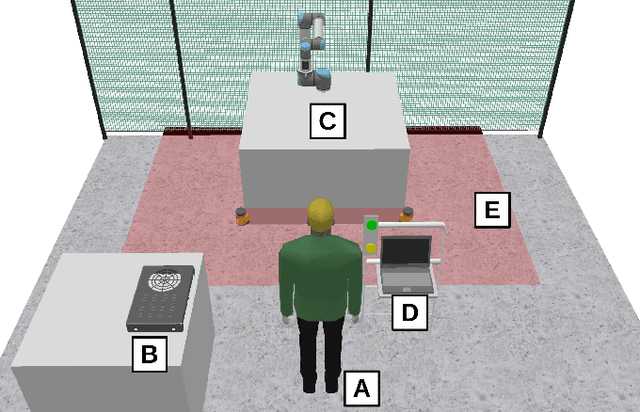

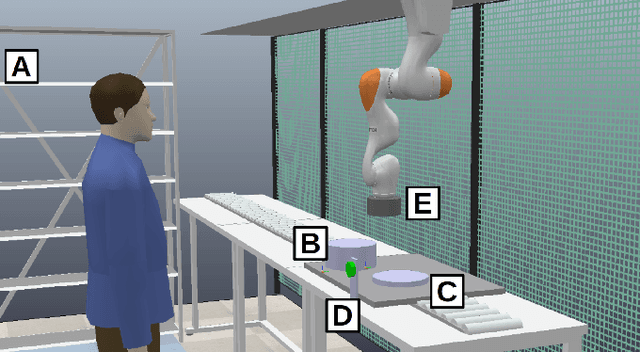

Hazard Analysis of Collaborative Automation Systems: A Two-layer Approach based on Supervisory Control and Simulation

Sep 26, 2022

Abstract: Safety-critical systems are typically subjected to hazard analysis before commissioning to identify and analyse potentially hazardous system states that may arise during operation. Currently, hazard analysis is mainly based on human reasoning, past experiences, and simple tools such as checklists and spreadsheets. Increasing system complexity makes such approaches less and less suitable. Furthermore, testing-based hazard analysis is often not suitable due to high costs or dangers of physical faults. Model-based hazard analysis methods offer a remedy; they rely either on formal models or on simulation models, each with its own benefits and drawbacks. This paper proposes a two-layer approach that combines the benefits of exhaustive analysis using formal methods with detailed analysis using simulation. Unsafe behaviours that lead to unsafe states are first synthesised from a formal model of the system using Supervisory Control Theory. The result is then input to the simulation, where detailed analyses using domain-specific risk metrics are performed. Though the presented approach is generally applicable, this paper demonstrates its benefits on an industrial human-robot collaboration system.