Woo-Jeong Baek

Uncertainty-aware Risk Assessment of Robotic Systems via Importance Sampling

Aug 27, 2023

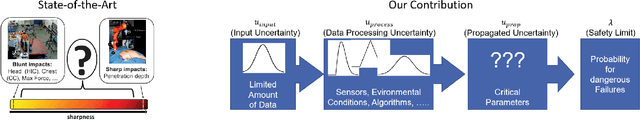

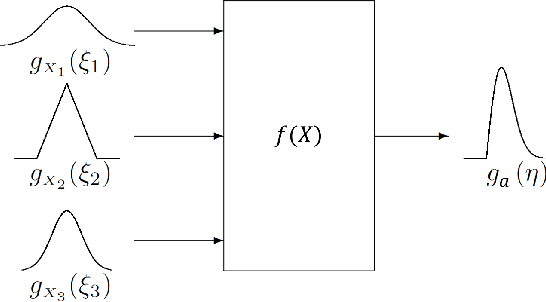

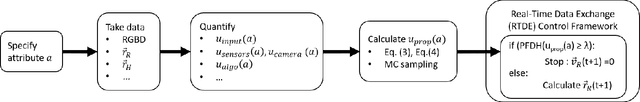

Abstract: In this paper, we introduce a probabilistic approach to the risk assessment of robot systems that focuses on the impact of uncertainties. While the current literature offers various approaches to identifying systematic hazards (e.g., bugs and design flaws), little attention has been devoted to evaluating risks in robot systems probabilistically. Existing methods rely on discrete notions of dangerous events and assume that their consequences can be described by simple logical operations. In this work, we consider measurement uncertainties as one main contributor to the emergence of risks. First, we study the impact of temporal and spatial uncertainties on the occurrence probability of dangerous failures, thereby deriving an approach for uncertainty-aware risk assessment. Second, we introduce a method to improve the statistical significance of our results: while the rare occurrence of hazardous events makes it challenging to draw conclusions with reliable accuracy, we show that importance sampling (a technique that successively generates samples in regions with sparse probability densities) overcomes this issue. We demonstrate the validity of our novel uncertainty-aware risk assessment method in three simulation scenarios from the domain of human-robot collaboration. Finally, we show how the results can be used to evaluate arbitrary safety limits of robot systems.
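To illustrate why importance sampling helps with rare hazardous events, here is a minimal sketch. It is not the paper's implementation. It assumes a single hypothetical quantity, a measured human-robot separation distance with Gaussian measurement uncertainty, and a hypothetical safety limit. The function names and all numbers are illustrative assumptions. The proposal distribution is fixed and centered on the safety limit, whereas the abstract describes generating samples successively.

```python
import math
import random


def exact_tail(mu, sigma, limit):
    """Exact P(d < limit) for d ~ N(mu, sigma^2), used only as a reference."""
    return 0.5 * math.erfc((mu - limit) / (sigma * math.sqrt(2.0)))


def naive_estimate(mu, sigma, limit, n, rng):
    """Plain Monte Carlo: count how often a sampled distance violates the limit."""
    hits = sum(1 for _ in range(n) if rng.gauss(mu, sigma) < limit)
    return hits / n


def importance_estimate(mu, sigma, limit, n, rng):
    """Importance sampling with a proposal N(limit, sigma^2) (an assumed choice).

    Each sample that violates the limit is reweighted by p(d) / q(d), where
    p is the nominal density N(mu, sigma^2) and q is the proposal density.
    """
    total = 0.0
    for _ in range(n):
        d = rng.gauss(limit, sigma)
        if d < limit:
            # Log of the density ratio for two Gaussians with equal variance.
            log_w = ((d - limit) ** 2 - (d - mu) ** 2) / (2.0 * sigma ** 2)
            total += math.exp(log_w)
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    mu, sigma, limit = 1.0, 0.1, 0.6  # metres; hypothetical values
    print("exact     :", exact_tail(mu, sigma, limit))
    print("naive     :", naive_estimate(mu, sigma, limit, 100_000, rng))
    print("importance:", importance_estimate(mu, sigma, limit, 100_000, rng))
```

With these assumed values the violation lies four standard deviations from the mean, so a plain Monte Carlo run of this size sees only a handful of violations, if any. The reweighted estimator places roughly half of its samples in the hazardous region, which is what makes the estimate usable.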

Safety Evaluation of Robot Systems via Uncertainty Quantification

Feb 21, 2023

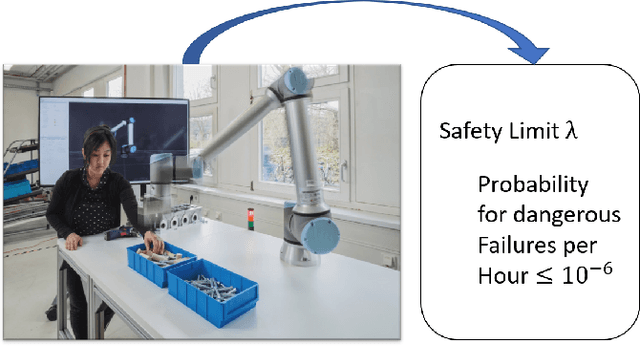

Abstract: In this paper, we present an approach for quantifying the propagated uncertainty of robot systems in an online and data-driven manner. Especially in Human-Robot Collaboration, keeping track of safety compliance at run time is essential: misclassifying dangerous situations as safe might result in severe accidents. According to official regulations (e.g., ISO standards), safety in industrial robot applications depends on critical parameters, such as the distance and relative velocity between humans and robots. However, safety can only be assured given a measure for the reliability of these parameters. While different risk detection and mitigation approaches exist in the literature, a measure that can be used to evaluate safety limits online and that succinctly indicates whether a situation is safe or dangerous is missing to date. Motivated by this, we introduce a generalizable method for calculating the propagated measurement uncertainty of arbitrary parameters that captures the accumulated uncertainty originating from sensory devices and environmental disturbances of the system. To show that our approach delivers correct results, we perform validation experiments in simulation. In addition, we employ our method in two real-world settings and demonstrate how quantifying the propagated uncertainty of critical parameters facilitates assessing safety online in Human-Robot Collaboration.
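The abstract does not state how the propagated uncertainty is computed, so the following is only a sketch of one standard technique that could play this role: first-order (linearized) propagation for uncorrelated inputs, with partial derivatives taken numerically. The helper names, the planar distance function, and the numbers are hypothetical and are not taken from the paper.

```python
import math


def propagate_uncertainty(f, x, u, eps=1e-6):
    """First-order standard uncertainty of f(x), assuming uncorrelated inputs.

    x: nominal input values; u: their standard uncertainties.
    Partial derivatives are approximated by central differences.
    """
    variance = 0.0
    for i, (xi, ui) in enumerate(zip(x, u)):
        step = eps * max(1.0, abs(xi))
        upper = list(x)
        upper[i] = xi + step
        lower = list(x)
        lower[i] = xi - step
        dfdx = (f(upper) - f(lower)) / (2.0 * step)
        variance += (dfdx * ui) ** 2
    return math.sqrt(variance)


def distance(v):
    """Planar human-robot distance from (human_x, human_y, robot_x, robot_y)."""
    hx, hy, rx, ry = v
    return math.hypot(hx - rx, hy - ry)


if __name__ == "__main__":
    positions = [3.0, 4.0, 0.0, 0.0]   # metres; hypothetical measurement
    sigmas = [0.05, 0.05, 0.05, 0.05]  # metres; hypothetical sensor noise
    d = distance(positions)
    u_d = propagate_uncertainty(distance, positions, sigmas)
    print(f"distance = {d:.3f} m, propagated uncertainty = {u_d:.4f} m")
```

A safety check could then compare `d - k * u_d` against a required minimum distance for some coverage factor `k`. That decision rule is also an assumption here, not something the abstract specifies.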
