Tiberiu Cocias

Cloud2Edge Elastic AI Framework for Prototyping and Deployment of AI Inference Engines in Autonomous Vehicles

Sep 23, 2020

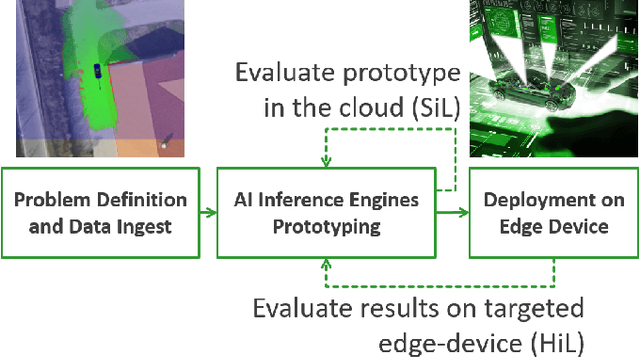

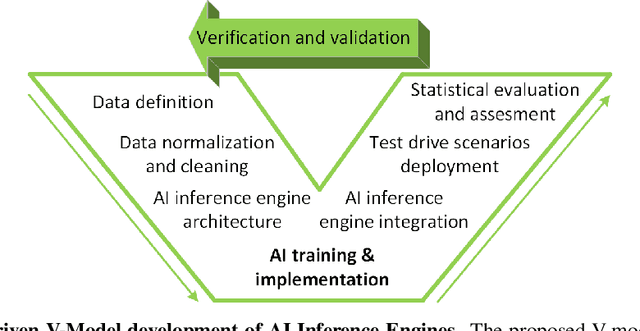

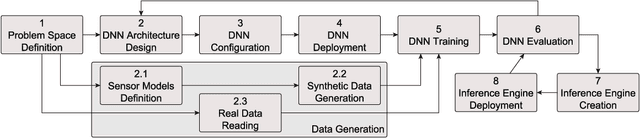

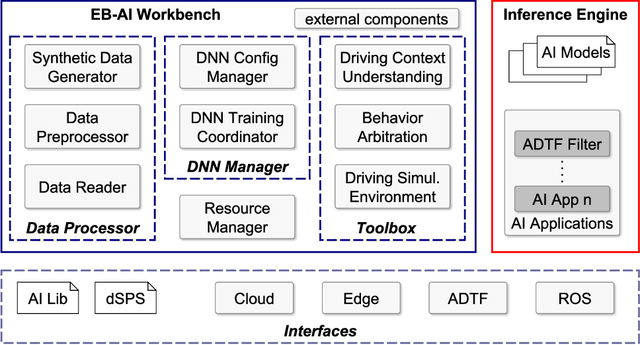

Abstract: Self-driving cars and autonomous vehicles are revolutionizing the automotive sector and shaping the future of mobility. Although the integration of novel technologies such as Artificial Intelligence (AI) and Cloud/Edge computing provides golden opportunities to improve autonomous driving applications, the whole prototyping and deployment cycle of AI components needs to be modernized accordingly. This paper proposes a novel framework for developing so-called AI Inference Engines for autonomous driving applications based on deep learning modules, where training tasks are deployed elastically over both Cloud and Edge resources, with the purpose of reducing the required network bandwidth, as well as mitigating privacy issues. Based on our proposed data-driven V-Model, we introduce a simple yet elegant solution for the AI components development cycle, where prototyping takes place in the cloud according to the Software-in-the-Loop (SiL) paradigm, while deployment and evaluation on the target ECUs (Electronic Control Units) are performed as Hardware-in-the-Loop (HiL) testing. The effectiveness of the proposed framework is demonstrated using two real-world use cases of AI inference engines for autonomous vehicles, namely environment perception and most probable path prediction.

GFPNet: A Deep Network for Learning Shape Completion in Generic Fitted Primitives

Jun 03, 2020

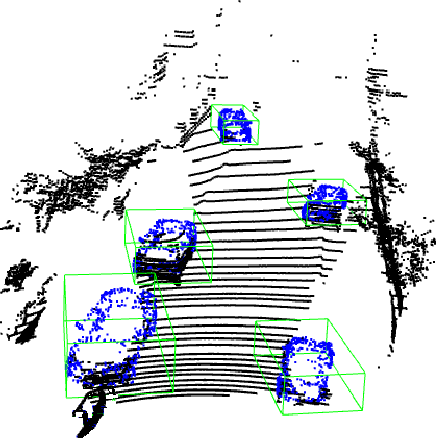

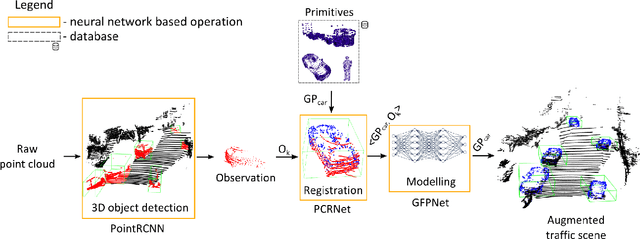

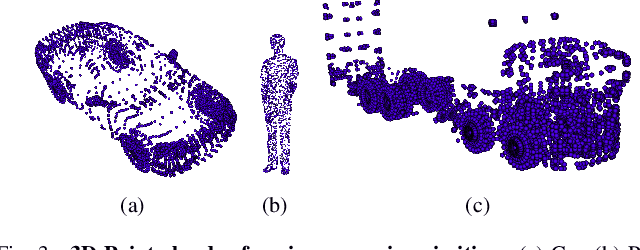

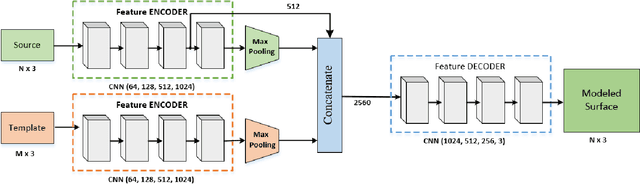

Abstract: In this paper, we propose an object reconstruction apparatus that uses the so-called Generic Primitives (GP) to complete shapes. A GP is a 3D point cloud depicting a generalized shape of a class of objects. To reconstruct the objects in a scene, we first fit a GP onto each occluded object to obtain an initial raw structure. Second, we use a model-based deformation technique to fold the surface of the GP over the occluded object. The deformation model is encoded within the layers of a Deep Neural Network (DNN), coined GFPNet. The objective of the network is to transfer the particularities of the object from the scene to the raw volume represented by the GP. We show that GFPNet competes with state-of-the-art shape completion methods by providing performance results on the ModelNet and KITTI benchmarking datasets.

A Survey of Deep Learning Techniques for Autonomous Driving

Oct 17, 2019

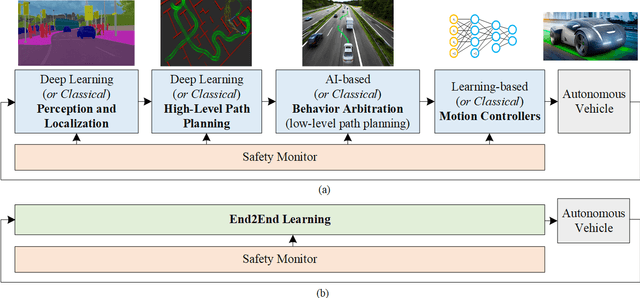

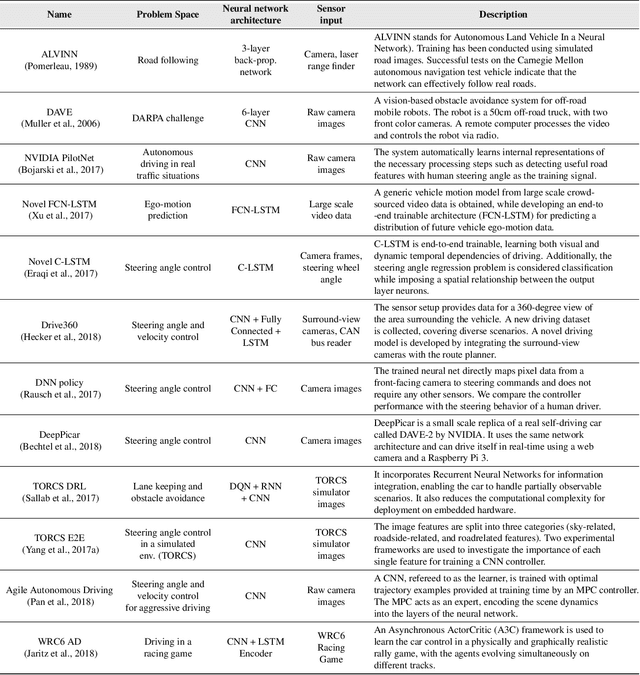

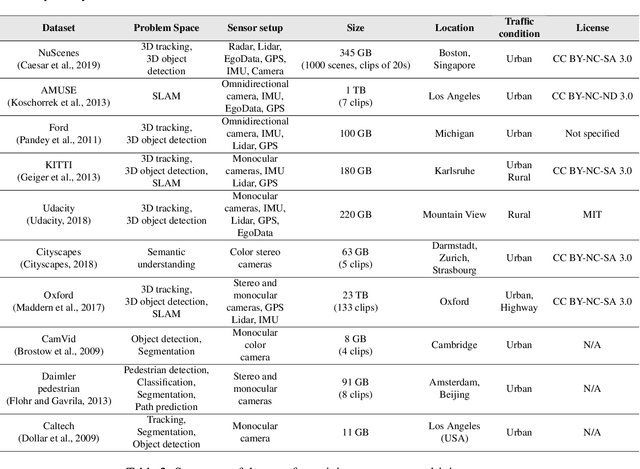

Abstract: The last decade witnessed increasingly rapid progress in self-driving vehicle technology, mainly backed up by advances in the area of deep learning and artificial intelligence. The objective of this paper is to survey the current state of the art in deep learning technologies used in autonomous driving. We start by presenting AI-based self-driving architectures, convolutional and recurrent neural networks, as well as the deep reinforcement learning paradigm. These methodologies form a base for the surveyed driving scene perception, path planning, behavior arbitration and motion control algorithms. We investigate both the modular perception-planning-action pipeline, where each module is built using deep learning methods, and End2End systems, which directly map sensory information to steering commands. Additionally, we tackle current challenges encountered in designing AI architectures for autonomous driving, such as their safety, training data sources and computational hardware. The comparison presented in this survey helps to gain insight into the strengths and limitations of deep learning and AI approaches for autonomous driving and assists with design choices.

* 38 pages, 7 figures

NeuroTrajectory: A Neuroevolutionary Approach to Local State Trajectory Learning for Autonomous Vehicles

Jun 26, 2019

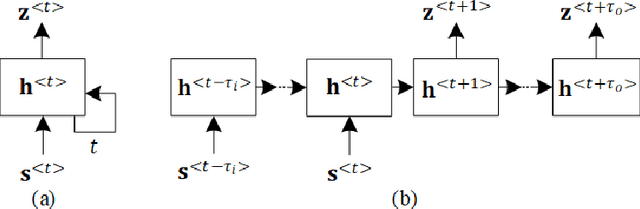

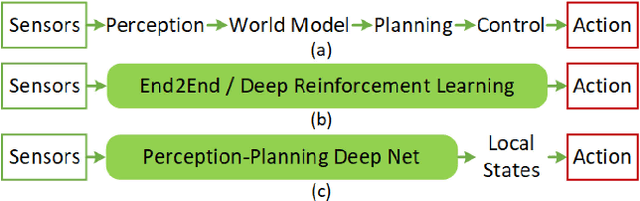

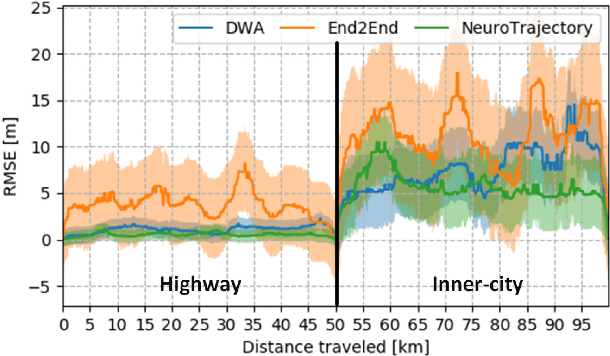

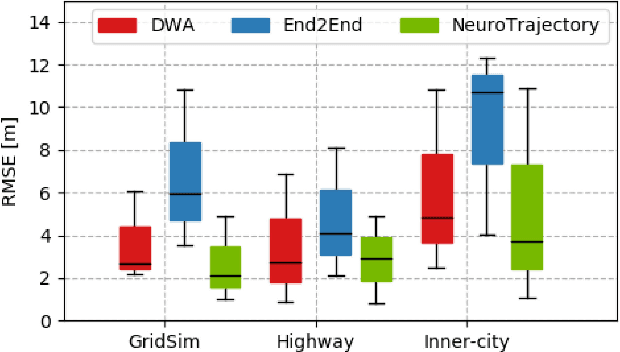

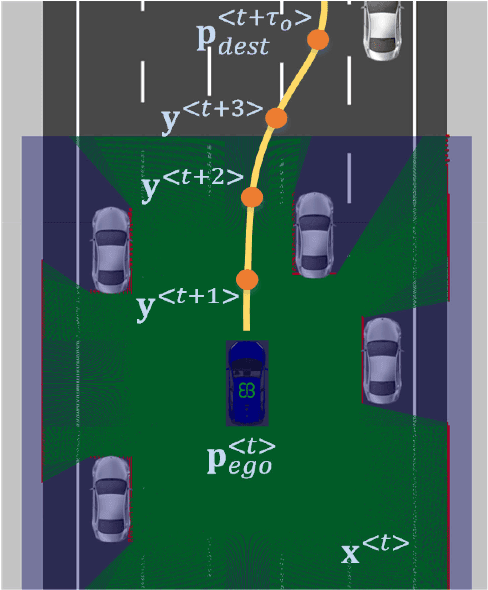

Abstract: Autonomous vehicles are controlled today based either on sequences of decoupled perception-planning-action operations or on End2End or Deep Reinforcement Learning (DRL) systems. Current deep learning solutions for autonomous driving are subject to several limitations (e.g. they estimate driving actions through a direct mapping of sensors to actuators, or require complex reward shaping methods). Although the cost function used for training can aggregate multiple weighted objectives, the gradient descent step is computed by the backpropagation algorithm using a single-objective loss. To address these issues, we introduce NeuroTrajectory, a multi-objective neuroevolutionary approach to local state trajectory learning for autonomous driving, where the desired state trajectory of the ego-vehicle is estimated over a finite prediction horizon by a perception-planning deep neural network. In comparison to DRL methods, which predict optimal actions for the upcoming sampling time, we estimate a sequence of optimal states that can be used for motion control. We propose an approach which uses genetic algorithms for training a population of deep neural networks, where each network individual is evaluated based on a multi-objective fitness vector, with the purpose of establishing a so-called Pareto front of optimal deep neural networks. The performance of an individual is given by a fitness vector composed of three elements, which describe the vehicle's travel path, lateral velocity and longitudinal speed, respectively. The same network structure can be trained on synthetic, as well as on real-world data sequences. We have benchmarked our system against a baseline Dynamic Window Approach (DWA), as well as against an End2End supervised learning method.
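
The Pareto front mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `dominates` and `pareto_front`, the sample fitness values, and the assumption that all three fitness elements are costs to be minimized are hypothetical, chosen only to show how non-dominated individuals are selected from a population evaluated on a three-element fitness vector.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if fitness vector a Pareto-dominates b.

    Assumption: every objective is a cost (lower is better).
    a dominates b when it is no worse in all objectives and
    strictly better in at least one.
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(fitness: List[Sequence[float]]) -> List[int]:
    """Return the indices of individuals not dominated by any other."""
    return [
        i
        for i, fi in enumerate(fitness)
        if not any(dominates(fj, fi) for j, fj in enumerate(fitness) if j != i)
    ]


# Hypothetical fitness vectors, one per network individual:
# (travel path cost, lateral velocity cost, longitudinal speed cost)
population_fitness = [
    (0.2, 0.5, 1.0),
    (0.3, 0.4, 1.2),
    (0.4, 0.6, 1.1),  # worse than the first individual in every objective
    (0.1, 0.9, 0.8),
]

front = pareto_front(population_fitness)
print(front)
```

With these sample values the third individual is dropped because the first one is better on all three objectives, while the remaining three each trade one objective against another and therefore stay on the front. The pairwise comparison is quadratic in population size, which is acceptable for a sketch but not tuned for large populations.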
