Tiarna Lee

An investigation into the causes of race bias in AI-based cine CMR segmentation

Aug 05, 2024

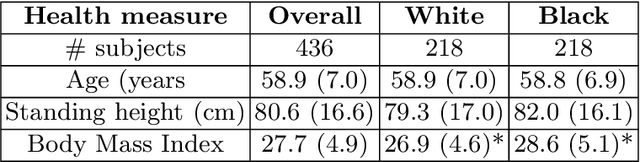

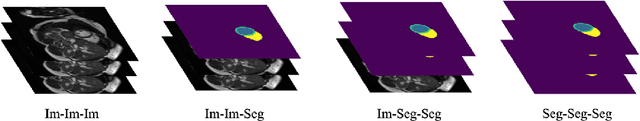

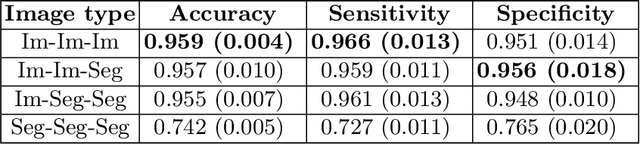

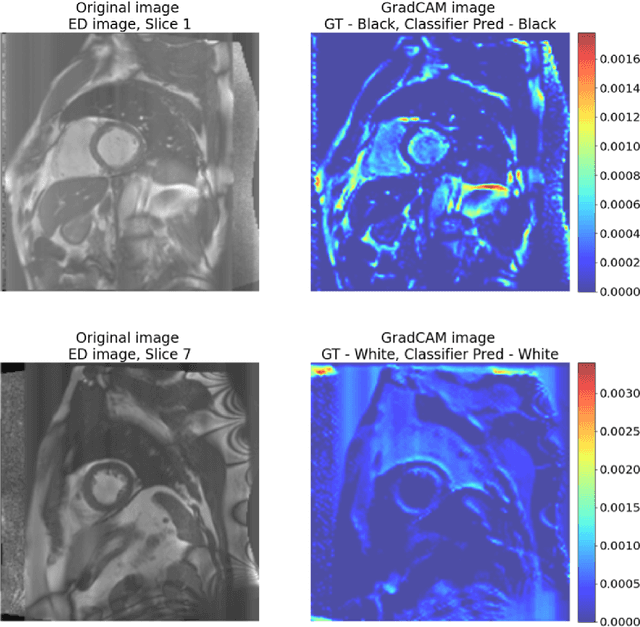

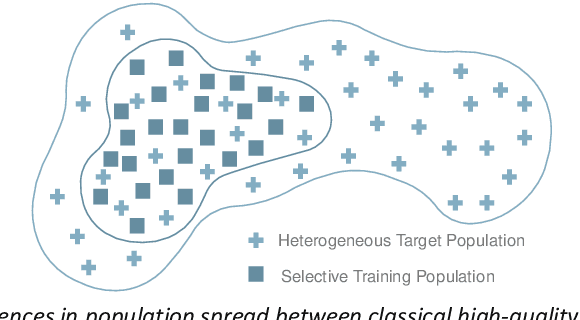

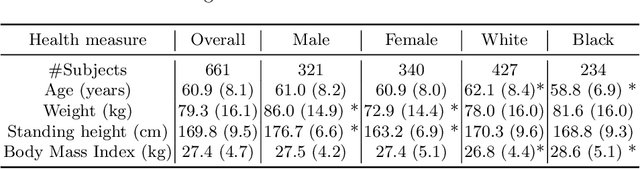

Abstract: Artificial intelligence (AI) methods are being used increasingly for the automated segmentation of cine cardiac magnetic resonance (CMR) imaging. However, these methods have been shown to be subject to race bias, i.e. they exhibit different levels of performance for different races depending on the (im)balance of the data used to train the AI model. In this paper we investigate the source of this bias, seeking to understand its root cause(s) so that it can be effectively mitigated. We perform a series of classification and segmentation experiments on short-axis cine CMR images acquired from Black and White subjects from the UK Biobank and apply AI interpretability methods to understand the results. In the classification experiments, we found that race can be predicted with high accuracy from the images alone, but less accurately from ground truth segmentations, suggesting that the distributional shift between races, which is often the cause of AI bias, is mostly image-based rather than segmentation-based. The interpretability methods showed that most attention in the classification models was focused on non-heart regions, such as subcutaneous fat. Cropping the images tightly around the heart reduced classification accuracy to around chance level. Similarly, race can be predicted from the latent representations of a biased segmentation model, suggesting that race information is encoded in the model. Cropping images tightly around the heart reduced but did not eliminate segmentation bias. We also investigate the influence of possible confounders on the bias observed.

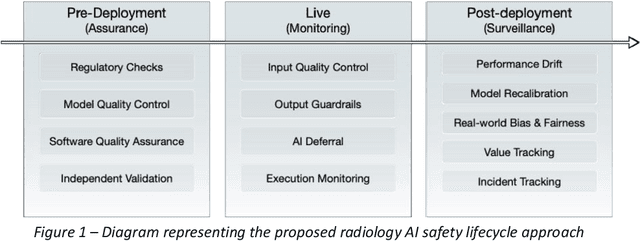

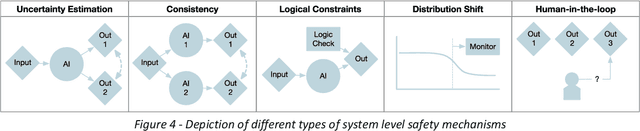

RAISE -- Radiology AI Safety, an End-to-end lifecycle approach

Nov 24, 2023

Abstract: The integration of AI into radiology introduces opportunities for improved clinical care provision and efficiency but it demands a meticulous approach to mitigate potential risks as with any other new technology. Beginning with rigorous pre-deployment evaluation and validation, the focus should be on ensuring models meet the highest standards of safety, effectiveness and efficacy for their intended applications. Input and output guardrails implemented during production usage act as an additional layer of protection, identifying and addressing individual failures as they occur. Continuous post-deployment monitoring allows for tracking population-level performance (data drift), fairness, and value delivery over time. Scheduling reviews of post-deployment model performance and educating radiologists about new algorithmic-driven findings is critical for AI to be effective in clinical practice. Recognizing that no single AI solution can provide absolute assurance even when limited to its intended use, the synergistic application of quality assurance at multiple levels - regulatory, clinical, technical, and ethical - is emphasized. Collaborative efforts between stakeholders spanning healthcare systems, industry, academia, and government are imperative to address the multifaceted challenges involved. Trust in AI is an earned privilege, contingent on a broad set of goals, among them transparently demonstrating that the AI adheres to the same rigorous safety, effectiveness and efficacy standards as other established medical technologies. By doing so, developers can instil confidence among providers and patients alike, enabling the responsible scaling of AI and the realization of its potential benefits. The roadmap presented herein aims to expedite the achievement of deployable, reliable, and safe AI in radiology.

An Investigation Into Race Bias in Random Forest Models Based on Breast DCE-MRI Derived Radiomics Features

Sep 29, 2023

Abstract: Recent research has shown that artificial intelligence (AI) models can exhibit bias in performance when trained using data that are imbalanced by protected attribute(s). Most work to date has focused on deep learning models, but classical AI techniques that make use of hand-crafted features may also be susceptible to such bias. In this paper we investigate the potential for race bias in random forest (RF) models trained using radiomics features. Our application is prediction of tumour molecular subtype from dynamic contrast enhanced magnetic resonance imaging (DCE-MRI) of breast cancer patients. Our results show that radiomics features derived from DCE-MRI data do contain race-identifiable information, and that RF models can be trained to predict White and Black race from these data with 60-70% accuracy, depending on the subset of features used. Furthermore, RF models trained to predict tumour molecular subtype using race-imbalanced data seem to produce biased behaviour, exhibiting better performance on test data from the race on which they were trained.

An investigation into the impact of deep learning model choice on sex and race bias in cardiac MR segmentation

Aug 25, 2023

Abstract: In medical imaging, artificial intelligence (AI) is increasingly being used to automate routine tasks. However, these algorithms can exhibit and exacerbate biases which lead to disparate performances between protected groups. We investigate the impact of model choice on how imbalances in subject sex and race in training datasets affect AI-based cine cardiac magnetic resonance image segmentation. We evaluate three convolutional neural network-based models and one vision transformer model. We find significant sex bias in three of the four models and racial bias in all of the models. However, the severity and nature of the bias varies between the models, highlighting the importance of model choice when attempting to train fair AI-based segmentation models for medical imaging tasks.

A systematic study of race and sex bias in CNN-based cardiac MR segmentation

Sep 04, 2022

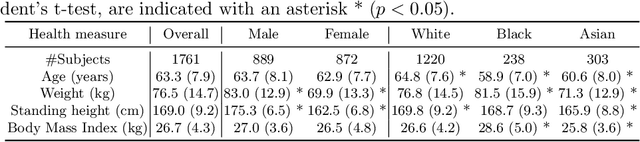

Abstract: In computer vision there has been significant research interest in assessing potential demographic bias in deep learning models. One of the main causes of such bias is imbalance in the training data. In medical imaging, where the potential impact of bias is arguably much greater, there has been less interest. In medical imaging pipelines, segmentation of structures of interest plays an important role in estimating clinical biomarkers that are subsequently used to inform patient management. Convolutional neural networks (CNNs) are starting to be used to automate this process. We present the first systematic study of the impact of training set imbalance on race and sex bias in CNN-based segmentation. We focus on segmentation of the structures of the heart from short axis cine cardiac magnetic resonance images, and train multiple CNN segmentation models with different levels of race/sex imbalance. We find no significant bias in the sex experiment but significant bias in two separate race experiments, highlighting the need to consider adequate representation of different demographic groups in health datasets.
