Tiago Ribeiro

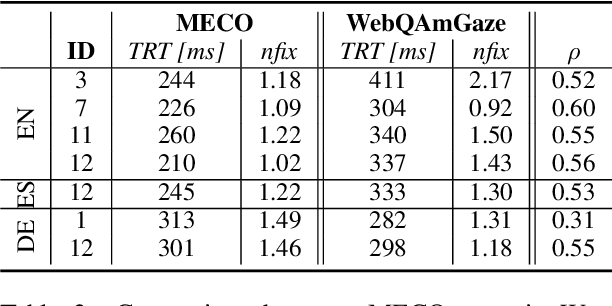

Evaluating Webcam-based Gaze Data as an Alternative for Human Rationale Annotations

Feb 29, 2024

Abstract: Rationales in the form of manually annotated input spans usually serve as ground truth when evaluating explainability methods in NLP. They are, however, time-consuming and often biased by the annotation process. In this paper, we debate whether human gaze, in the form of webcam-based eye-tracking recordings, poses a valid alternative when evaluating importance scores. We evaluate the additional information provided by gaze data, such as total reading times, gaze entropy, and decoding accuracy with respect to human rationale annotations. We compare WebQAmGaze, a multilingual dataset for information-seeking QA, with attention and explainability-based importance scores for 4 different multilingual Transformer-based language models (mBERT, distil-mBERT, XLMR, and XLMR-L) and 3 languages (English, Spanish, and German). Our pipeline can easily be applied to other tasks and languages. Our findings suggest that gaze data offers valuable linguistic insights that could be leveraged to infer task difficulty and further show a comparable ranking of explainability methods to that of human rationales.

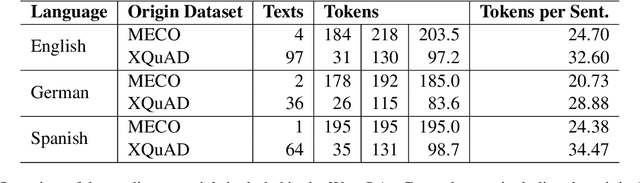

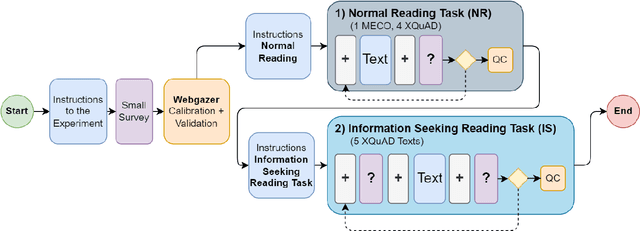

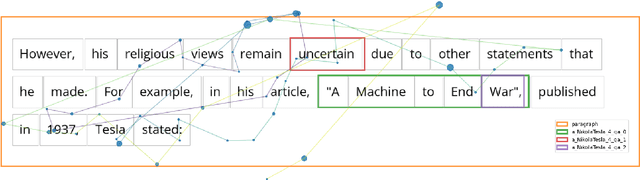

WebQAmGaze: A Multilingual Webcam Eye-Tracking-While-Reading Dataset

Apr 14, 2023

Abstract: We create WebQAmGaze, a multilingual low-cost eye-tracking-while-reading dataset, designed to support the development of fair and transparent NLP models. WebQAmGaze includes webcam eye-tracking data from 332 participants naturally reading English, Spanish, and German texts. Each participant performs two reading tasks composed of five texts: a normal reading task and an information-seeking task. After preprocessing the data, we find that fixations on relevant spans seem to indicate correctness when answering the comprehension questions. Additionally, we compare the collected data with high-quality eye-tracking data. The results show a moderate correlation between the features obtained with the webcam-ET and those of a commercial ET device. We believe this data can advance webcam-based reading studies and open the way to cheaper and more accessible data collection. WebQAmGaze is useful for learning about the cognitive processes behind question answering (QA) and for applying these insights to computational models of language understanding.
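
Relating fixations on relevant spans to answer correctness first requires mapping gaze onto words. The following is a minimal Python sketch of that mapping. It assumes fixations have already been detected and that each word has an on-screen bounding box. The class and function names are hypothetical. Real webcam data would likely also need a tolerance margin around each box because of its lower spatial accuracy, which this sketch omits.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Fixation:
    x: float
    y: float
    duration_ms: float


@dataclass
class WordBox:
    left: float
    top: float
    right: float
    bottom: float


def word_index(fix: Fixation, boxes: Sequence[WordBox]) -> Optional[int]:
    """Index of the first word box containing the fixation, or None if it lands off-text."""
    for i, b in enumerate(boxes):
        if b.left <= fix.x <= b.right and b.top <= fix.y <= b.bottom:
            return i
    return None


def relevant_span_share(
    fixations: Sequence[Fixation],
    boxes: Sequence[WordBox],
    span: Tuple[int, int],
) -> float:
    """Fraction of word-mapped fixation time that falls on words span[0] .. span[1] - 1."""
    start, end = span
    on_words = 0.0
    on_span = 0.0
    for f in fixations:
        idx = word_index(f, boxes)
        if idx is None:
            continue  # ignore fixations that hit no word
        on_words += f.duration_ms
        if start <= idx < end:
            on_span += f.duration_ms
    return on_span / on_words if on_words > 0 else 0.0
```

Under these assumptions, one could compare the distribution of `relevant_span_share` between correctly and incorrectly answered questions.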

Avant-Satie! Using ERIK to encode task-relevant expressivity into the animation of autonomous social robots

Mar 02, 2022

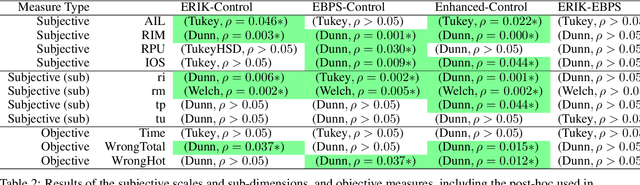

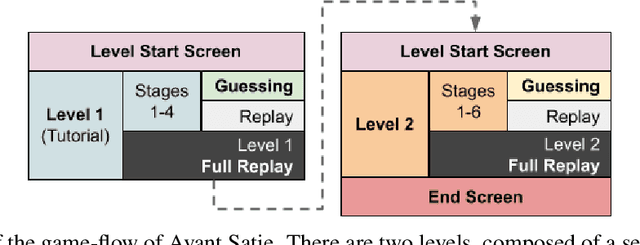

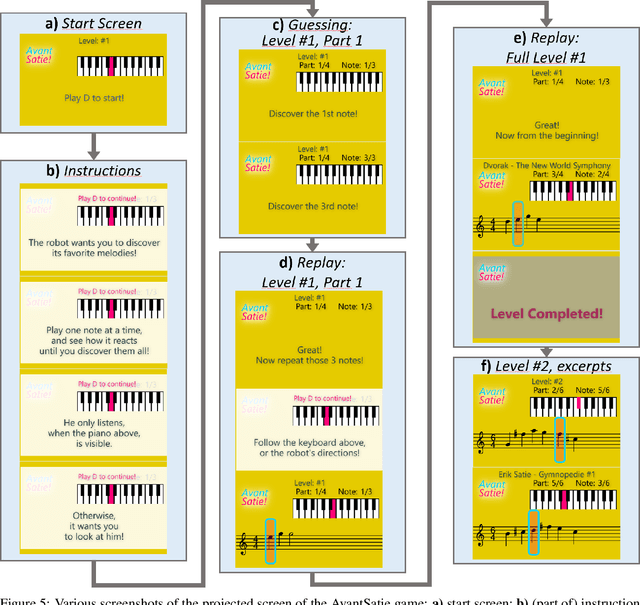

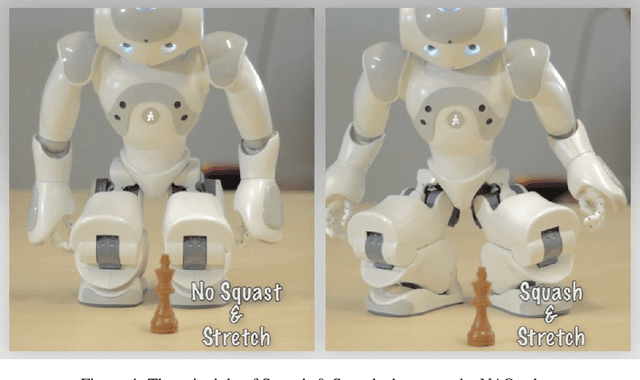

Abstract: ERIK is an expressive inverse kinematics technique that has previously been presented and evaluated both algorithmically and in a limited user-interaction scenario. It allows autonomous social robots to convey posture-based expressive information while gaze-tracking users. We have developed a new scenario aimed at further validating some of the claims left unsupported by the previous scenario. Our experiment features a fully autonomous Adelino robot and concludes that ERIK can be used to direct a user's choice of actions during the execution of a given task, purely through its non-verbal expressive cues.

Expressive Inverse Kinematics Solving in Real-time for Virtual and Robotic Interactive Characters

Sep 30, 2019

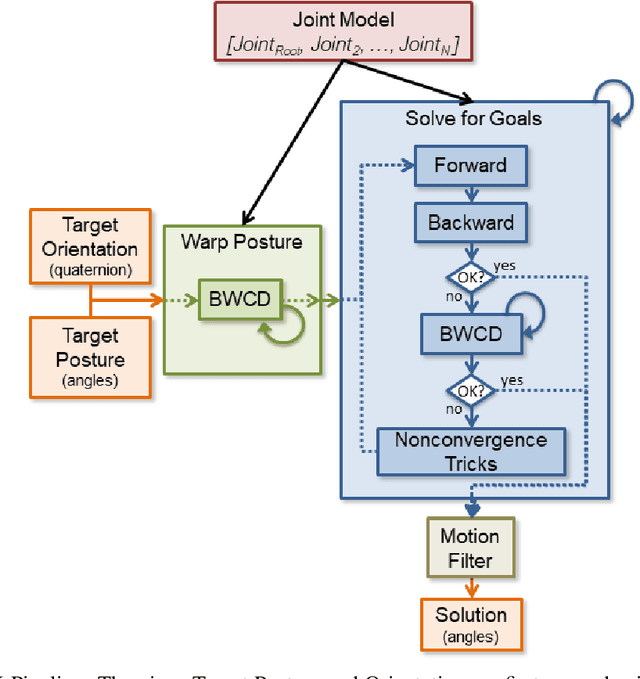

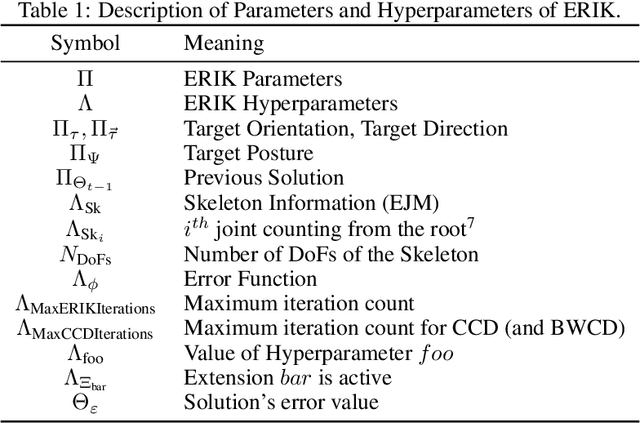

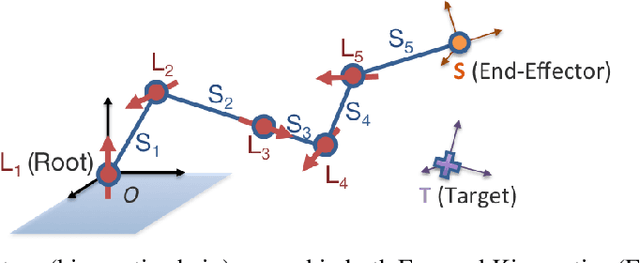

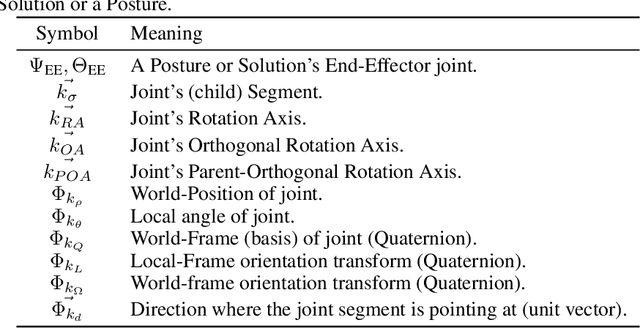

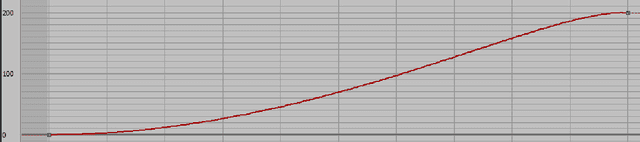

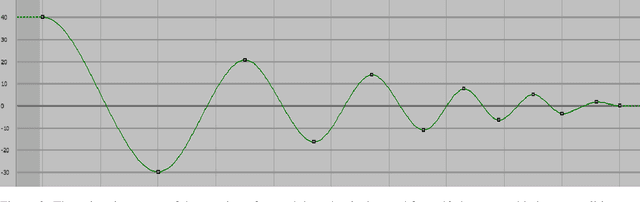

Abstract: With new advancements in interaction techniques, character animation also requires new methods to support fields such as robotics and VR/AR. Interactive characters in such fields are increasingly driven by AI, which opens up the possibility of non-linear and open-ended narratives that may even include interaction with the real, physical world. This paper presents and describes ERIK, an expressive inverse kinematics technique aimed at such applications. Our technique allows an arbitrary kinematic chain, such as an arm, snake, or robotic manipulator, to exhibit an expressive posture while aiming its end-point towards a given target orientation. The technique runs in interactive time and does not require any pre-processing step, such as the training required by machine learning techniques, in order to support new embodiments or new postures. This allows it to be integrated into an artist-friendly workflow, bringing artists closer to the development of such AI-driven expressive characters by allowing them to use their animation tools of choice and to properly pre-visualize the animation during design time, even on a real robot. The full algorithmic specification is presented so that it can be implemented and used throughout the communities of the various fields we address. We demonstrate ERIK on different virtual kinematic structures, and also on a low-fidelity robot crafted from wood and hobby-grade servos, to show how well the technique performs even on a low-grade robot. Our evaluation measures how well the character is able to point at the target orientation while minimally disrupting its target expressive posture and respecting its mechanical rotation limits.
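
The following is not ERIK, whose full specification is given in the paper. It is a much simpler Python sketch of the same trade-off for one special case. It assumes a planar chain of revolute joints, where the end-point orientation is simply the sum of the joint angles. The sketch finds the joint angles that are closest (in the least-squares sense) to an expressive posture, sum to the target orientation, and respect per-joint rotation limits. For this convex problem the optimum has the form theta_i = clamp(p_i + lambda, lo_i, hi_i) for a single shift lambda, found here by bisection. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def _clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def aim_planar_chain(
    posture: Sequence[float],
    limits: Sequence[Tuple[float, float]],
    target: float,
    iters: int = 100,
) -> List[float]:
    """Joint angles closest to `posture` whose sum (end-point orientation) matches `target`.

    Assumes a planar chain, so orientation = sum of relative joint angles.
    Unreachable targets are clamped to the nearest achievable orientation.
    """
    def total(shift: float) -> float:
        # Orientation reached when every joint is shifted by `shift`, then clamped.
        return sum(_clamp(p + shift, lo, hi) for p, (lo, hi) in zip(posture, limits))

    # At lo_s every joint sits at its lower limit; at hi_s every joint sits at its upper limit.
    lo_s = min(lo - p for p, (lo, _) in zip(posture, limits))
    hi_s = max(hi - p for p, (_, hi) in zip(posture, limits))
    reachable_min = sum(lo for lo, _ in limits)
    reachable_max = sum(hi for _, hi in limits)
    target = _clamp(target, reachable_min, reachable_max)

    # total() is monotone non-decreasing in the shift, so bisection converges.
    for _ in range(iters):
        mid = (lo_s + hi_s) / 2
        if total(mid) < target:
            lo_s = mid
        else:
            hi_s = mid
    shift = (lo_s + hi_s) / 2
    return [_clamp(p + shift, lo, hi) for p, (lo, hi) in zip(posture, limits)]
```

For example, with posture `[0.2, 0.3, 0.1]`, limits of ±1 rad and a target of 1.2 rad, each joint is shifted by 0.2 rad. When one joint hits its limit, the remaining correction is taken up by the joints that are still free.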

An extended framework for characterizing social robots

Jul 23, 2019

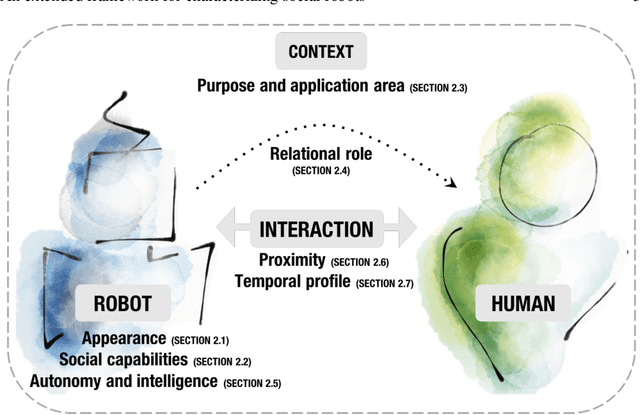

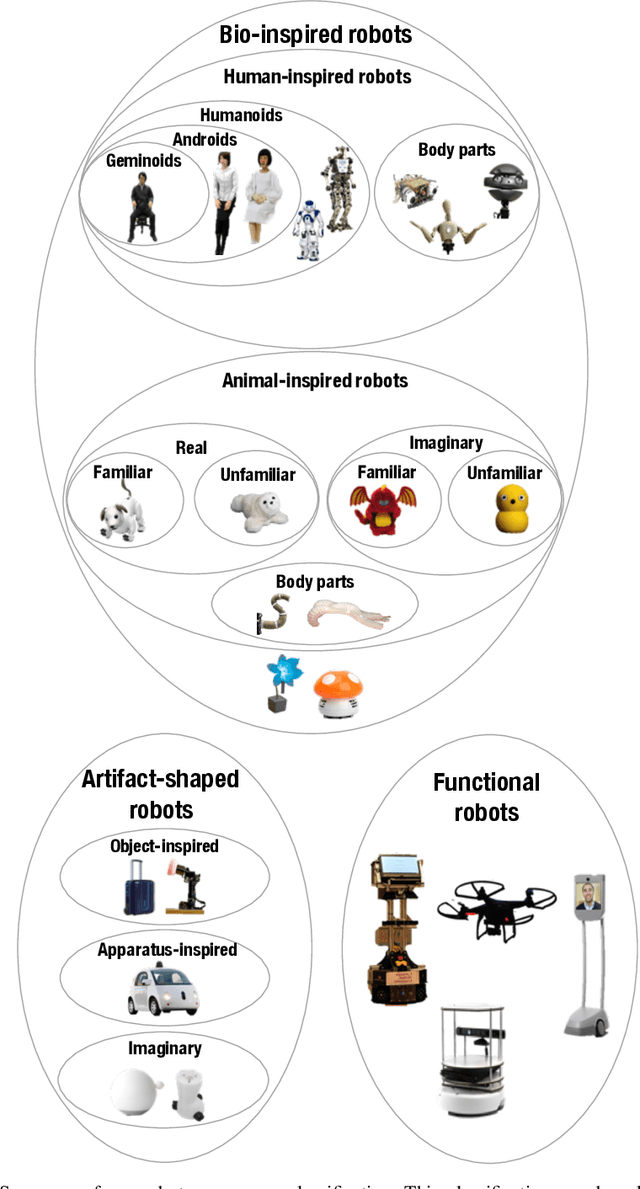

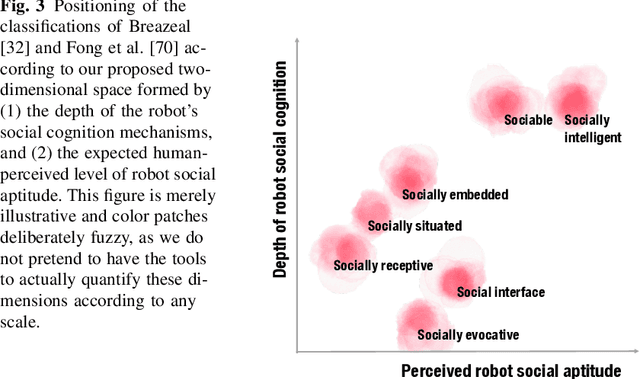

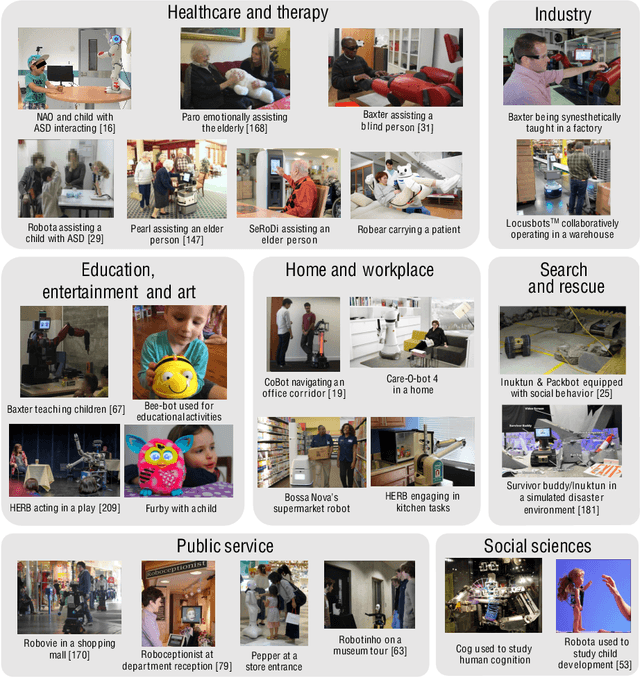

Abstract: Social robots are becoming increasingly diverse in their design, behavior, and usage. In this chapter, we provide a broad-ranging overview of the main characteristics that arise when one considers social robots and their interactions with humans. We specifically contribute a framework for characterizing social robots along 7 dimensions that we found to be most relevant to their design. These dimensions are: appearance, social capabilities, purpose and application area, relational role, autonomy and intelligence, proximity, and temporal profile. Within each dimension, we account for the variety of social robots through a combination of classifications and/or explanations. Our framework builds on and goes beyond existing frameworks, such as classifications and taxonomies found in the literature. More specifically, it contributes to the unification, clarification, and extension of key concepts, drawing from a rich body of relevant literature. This chapter is meant to serve as a resource for researchers, designers, and developers within and outside the field of social robotics. It is intended to provide them with tools to better understand and position existing social robots, as well as to inform their future design.

Nutty-based Robot Animation -- Principles and Practices

Apr 16, 2019

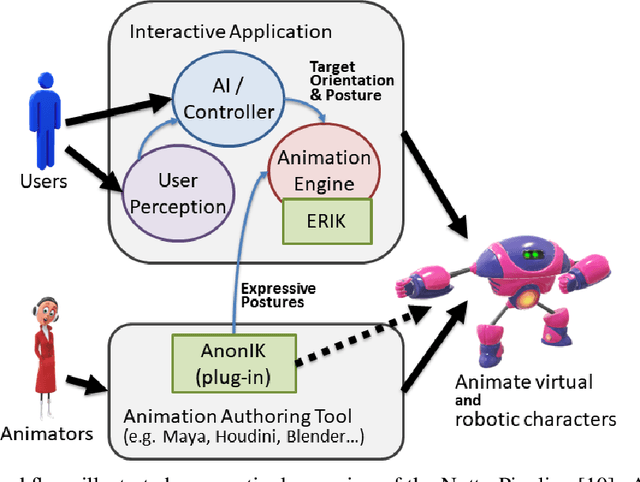

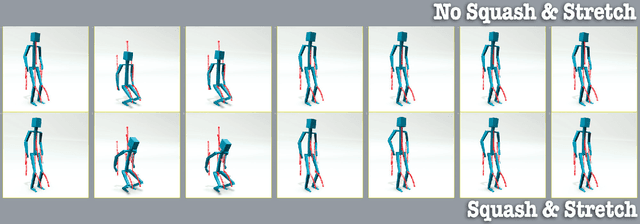

Abstract: Robot animation is a new form of character animation that extends the traditional process by allowing the animated motion to become more interactive and adaptable during interaction with users in real-world settings. This paper reviews how this new type of character animation has evolved from, and been shaped by, character animation principles and practices. We outline some new paradigms that aim to allow character animators to become robot animators and to properly take part in the development of social robots. One such paradigm is the 12 principles of robot animation, which describe general concepts that both animators and robot developers should consider in order to properly understand each other. We also introduce the concept of Kinematronics, for specifying the controllable and programmable expressive abilities of robots, and the Nutty Workflow and Pipeline. The Nutty Pipeline introduces the concept of the Programmable Robot Animation Engine, which makes it possible to generate, compose, and blend various types of animation sources into a final, interaction-enabled motion that can be rendered on robots in real time during real-world interactions. Additionally, we describe some types of tools that can be developed and integrated into Nutty-based workflows and pipelines, which allow animation artists to take an integral part in developing the expressive behaviour of social robots, and thus to evolve from standard (3D) character animators towards full-stack robot animators.
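
Blending several animation sources into one motion can be illustrated with a minimal Python sketch. It assumes each source (for example, a keyframed clip and a procedural gaze controller) outputs a pose as named joint angles plus a blend weight. The blend is a plain per-joint weighted average clamped to joint limits. This is an assumption chosen for illustration, not the Programmable Robot Animation Engine's actual blending scheme, and all names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Pose = Dict[str, float]  # hypothetical representation: joint name -> angle in radians


def blend_poses(
    layers: List[Tuple[Pose, float]],
    limits: Dict[str, Tuple[float, float]],
) -> Pose:
    """Per-joint weighted average over the layers that drive each joint, clamped to limits.

    A joint only averages over the layers that actually specify it, so a source
    that drives only the neck does not pull other joints towards zero.
    """
    sums: Dict[str, float] = {}
    weights: Dict[str, float] = {}
    for pose, w in layers:
        if w <= 0:
            continue  # inactive layers contribute nothing
        for joint, angle in pose.items():
            sums[joint] = sums.get(joint, 0.0) + w * angle
            weights[joint] = weights.get(joint, 0.0) + w
    blended: Pose = {}
    for joint, s in sums.items():
        lo, hi = limits.get(joint, (-math.inf, math.inf))
        # Respect the robot's mechanical rotation limits after blending.
        blended[joint] = max(lo, min(hi, s / weights[joint]))
    return blended
```

For example, blending an idle clip `{"neck": 0.2, "arm": 1.0}` and a gaze layer `{"neck": 0.8}` at equal weights yields a neck angle of 0.5 rad. With a neck limit of ±0.4 rad, the blended neck angle is clamped to 0.4 rad while the arm keeps the clip's value.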