Expressive Inverse Kinematics Solving in Real-time for Virtual and Robotic Interactive Characters


Sep 30, 2019

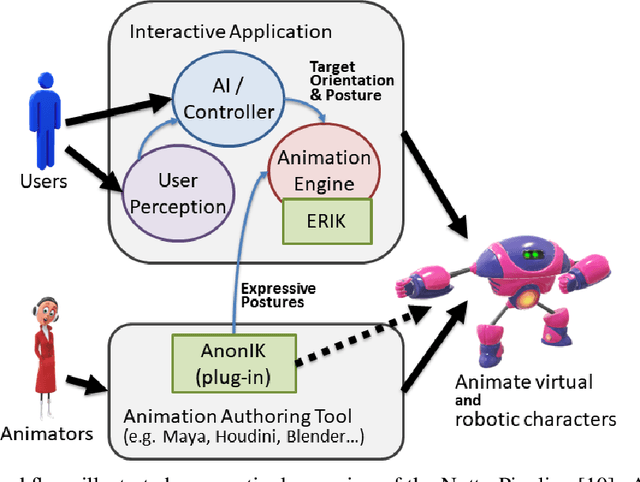

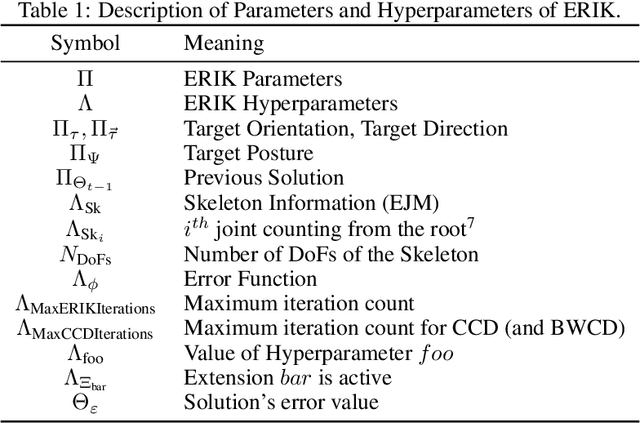

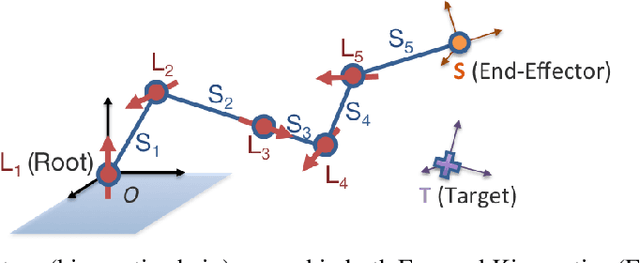

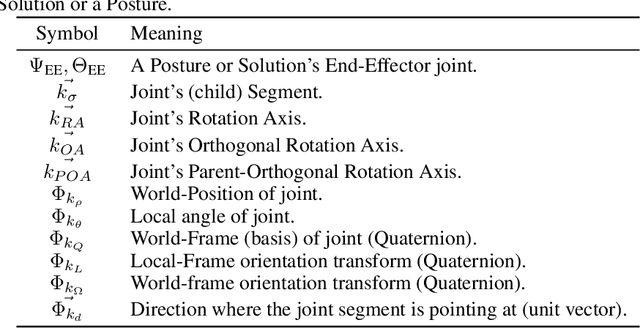

New interaction techniques call for new character animation methods, particularly in fields such as robotics and VR/AR. Interactive characters in these fields are increasingly driven by AI, which opens up the possibility of non-linear, open-ended narratives that may even include interaction with the real, physical world. This paper presents ERIK, an expressive inverse kinematics technique aimed at such applications. Our technique allows an arbitrary kinematic chain, such as an arm, snake, or robotic manipulator, to exhibit an expressive posture while aiming its end-point towards a given target orientation. The technique runs in interactive time and requires no pre-processing step, such as the training required by machine learning techniques, in order to support new embodiments or new postures. This allows it to be integrated into an artist-friendly workflow, bringing artists closer to the development of such AI-driven expressive characters by letting them use their animation tools of choice and properly pre-visualize the animation at design time, even on a real robot. The full algorithmic specification is presented so that it can be implemented and used throughout the communities of the various fields we address. We demonstrate ERIK on different virtual kinematic structures and on a low-fidelity robot crafted from wood and hobby-grade servos, to show how the technique performs even on a low-grade robot. Our evaluation measures how well the character is able to point at the target orientation while minimally disrupting its target expressive posture and respecting its mechanical rotation limits.
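To make the three evaluation criteria concrete (pointing accuracy, posture disruption, and rotation limits), here is a minimal toy sketch for a planar chain. It is not ERIK's algorithm, which the abstract does not spell out. The solver is a naive stand-in that spreads the orientation error evenly over the joints and clamps them, and every name in it (`aim_posture`, `pointing_error`, `posture_deviation`) is hypothetical.

```python
import math

def end_orientation(joint_angles):
    """End-point orientation of a planar chain: the sum of its joint angles (radians)."""
    return sum(joint_angles)

def angle_diff(a, b):
    """Signed difference a - b, wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi

def clamp_to_limits(joint_angles, limits):
    """Clamp each joint angle to its (lower, upper) mechanical limit."""
    return [min(max(q, lo), hi) for q, (lo, hi) in zip(joint_angles, limits)]

def aim_posture(posture, target_orientation, limits, max_iters=100, tol=1e-6):
    """Naive stand-in solver (an assumption, not ERIK): start from the
    expressive posture, spread the remaining orientation error evenly
    over all joints, clamp to the limits, and repeat on the residual."""
    q = clamp_to_limits(posture, limits)
    for _ in range(max_iters):
        error = angle_diff(target_orientation, end_orientation(q))
        if abs(error) < tol:
            break
        step = error / len(q)
        q = clamp_to_limits([qi + step for qi in q], limits)
    return q

def pointing_error(joint_angles, target_orientation):
    """Absolute orientation error of the end-point, in radians."""
    return abs(angle_diff(target_orientation, end_orientation(joint_angles)))

def posture_deviation(joint_angles, posture):
    """Mean absolute per-joint deviation from the expressive posture."""
    return sum(abs(q - p) for q, p in zip(joint_angles, posture)) / len(posture)

if __name__ == "__main__":
    posture = [0.4, -0.3, 0.2]       # hypothetical expressive posture
    limits = [(-0.5, 0.5)] * 3       # hypothetical servo limits
    solved = aim_posture(posture, 1.2, limits)
    print("pointing error:", pointing_error(solved, 1.2))
    print("posture deviation:", posture_deviation(solved, posture))
```

The two metrics pull against each other: the further the target orientation lies from what the expressive posture already points at, the more the posture must be disturbed, and once every joint sits at its limit the remaining pointing error cannot be reduced.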
