Tiago Dias

Evaluating LLaMA 3.2 for Software Vulnerability Detection

Mar 10, 2025

Abstract: Deep Learning (DL) has emerged as a powerful tool for vulnerability detection, often outperforming traditional solutions. However, developing effective DL models requires large amounts of real-world data, which can be difficult to obtain in sufficient quantities. To address this challenge, the DiverseVul dataset was curated as the largest dataset of vulnerable and non-vulnerable C/C++ functions extracted exclusively from real-world projects. Its goal is to provide high-quality, large-scale samples for training DL models. However, during our study, several inconsistencies were identified in the raw dataset while applying pre-processing techniques, highlighting the need for a refined version. In this work, we present a refined version of the DiverseVul dataset, which is used to fine-tune a large language model, LLaMA 3.2, for vulnerability detection. Experimental results show that the use of pre-processing techniques led to an improvement in performance, with the model achieving an F1-Score of 66%, a competitive result when compared to our baseline, which achieved a 47% F1-Score in software vulnerability detection.
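For readers less familiar with the metric reported above, the F1-Score is the harmonic mean of precision and recall. The following is a minimal sketch of how it is computed from confusion-matrix counts; the function name `f1_score` and the example counts are hypothetical and are not taken from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Compute F1 from true positives, false positives, and false negatives."""
    # Precision: fraction of functions flagged as vulnerable that really are.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: fraction of truly vulnerable functions that were flagged.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(f1_score(tp=2, fp=1, fn=1))
```

Because F1 ignores true negatives, it is often preferred over accuracy when vulnerable samples are a small minority of the dataset.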

SCoPE: Evaluating LLMs for Software Vulnerability Detection

Jul 19, 2024

Abstract: In recent years, code security has become increasingly important, especially with the rise of interconnected technologies. Detecting vulnerabilities early in the software development process has demonstrated numerous benefits. Consequently, the scientific community started using machine learning for automated detection of source code vulnerabilities. This work explores and refines the CVEFixes dataset, which is commonly used to train models for code-related tasks, specifically the C/C++ subset. To this end, the Source Code Processing Engine (SCoPE) is presented: a framework composed of strategized techniques that can be used to reduce the size of C/C++ functions and normalize them. The output generated by SCoPE was used to create a new version of CVEFixes. This refined dataset was then employed in a feature representation analysis to assess the effectiveness of the tool's code processing techniques, consisting of fine-tuning three pre-trained LLMs for software vulnerability detection. The results show that SCoPE successfully helped to identify 905 duplicates within the evaluated subset. The LLM results corroborate the literature regarding their suitability for software vulnerability detection, with the best model achieving a 53% F1-score.
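The idea of normalizing functions so that duplicates become detectable can be illustrated with a small sketch. This is not SCoPE's implementation: the helper names `normalize_function` and `find_duplicates` are hypothetical, and the normalization shown (stripping comments and collapsing whitespace with regular expressions) is only an assumed, simplified stand-in that does not handle comment markers inside string literals.

```python
import hashlib
import re


def normalize_function(source: str) -> str:
    """Strip C/C++ comments and collapse whitespace (illustrative only)."""
    # Remove /* ... */ block comments, then // line comments.
    no_block = re.sub(r"/\*.*?\*/", " ", source, flags=re.DOTALL)
    no_line = re.sub(r"//[^\n]*", " ", no_block)
    # Collapse every run of whitespace into a single space.
    return re.sub(r"\s+", " ", no_line).strip()


def find_duplicates(functions: list[str]) -> list[tuple[int, int]]:
    """Return (first_index, duplicate_index) pairs equal after normalization."""
    seen: dict[str, int] = {}
    duplicates: list[tuple[int, int]] = []
    for index, source in enumerate(functions):
        normalized = normalize_function(source)
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            duplicates.append((seen[digest], index))
        else:
            seen[digest] = index
    return duplicates


if __name__ == "__main__":
    samples = [
        "int f(int a) { return a; } // identity",
        "int  f(int a)\n{ /* same body */ return a; }",
        "int g() { return 0; }",
    ]
    print(find_duplicates(samples))
```

Hashing the normalized text keeps the comparison linear in the number of functions, at the cost of only catching duplicates that become textually identical after normalization.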

FuzzTheREST: An Intelligent Automated Black-box RESTful API Fuzzer

Jul 19, 2024

Abstract: Software's pervasive impact and increasing reliance in the era of digital transformation raise concerns about vulnerabilities, emphasizing the need for software security. Fuzzy testing is a dynamic analysis software testing technique that consists of feeding faulty input data to a System Under Test (SUT) and observing its behavior. Specifically regarding black-box RESTful API testing, recent literature has attempted to automate this technique using heuristics to perform the input search and using the HTTP response status codes for classification. However, most approaches do not keep track of code coverage, which is important to validate the solution. This work introduces a black-box RESTful API fuzzy testing tool that employs Reinforcement Learning (RL) for vulnerability detection. The fuzzer operates via the OpenAPI Specification (OAS) file and a scenarios file, which include, respectively, the information needed to communicate with the SUT and the sequences of functionalities to test. To evaluate its effectiveness, the tool was tested on the Petstore API. The tool found a total of six unique vulnerabilities and achieved 55% code coverage.
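Classifying responses by HTTP status code, as mentioned in the abstract, might look roughly like the sketch below. The function name `classify_response` and the label strings are hypothetical; the abstract does not specify the categories FuzzTheREST uses, so the mapping here only follows the standard meaning of the status code classes.

```python
def classify_response(status_code: int) -> str:
    """Map an HTTP status code to a coarse fuzzing outcome (assumed labels)."""
    if 500 <= status_code <= 599:
        # Server errors suggest the input triggered unhandled behavior.
        return "potential_fault"
    if 400 <= status_code <= 499:
        # Client errors suggest the SUT rejected the malformed input.
        return "rejected_input"
    if 200 <= status_code <= 399:
        # Success or redirection: the SUT accepted the request.
        return "accepted"
    return "unknown"


if __name__ == "__main__":
    for code in (200, 404, 500):
        print(code, classify_response(code))
```

In a black-box setting, such a label is one of the few signals available to the fuzzer, since the source code of the SUT is not inspected.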

TestLab: An Intelligent Automated Software Testing Framework

Jun 06, 2023

Abstract: Software systems have become an integral part of modern-day living. Software usage has increased significantly, leading to its growth in both size and complexity. Consequently, software development is becoming a more time-consuming process. In an attempt to accelerate the development cycle, the testing phase is often neglected, leading to the deployment of flawed systems that can have significant implications for users' daily activities. This work presents TestLab, an intelligent automated software testing framework that attempts to gather a set of testing methods and automate them using Artificial Intelligence to allow continuous testing of software systems at multiple levels from different scopes, ranging from developers to end-users. The tool consists of three modules, each serving a distinct purpose. The first two modules aim to identify vulnerabilities from different perspectives, while the third module enhances traditional automated software testing by automatically generating test cases through source code analysis.

From Data to Action: Exploring AI and IoT-driven Solutions for Smarter Cities

Jun 06, 2023

Abstract: The emergence of smart cities demands harnessing advanced technologies like the Internet of Things (IoT) and Artificial Intelligence (AI) and promises to unlock cities' potential to become more sustainable, efficient, and ultimately livable for their inhabitants. This work introduces an intelligent city management system that provides a data-driven approach to three use cases: (i) analyze traffic information to reduce the risk of traffic collisions and improve driver and pedestrian safety, (ii) identify when and where energy consumption can be reduced to improve cost savings, and (iii) detect maintenance issues like potholes in the city's roads and sidewalks, as well as the beginning of hazards like floods and fires. A case study in Aveiro City demonstrates the system's effectiveness in generating actionable insights that enhance security, energy efficiency, and sustainability, while highlighting the potential of AI and IoT-driven solutions for smart city development.

Constrained Adversarial Learning and its applicability to Automated Software Testing: a systematic review

Mar 14, 2023

Abstract: Every novel technology adds hidden vulnerabilities ready to be exploited by a growing number of cyber-attacks. Automated software testing can be a promising solution to quickly analyze thousands of lines of code by generating and slightly modifying function-specific testing data to encounter a multitude of vulnerabilities and attack vectors. This process draws similarities to the constrained adversarial examples generated by adversarial learning methods, so there could be significant benefits to the integration of these methods in automated testing tools. Therefore, this systematic review is focused on the current state-of-the-art of constrained data generation methods applied for adversarial learning and software testing, aiming to guide researchers and developers to enhance testing tools with adversarial learning methods and improve the resilience and robustness of their digital systems. The constrained data generation applications found for adversarial machine learning were systematized, and the advantages and limitations of approaches specific to software testing were thoroughly analyzed, identifying research gaps and opportunities to improve testing tools with adversarial attack methods.

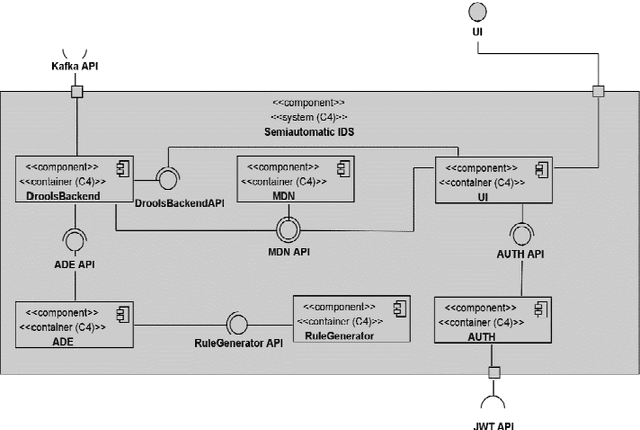

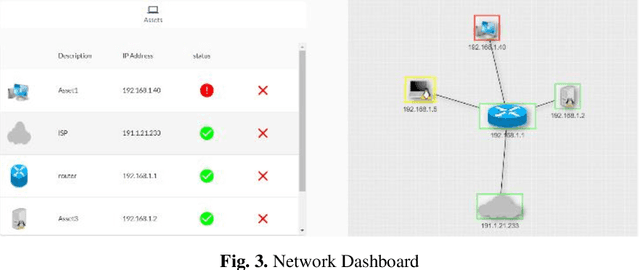

A Hybrid Approach for an Interpretable and Explainable Intrusion Detection System

Nov 19, 2021

Abstract: Cybersecurity has been a concern for quite a while now. In recent years, cyberattacks have been increasing in size and complexity, fueled by significant advances in technology. Nowadays, there is an unavoidable need to protect the systems and data that are crucial for business continuity. Hence, many intrusion detection systems have been created in an attempt to mitigate these threats and contribute to a timelier detection. This work proposes an interpretable and explainable hybrid intrusion detection system, which makes use of artificial intelligence methods to achieve better and longer-lasting security. The system combines experts' written rules and dynamic knowledge continuously generated by a decision tree algorithm as new pieces of evidence emerge from network activity.

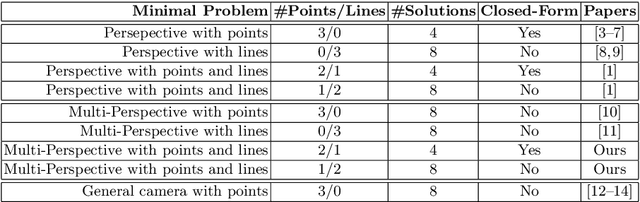

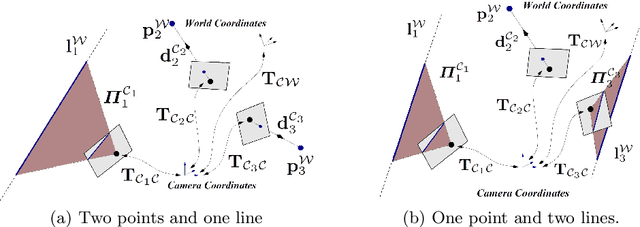

A Minimal Closed-Form Solution for Multi-Perspective Pose Estimation using Points and Lines

Jul 26, 2018

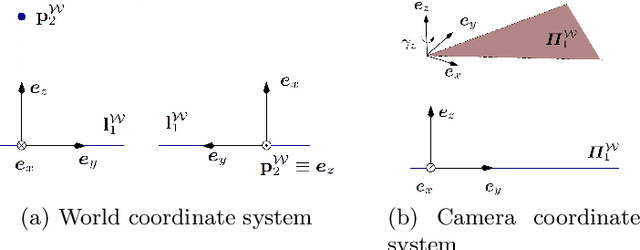

Abstract: We propose a minimal solution for pose estimation using both points and lines for a multi-perspective camera. In this paper, we treat the multi-perspective camera as a collection of rigidly attached perspective cameras. These types of imaging devices are useful for several computer vision applications that require a large coverage, such as surveillance, self-driving cars, and motion-capture studios. While prior methods have considered the cases using solely points or lines, the hybrid case involving both points and lines has not been solved for multi-perspective cameras. We present the solutions for two cases. In the first case, we are given 2D to 3D correspondences for two points and one line. In the latter case, we are given 2D to 3D correspondences for one point and two lines. We show that the solution for the case of two points and one line can be formulated as a fourth-degree equation. This is interesting because we can get a closed-form solution and thereby achieve high computational efficiency. The latter case, involving two lines and one point, can be mapped to an eighth-degree equation. We show simulations and real experiments to demonstrate the advantages and benefits over existing methods.

* 22 pages, 6 figures

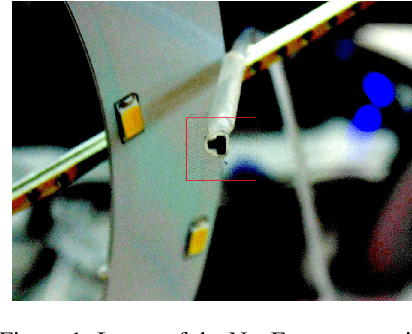

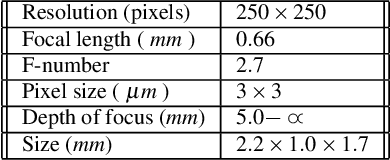

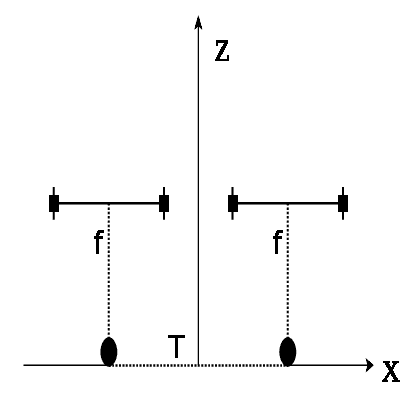

3D Reconstruction with Low Resolution, Small Baseline and High Radial Distortion Stereo Images

Sep 19, 2017

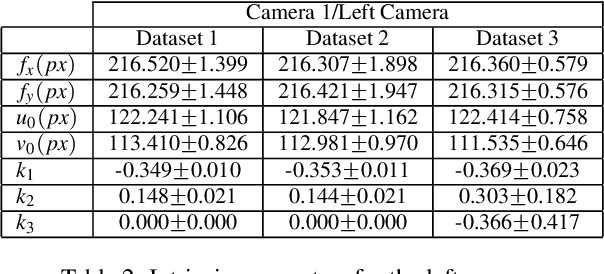

Abstract: In this paper, we analyze and compare approaches for 3D reconstruction from low-resolution (250x250), high radial distortion stereo images, which are acquired with a small baseline (approximately 1 mm). These images are acquired with the NanEye Stereo system manufactured by CMOSIS/AWAIBA. These stereo cameras also have small apertures, which means that high levels of illumination are required. The goal was to develop an approach yielding accurate reconstructions with a low computational cost, i.e., avoiding non-linear numerical optimization algorithms. In particular, we focused on the analysis and comparison of radial distortion models. To perform the analysis and comparison, we defined a baseline method based on available software and methods, such as the Bouguet toolbox [2] or the Computer Vision Toolbox from Matlab. The approaches tested were based on the use of the polynomial model of radial distortion and on the application of the division model. The issue of the center of distortion was also addressed within the framework of the application of the division model. We concluded that the division model with a single radial distortion parameter has limitations.
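As a point of reference, the single-parameter division model mentioned above is commonly written as p_u = c + (p_d - c) / (1 + λ r²), where p_d is the distorted point, c is the center of distortion, and r is the distance from p_d to c. The sketch below applies that standard formula; the function name `undistort_division`, the choice of coordinate units, and the parameter values are assumptions for illustration and do not come from the paper.

```python
def undistort_division(
    point: tuple[float, float],
    center: tuple[float, float],
    lam: float,
) -> tuple[float, float]:
    """Undistort one point with the single-parameter division model."""
    # Offset of the distorted point from the assumed center of distortion.
    dx = point[0] - center[0]
    dy = point[1] - center[1]
    # Squared radial distance of the distorted point.
    r2 = dx * dx + dy * dy
    # Division model: scale the offset by 1 / (1 + lam * r^2).
    scale = 1.0 / (1.0 + lam * r2)
    return (center[0] + dx * scale, center[1] + dy * scale)


if __name__ == "__main__":
    # Hypothetical values: the center is placed mid-image for a 250x250 sensor.
    print(undistort_division((200.0, 60.0), (125.0, 125.0), -1e-6))
```

The model needs no iterative optimization to undistort a point, which fits the stated goal of keeping the computational cost low; with `lam = 0` the point is returned unchanged.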
