Thippa Reddy Gadekallu

Framework, Standards, Applications and Best practices of Responsible AI : A Comprehensive Survey

Apr 18, 2025

Abstract: Responsible Artificial Intelligence (RAI) is the combination of ethics associated with the use of artificial intelligence, aligned with common and standard frameworks. This survey paper extensively discusses the global and national standards, applications of RAI, current technology and ongoing projects using RAI, and possible challenges in designing and implementing RAI in AI-based industries and projects. Currently, ethical standards and the implementation of RAI are decoupled, which leaves each industry to follow its own standards for using AI ethically. Many global firms and government organizations are taking the necessary initiatives to design a common and standard framework. Social pressure and unethical uses of AI are driving the design of RAI rather than its implementation.

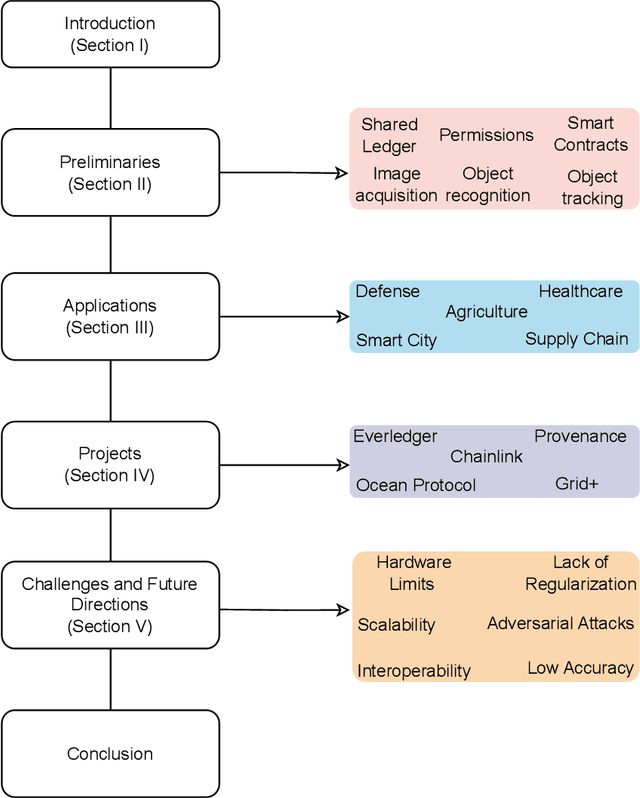

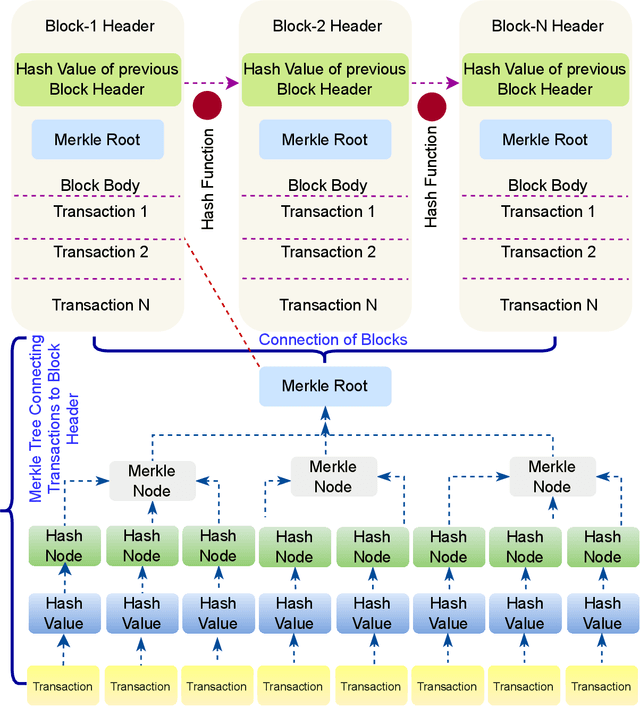

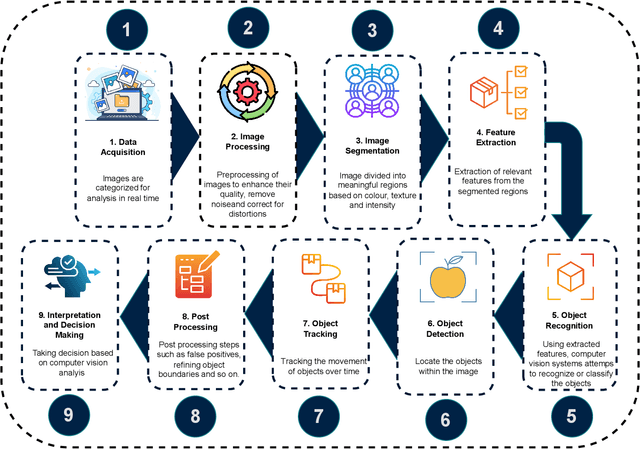

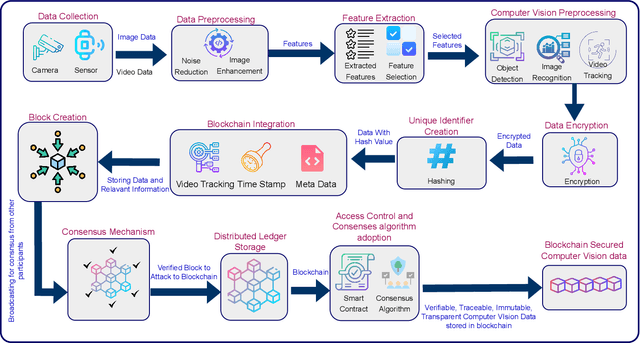

A Comprehensive Analysis of Blockchain Applications for Securing Computer Vision Systems

Jul 13, 2023

Abstract: Blockchain (BC) and Computer Vision (CV) are two emerging fields with the potential to transform various sectors. BC can offer decentralized and secure data storage, while CV allows machines to learn and understand visual data. The integration of the two technologies holds massive promise for developing innovative applications that can provide solutions to challenges in various sectors such as supply chain management, healthcare, smart cities, and defense. This review provides a comprehensive analysis of the integration of BC and CV by examining their combination and potential applications. It also provides a detailed analysis of the fundamental concepts of both technologies, highlighting their strengths and limitations. This paper also explores current research efforts that make use of the benefits offered by this combination. These efforts include using BC as an added layer of security in CV systems, ensuring data integrity, and enabling decentralized image and video analytics. The challenges and open issues associated with this integration are also identified, and potential future directions are proposed.

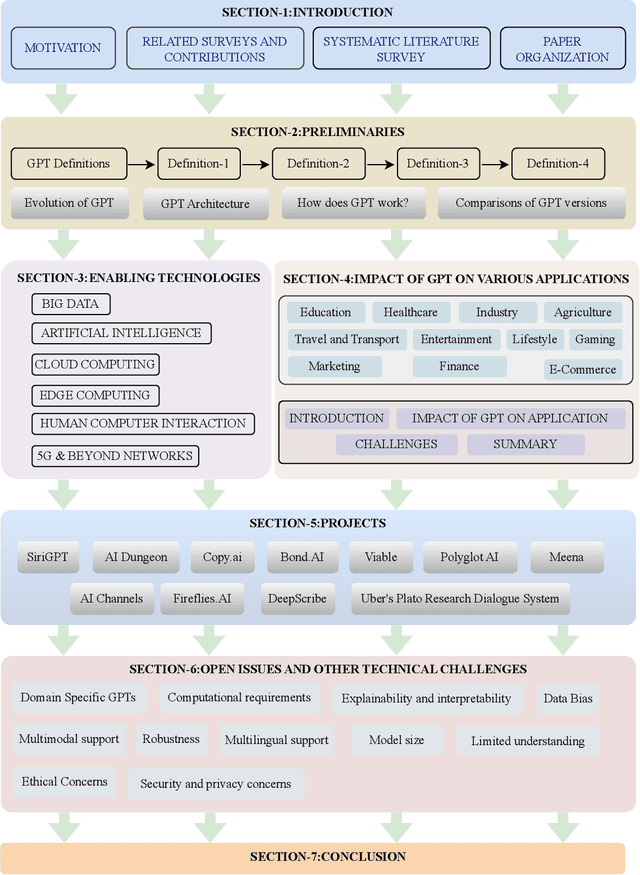

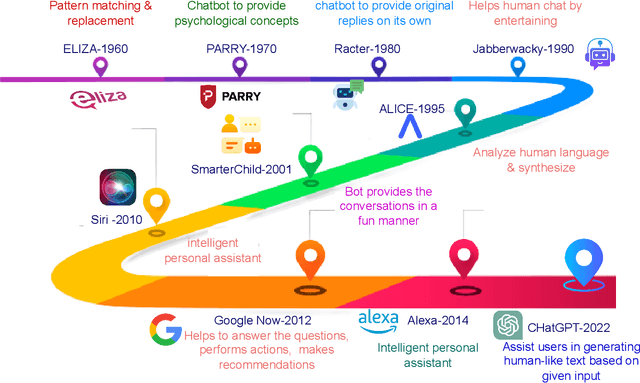

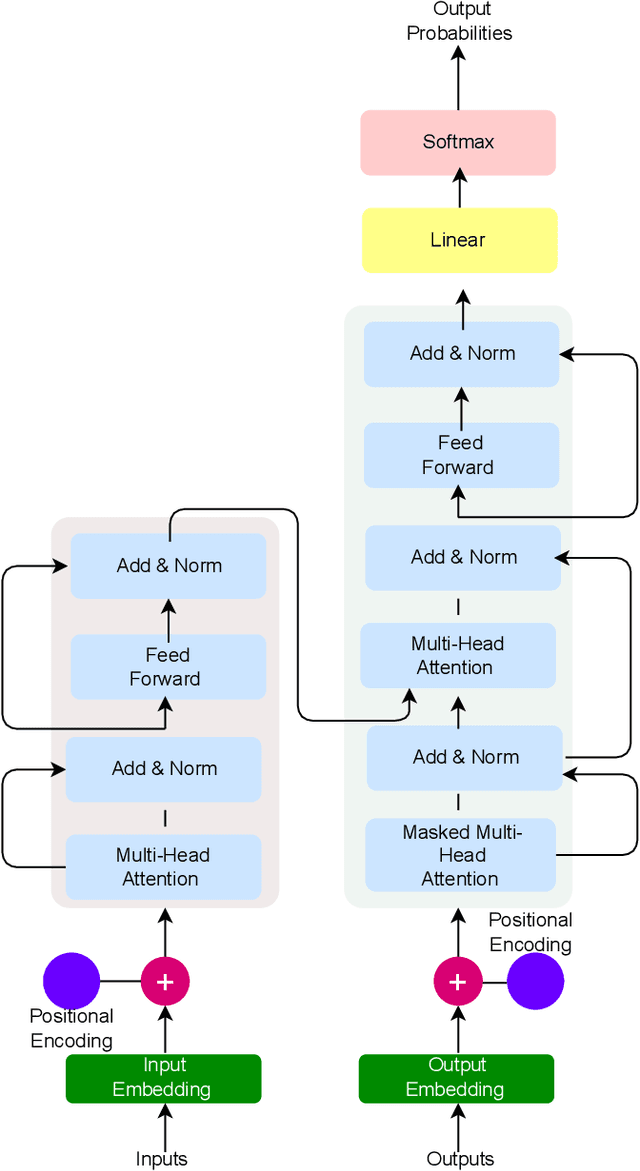

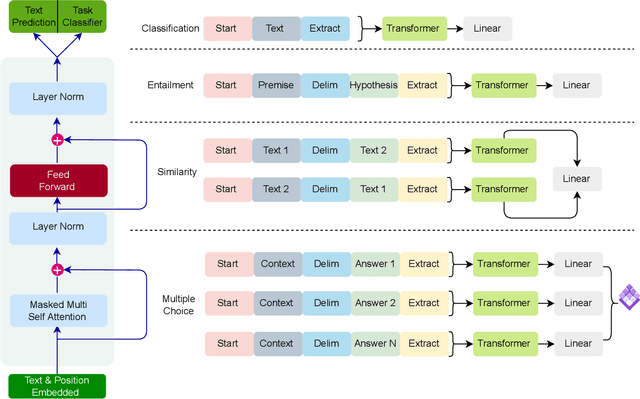

Generative Pre-trained Transformer: A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions

May 21, 2023

Abstract: The Generative Pre-trained Transformer (GPT) represents a notable breakthrough in the domain of natural language processing, which is propelling us toward the development of machines that can understand and communicate using language in a manner that closely resembles that of humans. GPT is based on the transformer architecture, a deep neural network designed for natural language processing tasks. Due to their impressive performance on natural language processing tasks and their ability to converse effectively, GPT models have gained significant popularity among researchers and industrial communities, making them one of the most widely used and effective models in natural language processing and related fields, which motivated us to conduct this review. This review provides a detailed overview of GPT, including its architecture, working process, training procedures, enabling technologies, and its impact on various applications. In this review, we also explore the potential challenges and limitations of GPT. Furthermore, we discuss potential solutions and future directions. Overall, this paper aims to provide a comprehensive understanding of GPT, its enabling technologies, their impact on various applications, emerging challenges, and potential solutions.

A Survey on Federated Learning for the Healthcare Metaverse: Concepts, Applications, Challenges, and Future Directions

Apr 05, 2023

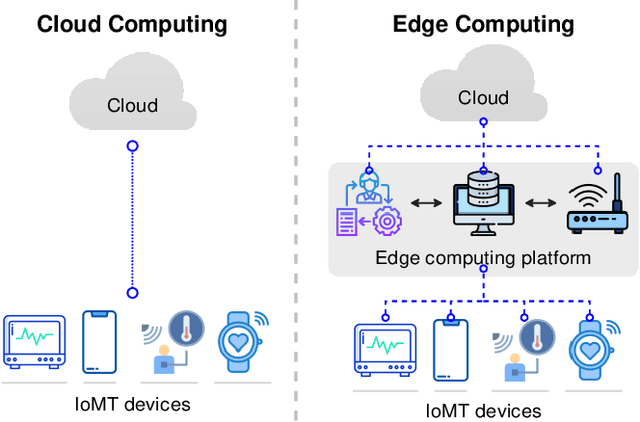

Abstract: Recent technological advancements have considerably improved healthcare systems to provide various intelligent healthcare services and improve the quality of life. Federated learning (FL), a new branch of artificial intelligence (AI), opens opportunities to deal with privacy issues in healthcare systems and to exploit the data and computing resources available at distributed devices. Additionally, the Metaverse, through integrating emerging technologies such as AI, cloud edge computing, Internet of Things (IoT), blockchain, and semantic communications, has transformed many vertical domains in general and the healthcare sector in particular. FL shows many benefits and provides new opportunities for conventional and Metaverse healthcare, motivating us to provide a survey on the usage of FL for Metaverse healthcare systems. First, we present preliminaries to IoT-based healthcare systems, FL in conventional healthcare, and Metaverse healthcare. The benefits of FL in Metaverse healthcare are then discussed, from improved privacy and scalability, better interoperability, better data management, and extra security to automation and low-latency healthcare services. Subsequently, we discuss several applications pertaining to FL-enabled Metaverse healthcare, including medical diagnosis, patient monitoring, medical education, infectious disease, and drug discovery. Finally, we highlight significant challenges and potential solutions toward the realization of FL in Metaverse healthcare.

Need of 6G for the Metaverse Realization

Dec 28, 2022

Abstract: The concept of the Metaverse aims to bring a fully-fledged extended reality environment to provide next generation applications and services. Development of the Metaverse is backed by many technologies, including 5G, artificial intelligence, edge computing and extended reality. The advent of 6G is envisaged to mark a significant milestone in the development of the Metaverse, facilitating near-zero latency, a plethora of new services and upgraded real-world infrastructure. This paper establishes the advantages of providing the Metaverse services over 6G along with an overview of the demanded technical requirements. The paper provides an insight into the concepts of the Metaverse and the envisaged technical capabilities of 6G mobile networks. Then, the technical aspects covering 6G for the development of the Metaverse, ranging from validating digital assets, interoperability, and efficient user interaction in the Metaverse to related security and privacy aspects, are elaborated. Subsequently, the role of 6G technologies in enabling the Metaverse, including artificial intelligence, blockchain, open radio access networks, edge computing, cloudification and internet of everything, is discussed. The paper also presents 6G integration challenges and outlines ongoing projects towards developing the Metaverse technologies to facilitate the Metaverse applications and services.

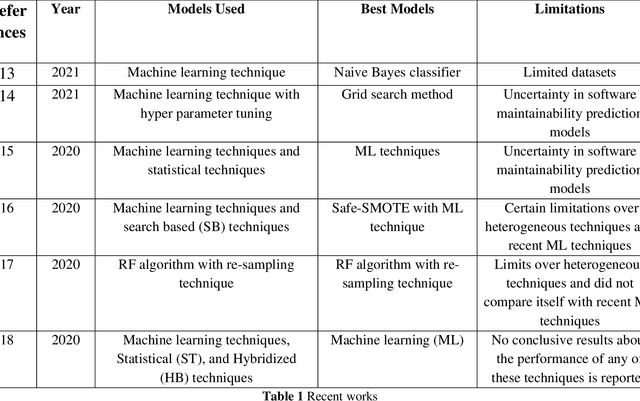

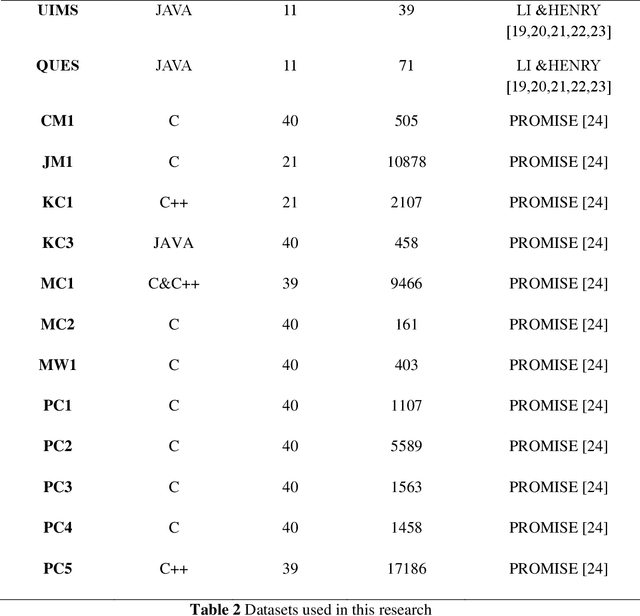

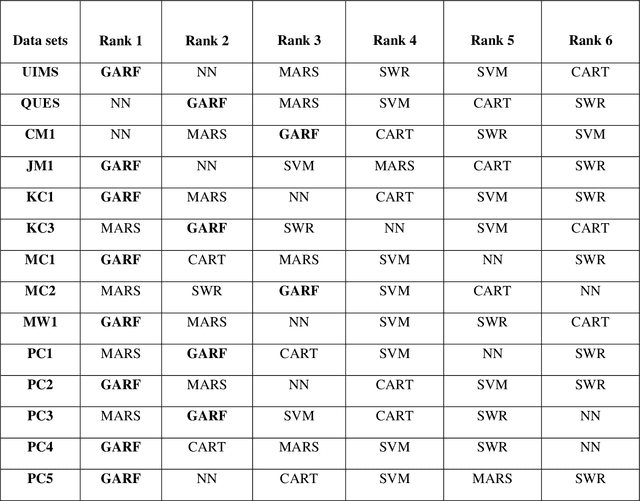

A Multiple Criteria Decision Analysis based Approach to Remove Uncertainty in SMP Models

Sep 30, 2022

Abstract: Advanced AI technologies are serving humankind in a number of ways, from healthcare to manufacturing. Advanced automated machines are quite expensive, but the end output is supposed to be of the highest possible quality. Depending on the agility of requirements, these automation technologies can change dramatically. The likelihood of making changes to automation software is extremely high, so it must be updated regularly. If maintainability is not taken into account, it will have an impact on the entire system and increase maintenance costs. Many companies use different programming paradigms in developing advanced automated machines based on client requirements. Therefore, it is essential to estimate the maintainability of heterogeneous software. As a result of the lack of widespread consensus on software maintainability prediction (SMP) methodologies, individuals and businesses are left perplexed when it comes to determining the appropriate model for estimating the maintainability of software, which serves as the inspiration for this research. A structured methodology was designed, the datasets were preprocessed, and the maintainability index (MI) range was found for all the datasets except UIMS and QUES, for which the metric CHANGE is used. To remove the uncertainty among the aforementioned techniques, a popular multiple criteria decision-making model, namely the technique for order preference by similarity to ideal solution (TOPSIS), is used in this work. TOPSIS revealed that GARF outperforms the other considered techniques in predicting the maintainability of heterogeneous automated software.
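For readers unfamiliar with TOPSIS, the following is a minimal sketch of the standard textbook procedure, not the paper's own implementation. The function name `topsis`, the criteria, the weights, and the example numbers are all hypothetical and chosen only for illustration; the abstract does not state which criteria or weights the authors used.

```python
import math


def topsis(matrix, weights, benefit):
    """Score alternatives (rows) over criteria (columns) with textbook TOPSIS.

    matrix  -- list of rows, one row of criterion values per alternative
    weights -- one weight per criterion (rescaled here to sum to 1)
    benefit -- one bool per criterion: True if larger is better, False if smaller is better
    Returns one closeness score in [0, 1] per alternative (higher is better).
    """
    n_crit = len(weights)
    total_w = sum(weights)
    w = [x / total_w for x in weights]

    # Step 1: vector-normalize each column, then apply the criterion weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    v = [
        [w[j] * row[j] / norms[j] if norms[j] else 0.0 for j in range(n_crit)]
        for row in matrix
    ]

    # Step 2: ideal and anti-ideal points, criterion by criterion.
    cols = list(zip(*v))
    ideal = [max(c) if b else min(c) for c, b in zip(cols, benefit)]
    anti = [min(c) if b else max(c) for c, b in zip(cols, benefit)]

    # Step 3: relative closeness to the ideal point.
    scores = []
    for row in v:
        d_pos = math.dist(row, ideal)  # distance to the ideal solution
        d_neg = math.dist(row, anti)   # distance to the anti-ideal solution
        denom = d_pos + d_neg
        scores.append(d_neg / denom if denom else 0.0)
    return scores


# Hypothetical example: three prediction techniques judged on
# accuracy (benefit criterion) and error (cost criterion). Made-up numbers.
example = [[0.9, 0.1], [0.5, 0.5], [0.7, 0.3]]
example_scores = topsis(example, weights=[0.5, 0.5], benefit=[True, False])
# Indices of the alternatives, best first.
ranking = sorted(range(len(example)), key=lambda i: example_scores[i], reverse=True)
```

An alternative that is best on every criterion coincides with the ideal point and scores 1, while one that is worst on every criterion scores 0; everything else falls in between, which is what makes the score usable as a ranking.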

A Systematic Literature Review of Soft Computing Techniques for Software Maintainability Prediction: State-of-the-Art, Challenges and Future Directions

Sep 21, 2022

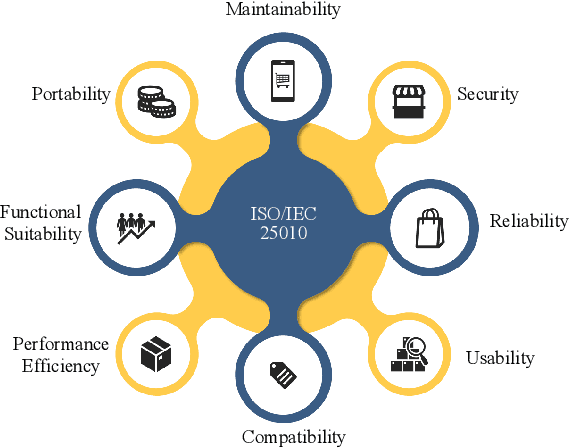

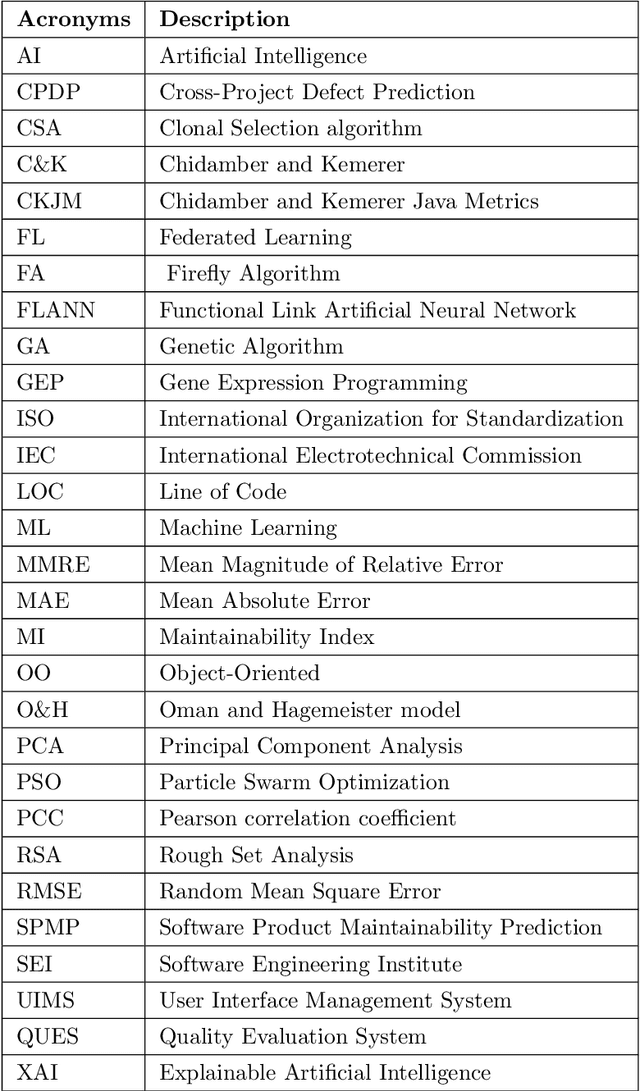

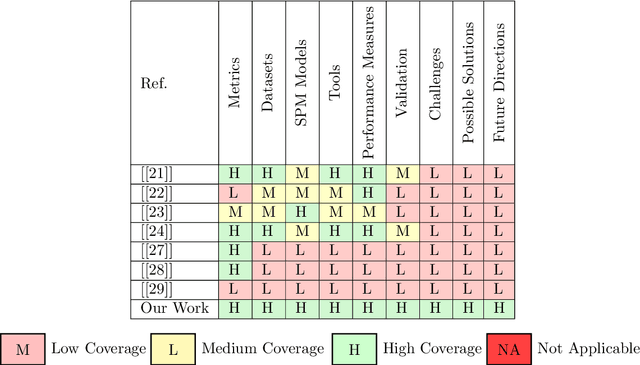

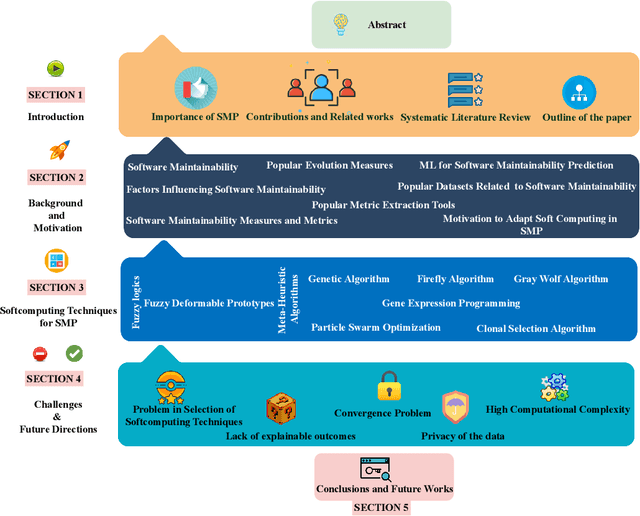

Abstract: Software is changing rapidly with the invention of advanced technologies and methodologies. The ability to rapidly and successfully upgrade software in response to changing business requirements is more vital than ever. For the long-term management of software products, measuring software maintainability is crucial. The use of soft computing techniques for software maintainability prediction has shown immense promise in the software maintenance process by providing accurate prediction of software maintainability. To better understand the role of soft computing techniques for software maintainability prediction, we aim to provide a systematic literature review of soft computing techniques for software maintainability prediction. Firstly, we provide a detailed overview of software maintainability. Following this, we explore the fundamentals of software maintainability and the reasons for adopting soft computing methodologies for predicting software maintainability. Later, we examine the soft computing approaches employed in the process of software maintainability prediction. Furthermore, we discuss the difficulties and potential solutions associated with the use of soft computing techniques to predict software maintainability. Finally, we conclude the review with some promising future directions to drive further research innovations and developments in this area.

Metaverse for Healthcare: A Survey on Potential Applications, Challenges and Future Directions

Sep 09, 2022

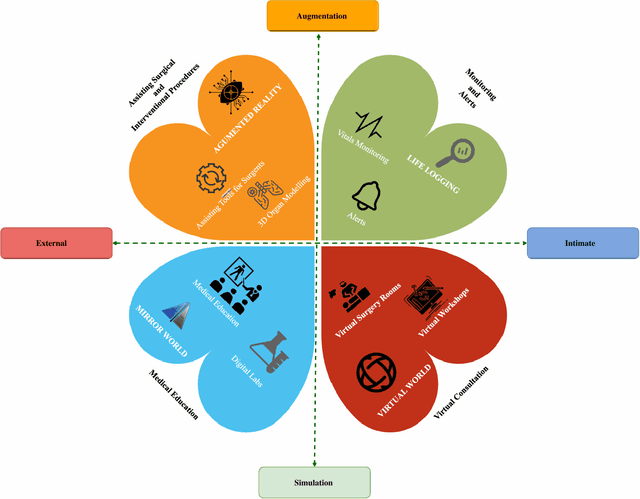

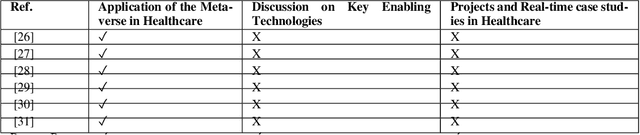

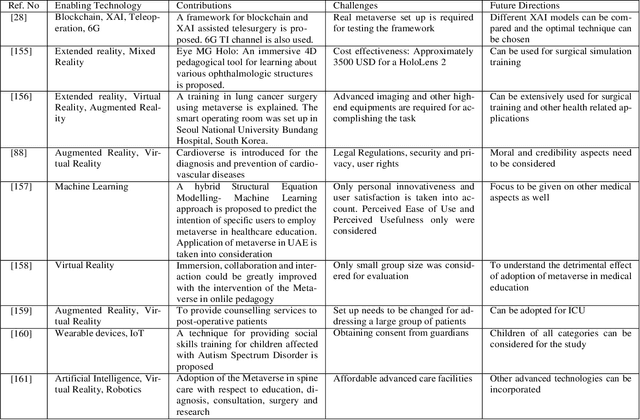

Abstract: The rapid progress in digitalization and automation has led to an accelerated growth in healthcare, generating novel models that are creating new channels for rendering treatment at reduced cost. The Metaverse is an emerging technology in the digital space which has huge potential in healthcare, enabling realistic experiences for patients as well as medical practitioners. The Metaverse is a confluence of multiple enabling technologies such as artificial intelligence, virtual reality, augmented reality, internet of medical devices, robotics, quantum computing, etc., through which new directions for providing quality healthcare treatment and services can be explored. The amalgamation of these technologies ensures immersive, intimate and personalized patient care. It also provides adaptive intelligent solutions that eliminate the barriers between healthcare providers and receivers. This article provides a comprehensive review of the Metaverse for healthcare, emphasizing the state of the art, the enabling technologies for adopting the Metaverse for healthcare, the potential applications and the related projects. The issues in the adaptation of the Metaverse for healthcare applications are also identified and plausible solutions are highlighted as part of future research directions.

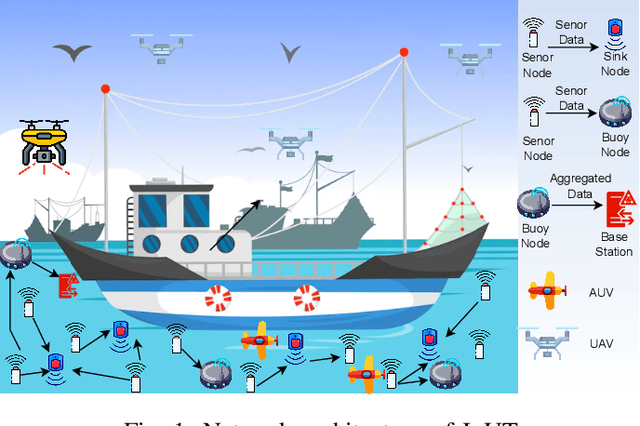

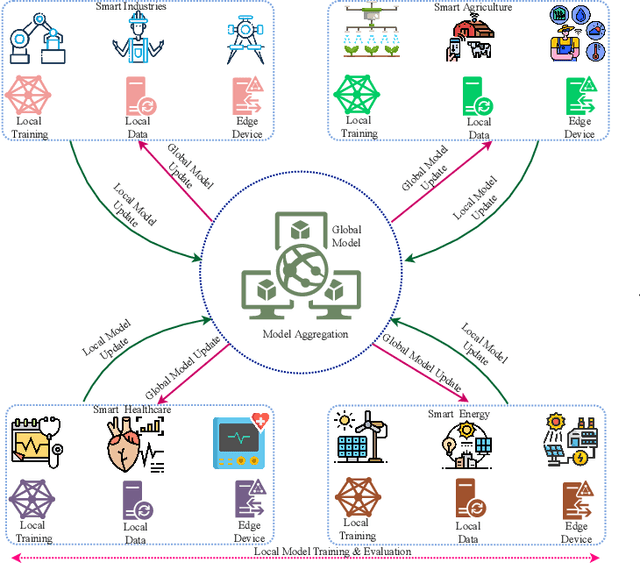

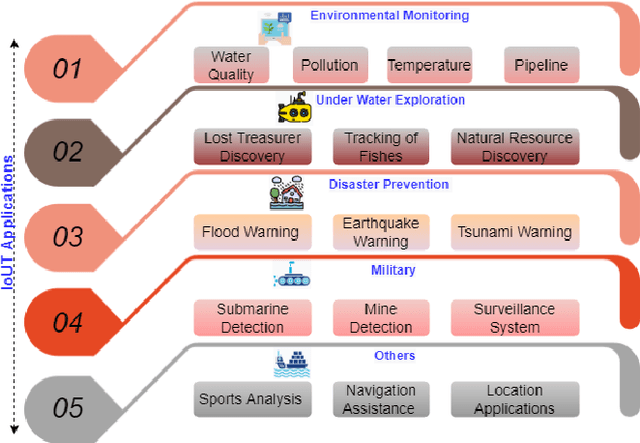

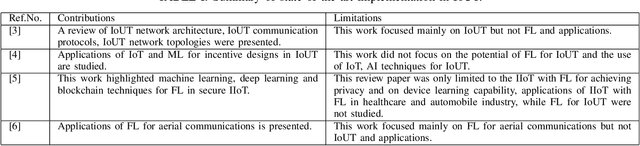

Federated Learning for IoUT: Concepts, Applications, Challenges and Opportunities

Jul 28, 2022

Abstract: The Internet of Underwater Things (IoUT) has gained rapid momentum over the past decade, with applications spanning environmental monitoring, exploration, defence, etc. Traditional IoUT systems use machine learning (ML) approaches which cater to the needs of reliability, efficiency and timeliness. However, an extensive review of the various studies conducted highlights the significance of data privacy and security in IoUT frameworks as a predominant factor in achieving desired outcomes in mission-critical applications. Federated learning (FL) is a secure, decentralized framework and a recent development in machine learning that will help in addressing the challenges faced by conventional ML approaches in IoUT. This paper presents an overview of the various applications of FL in IoUT, its challenges and open issues, and indicates directions for future research.
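To make the decentralized idea concrete, here is a minimal sketch of the aggregation step of federated averaging (FedAvg), a commonly used FL algorithm. The abstract does not name a specific algorithm, so FedAvg is an assumption here, and the function name `federated_average` and the example values are hypothetical. Each device trains locally and shares only model parameters; the server combines them weighted by local dataset size, so raw data never leaves the device.

```python
def federated_average(client_params, client_sizes):
    """Combine client parameter vectors into one global vector (FedAvg-style).

    client_params -- list of parameter vectors, one per client, all the same length
    client_sizes  -- number of local training samples held by each client
    Returns the sample-weighted average of the client parameters.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical example: two devices with two model parameters each.
# The second device holds three times as much data, so it counts three times as much.
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In a full training loop this aggregation would run once per communication round, with the resulting global parameters sent back to the devices for the next round of local training.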

XAI for Cybersecurity: State of the Art, Challenges, Open Issues and Future Directions

Jun 03, 2022

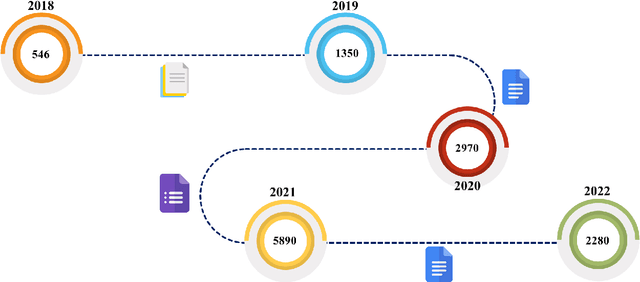

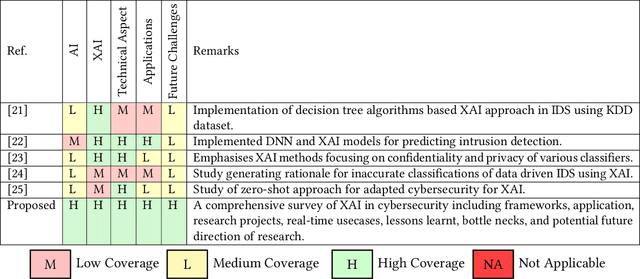

Abstract: In the past few years, artificial intelligence (AI) techniques have been implemented in almost all verticals of human life. However, the results generated by AI models often lack explainability. AI models often appear as a black box wherein developers are unable to explain or trace back the reasoning behind a specific decision. Explainable AI (XAI) is a rapidly growing field of research which helps to extract information and also visualize the generated results with optimum transparency. The present study provides an extensive review of the use of XAI in cybersecurity. Cybersecurity enables protection of systems, networks and programs from different types of attacks. The use of XAI has immense potential in predicting such attacks. The paper provides a brief overview of cybersecurity and the various forms of attack. Then the use of traditional AI techniques and their associated challenges are discussed, which opens the door to the use of XAI in various applications. The XAI implementations of various research projects and industry are also presented. Finally, the lessons learnt from these applications are highlighted, which act as a guide for the future scope of research.
