Thierry Mora

MINIMALIST: Mutual INformatIon Maximization for Amortized Likelihood Inference from Sampled Trajectories

Jun 03, 2021

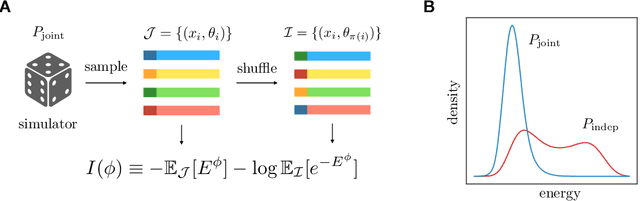

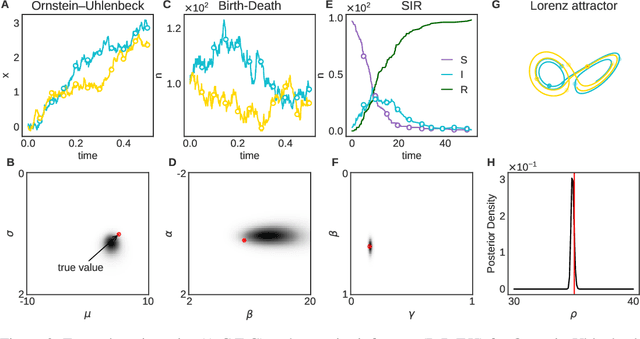

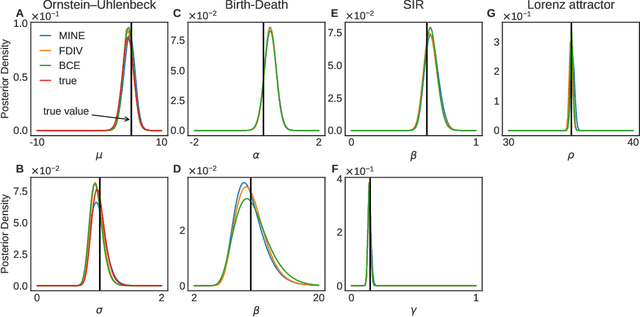

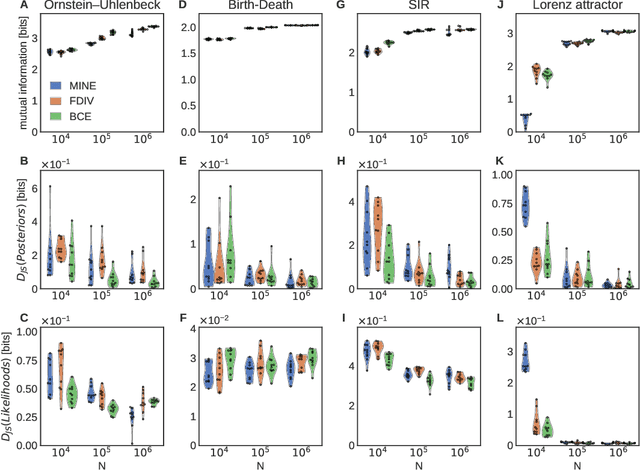

Abstract: Simulation-based inference enables learning the parameters of a model even when its likelihood cannot be computed in practice. One class of methods uses data simulated with different parameters to infer an amortized estimator for the likelihood-to-evidence ratio, or equivalently the posterior function. We show that this approach can be formulated in terms of mutual information maximization between model parameters and simulated data. We use this equivalence to reinterpret existing approaches for amortized inference, and propose two new methods that rely on lower bounds of the mutual information. We apply our framework to the inference of parameters of stochastic processes and chaotic dynamical systems from sampled trajectories, using artificial neural networks for posterior prediction. Our approach provides a unified framework that leverages the power of mutual information estimators for inference.
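
To make the link between amortized inference and mutual information lower bounds concrete, here is a minimal sketch of one generic bound, InfoNCE. It is not necessarily either of the two new estimators the abstract mentions. The toy Gaussian model, the batch size `K`, and the helper names `infonce_bound` and `log_likelihood` are assumptions for illustration. Instead of a neural network critic, the sketch plugs in the analytic log-likelihood, so the estimate can be checked against the exact mutual information.

```python
import numpy as np

def infonce_bound(critic, theta, x):
    """InfoNCE lower bound on I(theta; x) from K paired samples (theta_i, x_i).

    critic(x, theta) must broadcast: with x of shape (K, 1) and theta of
    shape (1, K) it returns the (K, K) score matrix f(x_i, theta_j).
    """
    scores = critic(x[:, None], theta[None, :])
    # Numerically stable log-mean-exp over the contrastive parameters theta_j
    m = scores.max(axis=1, keepdims=True)
    log_mean_exp = m[:, 0] + np.log(np.exp(scores - m).mean(axis=1))
    # Paired (diagonal) scores minus the contrastive normalizer, averaged over i
    return np.mean(np.diag(scores) - log_mean_exp)

# Toy model (assumption, for illustration only): theta ~ N(0, 1), x | theta ~ N(theta, sigma^2)
sigma = 1.0

def log_likelihood(x, theta):
    # log p(x | theta) without its additive constant, which cancels in the bound
    return -0.5 * ((x - theta) / sigma) ** 2

rng = np.random.default_rng(0)
K, n_batches = 256, 20
estimates = []
for _ in range(n_batches):
    theta = rng.standard_normal(K)
    x = theta + sigma * rng.standard_normal(K)
    estimates.append(infonce_bound(log_likelihood, theta, x))

true_mi = 0.5 * np.log(1 + 1 / sigma**2)  # exact I(theta; x) for this Gaussian model
print(f"InfoNCE: {np.mean(estimates):.3f}  exact MI: {true_mi:.3f}  log K: {np.log(K):.3f}")
```

To get an amortized estimator, one would replace `log_likelihood` with a trainable network and maximize the same bound over its weights. In the large-batch limit, the optimal critic is the log likelihood-to-evidence ratio, up to a term that depends only on x. The bound can never exceed log K, so large batches are needed when the mutual information is high.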

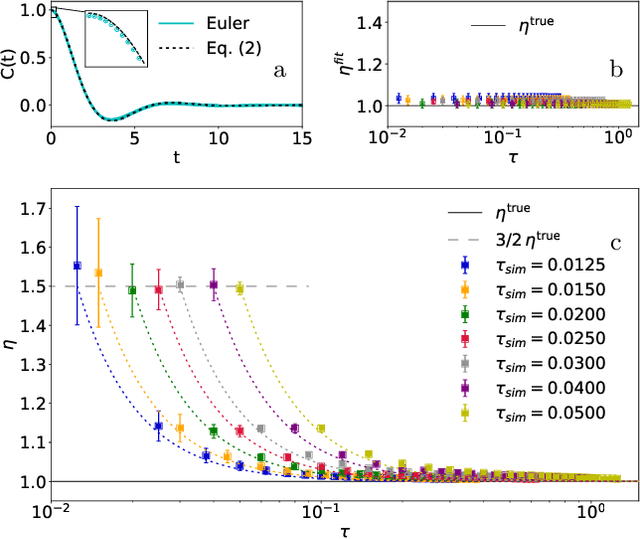

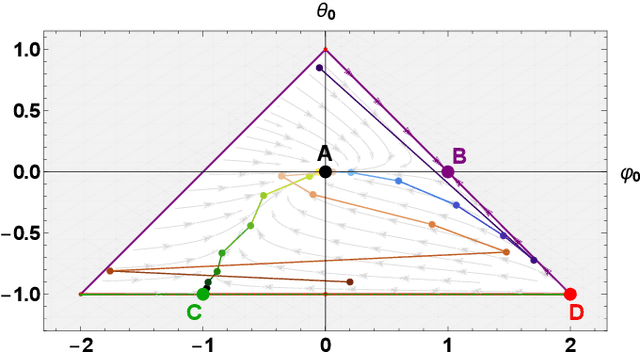

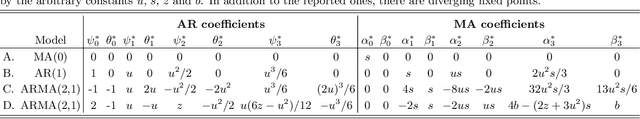

The Connection between Discrete- and Continuous-Time Descriptions of Gaussian Continuous Processes

Jan 20, 2021

Abstract: Learning the continuous equations of motion from discrete observations is a common task in all areas of physics. However, not every discretization of a Gaussian continuous-time stochastic process can be adopted in parametric inference. We show that discretizations yielding consistent estimators have the property of 'invariance under coarse-graining', and correspond to fixed points of a renormalization group map on the space of autoregressive moving average (ARMA) models (for linear processes). This result explains why combining differencing schemes for derivative reconstruction with local-in-time inference approaches does not work for time series analysis of second- or higher-order stochastic differential equations, even if the corresponding integration schemes may be acceptably good for numerical simulations.
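
The notion of invariance under coarse-graining can be checked numerically in the simplest case: a first-order linear process, the Ornstein-Uhlenbeck equation dx = -gamma x dt + sqrt(2D) dW. Its exact discretization is an AR(1) model. Keeping every other sample of an AR(1) series gives another AR(1) series with a squared coefficient. The sketch below assumes coarse-graining by a factor of 2, and the helper names are hypothetical. It is only an illustration of the fixed-point property, not the paper's ARMA renormalization map, which targets second- and higher-order processes. In this example the exact scheme maps onto itself at time step 2*dt, while the Euler-Maruyama scheme does not.

```python
import numpy as np

def exact_ar1(gamma, D, dt):
    """Exact AR(1) discretization of dx = -gamma*x dt + sqrt(2D) dW.

    Returns (a, q): x_{n+1} = a*x_n + eps_n with Var(eps_n) = q.
    """
    a = np.exp(-gamma * dt)
    q = (D / gamma) * (1 - a**2)
    return a, q

def euler_ar1(gamma, D, dt):
    """Euler-Maruyama discretization of the same SDE, as AR(1) parameters (a, q)."""
    return 1 - gamma * dt, 2 * D * dt

def coarse_grain(a, q):
    """AR(1) parameters seen when keeping every other sample:
    x_{n+2} = a^2 x_n + (a*eps_n + eps_{n+1}), whose noise variance is q*(1 + a^2)."""
    return a**2, q * (1 + a**2)

gamma, D, dt = 1.0, 0.5, 0.1
# Exact scheme: coarse-graining at dt reproduces the exact scheme at 2*dt (a fixed point)
print(np.allclose(coarse_grain(*exact_ar1(gamma, D, dt)), exact_ar1(gamma, D, 2 * dt)))  # True
# Euler scheme: coarse-graining at dt does not reproduce the Euler scheme at 2*dt
print(np.allclose(coarse_grain(*euler_ar1(gamma, D, dt)), euler_ar1(gamma, D, 2 * dt)))  # False
```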

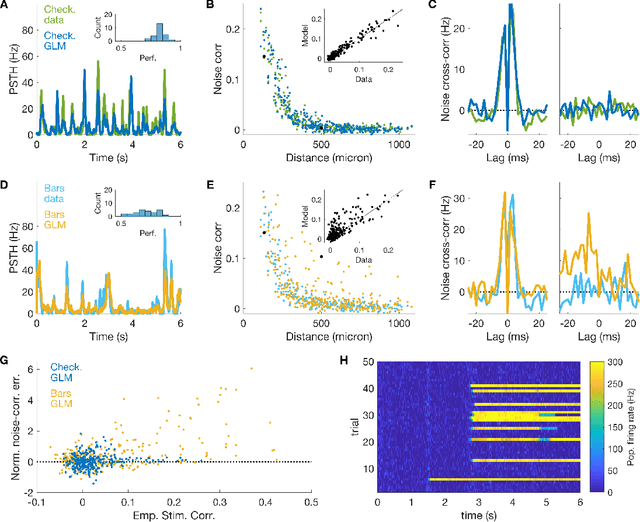

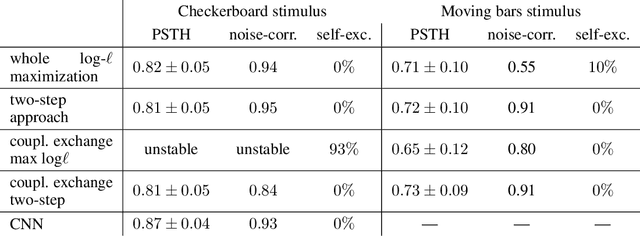

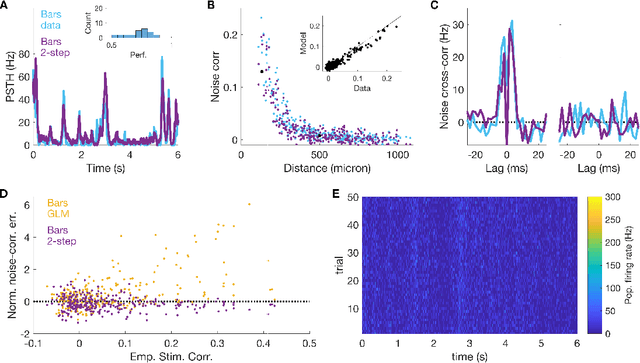

A new inference approach for training shallow and deep generalized linear models of noisy interacting neurons

Jun 16, 2020

Abstract: Generalized linear models are one of the most efficient paradigms for predicting the correlated stochastic activity of neuronal networks in response to external stimuli, with applications in many brain areas. However, when dealing with complex stimuli, their parameters often do not generalize across different stimulus statistics, leading to degraded performance and blowup instabilities. Here, we develop a two-step inference strategy that allows us to train robust generalized linear models of interacting neurons, by explicitly separating the effects of stimulus correlations and noise correlations in each training step. Applying this approach to the responses of retinal ganglion cells to complex visual stimuli, we show that, compared to classical methods, the models trained in this way exhibit improved performance, are more stable, yield robust interaction networks, and generalize well across complex visual statistics. The method can be extended to deep convolutional neural networks, leading to models with high predictive accuracy for both the neuron firing rates and their correlations.
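
For readers unfamiliar with this model class, the following is a minimal sketch of a single-neuron Poisson GLM with one coupling term. It uses an exponential nonlinearity and is fitted jointly by maximum likelihood with Newton's method (iteratively reweighted least squares). This is a conventional one-step fit, not the two-step strategy described above. The stimulus, the coupled neuron's spike train, the weights, and the helper `fit_poisson_glm` are all hypothetical.

```python
import numpy as np

def fit_poisson_glm(X, y, n_iter=25):
    """Maximum-likelihood fit of y_t ~ Poisson(exp(X_t @ w)) by Newton's method.

    Assumes the first column of X is all ones (intercept).
    """
    w = np.zeros(X.shape[1])
    w[0] = np.log(y.mean())                  # start from the constant-rate model
    for _ in range(n_iter):
        mu = np.exp(X @ w)                   # predicted mean spike count per bin
        grad = X.T @ (y - mu)                # gradient of the Poisson log-likelihood
        hess = X.T @ (X * mu[:, None])       # negative Hessian (positive definite)
        w = w + np.linalg.solve(hess, grad)  # Newton step
    return w

rng = np.random.default_rng(1)
T = 20000
stim = rng.standard_normal((T, 2))                   # hypothetical 2-feature stimulus
other = rng.poisson(0.3, size=T)                     # hypothetical coupled neuron's spike counts
coupling_input = np.concatenate([[0], other[:-1]])   # its activity in the previous time bin
X = np.column_stack([np.ones(T), stim, coupling_input])
w_true = np.array([-1.0, 0.5, -0.3, 0.4])            # bias, stimulus filter (2), coupling
y = rng.poisson(np.exp(X @ w_true))
w_hat = fit_poisson_glm(X, y)
print("true:", w_true, " fitted:", np.round(w_hat, 2))
```

Here the stimulus and the coupling input are independent by construction. The abstract's point is that real stimuli induce correlations that a joint fit can confuse with genuine interactions.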

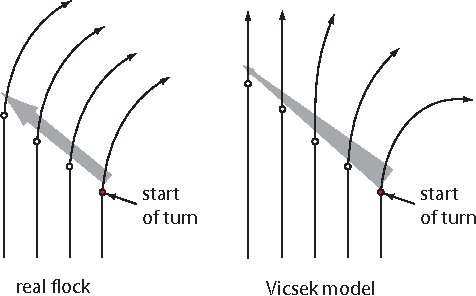

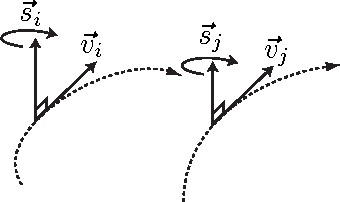

Flocking and turning: a new model for self-organized collective motion

Jan 21, 2015

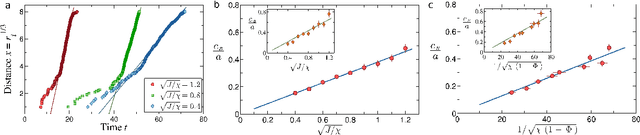

Abstract: Birds in a flock move in a correlated way, resulting in large polarization of velocities. A good understanding of this collective behavior exists for linear motion of the flock. Yet in observations of actual birds, the center of mass of the group often turns, giving rise to more complicated dynamics while the flock remains strongly polarized. Here we propose novel dynamical equations for the collective motion of polarized animal groups that account for correlated turning using solely social forces. We exploit rotational symmetries and conservation laws of the problem to formulate a theory in terms of generalized coordinates of motion for the velocity directions, akin to a Hamiltonian formulation for rotations. We explicitly derive the correspondence between this formulation and the dynamics of the individual velocities, thus obtaining a new model of collective motion. In the appropriate overdamped limit we recover the well-known Vicsek model, which dissipates rotational information and does not allow for polarized turns. Although the new model has its most vivid success in describing turning groups, its dynamics is intrinsically different from previous ones in a wide dynamical regime, while reducing to the hydrodynamic description of Toner and Tu at very large length-scales. The derived framework is therefore general and may describe the collective motion of any strongly polarized active matter system.

* Accepted for the Special Issue of the Journal of Statistical Physics: Collective Behavior in Biological Systems, 17 pages, 4 figures, 3 videos
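
The abstract describes the Vicsek model as the overdamped limit of the new equations. As a reference point, here is a minimal sketch of the standard 2D Vicsek update and of the polarization order parameter, defined as the modulus of the mean unit velocity. It is not the new model proposed in the paper. The box size, interaction radius, speed, noise amplitude, and helper names are arbitrary illustrative choices.

```python
import numpy as np

def vicsek_step(pos, theta, L, r, v0, eta, rng):
    """One update of the 2D Vicsek model in a periodic box of side L.

    Each particle adopts the mean heading of all particles within distance r
    (itself included), plus uniform angular noise in [-eta/2, eta/2], then
    moves a distance v0 along its new heading.
    """
    d = pos[:, None, :] - pos[None, :, :]
    d -= L * np.round(d / L)                      # minimum-image convention
    neighbors = (d ** 2).sum(axis=-1) < r ** 2    # (N, N) boolean adjacency
    mean_sin = (neighbors * np.sin(theta)[None, :]).sum(axis=1)
    mean_cos = (neighbors * np.cos(theta)[None, :]).sum(axis=1)
    theta = np.arctan2(mean_sin, mean_cos) + eta * rng.uniform(-0.5, 0.5, size=theta.shape)
    pos = (pos + v0 * np.column_stack([np.cos(theta), np.sin(theta)])) % L
    return pos, theta

def polarization(theta):
    """Modulus of the mean unit velocity: 1 for a perfectly aligned flock, near 0 for random headings."""
    return np.hypot(np.cos(theta).mean(), np.sin(theta).mean())

rng = np.random.default_rng(2)
N, L = 200, 5.0
pos = rng.uniform(0, L, size=(N, 2))
theta = rng.uniform(-np.pi, np.pi, size=N)
print(f"initial polarization: {polarization(theta):.2f}")
for _ in range(200):
    pos, theta = vicsek_step(pos, theta, L=L, r=1.0, v0=0.05, eta=0.3, rng=rng)
print(f"final polarization:   {polarization(theta):.2f}")
```

Raising `eta` degrades alignment, which is the order-disorder transition of the Vicsek model. Because the update only averages headings, it carries no memory of the flock's rotation, consistent with the abstract's remark that it cannot produce polarized turns.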
