Giulio Isacchini

MINIMALIST: Mutual INformatIon Maximization for Amortized Likelihood Inference from Sampled Trajectories

Jun 03, 2021

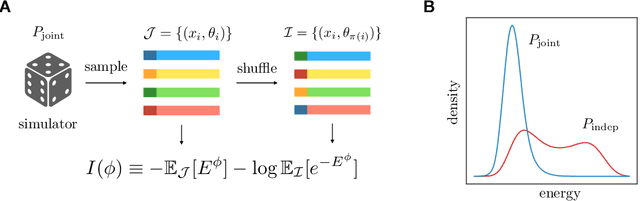

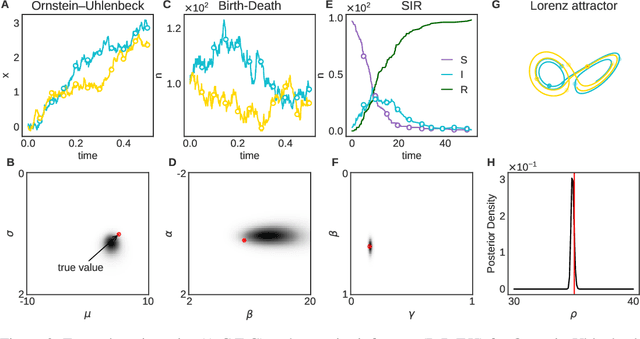

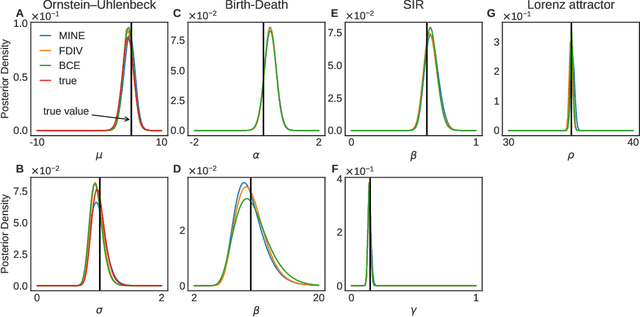

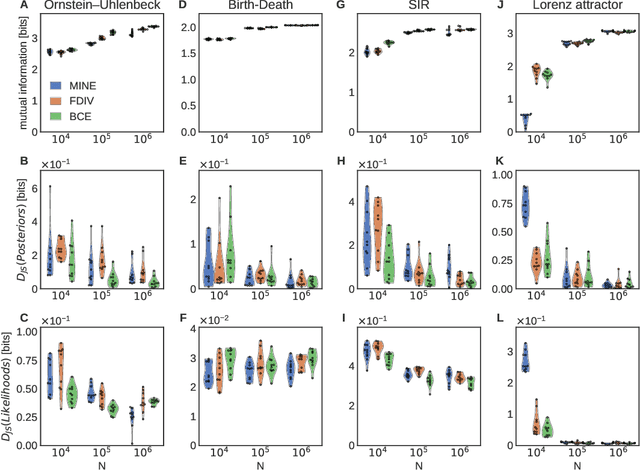

Abstract: Simulation-based inference enables learning the parameters of a model even when its likelihood cannot be computed in practice. One class of methods uses data simulated with different parameters to infer an amortized estimator for the likelihood-to-evidence ratio, or equivalently the posterior function. We show that this approach can be formulated in terms of mutual information maximization between model parameters and simulated data. We use this equivalence to reinterpret existing approaches for amortized inference, and propose two new methods that rely on lower bounds of the mutual information. We apply our framework to the inference of parameters of stochastic processes and chaotic dynamical systems from sampled trajectories, using artificial neural networks for posterior prediction. Our approach provides a unified framework that leverages the power of mutual information estimators for inference.
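
As a reader's sketch of the link stated in the abstract (the notation here is assumed, not taken from the paper): writing $\theta$ for the model parameters and $x$ for the simulated data, the mutual information is the expected log likelihood-to-evidence ratio, so a good estimator of that ratio is also a good estimator of the mutual information. The Donsker-Varadhan bound is shown only as one well-known example of a lower bound; the abstract does not say which bounds the two proposed methods use.

$$
r(x,\theta) = \frac{p(x\mid\theta)}{p(x)} = \frac{p(\theta\mid x)}{p(\theta)},
\qquad
I(\theta;x) = \mathbb{E}_{p(\theta,x)}\big[\log r(x,\theta)\big]
$$

$$
I(\theta;x) \;\ge\; \mathbb{E}_{p(\theta,x)}\big[f(x,\theta)\big] - \log \mathbb{E}_{p(\theta)\,p(x)}\big[e^{f(x,\theta)}\big]
$$

The bound holds for any function $f$ for which the expectations exist, and it is tight when $f(x,\theta) = \log r(x,\theta) + c$ for a constant $c$. Maximizing the right-hand side over a parametric $f$, such as a neural network, therefore recovers the log-ratio up to an additive constant.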

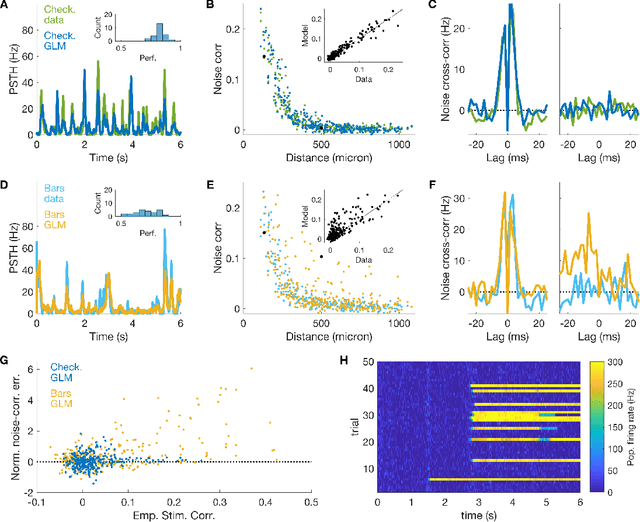

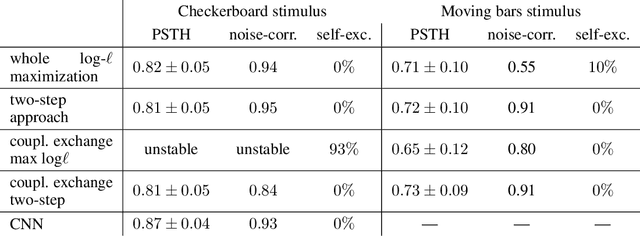

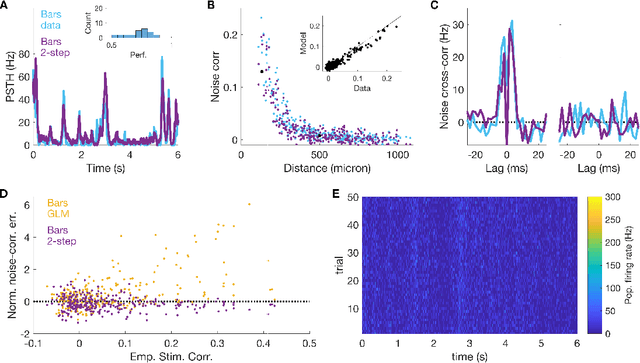

A new inference approach for training shallow and deep generalized linear models of noisy interacting neurons

Jun 16, 2020

Abstract: Generalized linear models are one of the most efficient paradigms for predicting the correlated stochastic activity of neuronal networks in response to external stimuli, with applications in many brain areas. However, when dealing with complex stimuli, their parameters often do not generalize across different stimulus statistics, leading to degraded performance and blowup instabilities. Here, we develop a two-step inference strategy that allows us to train robust generalized linear models of interacting neurons, by explicitly separating the effects of stimulus correlations and noise correlations in each training step. Applying this approach to the responses of retinal ganglion cells to complex visual stimuli, we show that, compared to classical methods, the models trained in this way exhibit improved performance, are more stable, yield robust interaction networks, and generalize well across complex visual statistics. The method can be extended to deep convolutional neural networks, leading to models with high predictive accuracy for both the neuron firing rates and their correlations.
