Leonardo Parisi

CoMo: A novel co-moving 3D camera system

Jan 26, 2021

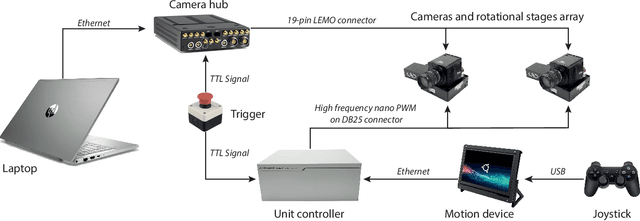

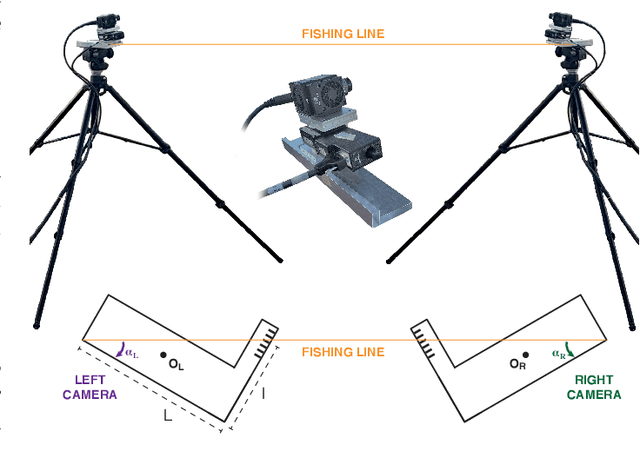

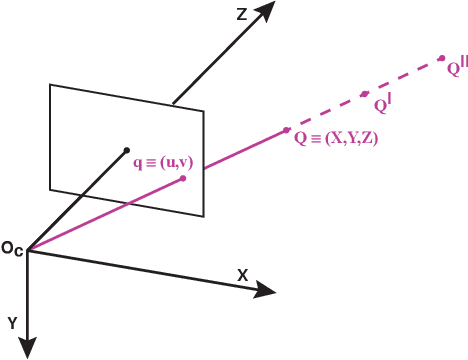

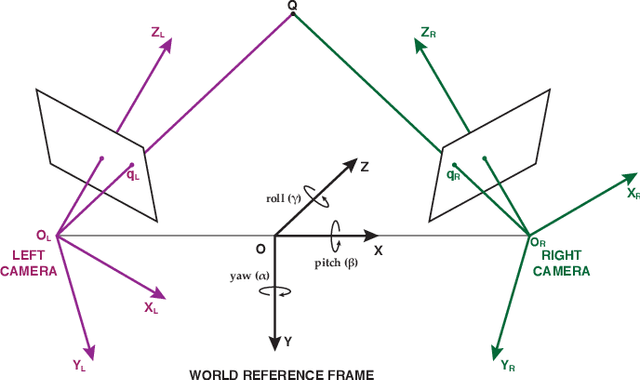

Abstract: Motivated by the theoretical interest in reconstructing long 3D trajectories of individual birds in large flocks, we developed CoMo, a co-moving camera system of two synchronized high-speed cameras coupled with rotational stages, which allow us to dynamically follow the motion of a target flock. By rotating the cameras we overcome the limitation of standard static systems, which restrict the duration of the collected data to the short interval in which targets are in the cameras' common field of view; at the same time, however, the rotation changes the external parameters of the system over time, so they have to be calibrated frame by frame. We address the calibration of the external parameters by measuring the position of the cameras and their three angles of yaw, pitch and roll in the system "home" configuration (rotational stage at an angle equal to 0°) and combining this static information with the time-dependent rotation due to the stages. We evaluate the robustness and accuracy of the system by comparing reconstructed and measured 3D distances in what we call 3D tests, which show a relative error of the order of 1%. The novelty of the work presented in this paper lies not only in the system itself, but also in the approach we use in the tests, which we show to be a very powerful tool for detecting and fixing calibration inaccuracies and which, for this reason, may be relevant for a broad audience.
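The idea of combining a static "home" orientation with a time-dependent stage rotation can be illustrated with a minimal sketch. The angle convention (Z-Y-X for yaw, pitch, roll), the assumption that the stage rotates the camera about the world z axis, and all function names below are illustrative assumptions, not details taken from the paper.

```python
import math

def rot_z(a):
    """Rotation by angle a (radians) about the z axis."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(a):
    """Rotation by angle a (radians) about the y axis."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_x(a):
    """Rotation by angle a (radians) about the x axis."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def home_rotation(yaw, pitch, roll):
    """Static camera orientation in the home configuration.

    Assumption: Z-Y-X (yaw, pitch, roll) composition order.
    """
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))

def rotation_at_frame(home, stage_angle):
    """Orientation at one frame: stage rotation applied on top of the home pose.

    Assumption: the stage turns the camera about the world z axis.
    """
    return matmul(rot_z(stage_angle), home)
```

With a stage angle of 0 the frame orientation reduces to the home orientation, so only the stage angle needs to be read out frame by frame once the home pose has been measured.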

Stereo camera system calibration: the need of two sets of parameters

Jan 14, 2021

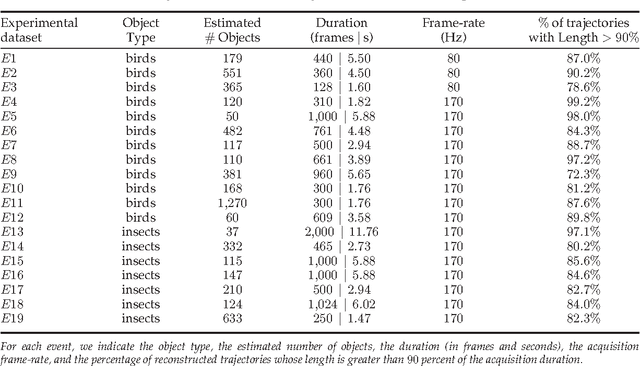

Abstract: The reconstruction of a scene via a stereo-camera system is a two-step process, where at first images from different cameras are matched to identify the set of point-to-point correspondences that will then be reconstructed in the three-dimensional real world. The performance of the system strongly relies on the calibration procedure, which has to be carefully designed to guarantee optimal results. We implemented three different calibration methods and compared their performance over 19 datasets. We present experimental evidence that, due to image noise, a single set of parameters is not sufficient to achieve high accuracy in the identification of the correspondences and in the 3D reconstruction at the same time. We propose to calibrate the system twice to estimate two different sets of parameters: one obtained by minimizing the reprojection error, to be used when dealing with quantities defined in the 2D space of the cameras, and one obtained by minimizing the reconstruction error, to be used when dealing with quantities defined in the real 3D world.
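The two error measures named in the abstract live in different spaces, which a small sketch can make concrete. The definitions below are one plausible reading, not the paper's exact formulas: the camera is an ideal pinhole at the origin with no distortion, the reconstruction error is taken as the mean mismatch between reconstructed and measured 3D distances, and all function names are hypothetical.

```python
import math

def project(point, focal_length):
    """Pinhole projection of a 3D point (camera at the origin, looking along +z)."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)

def reprojection_error(points_3d, observed_2d, focal_length):
    """Mean 2D distance (in pixels) between projected and observed image points."""
    total = 0.0
    for p, (u, v) in zip(points_3d, observed_2d):
        pu, pv = project(p, focal_length)
        total += math.hypot(pu - u, pv - v)
    return total / len(points_3d)

def reconstruction_error(reconstructed_pairs, measured_distances):
    """Mean absolute difference between reconstructed and measured 3D distances."""
    total = 0.0
    for (a, b), d in zip(reconstructed_pairs, measured_distances):
        total += abs(math.dist(a, b) - d)
    return total / len(measured_distances)
```

A calibration routine would minimize one of these two functions over the camera parameters; the abstract's claim is that, with noisy images, the two minimizers do not coincide.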

SpaRTA - Tracking across occlusions via global partitioning of 3D clouds of points

Feb 16, 2018

Abstract: Any 3D tracking algorithm has to deal with occlusions: multiple targets get so close to each other that the loss of their identities becomes likely. In the best-case scenario, trajectories are interrupted, thus curbing the completeness of the data-set; in the worst-case scenario, identity switches arise, potentially affecting in severe ways the very quality of the data. Here, we present a novel tracking method that addresses the problem of occlusions within large groups of featureless objects by means of three steps: i) it represents each target as a cloud of points in 3D; ii) once a 3D cluster corresponding to an occlusion occurs, it defines a partitioning problem by introducing a cost function that uses both attractive and repulsive spatio-temporal proximity links; iii) it minimizes the cost function through a semi-definite optimization technique specifically designed to cope with link frustration. The algorithm is independent of the specific experimental method used to collect the data. By performing tests on public data-sets, we show that the new algorithm produces a significant improvement over the state-of-the-art tracking methods, both by reducing the number of identity switches and by increasing the accuracy of the actual positions of the targets in real space.
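A toy example may help to picture a partitioning cost with attractive and repulsive links, and what link frustration means. This sketch is not the paper's method: it uses brute-force enumeration in place of the semi-definite optimization, a simple sign convention (positive weight = attractive, negative = repulsive) that is assumed here, and hypothetical function names.

```python
from itertools import product

def partition_cost(labels, links):
    """Cost of a labeling: penalize cut attractive links and uncut repulsive ones.

    links maps a pair (i, j) to a weight; w > 0 is attractive, w < 0 repulsive
    (an assumed convention for this sketch).
    """
    cost = 0.0
    for (i, j), w in links.items():
        same = labels[i] == labels[j]
        if w > 0 and not same:
            cost += w        # attractive link split across clusters
        elif w < 0 and same:
            cost += -w       # repulsive link kept inside one cluster
    return cost

def best_two_way_partition(n, links):
    """Exhaustively search all two-way partitions of n points (toy sizes only)."""
    best = None
    for tail in product((0, 1), repeat=n - 1):
        labels = (0,) + tail  # fix the first label to remove the 0/1 symmetry
        cost = partition_cost(labels, links)
        if best is None or cost < best[0]:
            best = (cost, labels)
    return best

# Four points on a loop: three attractive links and one strong repulsive link.
# No labeling satisfies every link at once, which is what frustration means.
links = {(0, 1): 1.0, (2, 3): 1.0, (1, 2): -2.0, (0, 3): 0.5}
cost, labels = best_two_way_partition(4, links)
```

Exhaustive search grows as 2^(n-1), which is why a relaxation such as the semi-definite approach mentioned in the abstract is needed for realistic cluster sizes.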

Towards a tracking algorithm based on the clustering of spatio-temporal clouds of points

Nov 04, 2015

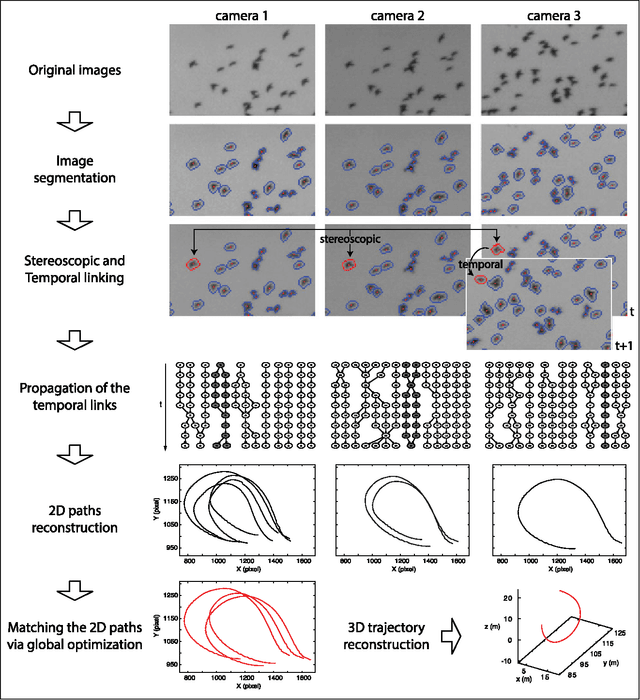

Abstract: The interest in 3D dynamical tracking is growing in fields such as robotics, biology and fluid dynamics. Recently, a major source of progress in 3D tracking has been the study of collective behaviour in biological systems, where the trajectories of individual animals moving within large and dense groups need to be reconstructed to understand the behavioural interaction rules. Experimental data in this field are generally noisy and at low spatial resolution, so that individuals appear as small featureless objects and trajectories must be retrieved by making use of epipolar information only. Moreover, optical occlusions often occur: in a multi-camera system one or more objects become indistinguishable in one view, potentially jeopardizing the conservation of identity over long-time trajectories. The most advanced 3D tracking algorithms overcome optical occlusions by making use of set-cover techniques, which however have to solve NP-hard optimization problems. Moreover, current methods are not able to cope with occlusions arising from actual physical proximity of objects in 3D space. Here, we present a new method designed to work directly in 3D space and time, creating (3D+1) clouds of points representing the full spatio-temporal evolution of the moving targets. We can then use a simple connected components labeling routine, which is linear in time, to solve optical occlusions, hence lowering the complexity of the problem from NP to P. Finally, we use normalized cut spectral clustering to tackle 3D physical proximity.
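The connected components labeling step can be sketched as a breadth-first search over a graph whose nodes are spatio-temporal points. How the links between points are built (for instance, by proximity in space across consecutive frames) is not specified in the abstract, so the edge list is taken as given here, and the function name is hypothetical.

```python
from collections import deque

def connected_components(n_points, edges):
    """Label each point with the index of its connected component.

    Runs in time linear in the number of points plus the number of links.
    """
    adjacency = [[] for _ in range(n_points)]
    for i, j in edges:
        adjacency[i].append(j)
        adjacency[j].append(i)

    labels = [-1] * n_points  # -1 marks a point not yet visited
    current = 0
    for start in range(n_points):
        if labels[start] != -1:
            continue
        labels[start] = current
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adjacency[u]:
                if labels[v] == -1:
                    labels[v] = current
                    queue.append(v)
        current += 1
    return labels
```

For example, `connected_components(5, [(0, 1), (1, 2), (3, 4)])` separates the five points into two clouds, one per group of linked points.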

GReTA - a novel Global and Recursive Tracking Algorithm in three dimensions

Apr 17, 2015

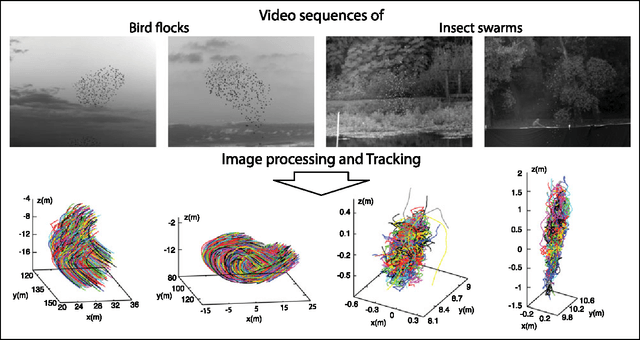

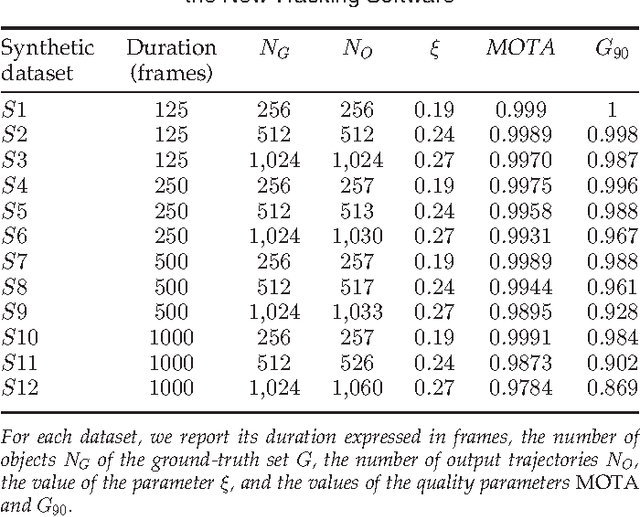

Abstract: Tracking multiple moving targets allows quantitative measurement of the dynamic behavior in systems as diverse as animal groups in biology, turbulence in fluid dynamics and crowd and traffic control. In three dimensions, tracking several targets becomes increasingly hard since optical occlusions are very likely, i.e. two featureless targets frequently overlap for several frames. Occlusions are particularly frequent in biological groups such as bird flocks, fish schools, and insect swarms, a fact that has severely limited collective animal behavior field studies in the past. This paper presents a 3D tracking method that is robust in the case of severe occlusions. To ensure robustness, we adopt a global optimization approach that works on all objects and frames at once. To achieve practicality and scalability, we employ a divide and conquer formulation, thanks to which the computational complexity of the problem is reduced by orders of magnitude. We tested our algorithm with synthetic data, with experimental data of bird flocks and insect swarms and with public benchmark datasets, and show that our system yields high quality trajectories for hundreds of moving targets with severe overlap. The results obtained on very heterogeneous data show the potential applicability of our method to the most diverse experimental situations.

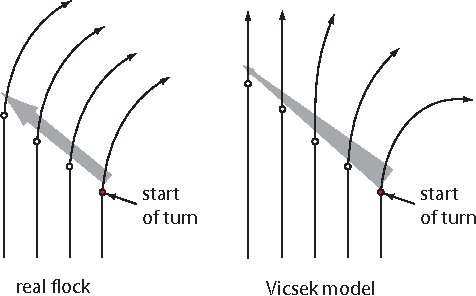

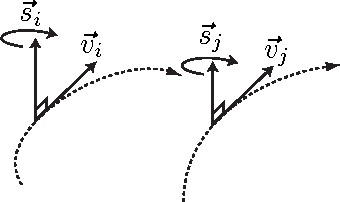

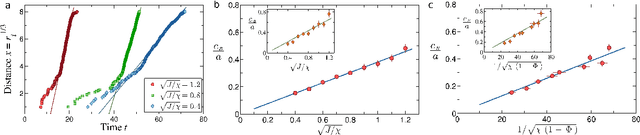

Flocking and turning: a new model for self-organized collective motion

Jan 21, 2015

Abstract: Birds in a flock move in a correlated way, resulting in large polarization of velocities. A good understanding of this collective behavior exists for linear motion of the flock. Yet in actual flocks the center of mass of the group often turns, giving rise to more complicated dynamics while still keeping strong polarization of the flock. Here we propose novel dynamical equations for the collective motion of polarized animal groups that account for correlated turning including solely social forces. We exploit rotational symmetries and conservation laws of the problem to formulate a theory in terms of generalized coordinates of motion for the velocity directions akin to a Hamiltonian formulation for rotations. We explicitly derive the correspondence between this formulation and the dynamics of the individual velocities, thus obtaining a new model of collective motion. In the appropriate overdamped limit we recover the well-known Vicsek model, which dissipates rotational information and does not allow for polarized turns. Although the new model has its most vivid success in describing turning groups, its dynamics is intrinsically different from previous ones in a wide dynamical regime, while reducing to the hydrodynamic description of Toner and Tu at very large length-scales. The derived framework is therefore general and it may describe the collective motion of any strongly polarized active matter system.

* Accepted for the Special Issue of the Journal of Statistical Physics: Collective Behavior in Biological Systems, 17 pages, 4 figures, 3 videos
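For readers unfamiliar with the Vicsek model that the abstract above recovers in the overdamped limit, a minimal 2D sketch of one update step follows. It shows the standard alignment rule only, not the new model proposed in the paper; the open (non-periodic) space, the uniform angular noise, and the function names are assumptions made for brevity.

```python
import math
import random

def vicsek_step(positions, angles, radius, speed, noise, rng=random):
    """One Vicsek update: align with neighbors within `radius`, add noise, move.

    positions: list of (x, y); angles: list of headings in radians.
    noise is the width of the uniform angular perturbation (0 for none).
    """
    new_angles = []
    for xi, yi in positions:
        sx = sy = 0.0
        for (xj, yj), aj in zip(positions, angles):
            if (xi - xj) ** 2 + (yi - yj) ** 2 <= radius ** 2:
                sx += math.cos(aj)   # sum neighbor headings as unit vectors
                sy += math.sin(aj)
        mean_heading = math.atan2(sy, sx)
        new_angles.append(mean_heading + noise * (rng.random() - 0.5))
    new_positions = [(x + speed * math.cos(a), y + speed * math.sin(a))
                     for (x, y), a in zip(positions, new_angles)]
    return new_positions, new_angles

def polarization(angles):
    """Norm of the mean heading vector: 1 for perfect alignment, near 0 for disorder."""
    n = len(angles)
    return math.hypot(sum(math.cos(a) for a in angles) / n,
                      sum(math.sin(a) for a in angles) / n)
```

Because each heading is overwritten by the local average at every step, the rule keeps no memory of how headings were changing, which is consistent with the abstract's remark that the model dissipates rotational information.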
