Fabio Pellacini

Structured Pattern Expansion with Diffusion Models

Nov 12, 2024

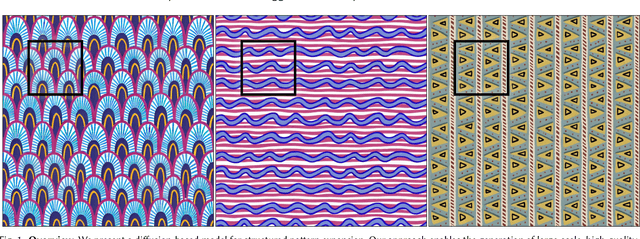

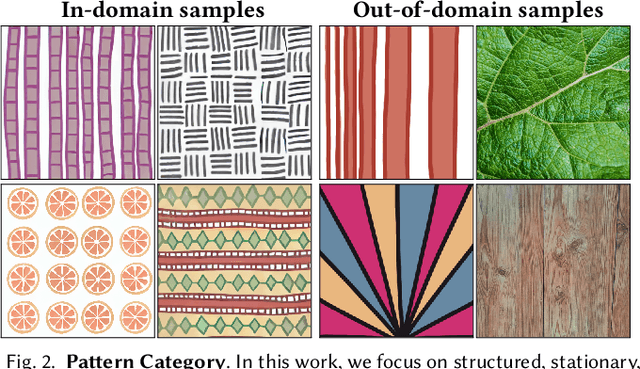

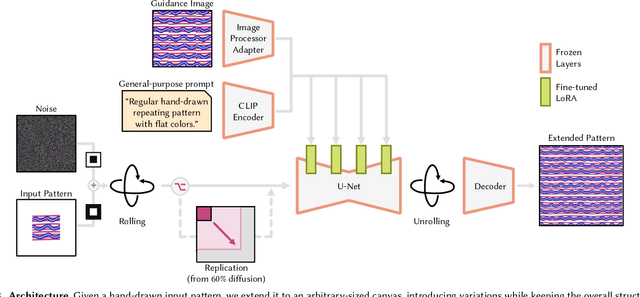

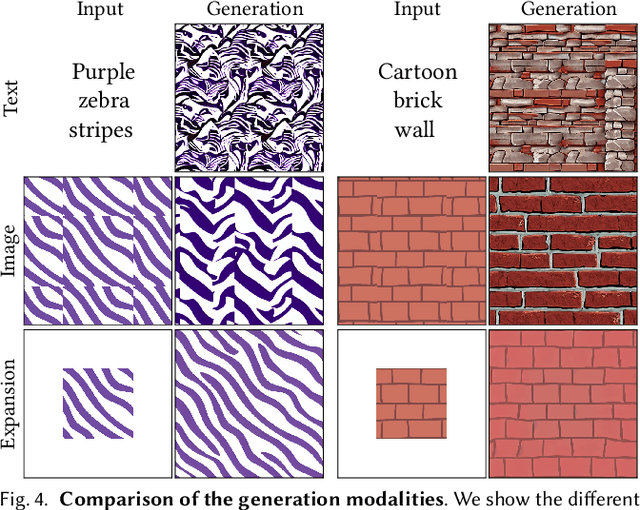

Abstract: Recent advances in diffusion models have significantly improved the synthesis of materials, textures, and 3D shapes. By conditioning these models via text or images, users can guide the generation, reducing the time required to create digital assets. In this paper, we address the synthesis of structured, stationary patterns, where diffusion models are generally less reliable and, more importantly, less controllable. Our approach leverages the generative capabilities of diffusion models specifically adapted for the pattern domain. It enables users to exercise direct control over the synthesis by expanding a partially hand-drawn pattern into a larger design while preserving the structure and details of the input. To enhance pattern quality, we fine-tune an image-pretrained diffusion model on structured patterns using Low-Rank Adaptation (LoRA), apply a noise rolling technique to ensure tileability, and utilize a patch-based approach to facilitate the generation of large-scale assets. We demonstrate the effectiveness of our method through a comprehensive set of experiments, showing that it outperforms existing models in generating diverse, consistent patterns that respond directly to user input.
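The abstract names noise rolling but does not spell out its details. As a rough illustration of the general idea only (not the authors' implementation), the sketch below circularly shifts a latent by a random offset before each denoising step and shifts it back afterwards, so that the image borders are regularly moved into the interior where the model can blend them. The function names, the `(H, W, C)` array layout, and the `denoise_step` callback are all assumptions made for this example.

```python
import numpy as np

def roll_latent(latent, shift):
    """Circularly shift a (H, W, C) array by (dy, dx) pixels."""
    dy, dx = shift
    return np.roll(latent, shift=(dy, dx), axis=(0, 1))

def unroll_latent(latent, shift):
    """Undo roll_latent by shifting in the opposite direction."""
    dy, dx = shift
    return np.roll(latent, shift=(-dy, -dx), axis=(0, 1))

def denoise_with_rolling(latent, denoise_step, num_steps, rng):
    """Run num_steps denoising steps, rolling the latent around each one.

    denoise_step is a hypothetical callback (latent, t) -> latent that
    stands in for one step of a real diffusion sampler.
    """
    h, w = latent.shape[:2]
    for t in reversed(range(num_steps)):
        # Pick a fresh random offset so the seam lands somewhere new each step.
        shift = (int(rng.integers(0, h)), int(rng.integers(0, w)))
        rolled = roll_latent(latent, shift)
        rolled = denoise_step(rolled, t)
        latent = unroll_latent(rolled, shift)
    return latent
```

With an identity `denoise_step`, the routine returns its input unchanged, which is a quick way to check that rolling and unrolling cancel out.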

Environment Maps Editing using Inverse Rendering and Adversarial Implicit Functions

Oct 24, 2024

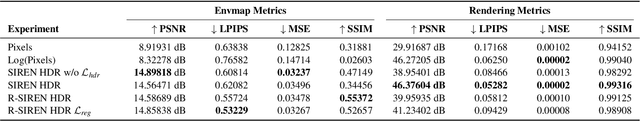

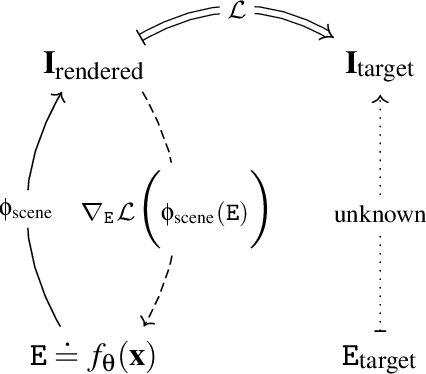

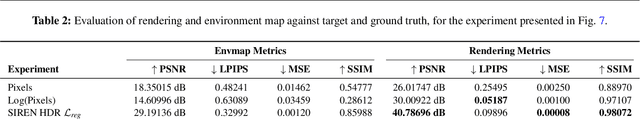

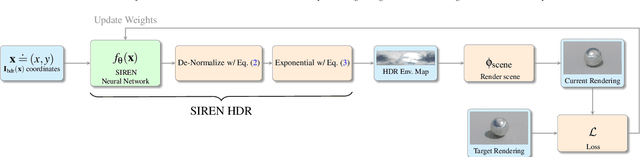

Abstract: Editing High Dynamic Range (HDR) environment maps using an inverse differentiable rendering architecture is a complex inverse problem due to the sparsity of relevant pixels and the challenges in balancing light sources and background. The pixels illuminating the objects are a small fraction of the total image, leading to noise and convergence issues when the optimization directly involves pixel values. HDR images, with pixel values beyond the typical Standard Dynamic Range (SDR), pose additional challenges. Higher learning rates corrupt the background during optimization, while lower learning rates fail to manipulate light sources. Our work introduces a novel method for editing HDR environment maps using differentiable rendering, addressing sparsity and variance between values. Instead of introducing strong priors that extract the relevant HDR pixels and separate the light sources, or using tricks such as optimizing the HDR image in the log space, we propose to model the optimized environment map with a new variant of implicit neural representations able to handle HDR images. The neural representation is trained with adversarial perturbations over the weights to ensure smooth changes in the output when it receives gradients from the inverse rendering. In this way, we obtain novel and cheap environment maps without relying on latent spaces of expensive generative models, maintaining the original visual consistency. Experimental results demonstrate the method's effectiveness in reconstructing the desired lighting effects while preserving the fidelity of the map and reflections on objects in the scene. Our approach can pave the way to interesting tasks, such as estimating a new environment map given a rendering with novel light sources, maintaining the initial perceptual features, and enabling brush stroke-based editing of existing environment maps.

Texture Retrieval in the Wild through detection-based attributes

Oct 04, 2019

Abstract: Capturing the essence of a textile image in a robust way is important to retrieve it in a large repository, especially if it has been acquired in the wild (by taking a photo of the textile of interest). In this paper we show that a texel-based representation fits well with this task. In particular, we refer to Texel-Att, a recent texel-based descriptor which has been shown to capture fine-grained variations of a texture, for retrieval purposes. After a brief explanation of Texel-Att, we will show in our experiments that this descriptor is robust to distortions resulting from acquisitions in the wild by setting up an experiment in which textures from ElBa (an Element-Based texture dataset) are artificially distorted and then used to retrieve the original image. We compare our approach with existing descriptors using a simple ranking framework based on distance functions. Results show that even under extreme conditions (such as a down-sampling with a factor of 10), we perform better than alternative approaches.
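The abstract does not say which distance functions or descriptor layout the ranking framework uses. As a minimal sketch under assumptions, the example below ranks a gallery of descriptor vectors by Euclidean distance to the descriptor of a distorted query and reports the position at which the original image is retrieved. The function names and the toy two-dimensional descriptors are hypothetical and are not taken from Texel-Att.

```python
import numpy as np

def rank_by_distance(query, gallery):
    """Return gallery indices sorted from nearest to farthest from the query.

    query:   (D,) descriptor of the distorted image.
    gallery: (N, D) descriptors of the repository images.
    """
    dists = np.linalg.norm(gallery - query, axis=1)  # Euclidean distance per row
    return np.argsort(dists, kind="stable")

def rank_of_target(query, gallery, target_index):
    """1-based position of the original image in the ranked list."""
    order = rank_by_distance(query, gallery)
    return int(np.where(order == target_index)[0][0]) + 1

# Toy descriptors: the query is a slightly perturbed copy of gallery item 1.
gallery = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
query = np.array([0.9, 1.2])
ranking = rank_by_distance(query, gallery)
```

A descriptor that is robust to distortion keeps the rank of the original image close to 1 as the distortion grows; swapping in another distance only requires changing the line that computes `dists`.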

Texel-Att: Representing and Classifying Element-based Textures by Attributes

Aug 30, 2019

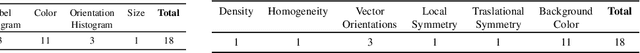

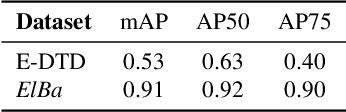

Abstract: Element-based textures are a kind of texture formed by nameable elements, the texels [1], distributed according to specific statistical distributions; they are of primary importance in many sectors, namely the textile, fashion and interior design industries. State-of-the-art texture descriptors fail to properly characterize element-based textures, so we present Texel-Att to fill this gap. Texel-Att is the first fine-grained, attribute-based representation and classification framework for element-based textures. It first identifies texels, characterizing them with individual attributes; subsequently, texels are grouped and characterized through layout attributes, which give the Texel-Att representation. Texels are detected by a Mask-RCNN, trained on a brand-new element-based texture dataset, ElBa, containing 30K texture images with 3M fully-annotated texels. Examples of individual and layout attributes are exhibited to give a glimpse of the level of achievable graininess. In the experiments, we present detection results to show that texels can be precisely identified, even on textures "in the wild"; to this end, we identify the element-based classes of the Describable Texture Dataset (DTD), where almost 900K texels have been manually annotated, leading to the Element-based DTD (E-DTD). Subsequently, classification and ranking results demonstrate the expressivity of Texel-Att on ElBa and E-DTD, overcoming the alternative features and relative attributes, doubling the best performance in some cases; finally, we report interactive search results on ElBa and E-DTD: with Texel-Att on the E-DTD dataset we are able to identify the desired texture within 10 iterations in 90% of cases, against the 71% obtained with a combination of the finest existing attributes so far. Dataset and code are available at https://github.com/godimarcovr/Texel-Att

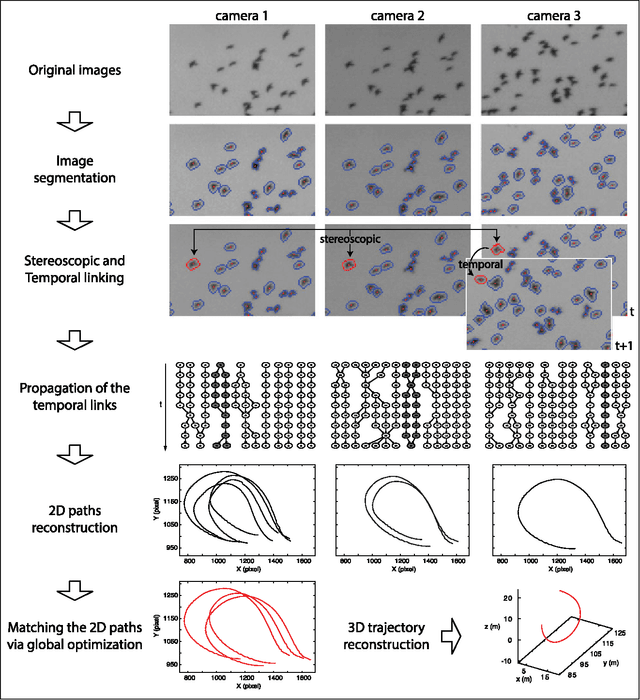

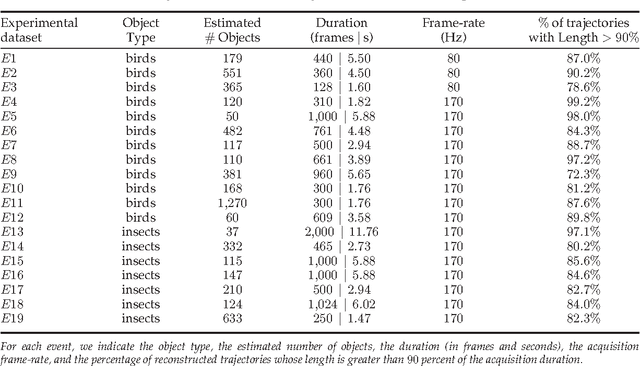

GReTA - a novel Global and Recursive Tracking Algorithm in three dimensions

Apr 17, 2015

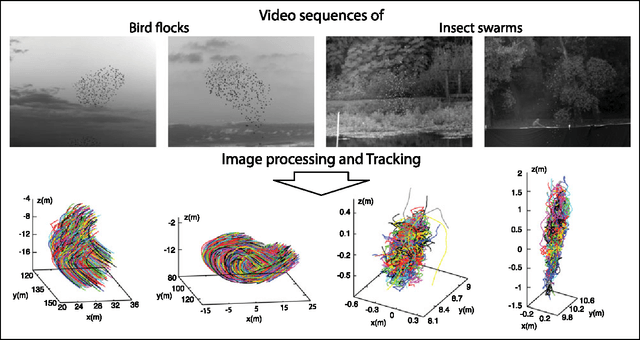

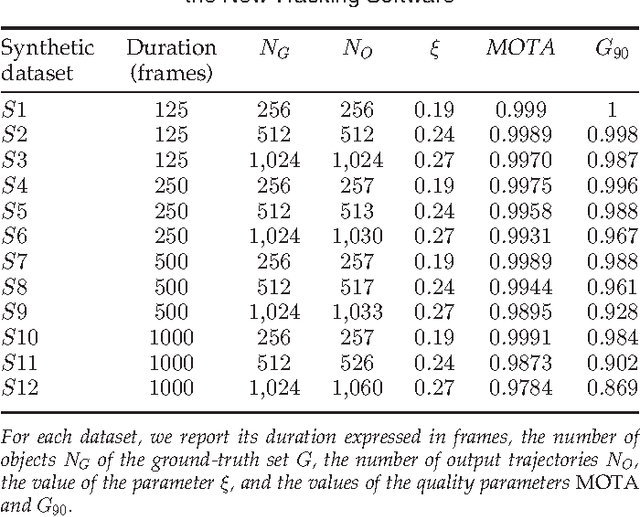

Abstract: Tracking multiple moving targets allows quantitative measurement of the dynamic behavior in systems as diverse as animal groups in biology, turbulence in fluid dynamics and crowd and traffic control. In three dimensions, tracking several targets becomes increasingly hard since optical occlusions are very likely, i.e. two featureless targets frequently overlap for several frames. Occlusions are particularly frequent in biological groups such as bird flocks, fish schools, and insect swarms, a fact that has severely limited collective animal behavior field studies in the past. This paper presents a 3D tracking method that is robust in the case of severe occlusions. To ensure robustness, we adopt a global optimization approach that works on all objects and frames at once. To achieve practicality and scalability, we employ a divide-and-conquer formulation, thanks to which the computational complexity of the problem is reduced by orders of magnitude. We tested our algorithm with synthetic data, with experimental data of bird flocks and insect swarms and with public benchmark datasets, and show that our system yields high-quality trajectories for hundreds of moving targets with severe overlap. The results obtained on very heterogeneous data show the potential applicability of our method to the most diverse experimental situations.
