Tessa van der Heiden

Reliably Re-Acting to Partner's Actions with the Social Intrinsic Motivation of Transfer Empowerment

Mar 07, 2022

Abstract: We consider multi-agent reinforcement learning (MARL) for cooperative communication and coordination tasks. MARL agents can be brittle because they can overfit their training partners' policies. This overfitting can produce agents that adopt policies that act under the expectation that other agents will act in a certain way rather than react to their actions. Our objective is to bias the learning process towards finding reactive strategies towards other agents' behaviors. Our method, transfer empowerment, measures the potential influence between agents' actions. Results from three simulated cooperation scenarios support our hypothesis that transfer empowerment improves MARL performance. We discuss how transfer empowerment could be a useful principle to guide multi-agent coordination by ensuring reactiveness to one's partner.

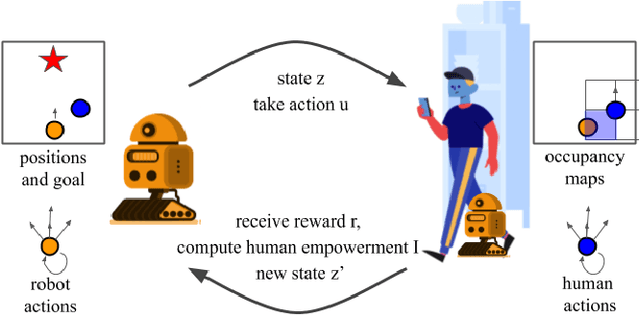

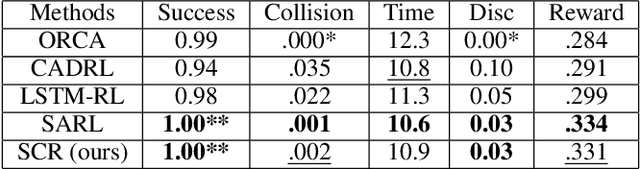

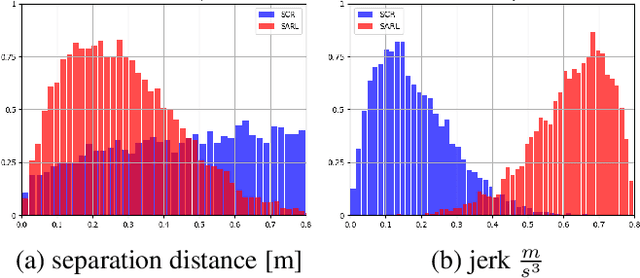

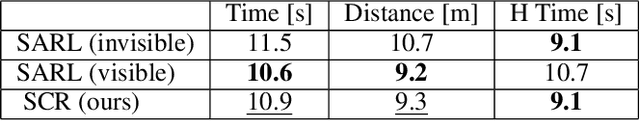

Social navigation with human empowerment driven reinforcement learning

Mar 21, 2020

Abstract: The next generation of mobile robots needs to be socially compliant to be accepted by humans. As simple as this task may seem, defining compliance formally is not trivial. Yet, classical reinforcement learning (RL) relies upon hard-coded reward signals. In this work, we go beyond this approach and provide the agent with intrinsic motivation using empowerment. Empowerment maximizes the influence of an agent on its near future and has been shown to be a good model for biological behaviors. It has also been used for artificial agents to learn complicated and generalized actions. Self-empowerment maximizes the influence of an agent on its future. In contrast, our robot strives for the empowerment of people in its environment, so they are not disturbed by the robot when pursuing their goals. We show that our robot has a positive influence on humans, as it minimizes the travel time and distance of humans while moving efficiently to its own goal. The method can be used in any multi-agent system that requires a robot to solve a particular task involving human interactions.

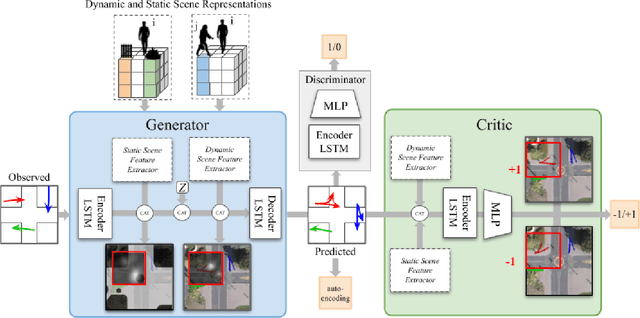

SafeCritic: Collision-Aware Trajectory Prediction

Oct 15, 2019

Abstract: Navigating complex urban environments safely is key to realizing fully autonomous systems. Predicting future locations of vulnerable road users, such as pedestrians and cyclists, has thus received a lot of attention in recent years. While previous works have addressed modeling interactions with the static (obstacles) and dynamic (humans) environment agents, we address an important gap in trajectory prediction. We propose SafeCritic, a model that synergizes generative adversarial networks for generating multiple "real" trajectories with reinforcement learning to generate "safe" trajectories. The Discriminator evaluates the generated candidates on whether they are consistent with the observed inputs. The Critic network is environmentally aware and prunes trajectories that are in collision or in violation of the environment. The auto-encoding loss stabilizes training and prevents mode collapse. We demonstrate results on two large-scale datasets with a considerable improvement over the state of the art. We also show that the Critic is able to classify the safety of trajectories.
